By now, you’ve probably already dabbled with Stable Diffusion or Midjourney to create some AI-generated pictures, or used ChatGPT to see what all the fuss has been about. But spend a little time on social media and you’ll see a popular trend that involves neither still images nor text responses.

The current hot thing in the world of machine learning is short movies, so let’s jump into something called Runway Gen-2 and see how this most recent version can be used to make weird and wonderful videos, and more besides.

How to get started

Runway is a young company, formed just three years ago by Cristobal Valenzuela, Anastasis Germanidis, and Alejandro Matamala, while working at New York University. The group makes AI tools for media/content creation and manipulation, with Gen-2 being one of its newest and most interesting programs.

Where Gen-1 required a source video to extrapolate a new movie clip from it, Gen-2 does it entirely from a text prompt or a single still image.

To use it, head to the main website or download the app (iOS only), and create a free account. With this, you’ll have quite a few restrictions on what you can do. For example, you’ll start with 125 credits and for every second of Gen-2 video you create, you’ll use up 5 of them. You can’t add more credits with a Free account, but the starting allowance is more than enough to get an idea as to whether you want to pay for a subscription.
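To put those numbers in perspective, the credit arithmetic can be sketched in a few lines of Python. The constants are the figures quoted above, the 4-second clip length is Gen-2's standard clip duration, and the function name is purely illustrative rather than part of any Runway tool.

```python
# Figures quoted in this article for a Free account; they may change over time.
STARTING_CREDITS = 125
CREDITS_PER_SECOND = 5
CLIP_SECONDS = 4  # assumed standard length of one Gen-2 clip


def video_budget(credits: int) -> tuple[int, int]:
    """Return (seconds of video, whole clips) that the credits can pay for."""
    seconds = credits // CREDITS_PER_SECOND
    clips = seconds // CLIP_SECONDS
    return seconds, clips


seconds, clips = video_budget(STARTING_CREDITS)
print(f"{seconds} seconds of video, or {clips} full clips")
```

In other words, a Free account stretches to roughly half a dozen standard clips, so it pays to be selective about what you generate.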

Once you have your account, you’ll be taken to the Home page – the layout is very self-explanatory, with clearly indicated tutorials, a variety of tools, and the two main showcase features: text-to-video and image-to-video.

The first does for videos what Midjourney does for still images – it turns a string of words, called a prompt, into a 4-second video clip. And just as with picture generators, the more descriptive you are, the better the end result will be.

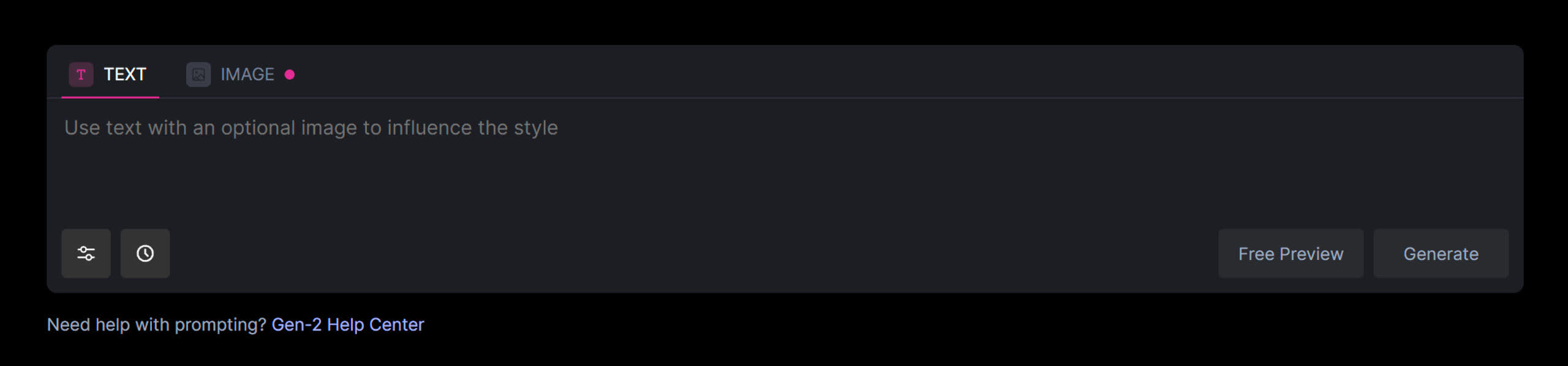

You can even include a reference image to help the neural network focus on exactly what you’re looking for, and before you even commit to creating a full video, you’ll be shown several preview still images – simply click on the one you like and the system will start generating it.

Your first steps with RunwayML

Like the tradition of getting a computer to say ‘Hello World!’ in coding, we’re starting off with the old AI favorite of ‘an astronaut riding a horse’. It’s a very short prompt and not very informative, so we shouldn’t expect to see anything spectacular.

To enter this, click on the Text to Video image at the top of the Home page, and your browser will then forward you to a text field. The prompt field has a limit of 320 characters, so you need to avoid being too verbose with the descriptions.

The furthest button on the bottom left provides generation options, depending on what type of account you have – the ability to upscale the video and remove the watermark aren’t available to Free users. However, you can toggle the use of interpolation, which smooths out transitions between frames, and you can alter the generation seed.

This particular value controls what starting values the neural network uses to generate the image. If you use the same prompt, seed, and other settings every time you run Gen-2 (or any AI image generator, for that matter), you’ll always get the same end result. This means even if you don’t think you can achieve anything better by using a different prompt, you’ve still got countless more variants to explore, simply by using a different seed.
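The effect of a seed is easy to demonstrate with any pseudo-random number generator. The sketch below uses Python's standard `random` module as a stand-in for the starting values a generator draws; it is not Runway's code, and `starting_noise` is a hypothetical helper made up for illustration.

```python
import random


def starting_noise(seed: int, size: int = 4) -> list[float]:
    """Toy stand-in for the starting values a generator derives from a seed."""
    rng = random.Random(seed)  # independent generator, fully determined by the seed
    return [rng.random() for _ in range(size)]


same_a = starting_noise(1234)
same_b = starting_noise(1234)
different = starting_noise(1235)

print(same_a == same_b)     # True: same seed, same starting values
print(same_a == different)  # False: a seed that differs by one gives new values
```

The same principle is why changing only the seed, with the prompt left untouched, sends the generator down a different path.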

The Free Preview button will force Gen-2 to create the first frame of four iterations of the prompt. Using this command gave us the following shots:

Granted, none of them look much like an astronaut, but the bottom left-hand image was quite cool and seemed like it had the best potential to be animated, so we selected that one for the video generation. With all accounts, the request joins a queue of requests for Runway’s servers to process, but the more expensive the subscription, the shorter the wait.

Although it was generally less than a few minutes for us, it could sometimes take as long as 15 minutes. Depending on where you live and what time of the day you send the request in, it could take even longer. But what’s the end result like? Considering how poor the prompt was, the generated video clip wasn’t too bad.

You can see that the actual animation involves little more than moving the position of the camera, which is a fairly common trait in how RunwayML creates video clips – the horse and astronaut barely move at all, but that’s partly down to the prompt we used.

After each generation, you’ll be given the option to rate the result – this is part of the machine learning feedback system, helping to improve the network for future use. And provided you have enough credits, you can always rerun the content creation, tweaking the prompt if necessary, to get a better output.

All content gets saved in your Assets folder, but you can also download anything you make. The standard video format that RunwayML uses is MP4 and a four-second, 24 fps clip comes in at around 1.4 MB in size, with the bitrate being a fraction under 2.8 Mbps. You can extend a clip by an additional 4 seconds but this will use up 20 credits, so Free account users should avoid doing this as much as possible.
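Those figures hang together, as a quick check shows. The sketch below assumes decimal megabytes and megabits, takes the 5 credits per second rate quoted earlier for a Free account, and uses function names invented for this example.

```python
def bitrate_mbps(size_megabytes: float, duration_seconds: float) -> float:
    """Average bitrate in megabits per second (1 byte = 8 bits)."""
    return size_megabytes * 8 / duration_seconds


def extension_cost(extra_seconds: int, credits_per_second: int = 5) -> int:
    """Credits needed to add extra_seconds of video at the quoted rate."""
    return extra_seconds * credits_per_second


print(f"{bitrate_mbps(1.4, 4):.1f} Mbps")  # 2.8 Mbps for a 1.4 MB, 4-second clip
print(extension_cost(4), "credits")        # 20 credits for a 4-second extension
```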

Getting better results in Gen-2

Our first attempt didn’t look much like an astronaut, so we tried a more detailed prompt – one that directs the neural network to focus on specific aspects that we want visible in the video: ‘A highly realistic video of an astronaut, wearing a full spacesuit with helmet and oxygen pack, riding a galloping horse. The ground is covered in lush grass and there are mountains and forests in the background. The sun is low on the horizon.’

As you can see, the result is a touch better, but still not perfect – the rider doesn’t look exactly like an astronaut and the horse’s legs appear to come from another universe. So we used the same prompt again, but this time included an image of an astronaut riding a horse (one we made using Stable Diffusion) to see how that would help.

There’s an image icon on the side of the prompt field and clicking this will let you add a picture; or you can use the Image tab, just to the right of the Text tab. Note that if you use the separate Image to Video system, then neither a prompt nor a preview is required.

The result this time contains a far better astronaut but was considerably worse in every other way – shame about the horse!

Why were our results so poor?

Well, first of all, when was the last time you saw an astronaut, wearing a full spacesuit, riding a horse? Telling RunwayML to create something realistic somewhat relies on the neural network being trained on sufficient material that accurately covers what you’re looking for.

And then there’s the prompt itself – remember that you’re effectively trying to make a very short film, so it’s important to include phrases that are relevant to cinematography. So adding “in the style of anime” will alter the look significantly, whereas commands such as “use sharp focus” or “strong depth of field” will produce a subtle but useful alteration.

Gen-2 puts more weighting on the prompt than any image provided, but the latter works best when the picture itself is photorealistic, rather than being a painting or a cartoon. We dispensed with the image of the astronaut and just spent time tweaking the description to achieve this magnificence of cinema.

Prompt used: an astronaut in a spacesuit and full helmet on a white horse, riding away from the camera, galloping through a modern city, rich and cinematic style, realistic model, subject always in focus, strong depth of field, slow pan of camera, wide angle lens, vivid colors
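That prompt follows a simple pattern: a subject first, then a comma-separated list of action, setting, and cinematography modifiers. A minimal sketch of assembling prompts this way, while checking the 320-character limit mentioned earlier, might look like the following. `build_prompt` is a hypothetical helper, not a Runway function; the resulting text would still be pasted into the prompt field by hand.

```python
MAX_PROMPT_CHARS = 320  # prompt field limit quoted in this article


def build_prompt(subject: str, modifiers: list[str]) -> str:
    """Join a subject and style modifiers into one comma-separated prompt."""
    # Skip empty modifiers and trim stray whitespace before joining.
    parts = [subject.strip()] + [m.strip() for m in modifiers if m.strip()]
    prompt = ", ".join(parts)
    if len(prompt) > MAX_PROMPT_CHARS:
        raise ValueError(
            f"Prompt is {len(prompt)} characters; the limit is {MAX_PROMPT_CHARS}"
        )
    return prompt


prompt = build_prompt(
    "an astronaut in a spacesuit and full helmet on a white horse",
    [
        "riding away from the camera",
        "galloping through a modern city",
        "rich and cinematic style",
        "realistic model",
        "subject always in focus",
        "strong depth of field",
        "slow pan of camera",
        "wide angle lens",
        "vivid colors",
    ],
)
print(len(prompt), "characters")
print(prompt)
```

Keeping the modifiers in a list makes it easy to swap one out, run a free preview, and compare the results before spending any credits.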

It’s worth remembering that previews are free and won’t eat into your credits, so make good use of this feature to work through changes to your prompts, fine-tuning the generation process. Also, don’t forget about the generation seed value – adjusting this by just one digit could give you a significantly better outcome.

As you can only use a single image with the prompt, you may need to try experimenting with a variety of shots to get the look you’re after. RunwayML has its own text-to-image generator (one image costs 5 credits), but it doesn’t seem as powerful or feature-rich as Stable Diffusion or Midjourney.

Examples of what can be achieved

Just underneath the text-to-video interface is a collection of clips that Runway offers as examples for inspiration – some just use a prompt, whereas others include a specific image. It’s worth noting that unless the seed and every other setting are identical, the results will differ each time you run a prompt through the system, so you’re unlikely to get the same output when you try them.

The secret life of cells

Prompt: Cells dividing viewed through a microscope, cinematic, microscopic, high detail, magnified, good exposure, subject in focus, dynamic movement, mesmerizing

Lost on a tropical island

Prompt: Aerial drone shot of a tropical beach in the style of cinematic video, shallow depth of field, subject in focus, dynamic movement

Future cityscape

Prompt: a futuristic looking cityscape with an environmentally conscious design, lush greenery, abundant trees, technology intersecting with nature in the year 2300 in the style of cinematic, cinematography, shallow depth of field, subject in focus, beautiful, filmic

Rolling thunder in the deep Midwest

Prompt: A thunderstorm in the American midwest in the style of cinematography, beautiful composition, dynamic movement, shallow depth of field, subject in focus, filmic, 8k

The strongest staff/avenger

This is an example of Runway’s image-to-video generator. No text prompt is used, just a single image source.

Now, you may have already seen Runway Gen-2 clips on social media or other streaming sites and may be thinking that they’re of much higher quality than those shown above. It’s worth noting that such creations typically involve multiple compositions, edited together, and further improved via video software.

While this may seem like an unfair comparison, the following example (using multiple AI tools) shows just what can be achieved with time, determination, and no small degree of talent.

Impressive and somewhat unsettling in equal measure, the scope of AI video generation is only just being realized. Considering that Runway has been in operation for just a handful of years, predicting how things will look once a decade has passed, for example, would be like trying to guess how the latest games look and play based on a few rounds of Pac-Man.

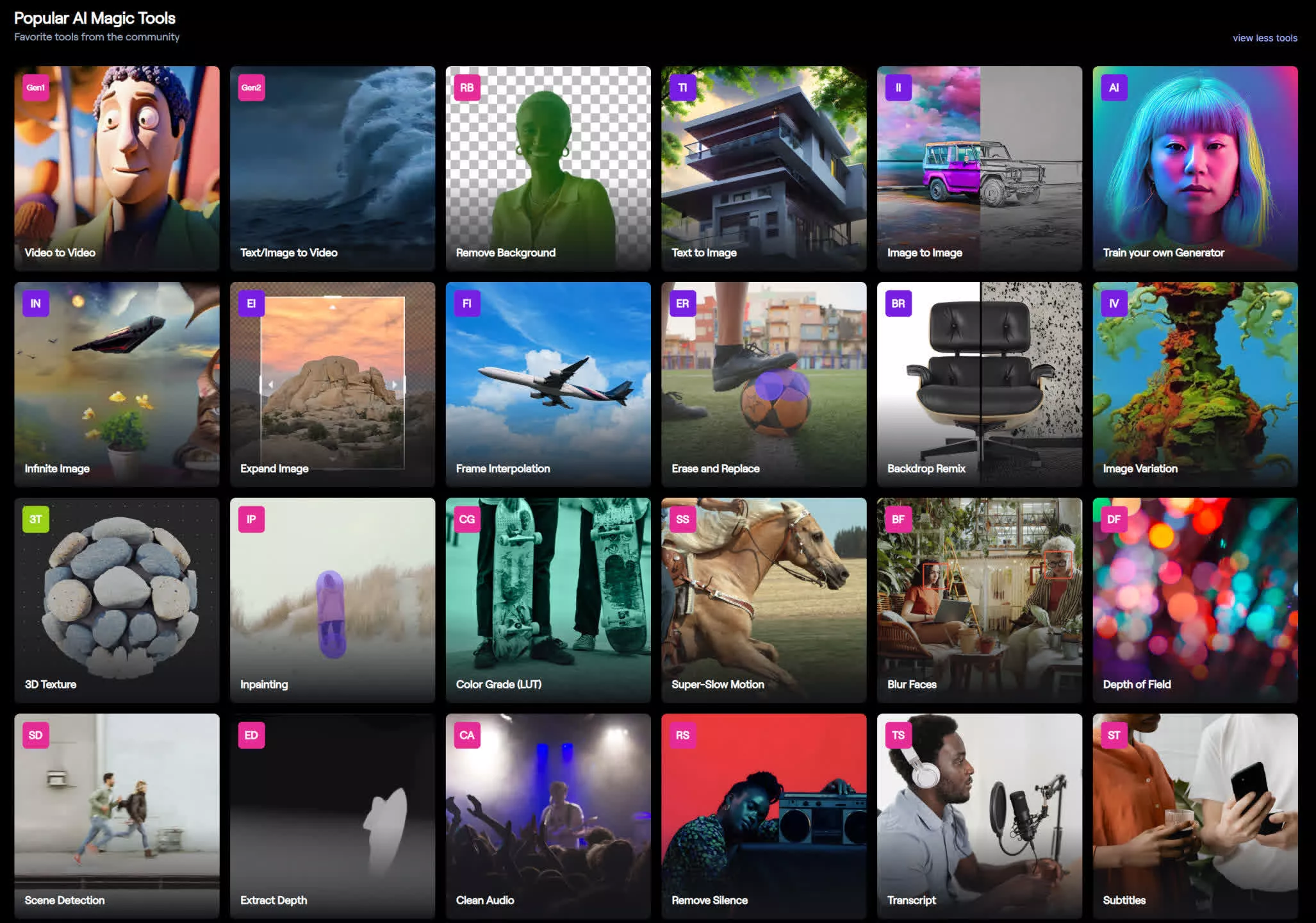

Getting more from Runway’s AI tools

If you’re a keen animator or have plenty of experience in editing videos, then you’ll be pleased to see that Runway offers a host of AI tools for manipulating and expanding the basic content the neural network generates. These can be found on the Home page and clicking the option to View More Tools will show them all.

For all these tools, you don’t have to use any video generated by Gen-1 or Gen-2, as everything works on any material you can upload. However, the processes for, say, blurring faces or adding depth of field rely on machine learning, so they may not give you absolutely perfect results, but the entire procedure will be far quicker than doing it all by hand.

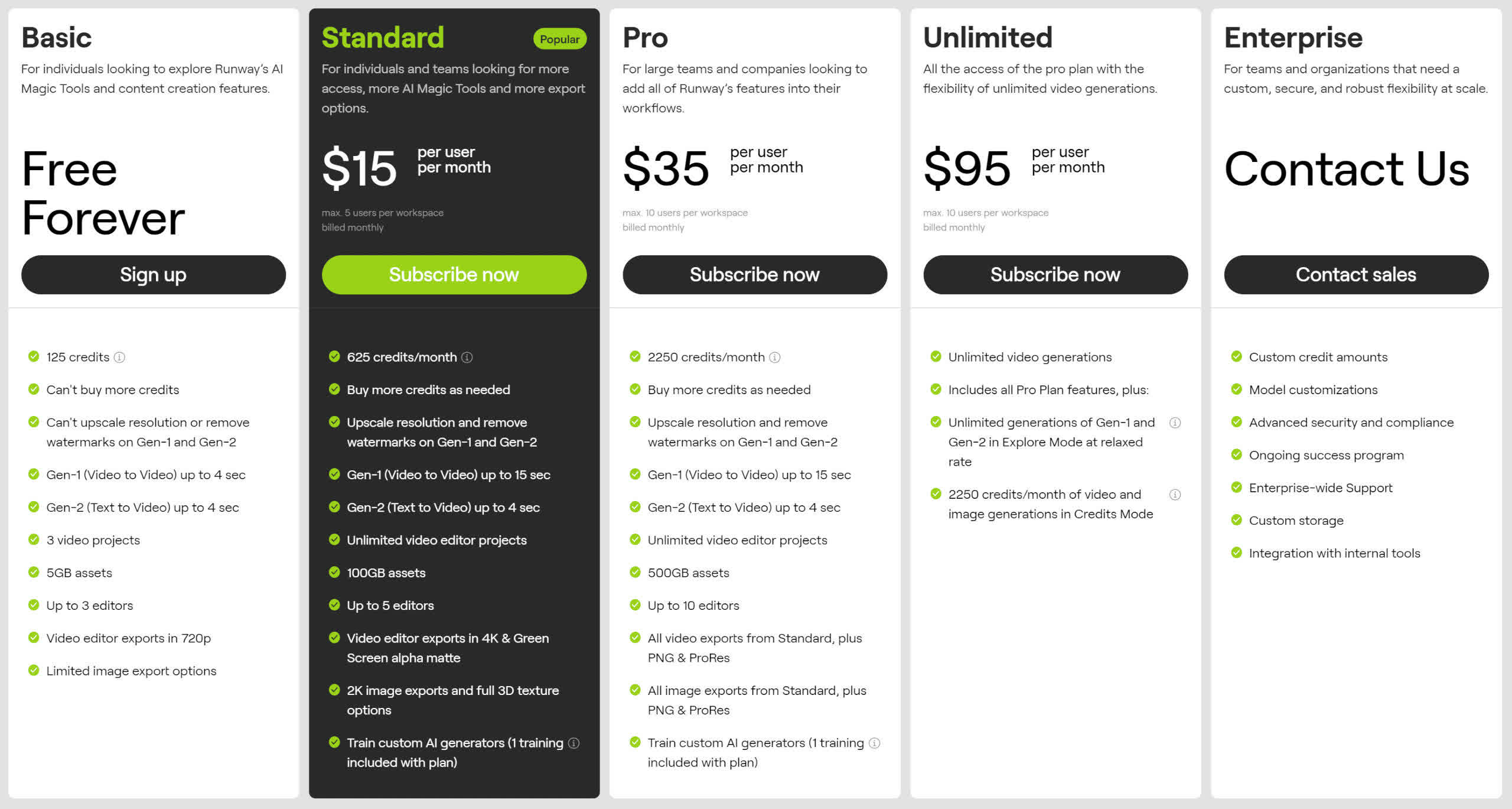

If you’re serious about exploring the world of AI video generation with Runway Gen-2, then you’ll need to consider choosing a subscription plan and this is where things get quite pricey. Unfortunately, there’s no way around this, as AI servers and video file hosting aren’t cheap at all, so the more features and options you want, the more you’ll be charged.

As of writing, the cheapest plan is $15 per month, but you get a decent amount of starting credits, larger asset hosting, and the ability to upscale videos and remove watermarks. At the professional end of the scale, prices climb a lot higher but the fees are still cheaper than having to create your own neural networks and buy the necessary hardware to train and run them.

Spending as little as an hour playing about with Runway Gen-2 shows just why AI content creation has become so popular – in a matter of minutes, anyone can produce images or video clips that could potentially hold up well against material created by hand.

Obviously this has not been lost on the media industry and there are increasing concerns as to the impact AI will have on jobs and content authenticity, but for now, it’s probably best to treat it as something of a fun novelty and just give it a go. You never know, you may just discover a talent for AI media generation and find yourself hooked!