For 25 years, one name was synonymous with computer graphics. Through its trials and tribulations, it fought against other long-standing companies as well as hot new upstarts. Its most successful product brand is still in use today, alongside many of the technologies it pioneered over the years.

We are, of course, referring to ATI Technologies. Here’s the fascinating story of its birth, rise to prominence, and the ultimate conclusion of this Canadian graphics giant.

A new star is born

As with most of the late, great technology companies we’ve covered in our “Gone But Not Forgotten” series, this story begins in the mid-1980s. Lee Lau, who had emigrated to Canada as a child, graduated with a Master’s degree in electronic engineering from the University of Toronto (UoT).

After initially working for a sub-division of Motorola in the early 1980s, he established a small firm called Comway that designed basic expansion cards and graphics adapters for IBM PCs, with the manufacturing process executed in Hong Kong.

This venture brought him into contact with Benny Lau, a circuit design engineer and fellow UoT graduate, and Kwok Yuen Ho, who had amassed many years of experience in the electronics industry. At Lee Lau’s urging, they joined him in turning Comway into a new company. With approximately $230,000 in bank loans and investments (some from Lee’s uncle, Francis Lau), the team officially founded Array Technology Incorporated in August 1985.

Its mission was to exclusively design video acceleration chipsets based entirely on gate arrays (hence the company name), selling them to OEMs for use in IBM PCs and clones and using them in its own expansion cards. Due to legal pressure from a similarly named business in the USA, it adopted the name ATI Technologies within a few months of the company’s inception.

In less than 12 months, it had its first product on the market. The Graphics Solution was a $300 card that could fit into any free 8-bit ISA slot, and supported multiple graphics modes – it could easily switch between MDA, CGA, Hercules, and Plantronics display outputs with the accompanying software.

For a small additional cost, customers could also acquire versions that included parallel and/or serial ports for connecting other peripherals to the PC. The only thing it didn’t offer was IBM’s EGA mode, but given the price, no one was really complaining.

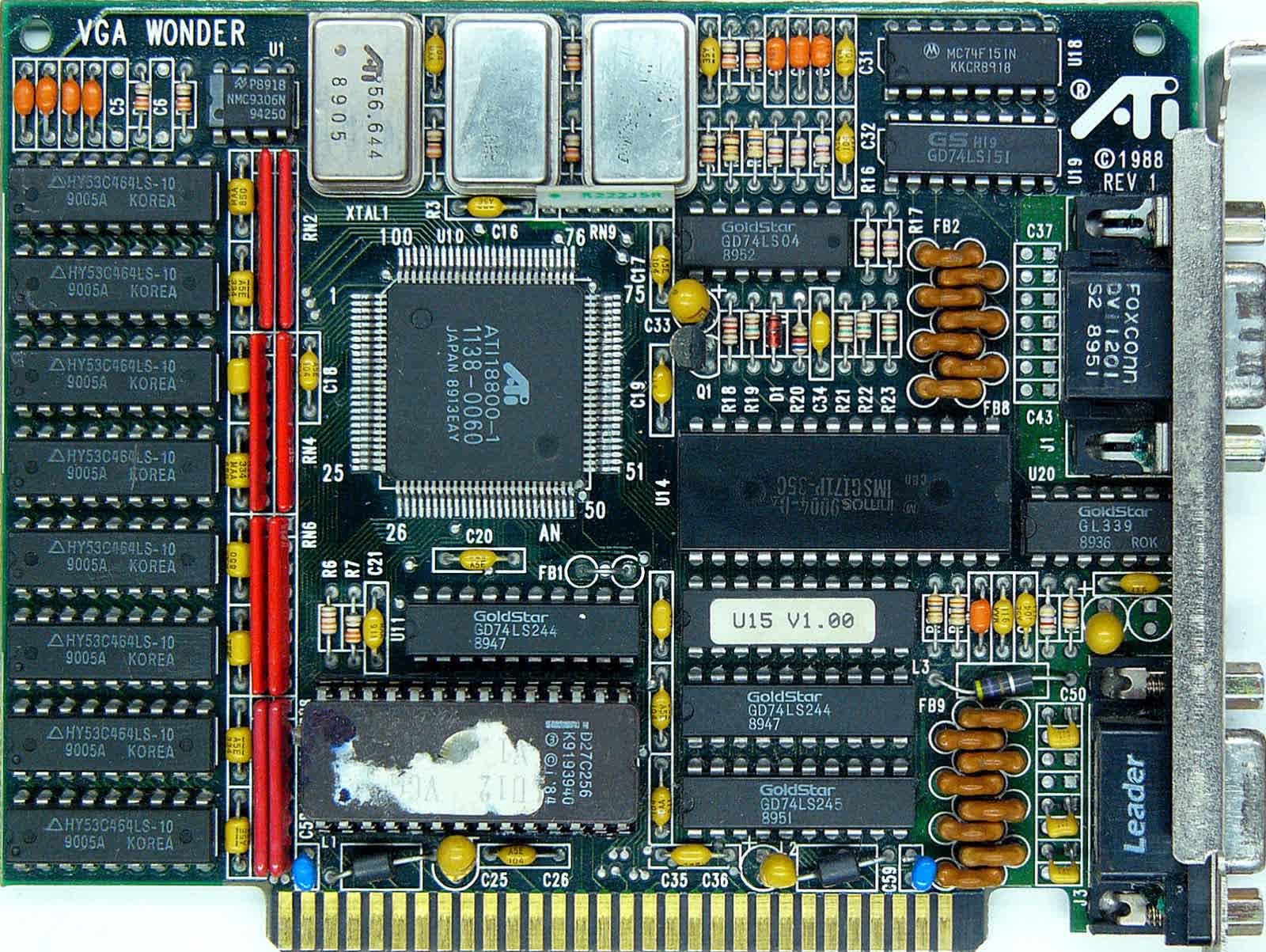

As a newcomer to the industry, ATI perhaps saw only modest sales at first, but with continuous investment and engineering prowess, the group continued to enhance its chips. As the 1980s drew to a close, ATI proved it was not just a fleeting phenomenon – the $400 EGA Wonder (1987) and $500 VGA Wonder (1988, pictured above) showcased substantial improvements over their predecessors and were competitively priced.

Over the years, multiple versions of these products were released. Some were ultra-affordable options with minimal features, while others featured dedicated mouse ports or sound chips on the circuit board. However, this was a highly competitive market, and ATI wasn’t the only company producing such cards.

What was needed was something special, something that would make them stand out from the crowd.

Mach enters the stage

Graphics cards in the 80s were vastly different from what we know today, despite any superficial similarities. Their primary function was to convert the display information generated by the computer into an electrical signal for the monitor – nothing more. All of the computations involved in actually generating the displayed graphics and colors were entirely handled by the CPU.

However, the time was ripe for a dedicated co-processor to take over some of these tasks. One of the first entries on the market came from IBM, in the form of its 8514 display adapter. While less costly than workstation graphics cards, it was still quite pricey at over $1,200, naturally prompting a slew of companies to produce their own clones and variations.

In the case of ATI, this took the shape of the ATI38800 chip – better known as the Mach8. With this processor in a PC, certain 2D graphics operations (e.g., line drawing, area filling, bit blits) could be offloaded from the CPU. One major drawback of the design, however, was its lack of VGA capabilities, so a system needed either a separate card to handle VGA or an additional VGA chipset on the same card.

The ATI Graphics Ultra and Graphics Vantage, released in 1991, were two such models that took the latter route, but they were rather pricey for what they offered. For example, the top-end Ultra, loaded with 1MB of dual-ported DRAM (marketed under the moniker of VRAM), cost $900, though prices quickly plummeted once ATI unveiled its next graphics processor.

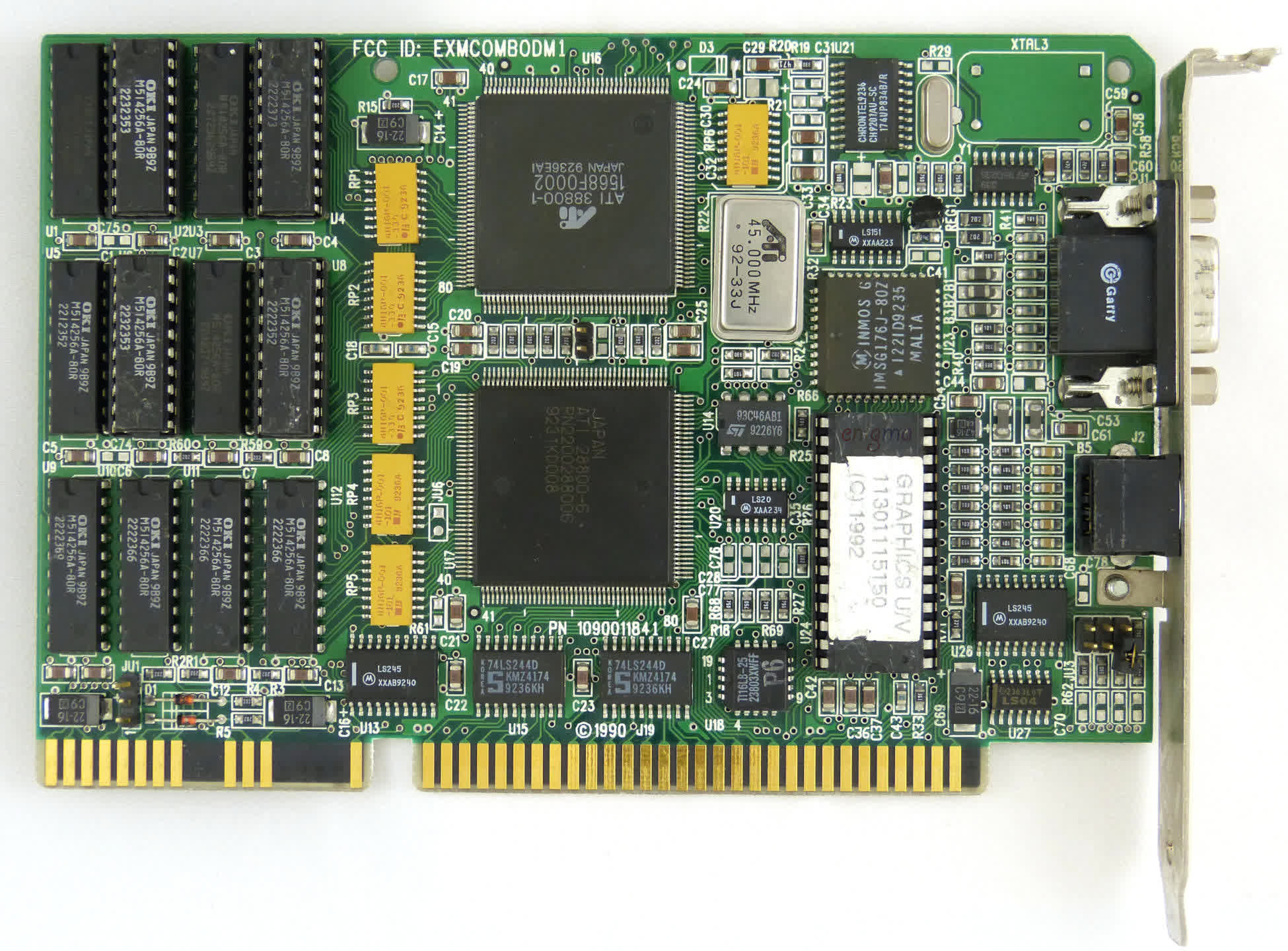

Packed full of improvements, the ATI68800 (Mach32) reached shelves in late 1992. With an integrated VGA controller, 64-bit internal processing, up to 24-bit color support, and hardware acceleration for the cursor, it was a significant step forward for the company.

The Graphics Ultra Pro (above) sported the new chip, along with 2 MB of VRAM and an asking price of $800. It was genuinely powerful, especially with the optional linear aperture feature enabled, which allowed applications to write data directly into video memory. However, like its competitors’ cards, it was not without issues. ATI also offered a more economical Plus version with 1 MB of DRAM and no memory aperture support; this model was less problematic and, at $500, more popular.

Over the next two years, ATI’s sales improved amidst fierce competition from the likes of Cirrus Logic, S3, Matrox, Tseng Laboratories, and countless other firms. In 1992, a subsidiary branch was opened in Munich, Germany, and a year later, the company went public, with shares listed on the Toronto Stock Exchange and Nasdaq.

However, with numerous companies vying in the same arena, maintaining a consistent lead over everyone else was extremely challenging. ATI soon faced its first setback, posting a net loss of just under $2 million.

It didn’t help that other companies had more cost-effective chips. Around this time, Cirrus Logic had purchased a tiny engineering firm called AcuMOS, which had developed a single-die solution containing the VGA controller, RAMDAC, and clock generator. ATI’s models all used separate chips for each role, which meant its cards cost more to manufacture.

Almost two years would pass before it had an appropriate response, in the form of the Mach64. The first variants of this chip still used an external RAMDAC and clock, but these were swiftly replaced by fully integrated models.

This new chip went on to power a multitude of product lines, particularly in the OEM sector. Xpression, WinTurbo, Pro Turbo, and Charger all became familiar names in both household and office PCs.

Despite the delay in launching the Mach64, ATI wasn’t idle in other areas. The founders were consistently observing the competition and foresaw a shift in the graphics market. They purchased a Boston-based graphics team from Kubota Corporation and tasked them with designing the next generation of processors to seize the opportunity once the change began, while the rest of the engineers continued to refine their current chips.

Rage inside the machine

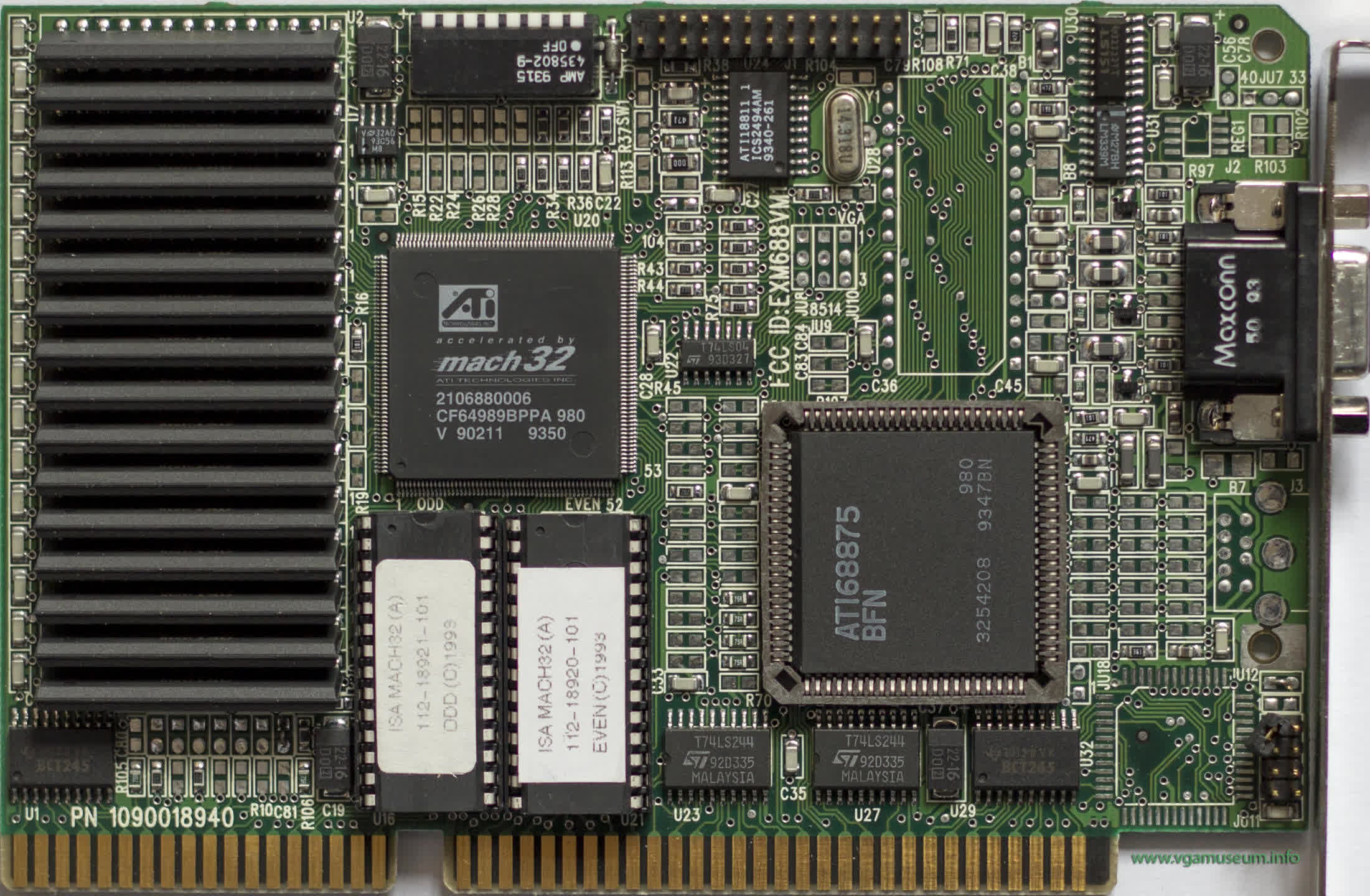

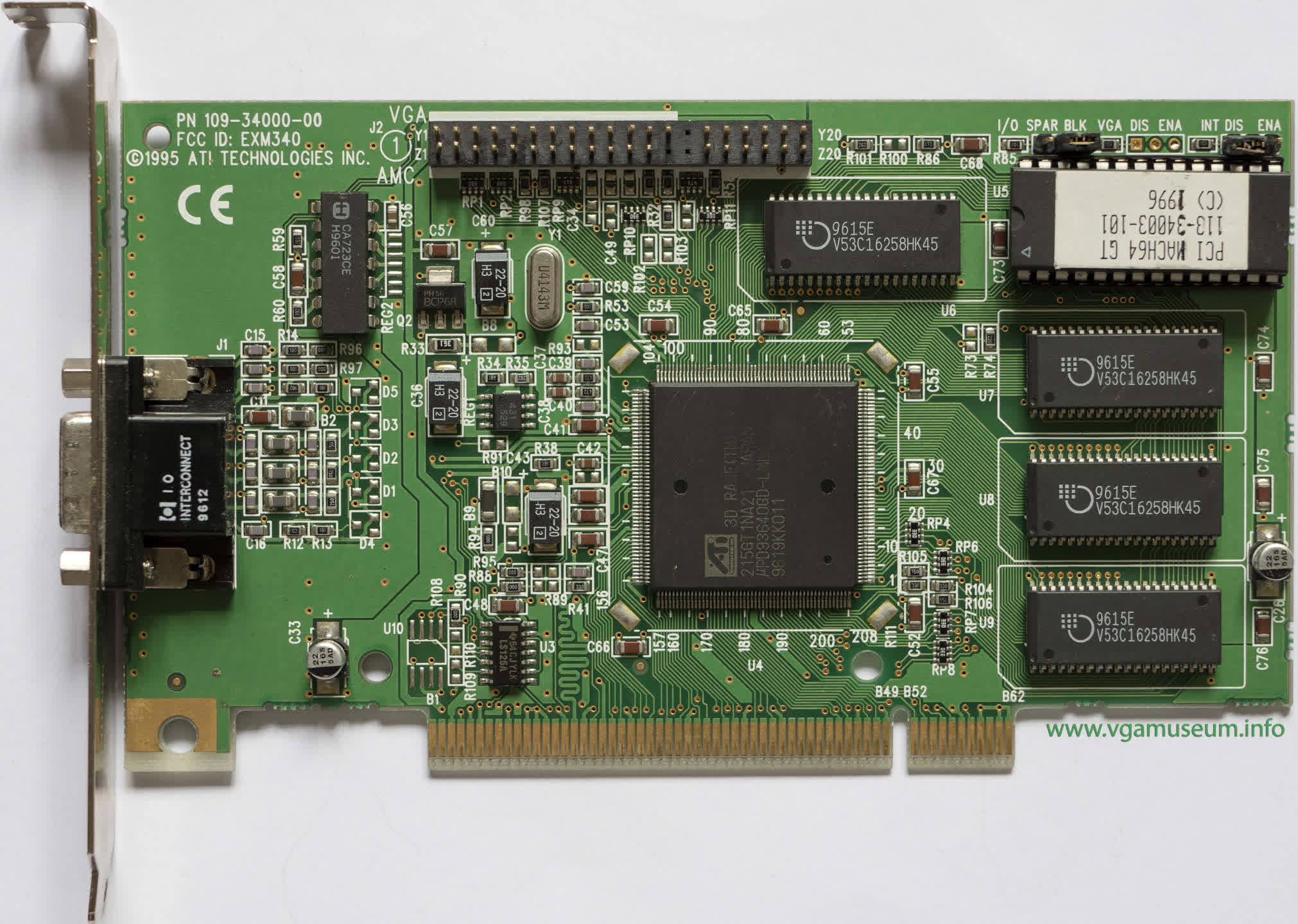

Two years after launching its Mach64-powered cards, ATI brought a new processor into the limelight in April 1996. Codenamed Mach64 GT, but marketed as 3D Rage, this was the company’s first processor offering both 2D and 3D acceleration.

On paper, the 3D Xpression cards that sported the chip looked to be real winners – Gouraud shading, perspective-correct texture mapping (PCTM), and bilinear texture filtering were all supported. With the chip running at 44 MHz, ATI claimed a peak pixel fill rate of 44 Mpixels/s, though this figure came with several caveats.

The 3D Rage was developed with Microsoft’s new Direct3D in mind. However, this API was not only delayed but also underwent constant specification changes before its eventual release in June 1996. As a result, ATI had to develop its own API for the 3D Rage, and only a handful of developers adopted it.

ATI’s processor didn’t support z-buffering, meaning any game that required it wouldn’t run, and the claimed pixel fill rate was only achievable with flat-shaded polygons or those textured without perspective correction. With bilinear filtering or PCTM applied, the fill rate would at least halve.
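The fill-rate caveat can be made concrete with a little arithmetic. The sketch below is a simplified model, not ATI’s specification: it assumes the 44 Mpixels/s figure comes from one pixel per clock at 44 MHz, and it models the halving as each filtered or perspective-corrected pixel costing at least two clocks. The function name and its parameters are hypothetical.

```python
def fill_rate_mpixels(clock_mhz: float, pixels_per_clock: float = 1.0,
                      clocks_per_pixel: float = 1.0) -> float:
    """Peak pixel fill rate in Mpixels/s under a simple clock-based model.

    Assumption: fill rate = clock x pixels written per clock / clocks each pixel costs.
    """
    if clock_mhz <= 0 or pixels_per_clock <= 0 or clocks_per_pixel <= 0:
        raise ValueError("all inputs must be positive")
    return clock_mhz * pixels_per_clock / clocks_per_pixel


# Flat-shaded polygons: the advertised best case (assumed one pixel per clock).
flat = fill_rate_mpixels(44)
# Bilinear filtering or PCTM: assumed to cost at least two clocks per pixel.
filtered = fill_rate_mpixels(44, clocks_per_pixel=2)
print(f"flat: {flat:.0f} Mpixels/s, filtered: at most {filtered:.0f} Mpixels/s")
```

Under these assumptions, the headline 44 Mpixels/s drops to 22 Mpixels/s or less as soon as the features that made the chip attractive on paper are switched on.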

To make matters worse, the mere 2 MB of EDO DRAM on the early 3D Xpression models was slow and limited 16-bit color rendering to a maximum resolution of 640 x 480. And to cap it all off, even in games it could run, the 3D performance of the Rage processor was, at best, underwhelming.

Fortunately, the product did have some positives – it was reasonably priced at around $220, and its 2D and MPEG-1 acceleration were commendable for the price. ATI’s OEM contracts ensured that many PCs were sold with a 3D Xpression card inside, which helped maintain a steady revenue stream.

An updated version of the Mach64 GT (aka the 3D Rage II) appeared later the same year. This iteration supported z-buffering and palettized textures, along with a range of APIs and drivers for multiple operating systems. Performance improved, thanks to higher clock speeds and a larger texture cache, but was still underwhelming, especially compared to the offerings of new competitors.

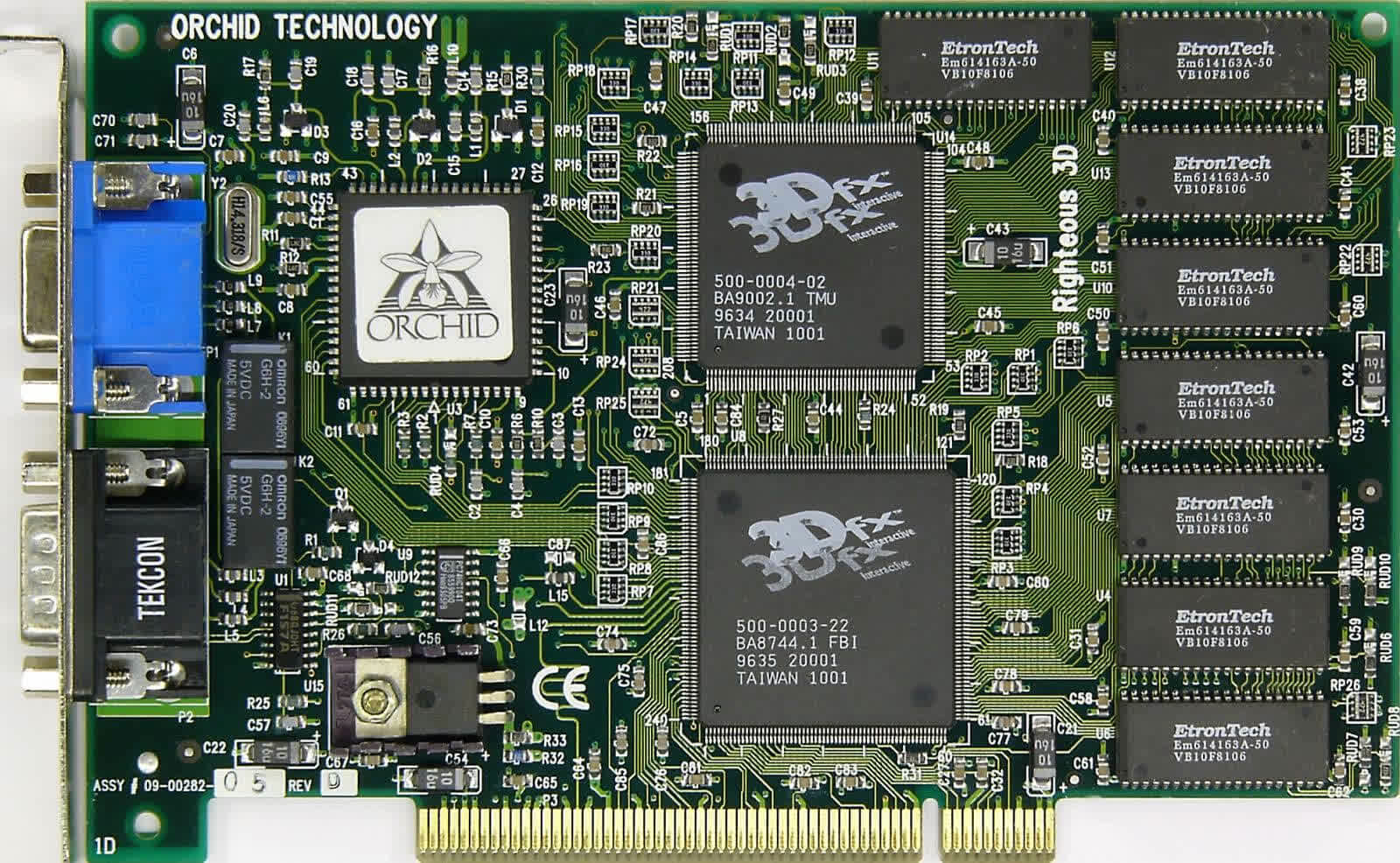

Released in October 1996, 3dfx Voodoo Graphics was 3D-only, but its rendering chops made the Rage II look decidedly second-class. None of ATI’s products came close to matching it.

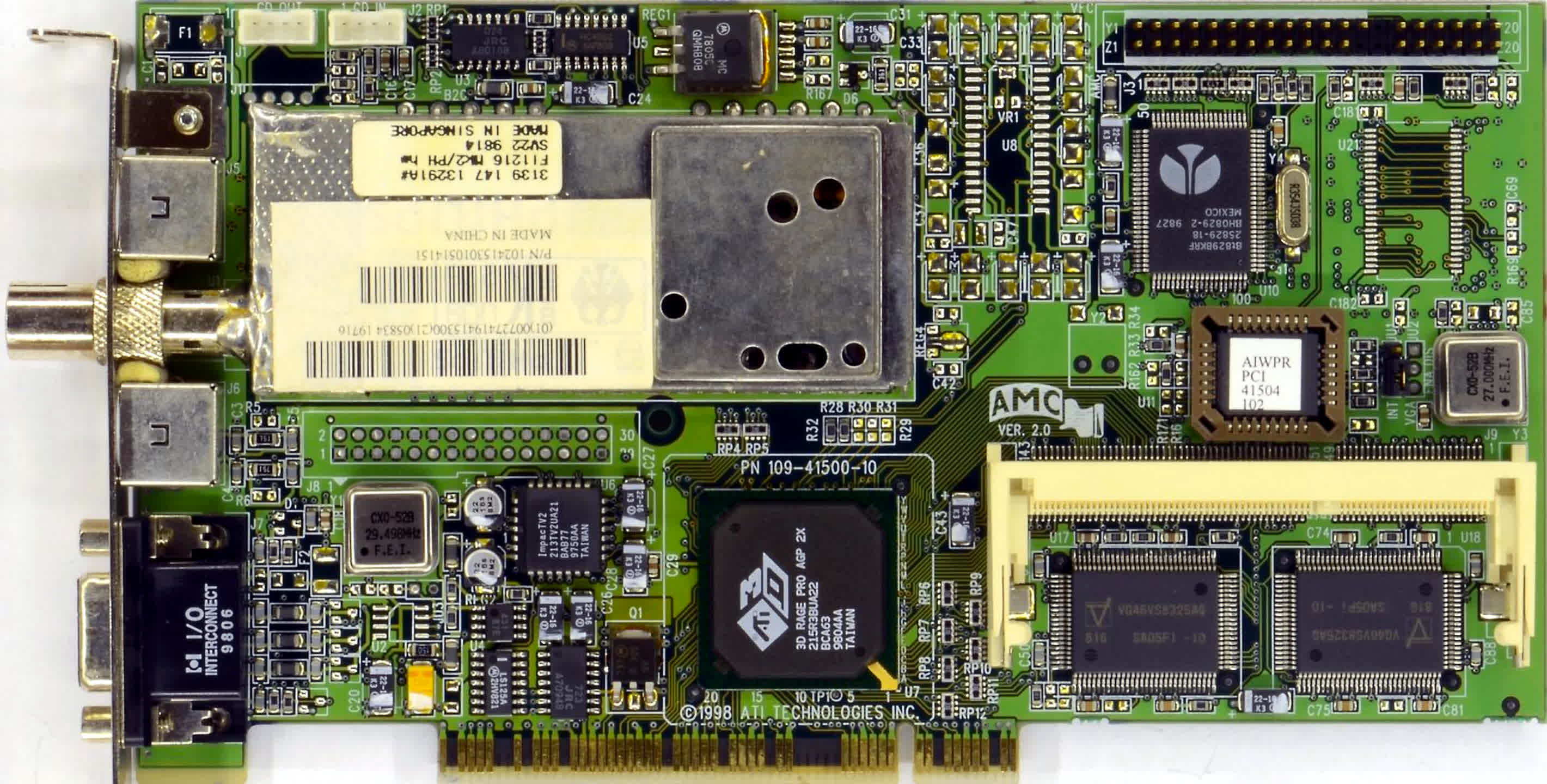

ATI was more invested in the broader PC market. It developed a dedicated TV encoding chip, the ImpacTV, and along with the addition of MPEG-2 acceleration in the 3D Rage II chip, consumers could purchase an all-in-one media card for a relatively modest sum.

The same story played out with the next update in 1997, the 3D Rage Pro. Despite the chip’s enhanced rendering capabilities, Nvidia’s new Riva 128, when paired with a competent CPU, significantly outperformed it.

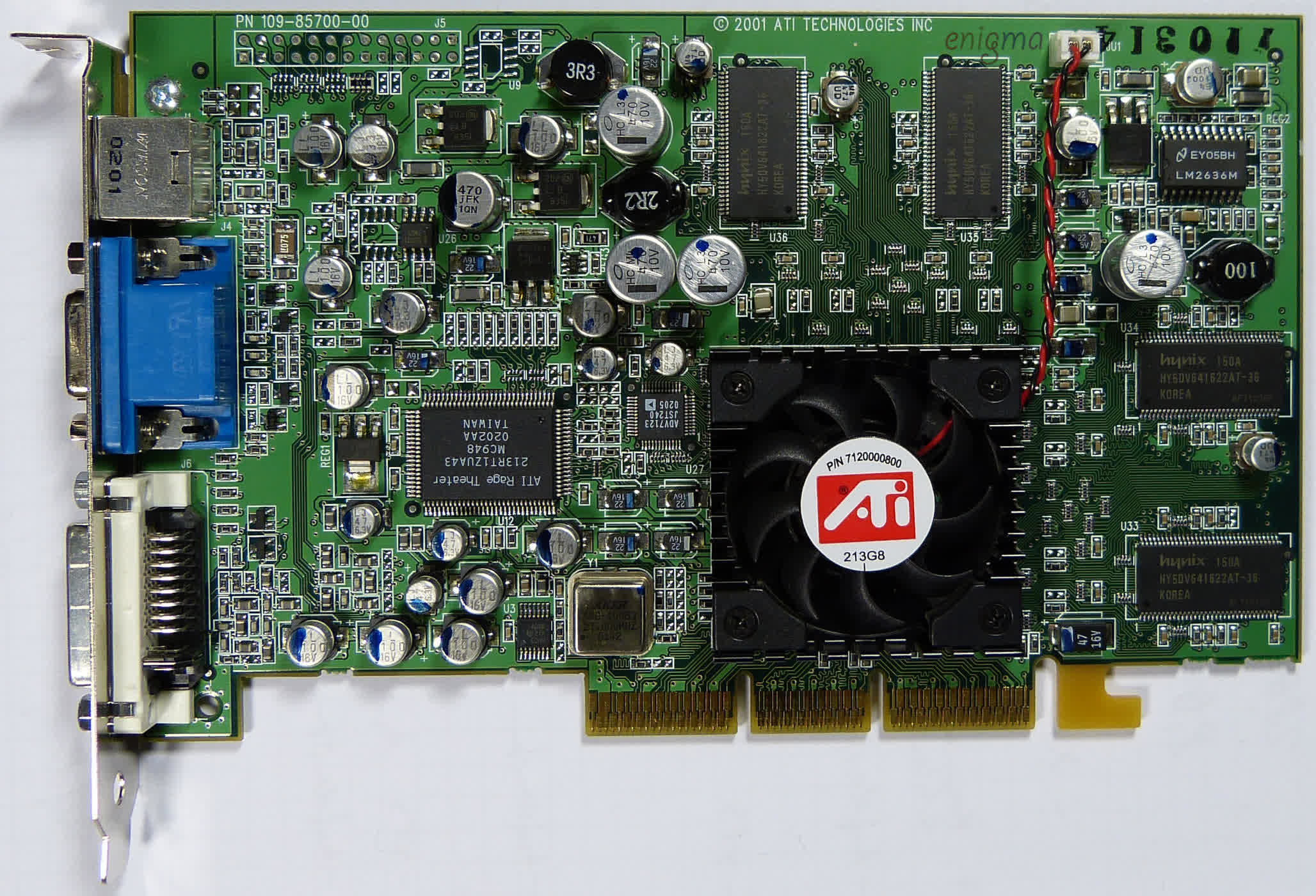

However, cards such as the Xpert@Play appealed to budget-conscious buyers with their comprehensive feature sets. The All-in-Wonder model (shown above) exemplified this: it was a standard Rage Pro graphics card equipped with a dedicated TV tuner and video capture hardware.

As the development of graphics chips accelerated, smaller firms struggled to compete, and ATI acquired a former competitor, Tseng Laboratories, at the end of the year. The influx of new engineers and expertise resulted in a substantial update to the Rage architecture in 1998 – the Rage 128. But by this time, 3dfx Interactive, Matrox, and Nvidia all had impressive chip designs and a range of products on the market. ATI’s designs just couldn’t compete at the top end.

Still, ATI’s products found success in other markets, particularly in the laptop and embedded sectors, securing a stronger revenue stream than any other graphics firm. However, these markets also had slim profit margins.

The late 1990s saw several changes within the company – Lee Lau and Benny Lau departed for new ventures, while additional graphics companies (Chromatic Research and a part of Real3D) were acquired. Despite these changes, chip development continued unabated, resulting in what would eventually be the penultimate version of the 3D Rage featured in a new round of models.

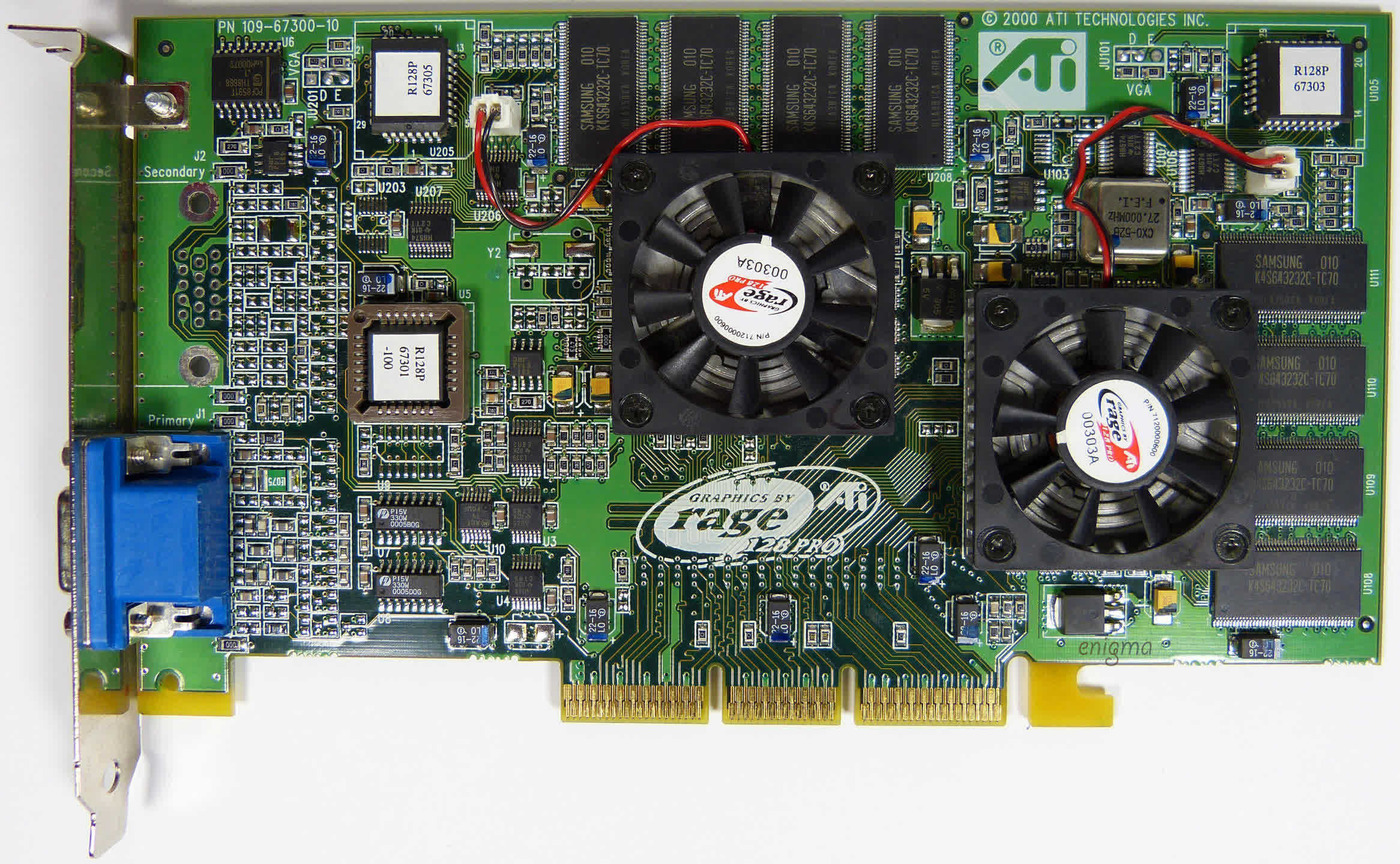

The Rage 128 Pro chip powered Rage Fury cards for the gaming sector and Xpert 2000 boards for office machines. ATI even mounted two processors on one card, the Rage Fury MAXX (above), to compete against the very best from 3dfx and Nvidia.

On paper, the chips in this particular model had the potential to offer almost double the performance of the standard Rage Fury. Unfortunately, it just wasn’t as good as Nvidia’s new GeForce 256, and it was more expensive; it also lacked hardware-accelerated vertex transform and lighting (TnL), a new feature of Direct3D 7 at the time.

It was clear that if ATI was going to be seen as a market leader in gaming, it needed to create something special – again.

A new millennium, a new purchase

With the brand name Rage associated with inexpensive yet competent cards, ATI began the new millennium with significant changes. They simplified the old architecture codename – Rage 6c became R100 – and introduced a new product line: the Radeon.

However, the changes were more than just skin-deep. They overhauled the graphics processing unit (GPU, as it was increasingly being called), turning it into one of the most advanced consumer-grade rendering chips of the time.

One vertex pipeline, fully capable of hardware Transform and Lighting (TnL), fed into a complex triangle setup engine. This engine could sort and cull polygons based on their visibility in a scene. In turn, this fed into two pixel pipelines, each housing three texture mapping units.

Unfortunately, its advanced features proved a double-edged sword. The most commonly used rendering API for games at that time, Direct3D 7, didn’t support all its functions, and neither did its successor. Only through OpenGL and ATI’s extensions could its capabilities be fully realized.

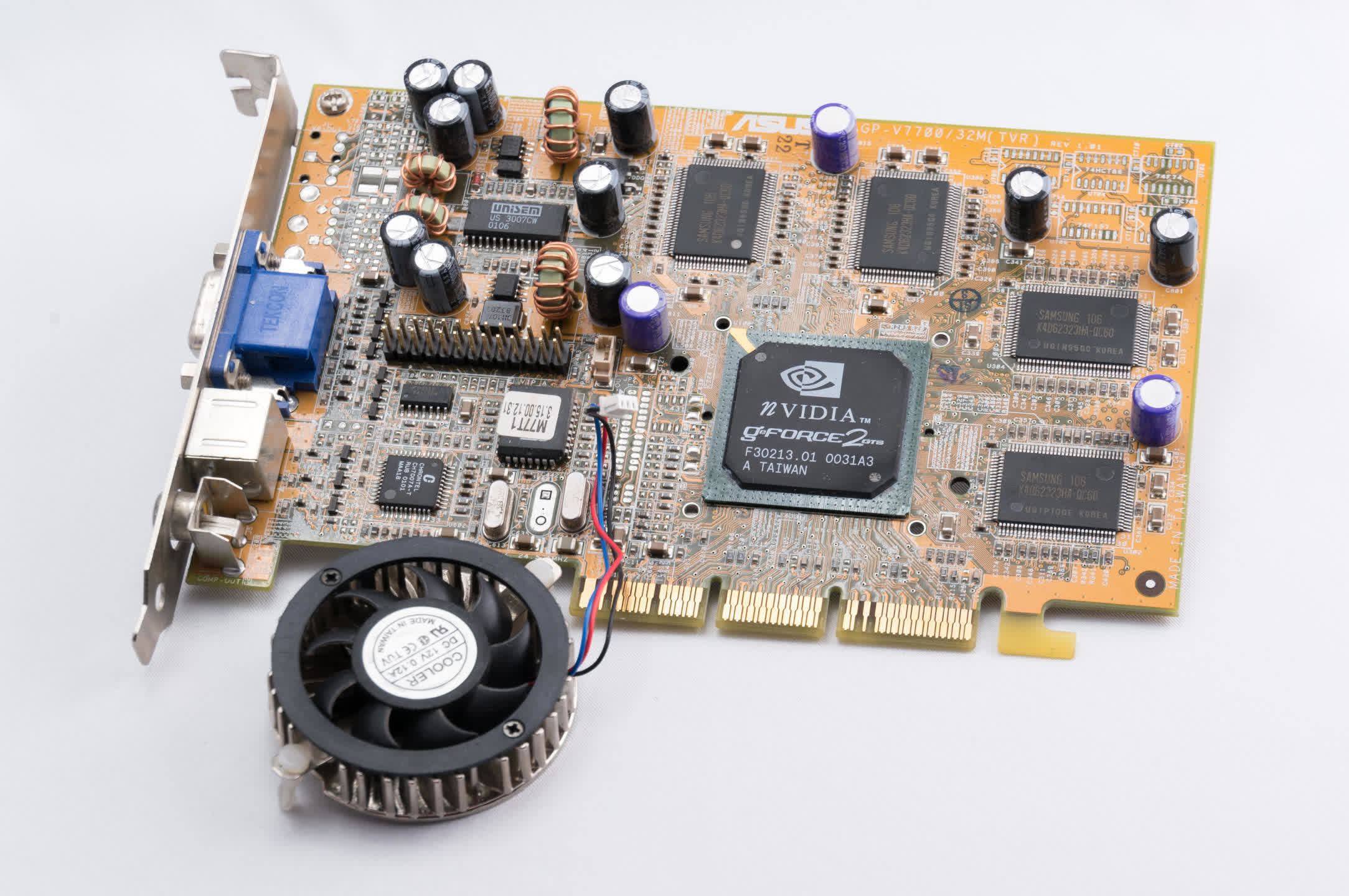

The first Radeon graphics card hit the market on April 1st, 2000. Despite potential jokes about the date, the product was no laughing matter. With 32 MB of DDR-SDRAM, clock speeds of 166 MHz, and a retail price of around $280, it competently rivaled the Voodoo5 and GeForce2 series from 3dfx and Nvidia, respectively.

This was certainly true when playing games in 32-bit color and at high resolution, but at lower settings and 16-bit color, the competition was a lot stronger. Initial drivers were somewhat buggy and OpenGL performance wasn’t great, either, although both of these aspects improved in due course.

Over the next year, ATI released multiple variants of the R100 Radeon: a version using SDRAM instead of DDR-SDRAM (cheaper but slower), a 64 MB DDR-SDRAM version, and a budget RV100-powered Radeon VE. The RV100 chip was essentially a defective R100, with many parts disabled to prevent operational bugs. It was priced competitively against Nvidia’s GeForce2 MX card, but like its parent processor, it couldn’t quite match up.

However, ATI still dominated the OEM market, and its coffers were full. Before the Radeon hit the market, ATI set out once again to acquire promising prospects. They purchased the recently formed graphics chip firm ArtX for a significant sum of $400 million in stock options. This might seem unusual for such a young business, but ArtX stood out.

Composed of engineers initially from Silicon Graphics, the group was behind the development of the Reality Signal Processor in the Nintendo 64 and was already contracted to design a new chip for the console’s successor. ArtX even had experience creating an integrated GPU, which was licensed by the ALi Corporation for its budget Pentium motherboard chipset (the Aladdin 7).

Through this acquisition, ATI gained not just fresh engineering talent but also the contract to produce the graphics chip for Nintendo’s GameCube. Based in Silicon Valley, the team was tasked with aiding the development of a new, highly programmable graphics processor, although it would take a few years before it was ready.

ATI continued its pattern of creating technically impressive yet underperforming products in 2001 with the release of the R200 chip and the next generation of Radeon cards, now following a new 8000-series naming scheme.

ATI addressed all the shortcomings of the R100, making it fully compliant with Direct3D 8, and added even more pipelines and features. On paper, the new architecture offered more than any other product on the market. It was the only product that supported pixel shader v1.4, raising expectations.

But once again, outside of synthetic benchmarking and theoretical peak figures, ATI’s best consumer-grade graphics card simply wasn’t as fast as Nvidia’s. The consequences showed in the company’s finances: between 1999 and 2001, despite accumulating $3.5 billion in sales, ATI recorded a net loss of nearly $17 million.

In contrast, Nvidia, its main competitor, earned less than half of ATI’s revenue but made a net profit of $140 million, largely due to selling a significant volume of high-end graphics cards with much larger profit margins than basic OEM cards.

Part of ATI’s losses stemmed from its acquisitions – after ArtX, ATI purchased a section of Appian Graphics (specifically, the division developing multi-monitor output systems) and Diamond Multimedia’s FireGL brand and team. The latter provided a foothold in the workstation market, as FireGL had built a solid reputation in its early years using 3DLabs’ graphics chips.

But ATI couldn’t simply spend its way to the top. It needed more than just a wealth of high-revenue, low-margin contracts. It needed a graphics card superior in every possible way to everything else on the market. Again.

The killer card finally arrives

Throughout the first half of 2002, rumors and purported comments from developers regarding ATI’s upcoming R300 graphics chip began to circulate on the internet.

The design of this next-generation GPU was heavily influenced by the former ArtX team. Some of the figures being touted – like double the number of transistors compared to the R200, 20% higher clock speeds, 128-bit floating point pixel pipelines, and anti-aliasing with nearly no performance hit – seemed almost too good to be true.

Naturally, most people dismissed these claims as mere hyperbole. After all, ATI had garnered a reputation for over-promising and under-delivering.

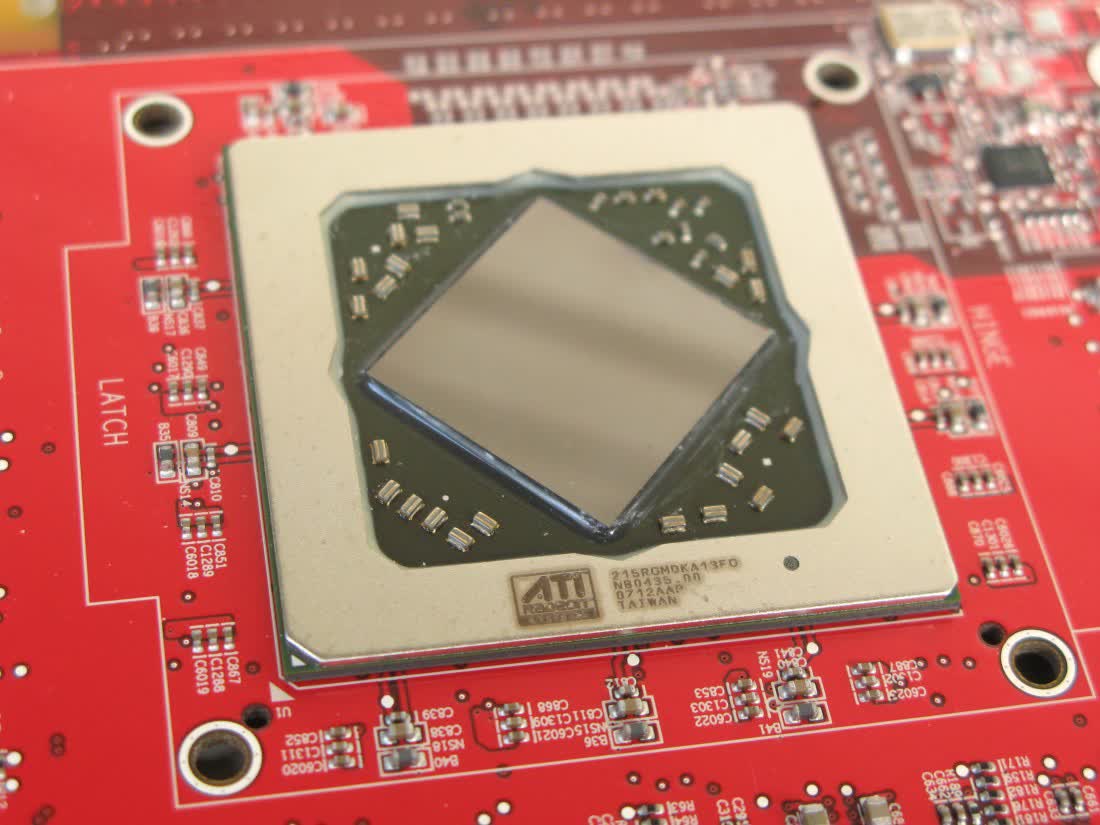

But when the $400 ATI Radeon 9700 Pro appeared in August, sporting the new R300 at its heart, the reality of the product was a huge surprise. While not every rumor proved accurate, taken together they had underestimated the card’s power. In every review, ATI’s new model completely outclassed its competitors; neither the Matrox Parhelia-512 nor the Nvidia GeForce4 Ti 4600 could compete.

Every aspect of the processor’s design had been meticulously fine-tuned. This ranged from the highly efficient crossbar and 256-bit memory bus to the first use of flip-chip packaging for a graphics chip to facilitate higher clocks.

With twice as many pipelines as the 8500 and full compliance with Direct3D 9 (still a few months from release), the R300 was an engineering marvel. Even the drivers, a long-standing weak point for ATI, were stable and rich in features.

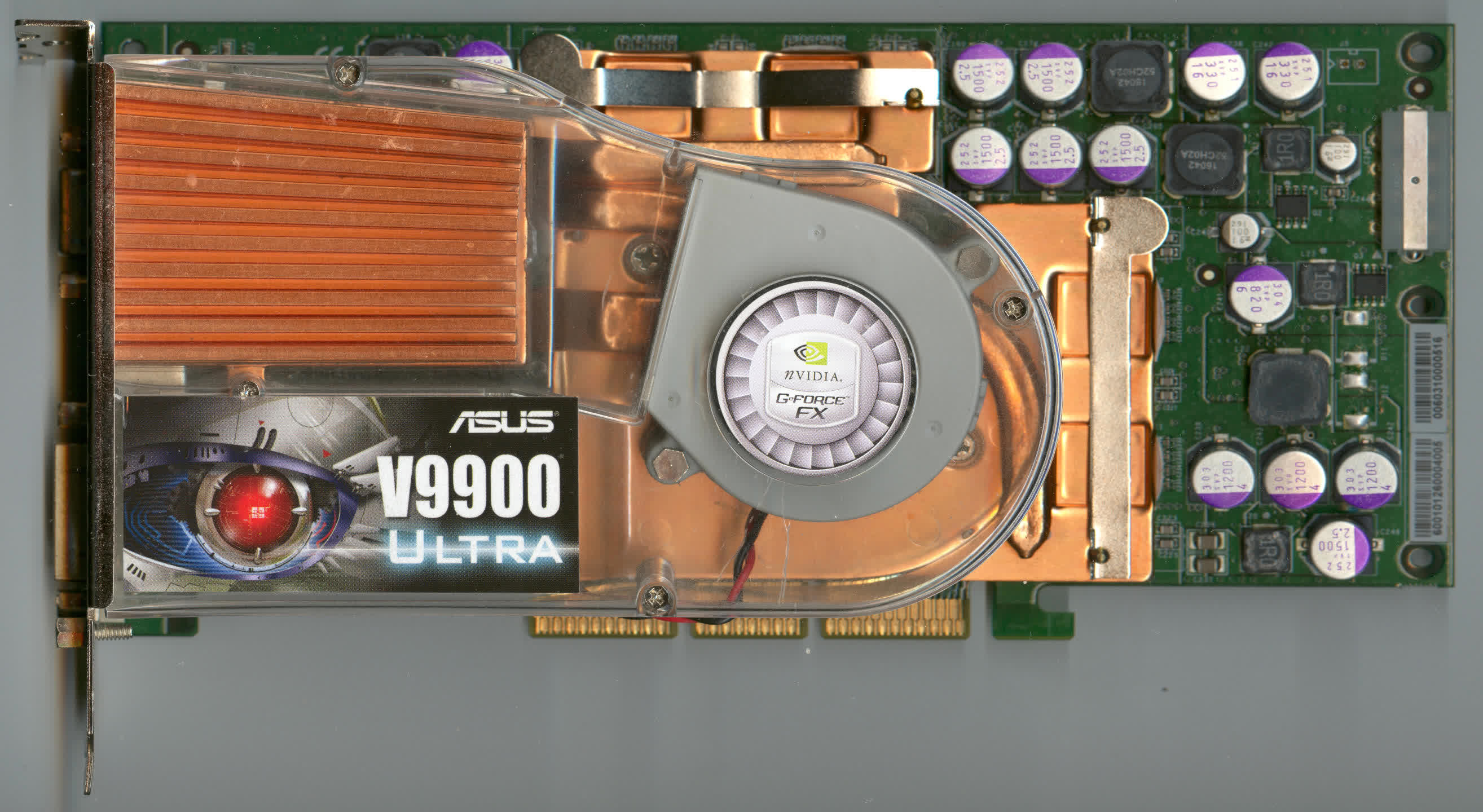

The Radeon 9700 Pro and the slower, third-party-only Radeon 9700 were instant hits, bolstering revenues in 2003. For the first time in three years, the net income was also positive. Nvidia’s NV30-based GeForce FX 5800 series, released in the spring, further aided ATI – it was late to market, power-hungry, hot, and marred by significant obfuscation by Nvidia.

The NV30, like the R300, purportedly had eight pixel pipelines. However, while ATI’s pipelines processed colors at 24-bit floating-point precision (FP24, the minimum required for Direct3D 9), Nvidia’s were supposedly a full 32-bit. Except, that wasn’t entirely true.

In reality, Nvidia’s new GPU had four pipelines, each with two texture units, so how could it claim eight? That figure applied only to specific operations, primarily z-buffer and stencil buffer reads and writes.

In essence, each ROP could handle two depth value calculations per clock – a feature that would eventually become standard for all GPUs. The FP32 claim was a similar story; for color operations, the drivers would often force FP16 instead.
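Why the same chip could be described as having four or eight pipelines comes down to what is being counted. The minimal sketch below uses only the figures given above (four pipelines, two depth/stencil operations per clock in each ROP) and assumes one color pixel per pipeline per clock; the class and method names are illustrative, not Nvidia terminology.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PixelBackEnd:
    pipelines: int          # physical pixel pipelines
    color_per_clock: int    # color pixels each pipeline writes per clock (assumed 1)
    z_only_per_clock: int   # depth/stencil-only values each ROP handles per clock

    def color_pixels_per_clock(self) -> int:
        return self.pipelines * self.color_per_clock

    def z_only_ops_per_clock(self) -> int:
        return self.pipelines * self.z_only_per_clock


nv30 = PixelBackEnd(pipelines=4, color_per_clock=1, z_only_per_clock=2)
print(nv30.color_pixels_per_clock(), nv30.z_only_ops_per_clock())  # 4 8
```

In this model, the "eight" only applies to work that writes depth or stencil values without color; ordinary colored pixels still come out four per clock.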

In the right circumstances, the GeForce FX 5800 Ultra outperformed the Radeon 9700 Pro, but most of the time, the ATI card proved superior – especially when using anti-aliasing (AA) and anisotropic texture filtering (AF).

The designers persistently refined the R300, and each successive version (R420 in 2004, R520 in 2005) went head-to-head with the best from Nvidia – stronger in Direct3D games, much stronger with AA+AF, but slower in OpenGL titles, especially those using id Software’s engines.

Healthy revenues and profits characterized this period, and the balanced competition meant that consumers benefited from excellent products across all budget sectors. In 2005, ATI acquired Terayon Communication Systems to bolster its already dominant position in onboard TV systems and, in 2006, purchased BitBoys Oy to strengthen its foothold in the handheld devices industry.

ATI’s R300 design caught Microsoft’s attention, leading the tech giant to contract ATI to develop a graphics processor for its next Xbox console, the 360. When it launched in November 2005, the direction of future GPUs and graphics APIs became clear – a fully unified shader architecture where discrete vertex and pixel pipelines would merge into a single array of processing units.

While ATI was now one step ahead of its competitors in this area, it still needed to sustain its growth. The solution to this thorny problem came as an almighty surprise.

All change at the top

In 2005, the co-founder and long-standing Chairman, Kwok Yuen Ho, retired from ATI. He, his wife, and several others had spent two years under investigation for alleged insider trading in company shares dating back to 2000. Although the couple was exonerated of all charges, ATI itself was not, paying almost $1 million in penalties for misleading regulators.

Ho had already stepped down as CEO by then. David Orton, former CEO of ArtX, took over in 2004. James Fleck, an already wealthy and successful businessman, replaced Ho as Chairman. For the first time in twenty years, ATI had a leadership structure without any connections to its origins.

In 2005, the company posted its highest-ever revenues – slightly over $2.2 billion. While its net income was a modest $17 million, ATI’s sole competitor in the market, Nvidia, was not faring much better, despite having a larger market share and bigger profits.

With this in mind, the new leadership was confident in realizing its goals of boosting revenue growth by developing its weaker sectors and consolidating its strengths.

In July 2006, almost completely out of the blue, AMD announced its intention to acquire ATI. The deal was worth $4.2 billion in cash (60% of which would come from a bank loan) plus $1.2 billion in AMD shares. AMD had initially approached Nvidia but rejected the terms Nvidia proposed.

The acquisition was a colossal gamble for AMD, but ATI was elated, and for good reason. This was an opportunity to push Nvidia out of the motherboard chipset market. ATI could potentially use AMD’s own foundries to manufacture some of its GPUs, and its designs could be integrated into CPUs.

AMD was evidently willing to spend a lot of money and had the financial backing to do so. Best of all, the deal would also mean that ATI’s name would remain in use.

The acquisition was finalized by October 2006, and ATI officially became part of AMD’s Graphics Product Group. Naturally, there was some reshuffling in the management structure, but it was otherwise business as usual. The first outcome was the release of the R600 GPU in May 2007 – ATI’s first unified shader architecture, known as TeraScale, for the x86 market.

The new design was clearly based on the work done for the Xbox 360’s graphics chip, but the engineers had scaled everything considerably higher. Instead of having dedicated pipelines for vertex and pixel shaders, the GPU was made up of four banks of sixteen Stream Processor Units (each containing five Arithmetic Logic Units, or ALUs) that could handle any shader.

Boasting 710 million transistors and measuring 420 mm², the R600 was twice the size of the R520. The first card to use the GPU, the Radeon HD 2900 XT, also had impressive metrics – 215 W power consumption, a dual-slot cooler, and 512 MB of fast GDDR3 on a 512-bit memory bus.

Nvidia had beaten ATI to the market by launching its own unified architecture (known as Tesla) about six months earlier. Its G80 processor debuted in the GeForce 8800 GTX and GTS in November 2006, and the faster GeForce 8800 Ultra followed in May 2007. When matched in actual games, ATI’s best was slower than the Ultra and GTX, but better than the GTS.

The Radeon HD 2900 XT’s saving grace was its price. At $399, it was $200 cheaper than the GeForce 8800 GTX’s MSRP (though this gap had narrowed to about $100 by the time the Radeon launched) and a staggering $420 less than the Ultra. For the money, the performance was superb.

Why wasn’t it better? Some of the performance deficit compared to Nvidia’s models could be attributed to the R600 having fewer Texture Mapping Units (TMUs) and Render Output Units (ROPs) than the G80. Games at the time were still heavily dependent on texturing and fill rate (yes, even Crysis).

TeraScale’s shader processing rate was also highly dependent on the compiler’s ability to group independent instructions so that all five ALUs in each stream processor had work to do. In short, the design was progressive and forward-thinking (the use of GPUs in compute servers was still in its infancy), but it was not ideal for the games of that period.
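To see why the compiler mattered so much, consider how a five-wide stream processor is fed. The sketch below is a simplified greedy list scheduler, not AMD’s actual shader compiler: each operation is a scalar instruction with a list of earlier operations it depends on, and each bundle can issue up to five mutually independent operations, matching the five ALUs per stream processor described earlier. The R600 total of 4 × 16 × 5 = 320 ALUs follows from the article’s figures; the function names are hypothetical.

```python
from typing import List, Sequence, Set

# R600 layout as described in the article: 4 banks x 16 stream processors x 5 ALUs.
BANKS, SPUS_PER_BANK, ALUS_PER_SPU = 4, 16, 5
TOTAL_ALUS = BANKS * SPUS_PER_BANK * ALUS_PER_SPU  # 320


def pack_bundles(deps: Sequence[Sequence[int]], width: int = ALUS_PER_SPU) -> List[List[int]]:
    """Greedily pack scalar ops into VLIW bundles of at most `width` ops.

    deps[i] lists the ops that op i needs; an op is only issued once all of
    them sit in an earlier bundle, so ops within one bundle are independent.
    """
    done: Set[int] = set()
    remaining = list(range(len(deps)))
    bundles: List[List[int]] = []
    while remaining:
        ready = [i for i in remaining if all(d in done for d in deps[i])]
        if not ready:
            raise ValueError("dependencies cannot be satisfied")
        bundle = ready[:width]
        bundles.append(bundle)
        done.update(bundle)
        remaining = [i for i in remaining if i not in done]
    return bundles


def slot_utilisation(deps: Sequence[Sequence[int]], width: int = ALUS_PER_SPU) -> float:
    """Fraction of ALU slots doing useful work across all issued bundles."""
    if not deps:
        return 0.0
    return len(deps) / (len(pack_bundles(deps, width)) * width)


independent = [[] for _ in range(5)]    # five unrelated operations
chain = [[]] + [[i] for i in range(4)]  # each op needs the previous one
print(TOTAL_ALUS, slot_utilisation(independent), slot_utilisation(chain))
```

Five independent operations fill one bundle completely, while a five-step dependency chain needs five bundles and leaves 80% of the slots idle. Real shader code falls somewhere in between, which is why TeraScale’s throughput in games depended so heavily on how much independent work the compiler could find.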

ATI had demonstrated that it was perfectly capable of creating a unified shader architecture specifically for gaming with the Xenos chip in the Xbox 360. Therefore, the decision to focus more on computation with a GPU was likely driven by internal pressure from senior management.

After investing so much in ATI, AMD was pinning high hopes on its Fusion project – the integration of a CPU and GPU into a single, cohesive chip that would allow both parts to work on certain tasks in parallel.

This direction was also followed with the 2008 iteration of TeraScale GPUs – the RV770. In the top-end Radeon HD 4870, the engineers increased the shader unit count by 2.5 times for just 33% more transistors.

The use of faster GDDR5 memory allowed the memory bus to be halved in width. Coupled with TSMC’s 55nm process node, the entire die was almost 40% smaller than the R600. All this allowed the card to retail at $300.
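Faster memory lets the bus shrink because of simple arithmetic: peak bandwidth is the bus width in bytes multiplied by the effective per-pin data rate. The figures below are round, illustrative numbers, not the exact specifications of the HD 2900 XT or HD 4870, and the function name is hypothetical.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_mtps: float) -> float:
    """Peak memory bandwidth in GB/s.

    bus_width_bits / 8 gives bytes per transfer; data_rate_mtps is the
    effective transfer rate per pin, in millions of transfers per second.
    """
    return bus_width_bits / 8 * data_rate_mtps / 1000


# Illustrative only: a wide bus with slower memory vs. half the width at twice the rate.
wide_slow = peak_bandwidth_gbs(512, 2000)
narrow_fast = peak_bandwidth_gbs(256, 4000)
print(wide_slow, narrow_fast)  # 128.0 128.0
```

Halving the width while doubling the data rate keeps peak bandwidth unchanged, and a narrower interface needs fewer memory chips, board traces, and GPU I/O pads, which is consistent with the smaller die and lower price.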

Once again, it played second fiddle to Nvidia’s latest GeForce cards in most games. However, while the GeForce GTX 280 was seriously fast, its launch MSRP was more than double that of the Radeon HD 4870.

The era of ATI’s graphics cards being the best in terms of absolute gaming performance was gone, but no one was beating them when it came to value for money. Unlike the 3D Rage era, when the products were cheap and slow, the latest Radeons were affordable yet still delivered satisfactory performance.

In the late summer of 2009, a revised TeraScale architecture introduced a new round of GPUs. The family was called Evergreen, while the specific model used in the high-end Radeon HD 5870 was known as Cypress or RV870. Regardless of the naming confusion, the engineers managed to double the number of transistors, shader units, TMUs, etc.

The gaming performance was excellent, and the small increase in MSRP and power consumption was acceptable. Only dual-GPU cards, such as Nvidia’s GeForce GTX 295 and ATI’s own Radeon HD 4870 X2, were faster, though they were naturally more expensive. The HD 5870 only faced real competition six months later, when Nvidia released its new Fermi architecture in the GeForce GTX 480.

While not quite a return to the 9700 Pro days, the TeraScale 2 chip showed that ATI’s designs were not to be dismissed.

Goodbye to ATI?

In 2010, ATI had an extensive GPU portfolio – its discrete graphics cards spanned all price segments, and though its market share was around half of Nvidia’s, Radeon chips were common in laptops from a variety of vendors. Its professional graphics range (FireGL, by then rebranded as FirePro) was thriving, and OEM and console GPU contracts were robust.

The less successful parts of the business had already been trimmed. Motherboard chipsets and the multimedia-focused All-in-Wonder range had been discontinued a few years earlier, and AMD had sold the Imageon chipsets, used in handheld devices, to Qualcomm.

Despite all this, AMD’s management believed that the ATI brand didn’t have enough standing on its own, especially in comparison to AMD’s name. Therefore, in August 2010, it was announced that the three letters that had graced graphics cards for 25 years would be dropped from all future products – ATI would cease to exist as a brand.

However, this was only for the brand. The actual company stayed active, albeit as part of AMD. At its peak, ATI had over 3500 employees and several global offices. Today, it trades under the name of ATI Technologies ULC, with the original headquarters still in use, and its workforce fully integrated into relevant sectors in AMD’s organizational structure.

This group continues to contribute to the research and development of graphics technology, as evidenced by patent listings and job vacancies. But why didn’t AMD just completely absorb ATI, like Nvidia did with 3dfx, for instance? The terms of the 2006 merger meant that ATI would become a subsidiary company of AMD.

While it’s possible that ATI could eventually have been shut down and fully absorbed, the level of investment and the near-endless court cases over patent violations that ATI has continued to deal with mean it’s not going anywhere just yet, if ever.

However, with a significant amount of GPU development work now carried out by AMD itself in various locations worldwide, it’s no longer accurate to label a graphics chip (whether in a Radeon card or the latest console processor) as an ATI product.

The ATI brand has now been absent for over a decade, but its legacy and the fond memories of its old products endure. The ATI Technologies branch continues to contribute to the graphics field nearly 40 years after its inception and you could say it’s still kicking out the goods. Gone but not forgotten? No, not gone and not forgotten.

TechSpot’s Gone But Not Forgotten Series

The story of key hardware and electronics companies that at one point were leaders and pioneers in the tech industry, but are now defunct. We cover the most prominent part of their history, innovations, successes and controversies.