The Ryzen 7 5800X3D is the first processor to feature AMD’s 3D V-cache technology, which was announced last October. AMD promised that 3D V-cache would bring a ~15% boost to gaming performance when paired with the Zen 3 architecture, thanks to a much larger L3 cache.

Since then, we’ve tested and confirmed that L3 cache heavily influences gaming performance (see our feature “How CPU Cores & Cache Impact Gaming Performance” for details). That article was inspired by endless claims that more cores were the way to go for gamers, despite the fact that games are really bad at using core-heavy processors… and we don’t expect that to change any time soon.

As a quick example, we found many instances where increasing the L3 cache of a 10th-gen Core series processor from 12 to 20 MB — a 67% increase — could boost gaming performance by around 20%, whereas increasing the core count from 6 to 10 cores — also a 67% increase — would only improve performance by 6% or less.
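The scaling comparison above can be checked with a few lines of arithmetic. This is only an illustrative sketch: the helper names are ours, and the 20% and 6% gains are the approximate figures quoted above rather than fresh measurements.

```python
def percent_increase(old: float, new: float) -> float:
    """Return the percentage increase going from old to new."""
    return (new - old) / old * 100.0


def gain_per_percent(perf_gain_pct: float, resource_gain_pct: float) -> float:
    """Performance gained per 1% of extra resource (hypothetical helper)."""
    return perf_gain_pct / resource_gain_pct


# Resource changes quoted in the text.
cache_increase = percent_increase(12, 20)  # L3 cache in MB
core_increase = percent_increase(6, 10)    # core count

# Approximate gaming gains quoted in the text ("around 20%", "6% or less").
cache_scaling = gain_per_percent(20.0, cache_increase)
core_scaling = gain_per_percent(6.0, core_increase)

print(f"L3 cache: +{cache_increase:.0f}% capacity, {cache_scaling:.2f}% perf per 1% added")
print(f"Cores:    +{core_increase:.0f}% count, {core_scaling:.2f}% perf per 1% added")
```

For the same relative increase in resources, the extra cache returned more than three times the performance of the extra cores in those examples.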

In other words, making the cores faster, rather than adding more of them, is the best way to boost performance in today’s games. Acknowledging this, AMD has opted to supercharge their 8-core, 16-thread Ryzen 7 5800X with 3D V-cache, creating the 5800X3D.

| | Ryzen 9 5950X | Ryzen 9 5900X | Ryzen 7 5800X3D | Ryzen 7 5800X | Ryzen 5 5600X |
|---|---|---|---|---|---|
| Release Date | November 5, 2020 | November 5, 2020 | April 20, 2022 | November 5, 2020 | November 5, 2020 |
| Price (MSRP) | $800 | $550 | $450 | $550 | |
| Cores / Threads | 16 / 32 | 12 / 24 | 8 / 16 | 8 / 16 | 6 / 12 |
| iGPU | N/A | N/A | N/A | N/A | N/A |
| Base Frequency | 3.4 GHz | 3.7 GHz | 3.4 GHz | 3.8 GHz | 3.7 GHz |
| Turbo Frequency | 4.9 GHz | 4.8 GHz | 4.5 GHz | 4.7 GHz | 4.6 GHz |
| L3 Cache | 64 MB | 64 MB | 96 MB | 32 MB | 32 MB |
| TDP | 105 watts | 105 watts | 105 watts | 105 watts | 65 watts |

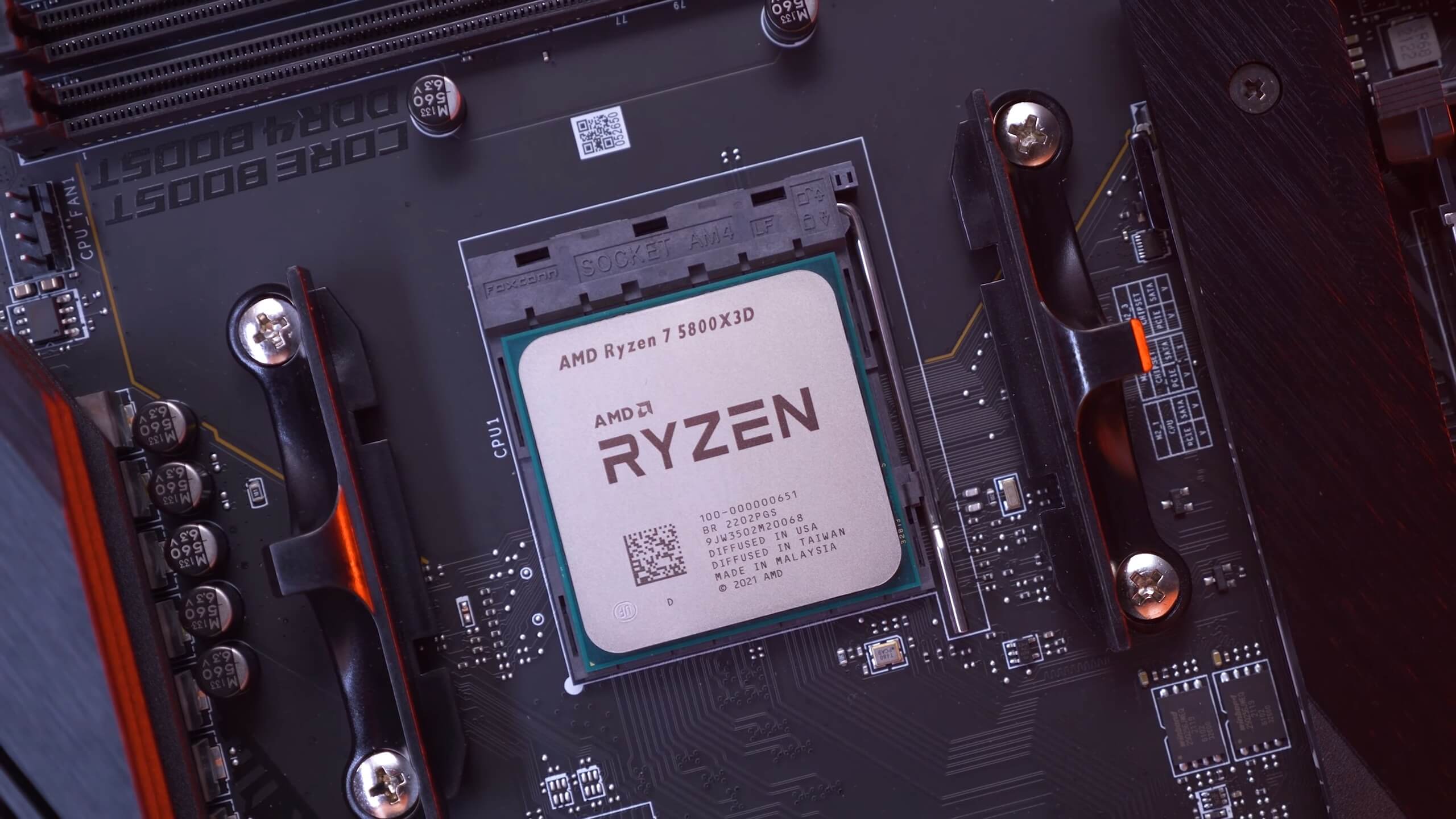

This new CPU sees an incredible 200% increase in L3 cache capacity, going from the standard 32 MB to an impressive 96 MB. This is achieved by stacking a 41 mm² die containing nothing but L3 cache over the Zen 3 core complex die, extending the L3 cache by 64 MB.

AMD claims this boosts performance by 15% on average over the Ryzen 9 5900X, which is no small feat. They also claim the 5800X3D is overall faster than Intel’s i9-12900K while costing significantly less, so that’ll be interesting to look at.

In terms of pricing, the 5800X3D has been set at $450, the original price of the standard 5800X, which has since dropped to around $340. Intel’s Core i9-12900K is around $600, so should the 5800X3D hit its intended MSRP, it’s going to be considerably cheaper than the competition.

Another advantage of the 5800X3D is that it can be dropped into any AM4 motherboard that already supports Ryzen 5000 series processors, meaning inexpensive B450 boards do support this new CPU, though ideally you’d probably want a decent X570 board to justify spending so much on the processor.

It’s worth noting that this is a gaming focused CPU. We’re sure there will be some productivity workloads that can benefit from the extra L3 cache, but AMD has refrained from giving any examples if they exist. Instead, AMD is 100% pushing this as a gaming CPU, and once you see the data it will make sense why there isn’t a “5950X3D.”

Not to worry, we have included some productivity benchmarks in this review as we’re sure many of you want to see how it compares with the 5800X.

Speaking of which, when compared to the 5800X, the new 3D version is clocked slightly lower with a boost frequency of 4.5 GHz, down 200 MHz from the original. Also, while you can overclock the 5800X, you can’t do the same with the 5800X3D as AMD has locked core overclocking for this part, meaning you can only tune the memory.

For testing the Ryzen 7 5800X3D and all Zen 3 CPUs in this review, we’re using the MSI X570S Carbon Max WiFi with the latest BIOS using AGESA version 1.2.0.6b. Then for the Intel 12th-gen processors, MSI’s Z690 Unify was used with the latest BIOS revision. All of this testing is fresh, and the gaming benchmarks have been updated with the GeForce RTX 3090 Ti using the latest graphics drivers.

The default memory configuration was DDR4-3200 CL14 dual-rank, but we’ve also included some configurations using G.Skill’s DDR4-4000 CL16 memory set to DDR4-3800 CL16. The highest FCLK our 5800X3D sample can handle without memory errors is 1900 MHz, which is the norm for all quality Zen 3 chips. For Intel processors we’ve also added a DDR5-6400 CL32 memory configuration. Both platforms were tested with Resizable BAR enabled and Windows 11. Let’s now get into it.

Application Benchmarks

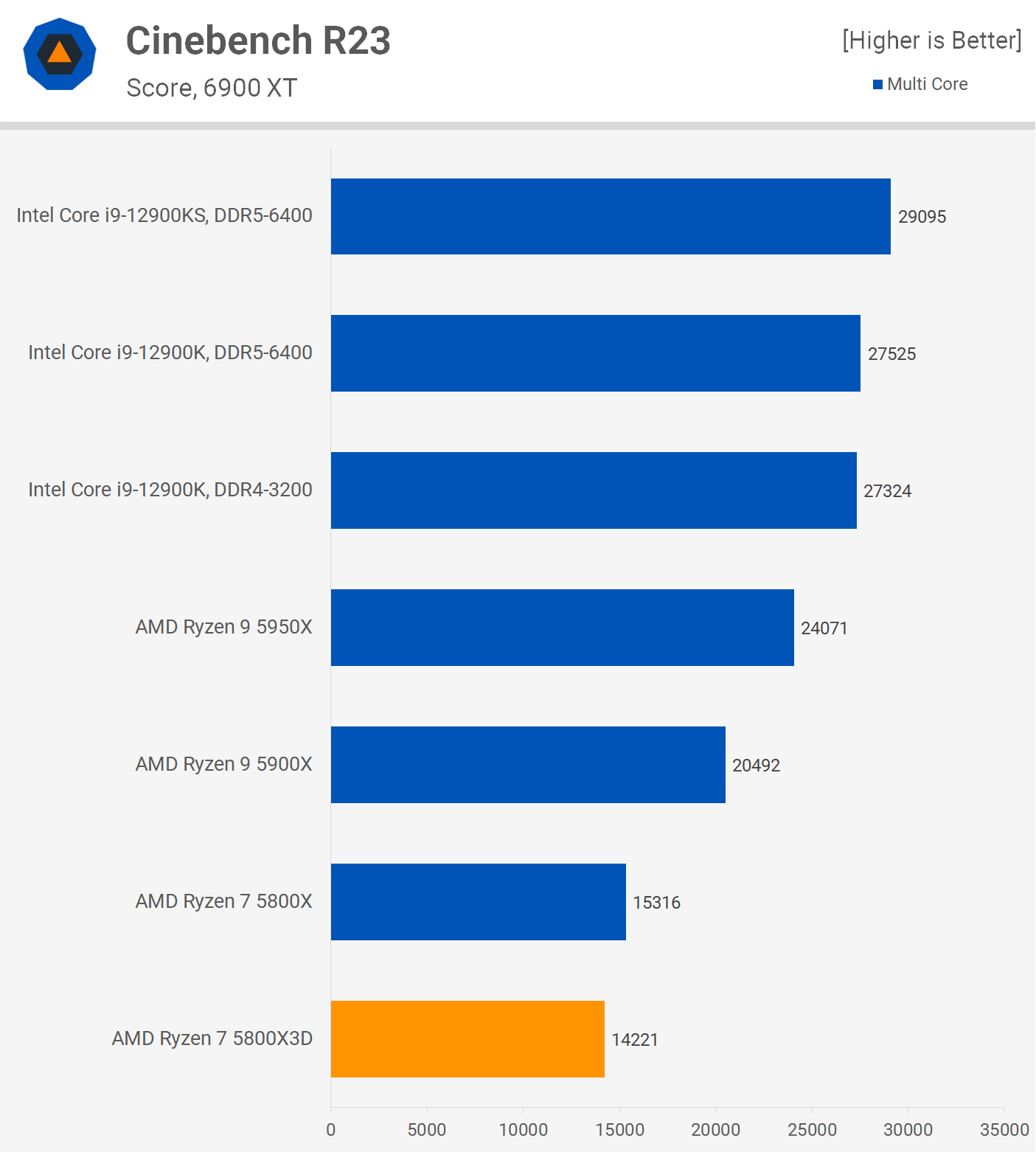

Starting with Cinebench R23, we see that the 5800X3D is actually 7% slower than the 5800X. This of course is due to the fact that it’s clocked lower, operating at an all-core frequency of 4.3 GHz in this test, whereas the 5800X ran at 4.6 GHz. This is to be expected and AMD provided their own Cinebench data which aligned with our findings.
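As a rough sanity check, the clock deficit alone accounts for almost all of that gap. The snippet below assumes multi-threaded Cinebench throughput scales roughly linearly with all-core frequency, which is a simplification, and the variable names are ours.

```python
# All-core frequencies observed in Cinebench R23 (GHz), from the text.
freq_5800x3d = 4.3
freq_5800x = 4.6

# Assumption: the multi-core score scales linearly with all-core clock speed.
clock_deficit_pct = (1 - freq_5800x3d / freq_5800x) * 100

print(f"Clock deficit: {clock_deficit_pct:.1f}%")
```

That works out to roughly 6.5%, which lines up with the 7% lower score.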

On a side note, some AMD users claim our Cinebench R23 data is off as their Zen 3 CPU scores higher. What you’re seeing here is the stock behavior of each CPU. If your model scores higher, it’s either because the motherboard is auto-overclocking or you have PBO enabled, but by default these are the scores each model should produce.

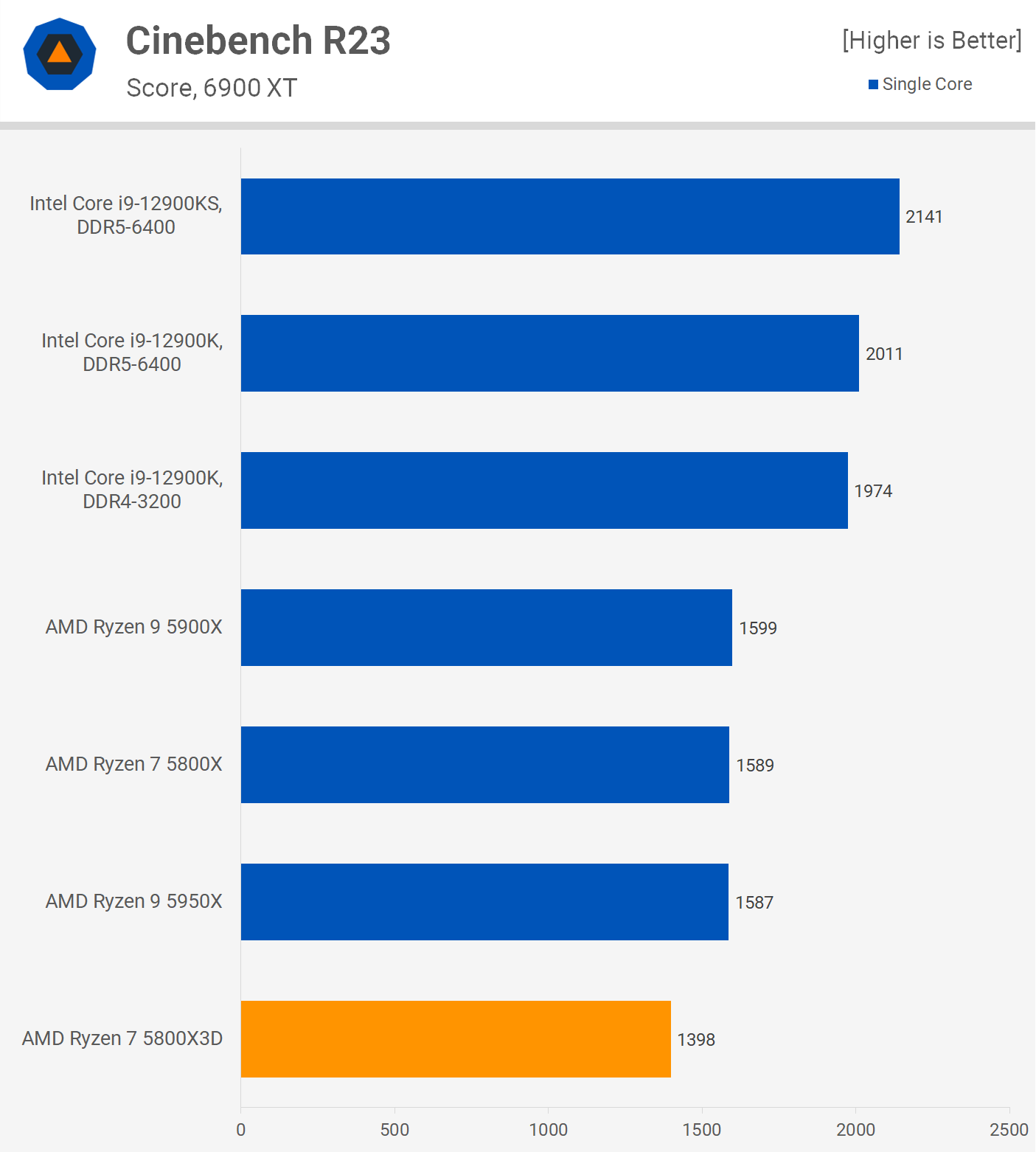

Interestingly, the single core performance has dropped by 12% according to the Cinebench R23 results when compared to what we see with the 5800X. That’s a large reduction which typically wouldn’t bode well for gaming performance, but we’ll look at that shortly.

The 7-Zip File Manager decompression performance is about the same as what we saw from the 5800X, with no noteworthy changes.

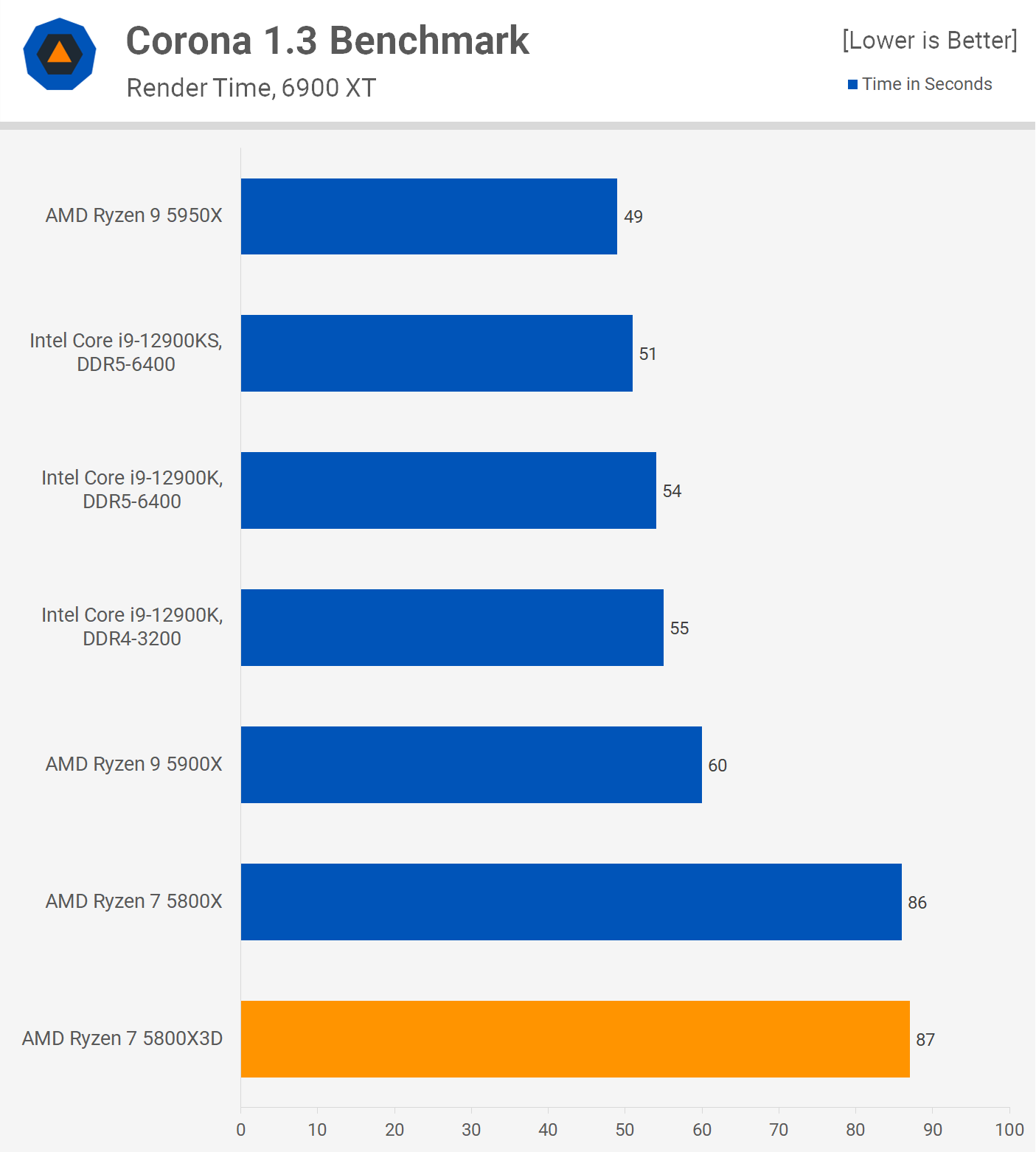

The same is true of the Corona benchmark. We’re looking at a 1 second difference between the 5800X and 5800X3D.

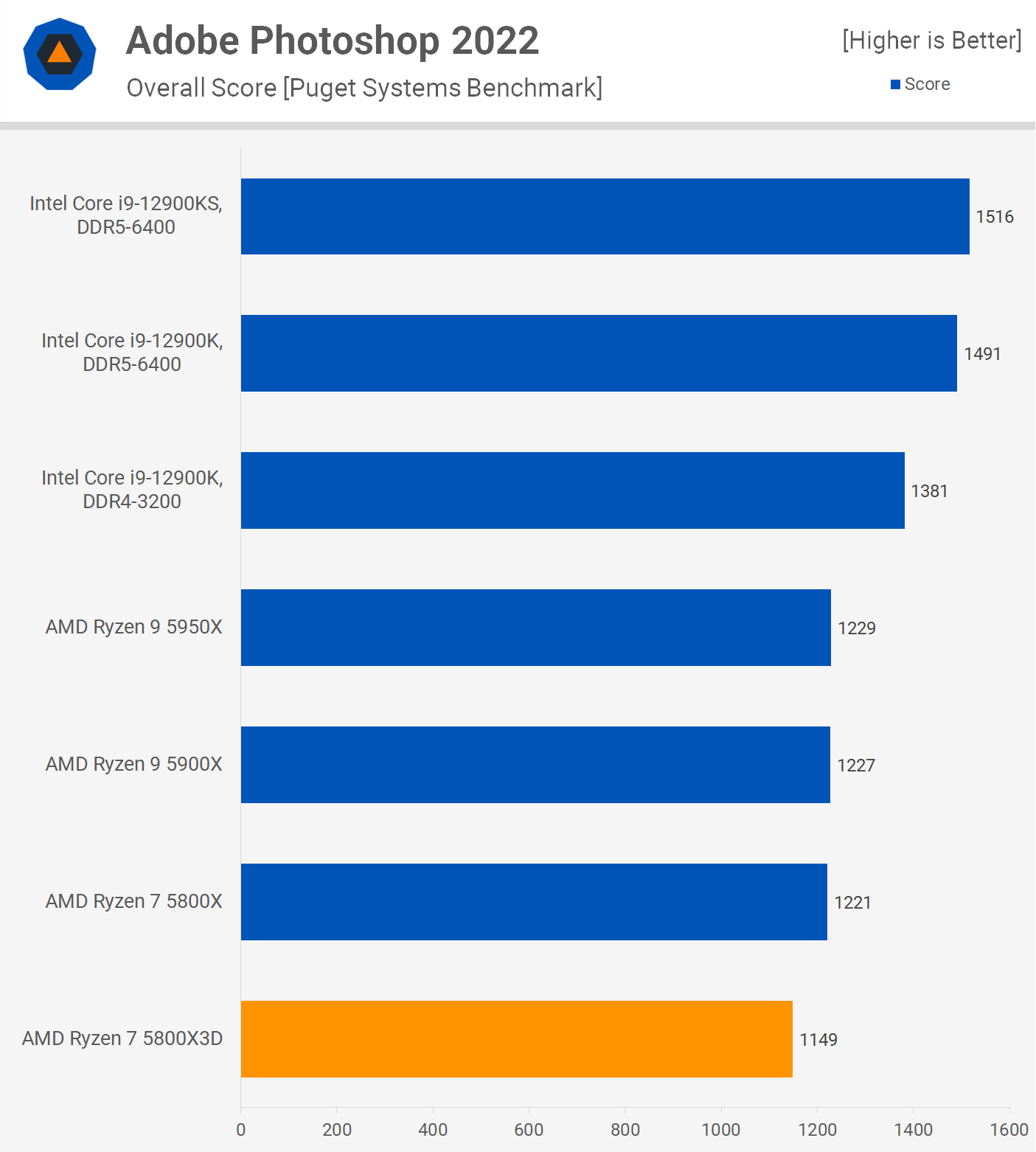

The Adobe Photoshop 2022 benchmark relies heavily on single core performance and here we see a 6% reduction in performance for the 5800X3D when compared to the 5800X. That fat L3 cache clearly isn’t helping for these productivity benchmarks.

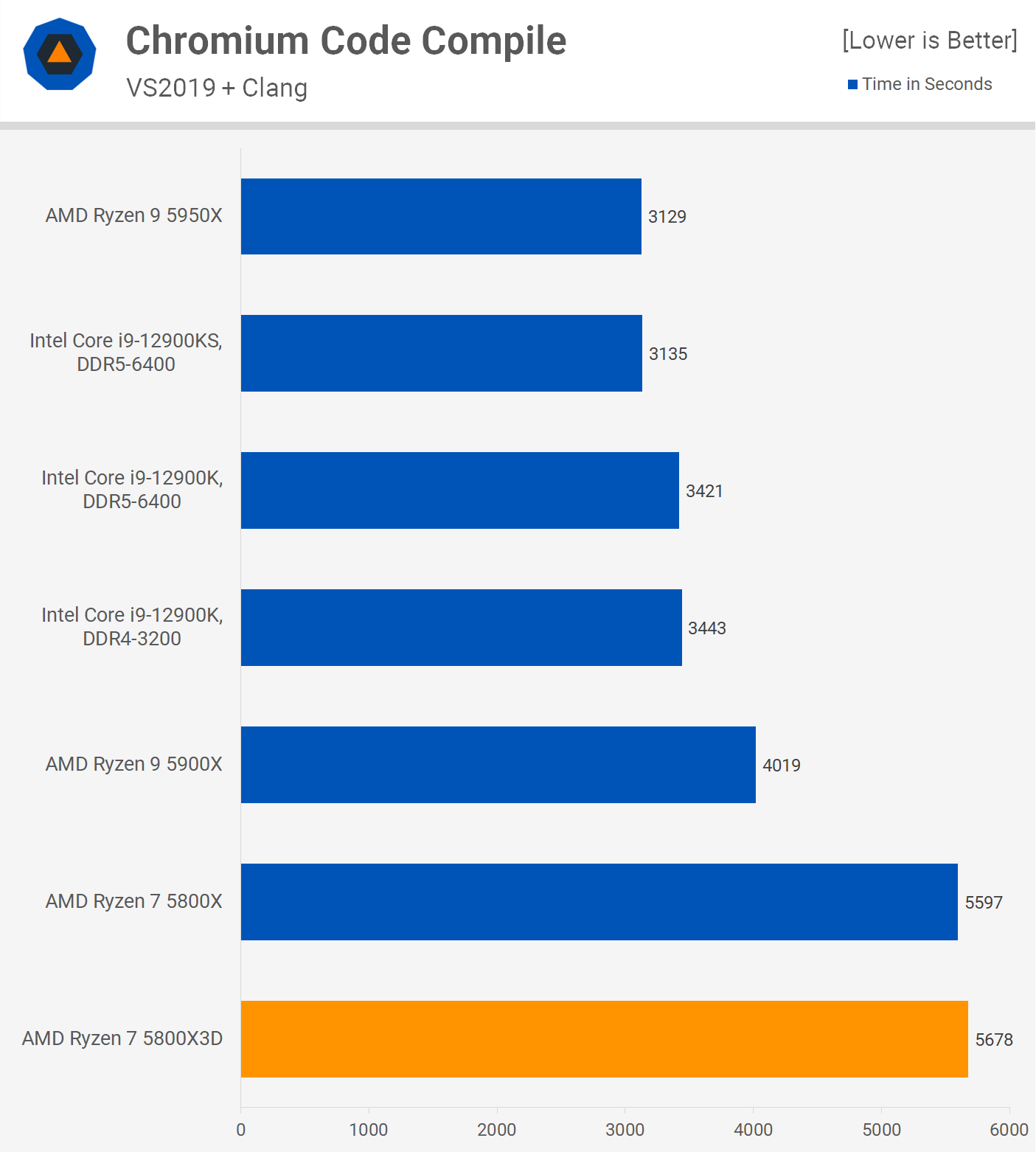

The 5800X3D’s code compilation performance was similar to the 5800X. We’re looking at a minuscule 1% difference.

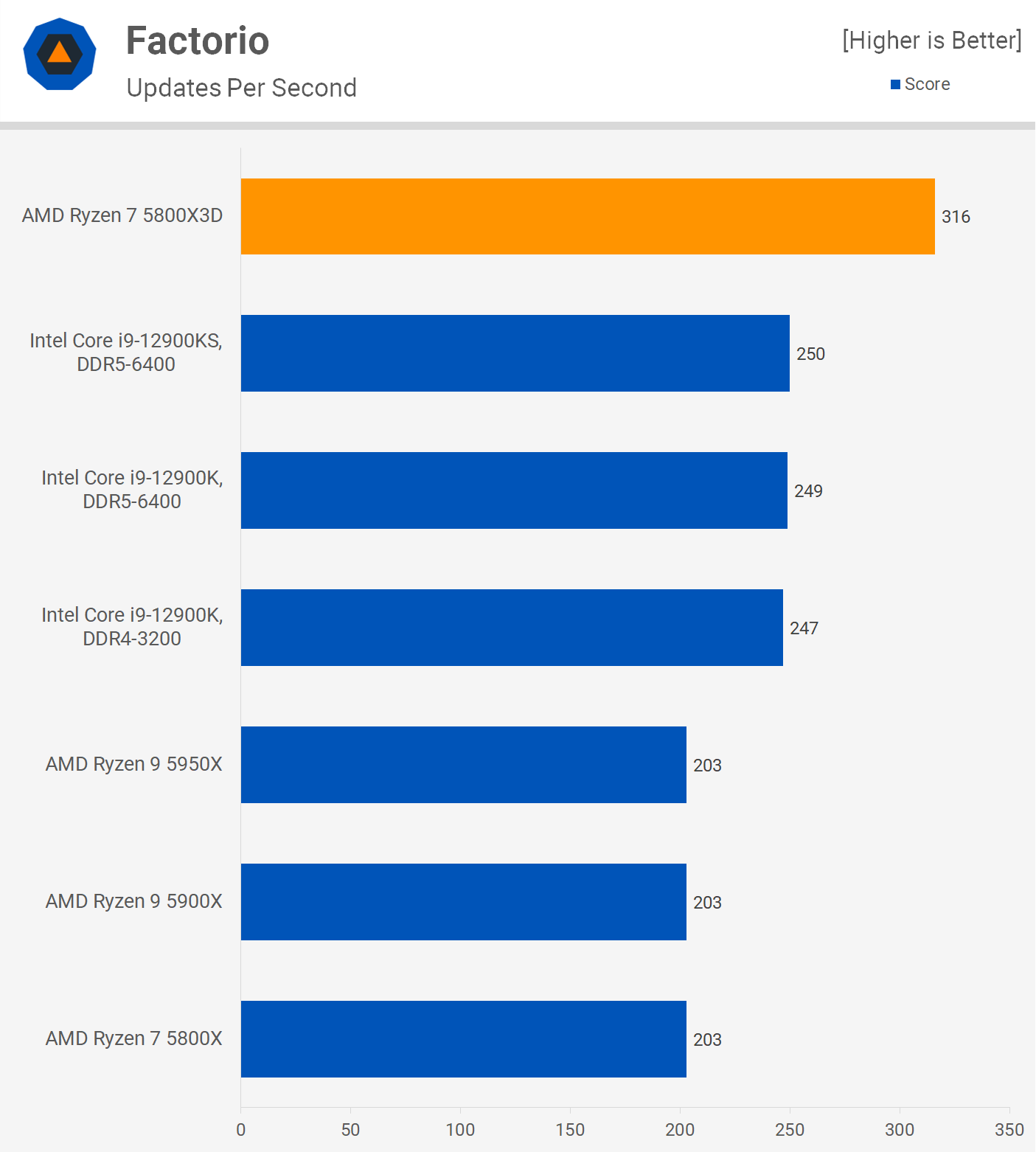

We like to include Factorio in the application benchmarks as we’re not measuring fps, but updates per second. This automated benchmark calculates the time it takes to run 1,000 updates. This is a single-thread test which apparently relies heavily on cache performance.

Case in point, unlike previous application benchmarks, we’re looking at a massive improvement in Factorio with the 5800X3D. Amazingly, the new CPU was 56% faster than the 5800X and that meant it was 26% faster than Intel’s 12900KS using DDR5-6400 memory.

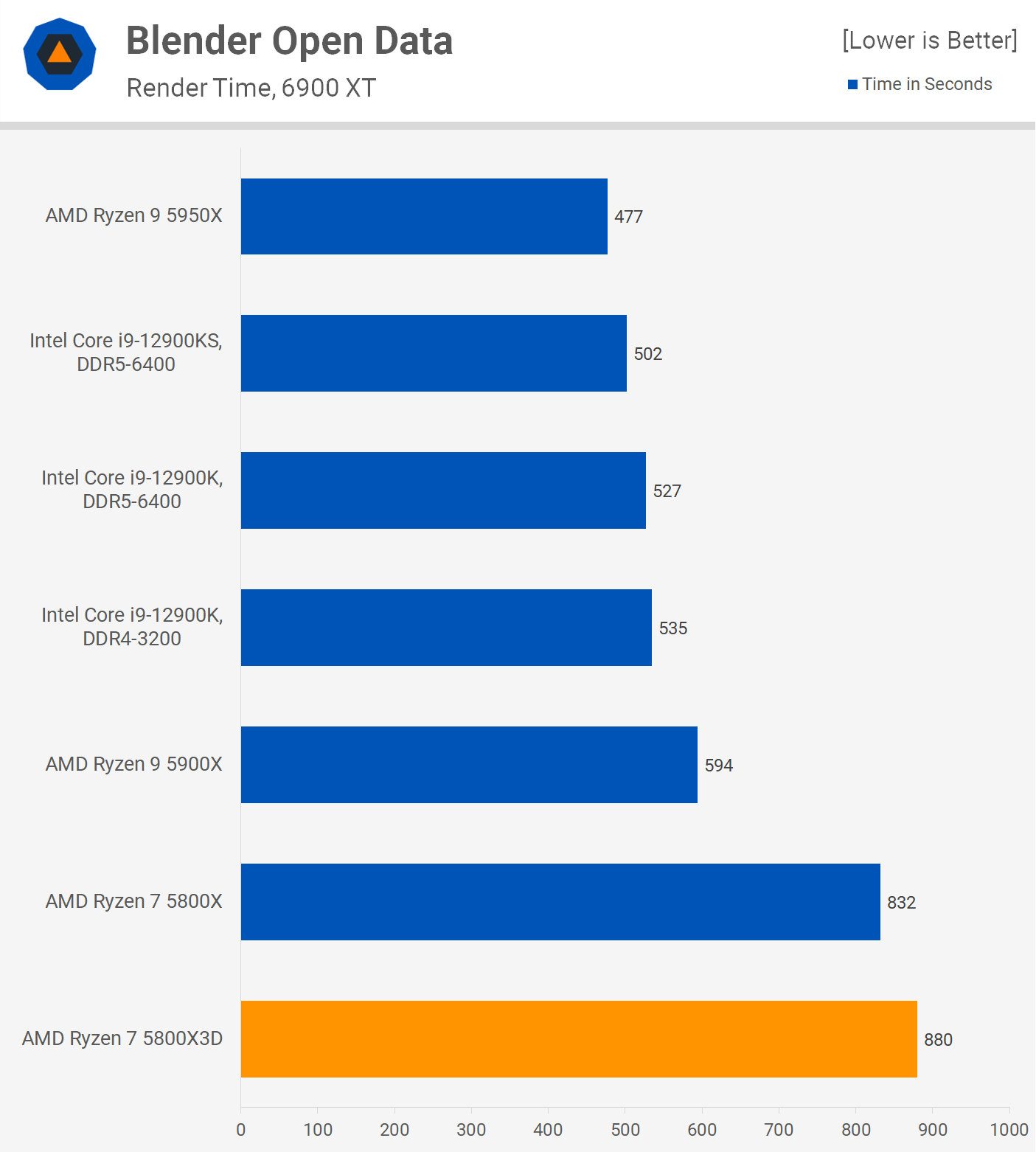

Briefly back to application benchmarks, we have Blender and like all the other applications tested, the 5800X3D is a little slower than the 5800X, lagging behind by a 5% margin.

Gaming Benchmarks

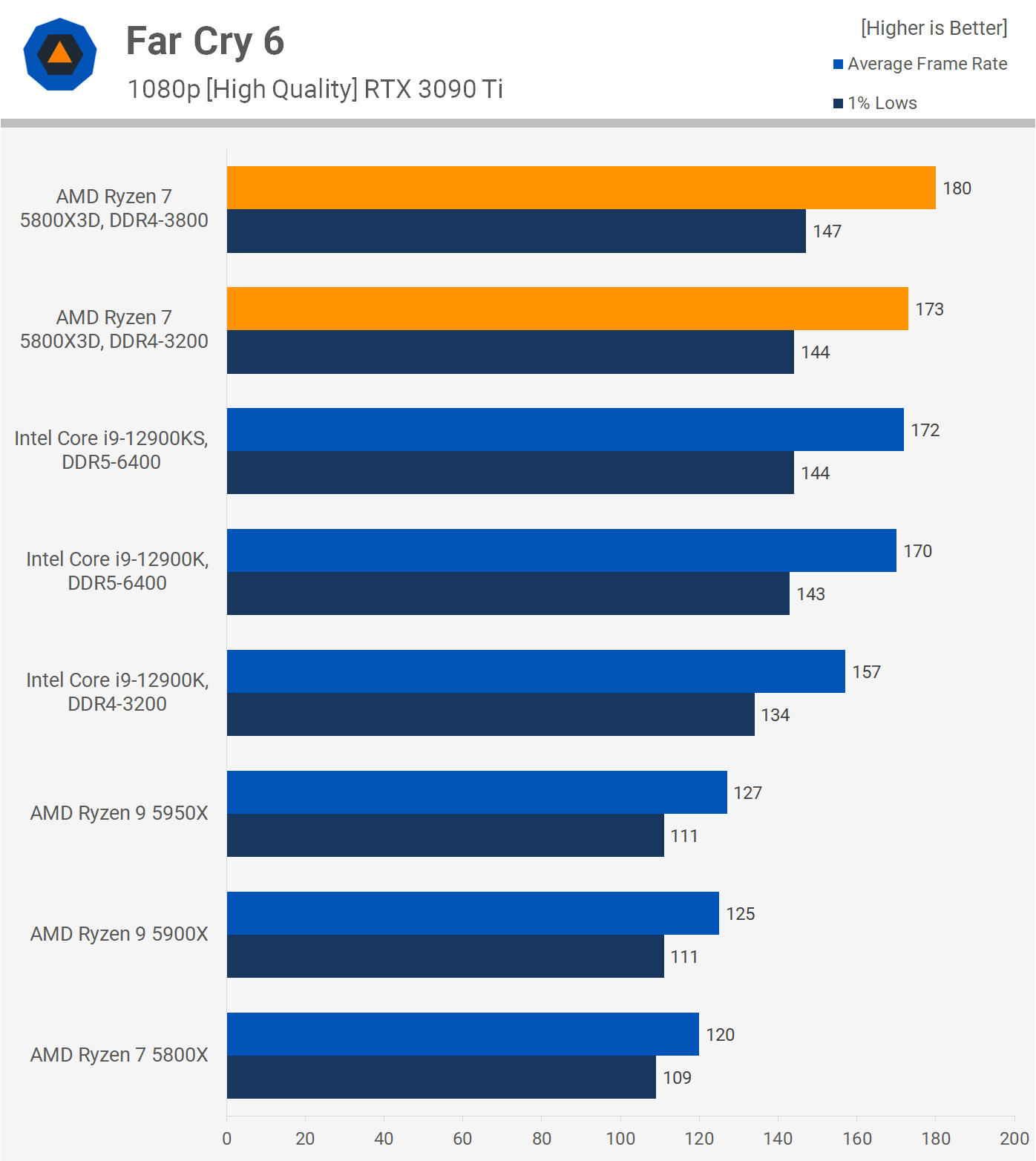

Time for the gaming tests, and what do we have here… Far Cry 6 has been a poor title for Zen 3’s battle against Alder Lake, but the 5800X3D looks to change all that.

Using the same DDR4-3200 memory as the 5800X, we’re looking at a 44% increase in gaming performance, from 120 fps to 173 fps. That was also a 10% increase over the 12900K when using the same DDR4 memory.

But it gets better for AMD. Even when arming the 12900K with insanely expensive DDR5-6400 CL32 memory, the 5800X3D was faster using DDR4-3200, and faster again with DDR4-3800 memory. Granted, the margin wasn’t huge, but with the fastest stock memory you can get for each part, the 5800X3D was still 6% faster.

Moving on to Horizon Zero Dawn, we’re mostly GPU bound despite testing at 1080p with an RTX 3090 Ti and the second highest quality settings. Truth is, a lot of games are more GPU bound than CPU bound, which is why we include this data.

We’re looking at a 13% increase for the 5800X3D over the 5800X and that places it slightly ahead of the 12900K.

Tom Clancy’s Rainbow Six Extraction is also maxed out with the RTX 3090 Ti at 1080p using the second highest quality preset with these high-end CPUs. There is a little wiggle room as the 5800X3D proves, along with the 12900KS, but we’re talking about a negligible 6% improvement from 311 fps to 329 fps.

The Watch Dogs: Legion performance improvement is mighty impressive. Here the 5800X3D was 33% faster than the 5800X when using the same DDR4-3200 memory.

That also made it 10% faster than the 12900K, again when using the same memory. For maximum performance we paired the 5800X3D with DDR4-3800 memory and this pushed the average frame rate up to 161 fps, just a few frames shy of what the 12900K achieved with DDR5-6400.

The Riftbreaker is a difficult title for AMD, but the 5800X3D does help, boosting performance by 32% over the original 5800X. This is a great result because using the same DDR4 memory the 5800X3D and 12900K are then comparable in terms of performance.

Faster DDR4-3800 memory didn’t help further though and the 12900KS with DDR5-6400 runs away with it, delivering 12% more performance or a whopping 27% more when looking at the 1% lows.

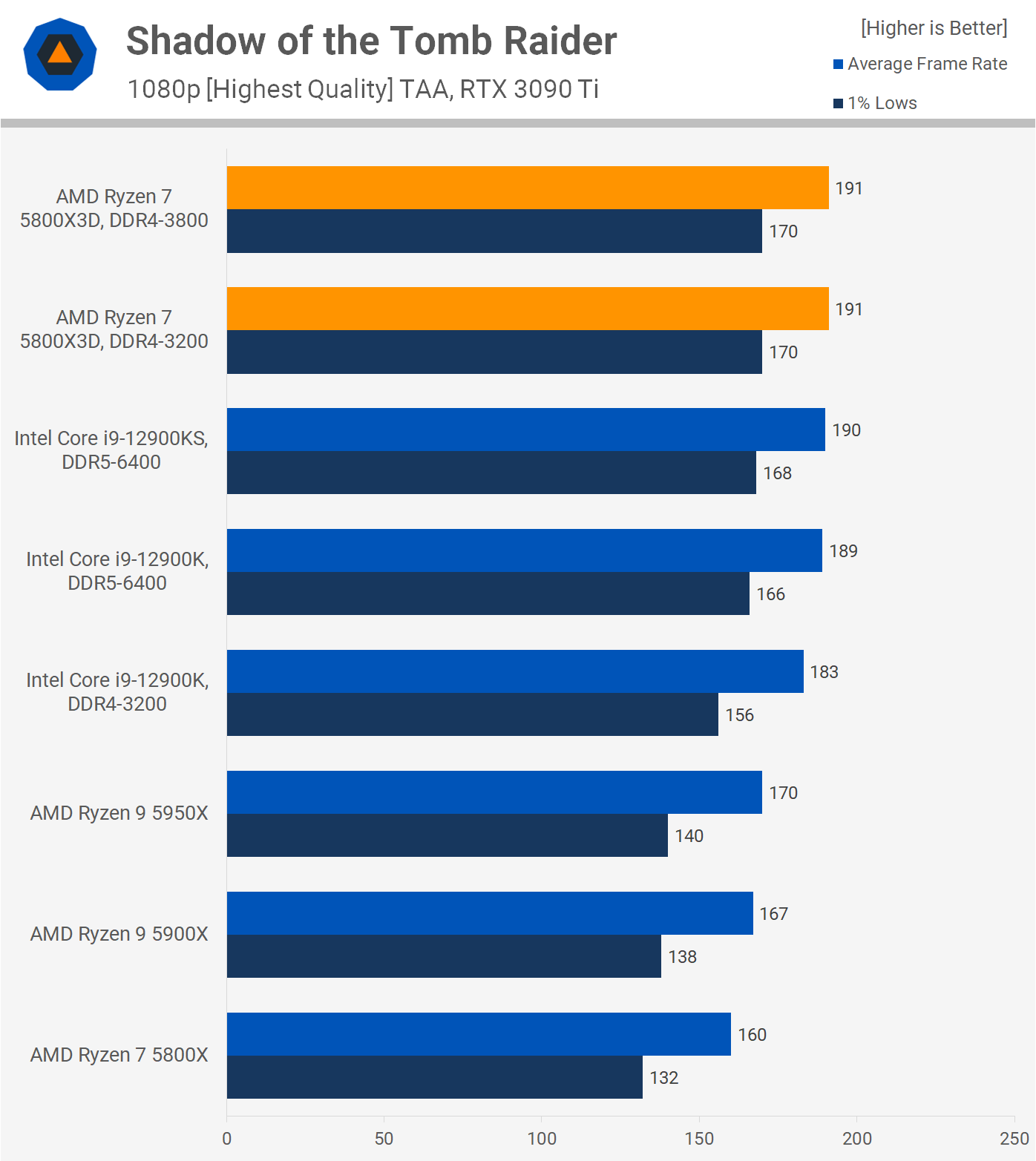

As was the case with Rainbow Six and Horizon Zero Dawn, we appear to be maxing out what the RTX 3090 Ti can do at 1080p in Shadow of the Tomb Raider, with GPU bound limits of around 190 fps.

This still saw a 19% performance increase for the 5800X3D over the 5800X, and when using the same DDR4-3200 memory the 5800X3D was 4% faster than the 12900K.

Hitman 3 saw a 24% performance improvement from the 5800X to the 5800X3D when comparing the average frame rate and a 40% increase for the 1% lows.

This allowed the 5800X3D to match the 12900K when using the same DDR4-3200 memory, an impressive result. The DDR4-3800 configuration only improved performance by 5%, whereas the 12900K became 22% faster with DDR5-6400 memory.

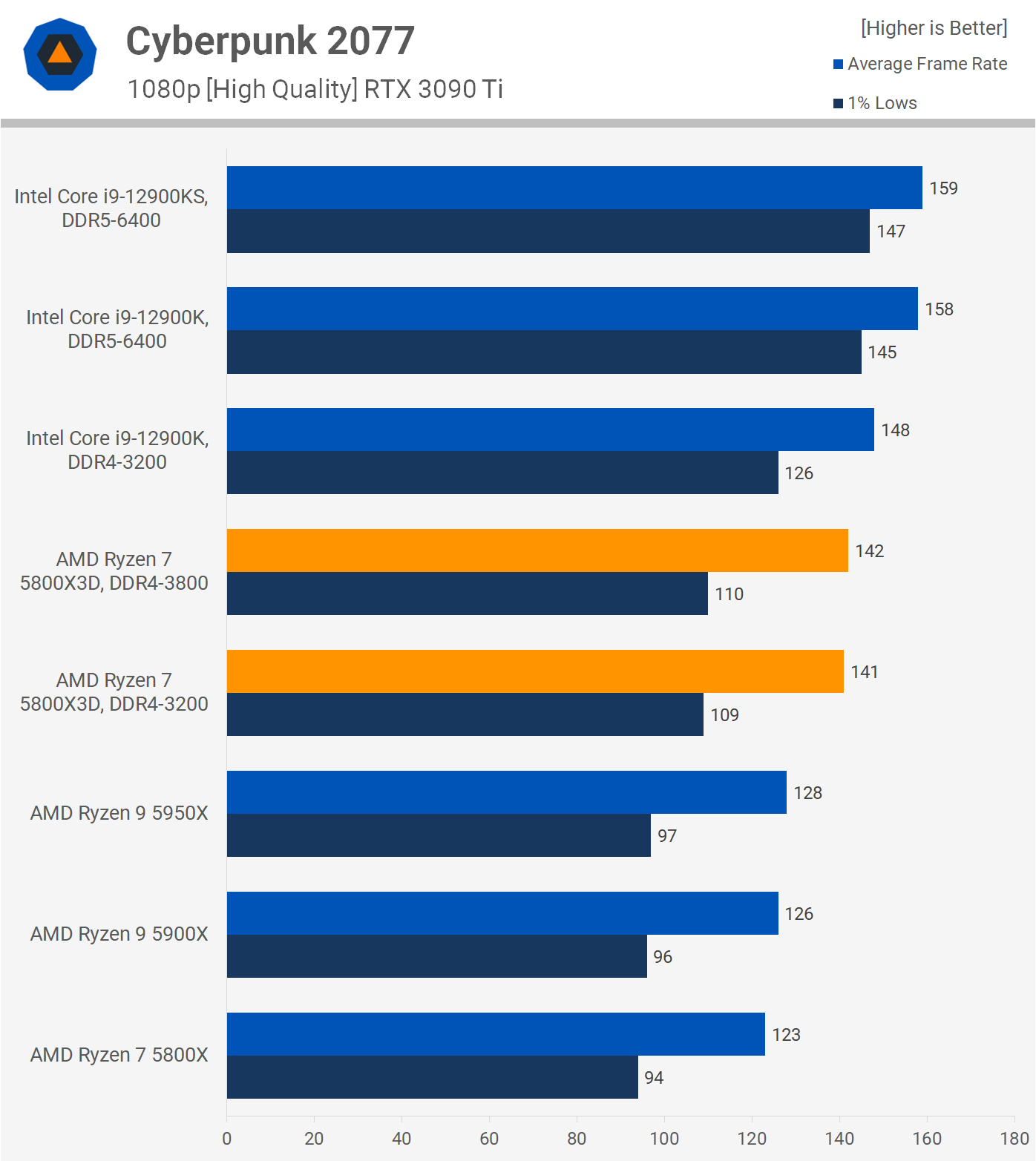

Cyberpunk 2077 isn’t kind to AMD, although over 100 fps is plenty for playing this game, and given it’s not a competitive title you don’t need hundreds of frames per second. The 5800X3D gains are pretty tame compared to other games, just a 15% boost, and DDR4-3800 didn’t improve performance any further.

Using the same DDR4-3200 memory, the 12900K was up to 16% faster and then up to 32% faster when using ultra expensive DDR5 memory.

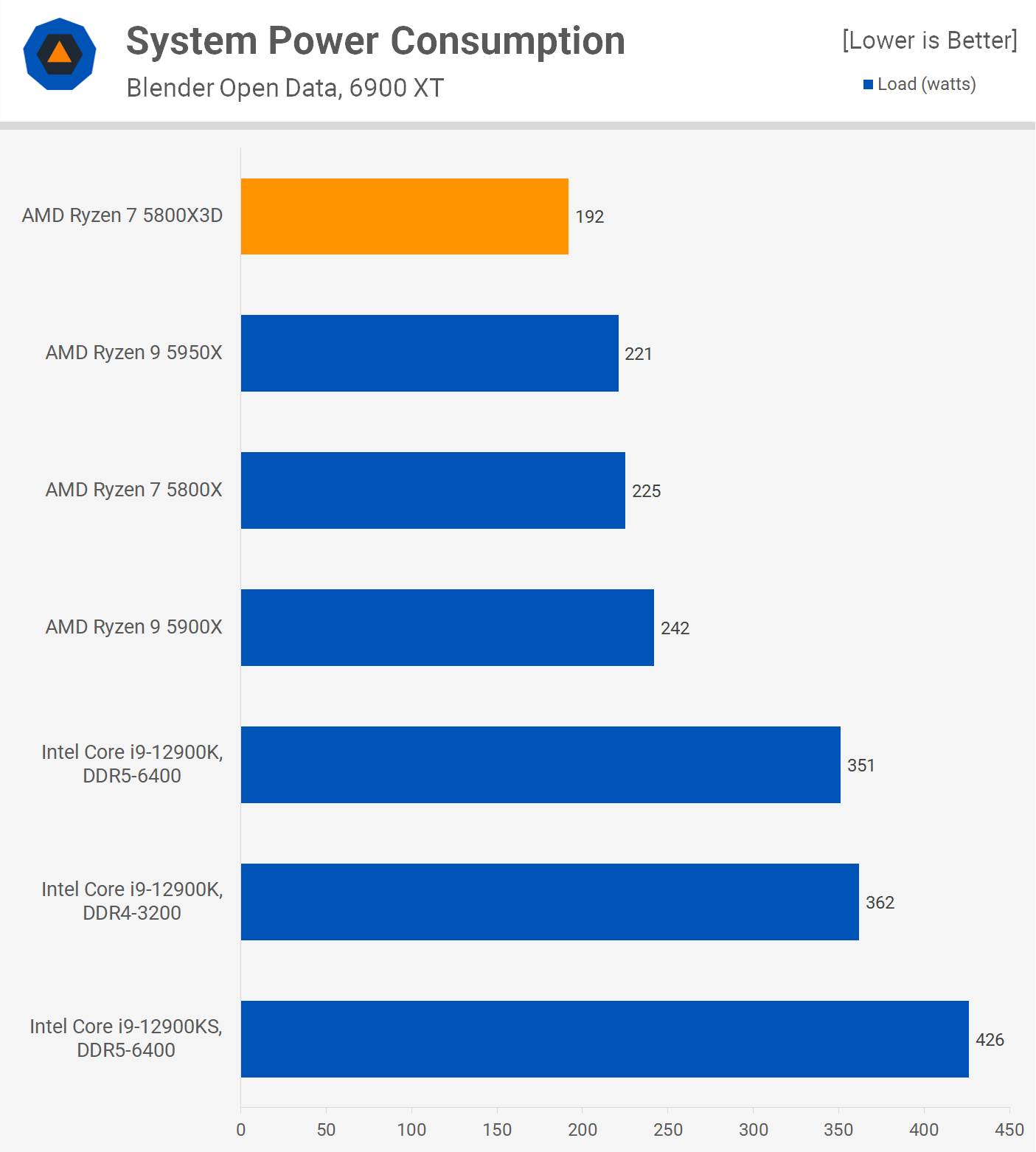

Power Consumption and Cooling

Interestingly, even though Blender rendering did not take any advantage of the massive L3 cache, the 5800X3D used less power than the 5800X, reducing total system consumption by 15%. The lower clocks and what is probably higher quality silicon are likely the major contributing factors here.

For cooling we used the Corsair iCUE H150i Elite Capellix 360mm AIO liquid cooler and this saw the 5800X3D hit a peak die temperature of 83°C after 30 minutes of stress testing using Cinebench R23. This result was recorded in an enclosed case (Corsair Obsidian 500D) with an ambient room temperature of 21°C.

For comparison, under the same test conditions, the original 5800X peaked at 87°C, or 4°C higher, and this is because it clocks the cores higher.

For comparing power consumption using the RTX 3090 Ti, we’ve gone and tested the 5800X3D and 12900K in three games: Cyberpunk 2077, Far Cry 6 and Hitman 3, all using DDR4-3200 for both CPUs.

In Cyberpunk 2077, the 12900K was faster and in Far Cry 6 the 5800X3D was faster. When it comes to power usage, the 12900K pushed total system usage 12% higher in Cyberpunk 2077, which isn’t bad given it was up to 16% faster.

Then in Far Cry 6, the 12900K was 9% slower which isn’t great given total system power usage was 20% higher. The Hitman 3 data is interesting because both CPUs delivered the same level of performance, but the 12900K pushed total system power usage 9% higher. While not always the fastest gaming CPU, the 5800X3D is the more efficient part.
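To put those three results on a common footing, here is a minimal sketch that divides relative performance by relative total system power. The ratios come from the figures above with the 5800X3D system as the 1.00 baseline; the function and variable names are ours. Note that the Cyberpunk 2077 figure uses the "up to 16%" number, so it is a best case for the 12900K, and that this is whole-system power rather than CPU package power.

```python
# 12900K system relative to the 5800X3D system (5800X3D = 1.00),
# both on DDR4-3200. Ratios are taken from the figures quoted in the text.
results = {
    "Cyberpunk 2077": {"perf": 1.16, "power": 1.12},  # "up to" 16% faster
    "Far Cry 6": {"perf": 0.91, "power": 1.20},
    "Hitman 3": {"perf": 1.00, "power": 1.09},
}


def relative_efficiency(perf: float, power: float) -> float:
    """Performance per watt of the 12900K system relative to the 5800X3D system."""
    return perf / power


efficiency = {game: relative_efficiency(**r) for game, r in results.items()}

for game, value in efficiency.items():
    print(f"{game}: {value:.2f}x the 5800X3D system's performance per watt")
```

A value below 1.00 means the 12900K system delivered fewer frames per watt than the 5800X3D system in that game.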

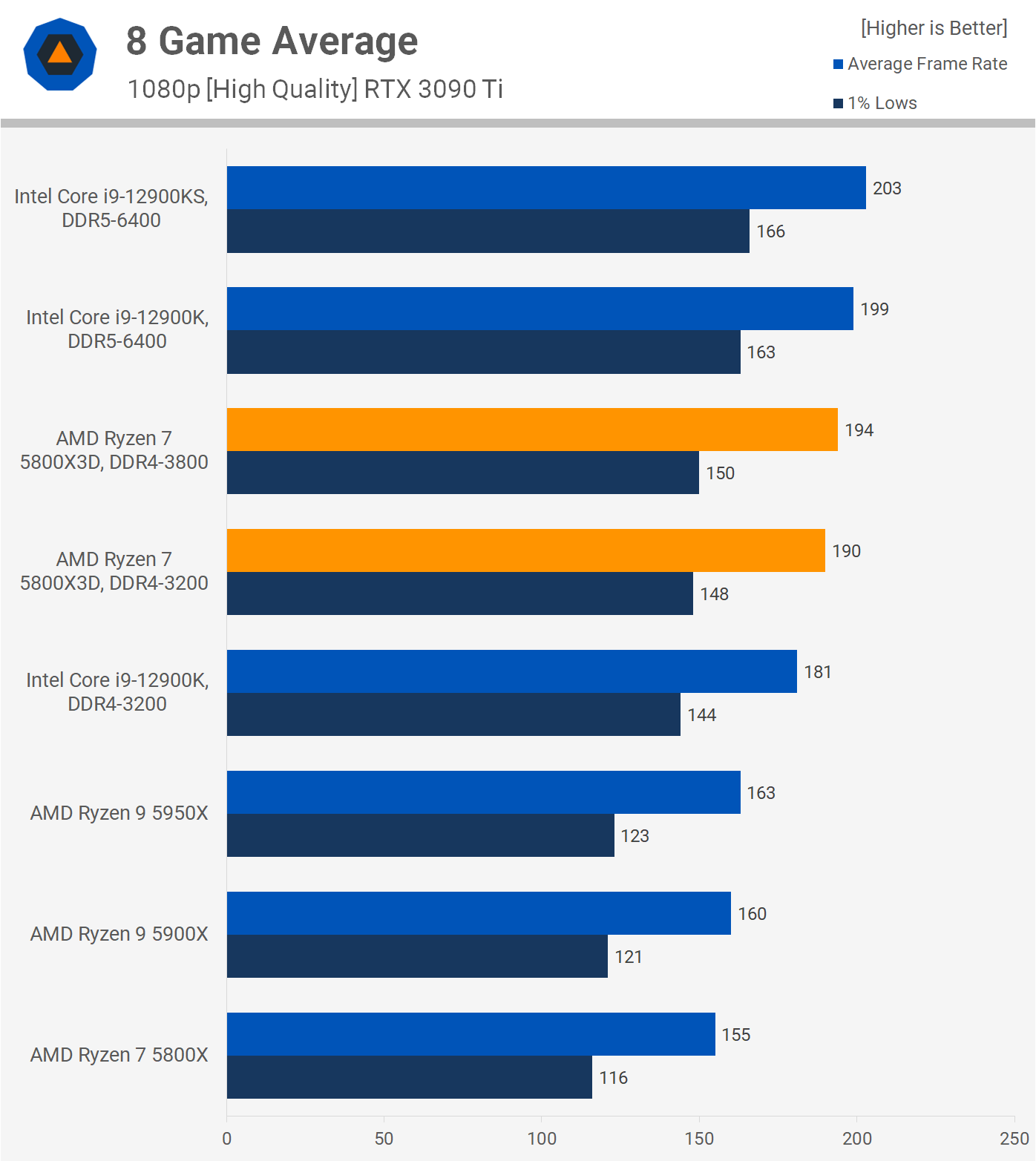

8 Game Average Performance

To get this review ready in time, we were only able to test 8 games, but we plan on testing a lot more shortly. From this small sample though, we found that the 5800X3D is slightly faster than the 12900K when using the same DDR4 memory.

It’s a small 5% margin, but that did make it 19% faster than the 5900X on average, so AMD’s 15% claim is looking good.

When using premium memory, the 12900K did have the edge, and was 8% faster when comparing 1% low data. That’s still not a large enough margin to justify the price delta, let alone the DDR5-6400 memory, so we’d say the 5800X3D has done mighty well overall.

What We Learned

We’ve got to say that after seeing just how fast the Core i9-12900K can be when paired with DDR5-6400 memory and the GeForce RTX 3090 Ti, we didn’t think the Ryzen 7 5800X3D was going to stack up all that well, but it’s really impressed us anyway.

We’ve tested a small sample of games so far, just eight, but it was good to see the 5800X3D edging out the 12900K when both were using DDR4 memory. High speed DDR5 does give the Core i9 processor an advantage, but it’s also very expensive, though pricing is tumbling quite quickly.

The DDR5-6400 kit we used, for example, cost $610 in February, then dropped to $530 in March, before sinking to $480 in April where it currently sits. Granted, that means the memory alone still costs more than the 5800X3D, but we’ve seen a roughly 21% slide in DDR5-6400 pricing in just two months.

Then again, we’re talking about two different animals. The Core i9-12900K / DDR5-6400 combo will set you back $1,080, plus another $250 to $300+ for a mid-range Z690 motherboard. Alternatively, the Ryzen 7 5800X3D should cost $450 and DDR4-3800 CL16 memory can be had for as little as $265, making for a $715 combo. A good quality X570S board will set you back $220 – $250, or you could just get a boring old X570 board like the excellent value Asus TUF Gaming X570-Plus WiFi for $160.

All in all, you can purchase the 5800X3D + high-speed DDR4 memory + a quality X570 motherboard for $875. Compare that to the premium Intel package which would set you back $1,330, or about a 50% premium for a few percent more performance on average.
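The platform totals can be reproduced with a short tally. This sketch uses the prices quoted in this review at the time of writing, takes the low end of the Z690 board range, and uses dictionary and variable names of our own choosing.

```python
# Prices in USD as quoted in the text at the time of writing.
amd_build = {
    "Ryzen 7 5800X3D": 450,
    "DDR4-3800 CL16 kit": 265,
    "X570 motherboard": 160,
}
intel_build = {
    "Core i9-12900K": 600,
    "DDR5-6400 CL32 kit": 480,
    "Z690 motherboard": 250,  # assumption: low end of the $250 to $300+ range
}

amd_total = sum(amd_build.values())
intel_total = sum(intel_build.values())
premium_pct = (intel_total / amd_total - 1) * 100

print(f"AMD platform:   ${amd_total}")
print(f"Intel platform: ${intel_total}")
print(f"Intel premium:  {premium_pct:.0f}%")
```

Swapping in a pricier Z690 board only widens the gap.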

We’re also ignoring the 12900KS in these calculations because at almost $800, that CPU is rather pointless. Very powerful, but ultimately pointless since the 12900K delivers the same level of performance for $170 less. Using the same DDR4-3800 memory, the 12900K platform cost drops to $1,125, but that’s still a ~30% premium for what would now be the inferior performing option.

So as we see it, you either go big with high-speed DDR5 when buying the 12900K or you might as well just opt for the more efficient and easier to cool 5800X3D. Of course, we’d love to compare these two across 30 or more games, and we’re working on that right now to see beyond a shadow of a doubt which is the ultimate CPU for gamers.

In the meantime, we’re extremely impressed with what we’ve seen here, and we simply hope AMD can meet demand. If the 5800X3D turns into another Ryzen 3 3300X situation, gamers won’t be happy.

Stay tuned for a more in-depth gaming only follow up from us shortly.