How do I start this review? I thought about opening with a fun little gag mocking the 6500 XT before completely tearing into it, but this thing is so bad it’s really spoiled the mood for me, so I’ll cut right to it. In my opinion, this is the worst GPU release I can remember, and I’ve been doing this job for over two decades.

Maybe, just maybe, there was something worse along the way. The DDR4 version of the GT 1030 could be considered worse, but that was down to a horrible anti-consumer tactic by Nvidia. As far as new product releases go, I can’t think of anything worse.

The Radeon RX 6500 XT is so bad I don’t even know where to begin, and I know I’m kind of doing this review in reverse, but damn it, I’m just floored by AMD here. The 6500 XT is a combination of bad decisions that all point to AMD taking advantage of the current market and abusing unsuspecting gamers in the process.

| | Radeon RX 580 | Radeon RX 5500 XT | Radeon RX 6500 XT | Radeon RX 6600 | Radeon RX 6600 XT |
|---|---|---|---|---|---|
| Price (MSRP) | $200 / $230 | $170 / $200 | $200 | $330 | $380 |
| Release Date | April 18, 2017 | Dec 12, 2019 | Jan 19, 2022 | Oct 13, 2021 | Aug 11, 2021 |
| Core Configuration (SPs / TMUs / ROPs) | 2304 / 144 / 32 | 1408 / 88 / 32 | 1024 / 64 / 32 | 1792 / 112 / 64 | 2048 / 128 / 64 |
| Die Size | 232 mm² | 158 mm² | 107 mm² | 237 mm² | 237 mm² |
| Core / Boost Clock | 1257 / 1340 MHz | 1717 / 1845 MHz | 2610 / 2815 MHz | 1626 / 2491 MHz | 1968 / 2589 MHz |
| Memory Type | GDDR5 | GDDR6 | GDDR6 | GDDR6 | GDDR6 |
| Memory Data Rate | 8 Gbps | 14 Gbps | 18 Gbps | 14 Gbps | 16 Gbps |
| Memory Bus Width | 256-bit | 128-bit | 64-bit | 128-bit | 128-bit |
| Memory Bandwidth | 256 GB/s | 224 GB/s | 144 GB/s | 224 GB/s | 256 GB/s |
| VRAM Capacity | 4GB / 8GB | 4GB / 8GB | 4GB | 8GB | 8GB |
| TBP | 185 watts | 130 watts | 107 watts | 132 watts | 160 watts |
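The memory bandwidth row follows directly from the two rows above it: divide the bus width by 8 to get bytes per transfer, then multiply by the per-pin data rate. A minimal Python sketch using only the table’s own figures (the function name is just illustrative) shows why the 6500 XT’s fast 18 Gbps memory can’t make up for its 64-bit bus. These are theoretical peak numbers.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak VRAM bandwidth in GB/s: bytes per transfer times data rate."""
    return bus_width_bits / 8 * data_rate_gbps


# (bus width in bits, per-pin data rate in Gbps), taken from the spec table
cards = {
    "RX 580": (256, 8),
    "RX 5500 XT": (128, 14),
    "RX 6500 XT": (64, 18),
    "RX 6600": (128, 14),
    "RX 6600 XT": (128, 16),
}

for name, (bus_bits, rate_gbps) in cards.items():
    print(f"{name}: {memory_bandwidth_gbs(bus_bits, rate_gbps):.0f} GB/s")
```

Running it reproduces the table’s 256, 224, 144, 224 and 256 GB/s: the 6500 XT’s memory runs faster than anything else here, but with half the bus width of the 5500 XT it still ends up with the least bandwidth.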

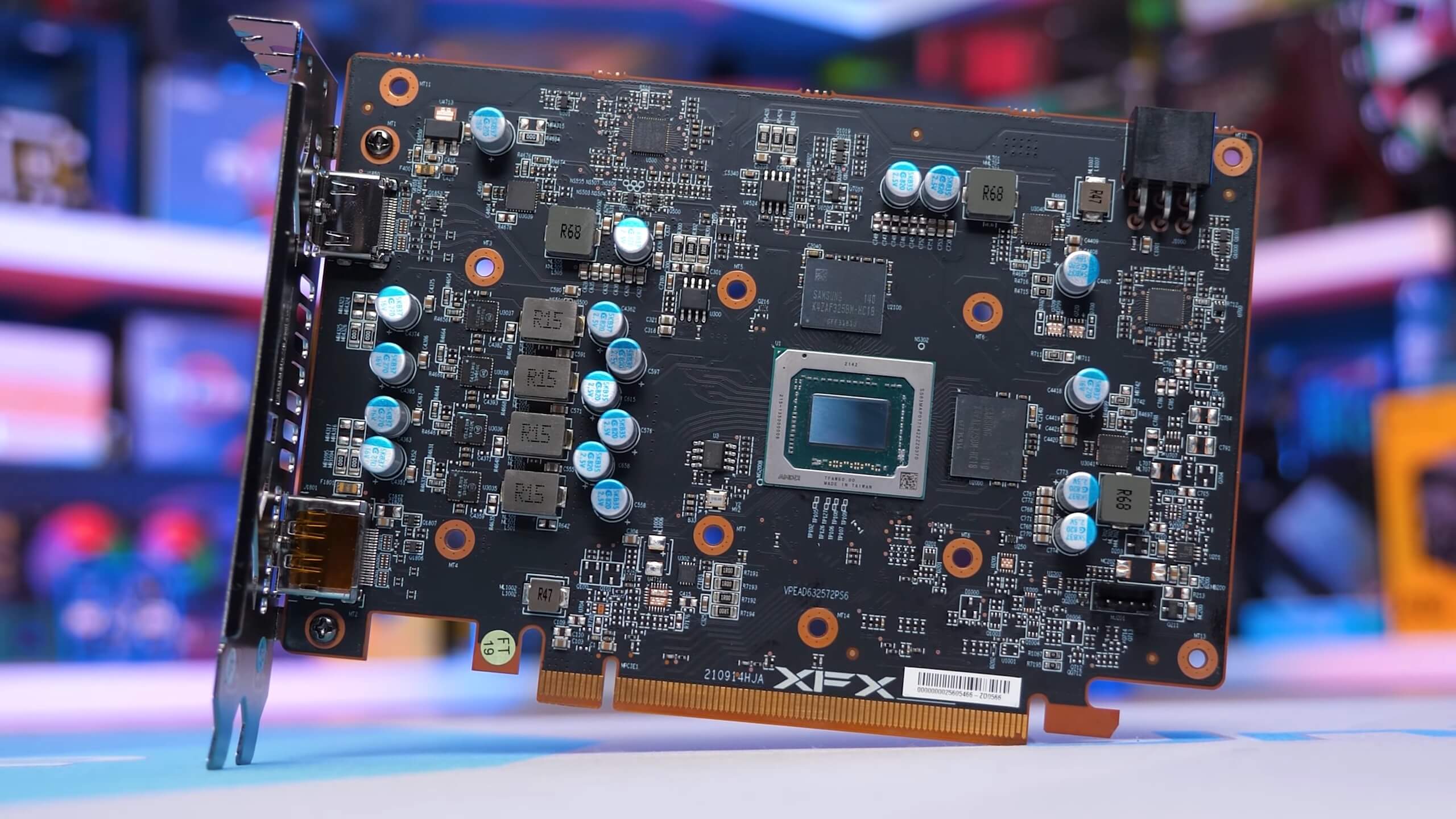

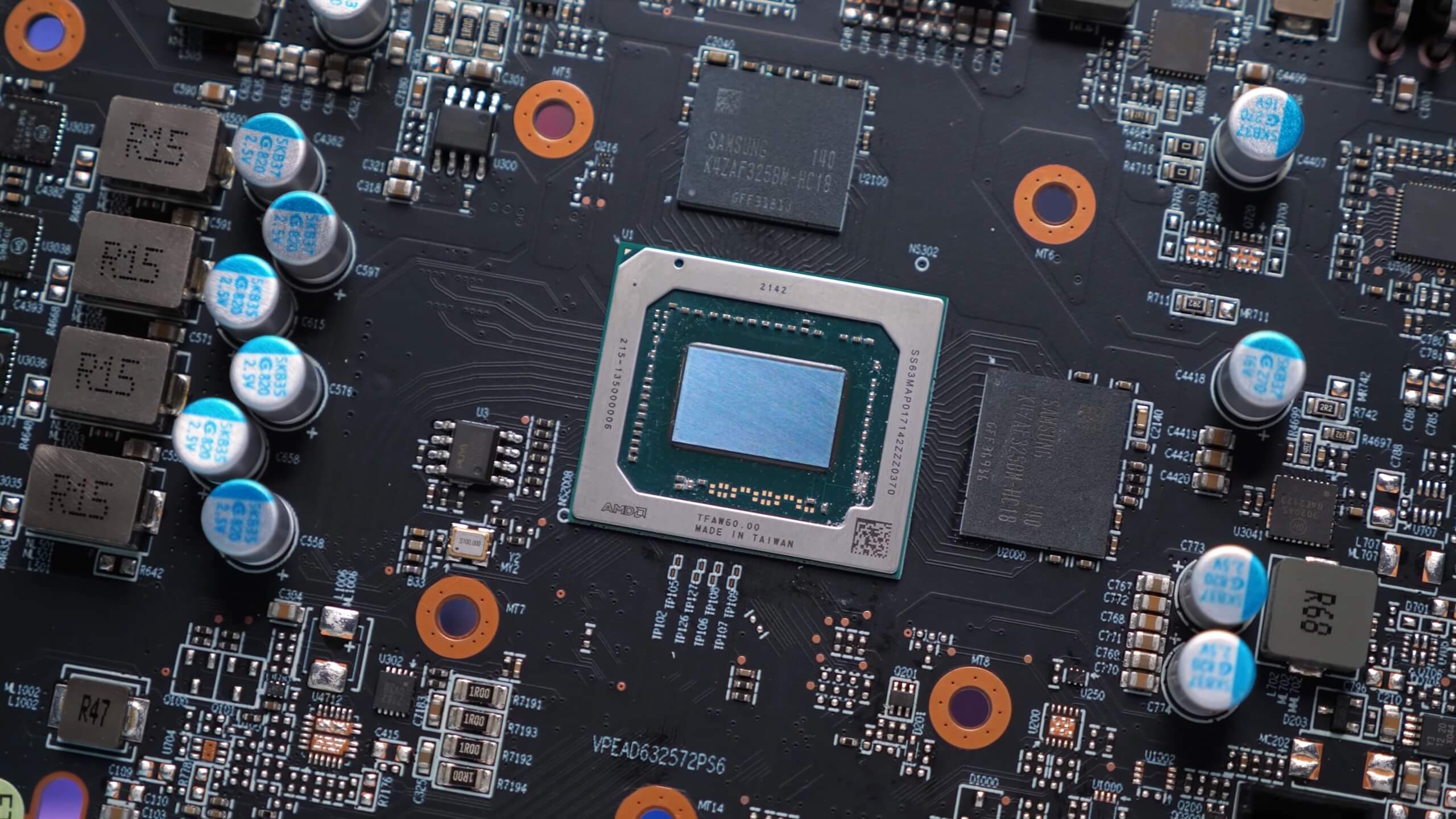

The 107 mm² Navi 24 die that the 6500 XT is based on was originally intended for laptops. Under normal circumstances it probably wouldn’t have made its way to desktop cards, and if it did, it certainly wouldn’t be a $200 product. Think more along the lines of a sub-$100 RX 550 replacement. But due to current market conditions, AMD has decided to cash in with a product that makes no sense on the desktop. Worse still, in most ways it’s inferior to products that have occupied this price point for half a decade.

The shortcomings with the 6500 XT include:

- 1) It’s limited to PCI Express x4 bandwidth.

- 2) It only comes with 4GB of VRAM; there’s no 8GB model.

- 3) Hardware encoding isn’t supported, so you can’t use ReLive to capture gameplay, which is a deal breaker.

- 4) Because it was intended for use in laptops, only two display outputs are supported.

And all this for $200… which could become $300+ once the initial run of MSRP cards disappears.

AMD claims these sacrifices were made to get the die as small as it is, which in turn allowed it to hit the $200 price point. I believe this is a lie, and even if it isn’t, it’s hard to justify releasing a product that is worse than ones you had on the market 5 years ago.

At this point we should probably just show you how underwhelming the gaming performance is, which, as we’ve said, is just one of the many problems with this abomination. For testing, we’re using our Ryzen 9 5950X GPU test system. Yes, we know no one is going to pair a budget graphics card with this CPU, but that’s not the point: we’re testing GPU performance, so we want to avoid a CPU bottleneck that would skew the data.

We’ve collected 100% fresh benchmark data for this review. We’ve spent the last few weeks updating our mid-range GPU results and much of the testing has been done using medium quality settings, or settings that make sense for a given title. Please note the 6500 XT has been tested using both PCIe 4.0 and PCIe 3.0 on the same motherboard, toggling between the two specifications in the BIOS.

We’ve tested at 1080p and 1440p though most of our focus will be on 1080p results. Let’s get into it…

Benchmarks

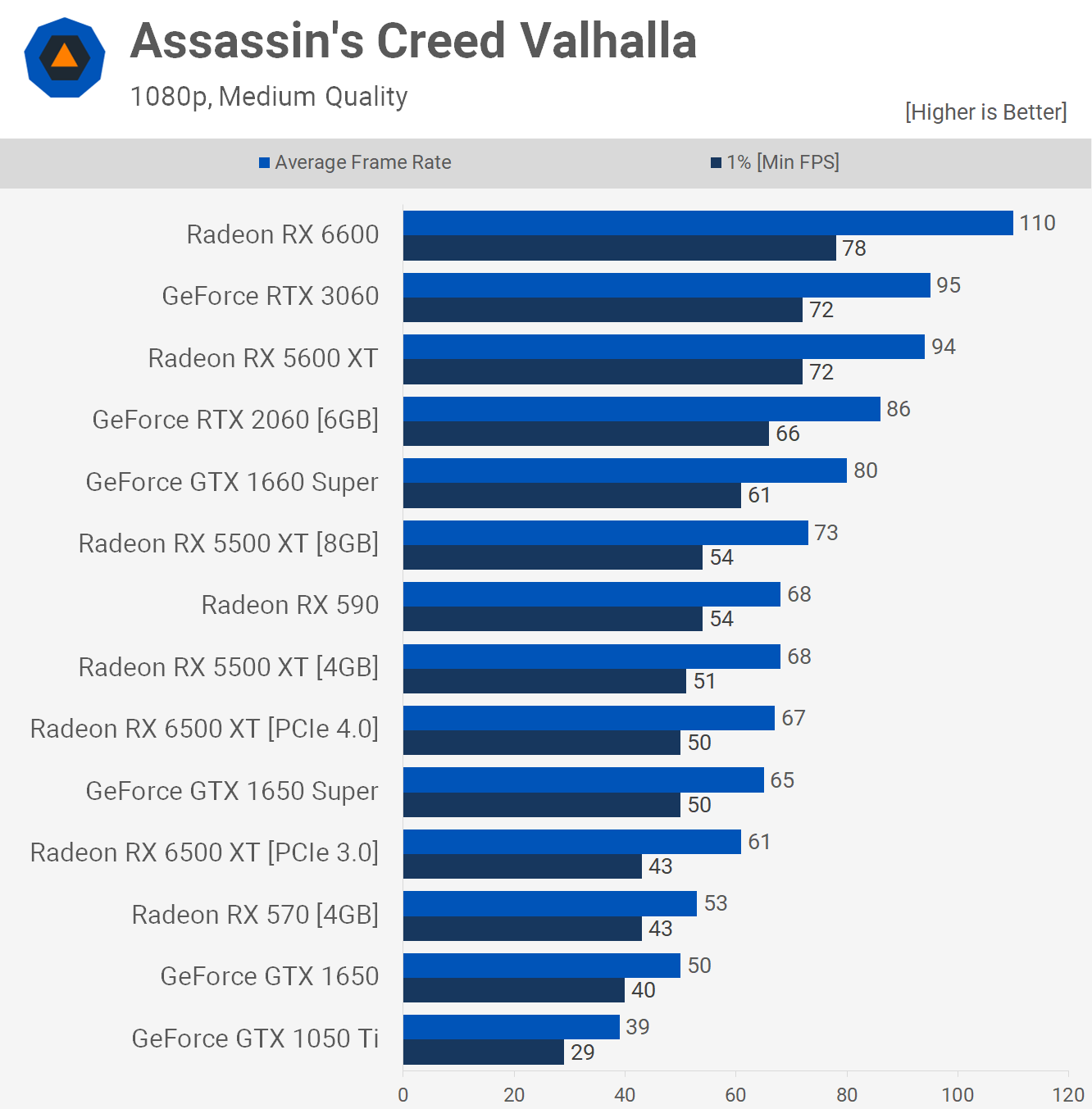

Starting with Assassin’s Creed Valhalla using the medium quality preset, we find that with PCIe 4.0 the 6500 XT is only able to match the 5500 XT, and the 4GB version of the 5500 XT at that, a disappointing result right off the bat. It was also able to roughly match the RX 590, which is basically an RX 580, which in turn was an overclocked RX 480, a GPU released 5 years ago.

But it gets worse for the 6500 XT, because when we switched to PCIe 3.0, which is what the vast majority of 6500 XT owners will be using, the average frame rate dropped by 9% and, more critically, the 1% lows dropped by 14%. That’s a huge performance reduction, and as we look at more games you’ll find that the reduction in PCIe bandwidth often hurts 1% low performance the most.

It’s also worth noting that all Turing-based GPUs use PCIe 3.0, so the GTX 1650 Super, for example, has been tested using the 3.0 spec, meaning that in a PCIe 3.0 system it’s up to 16% faster than the 6500 XT in this game. Even if we go off the average frame rate, the 1650 Super was 7% faster, and it was released for $160 back in November 2019.

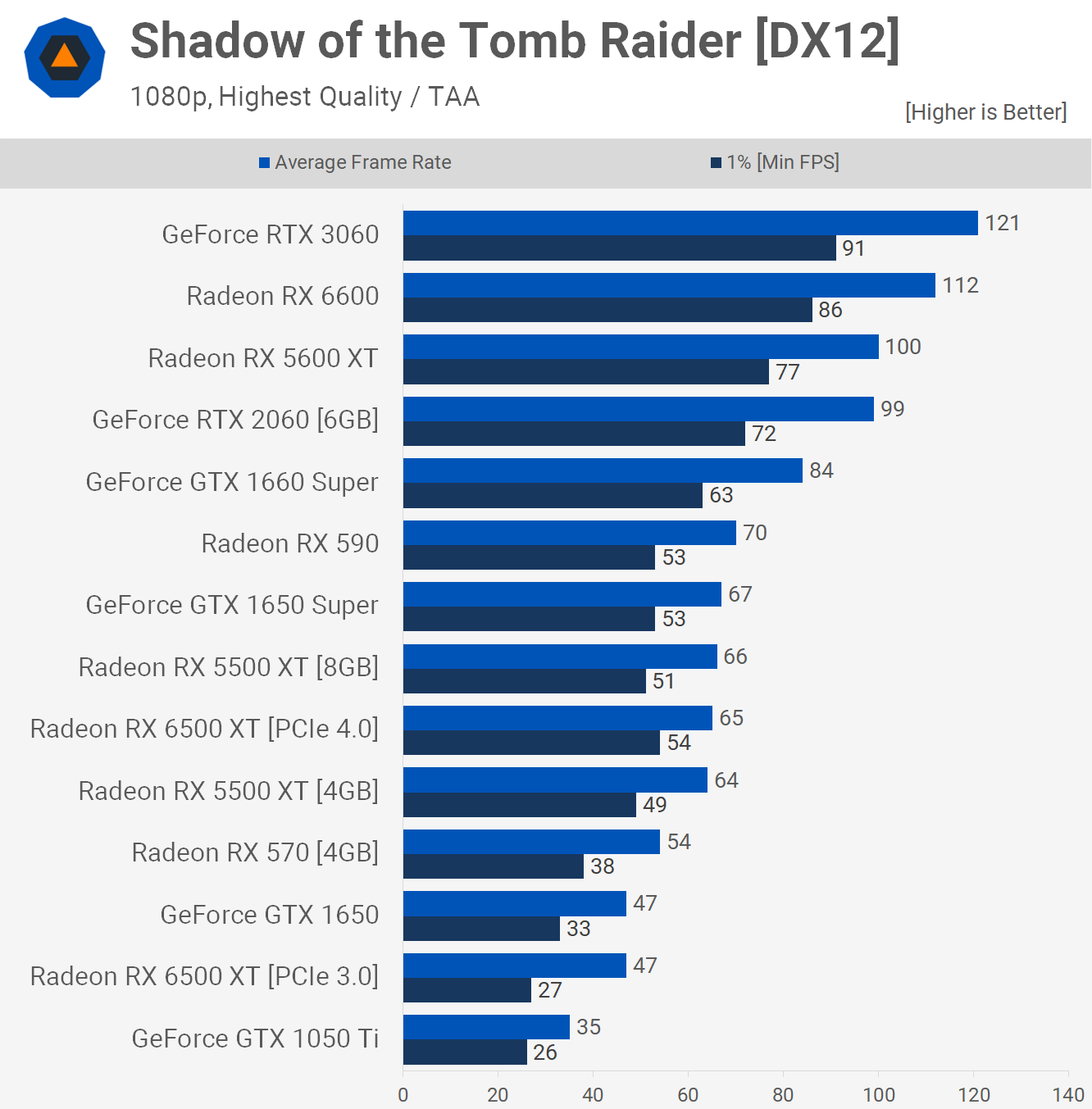

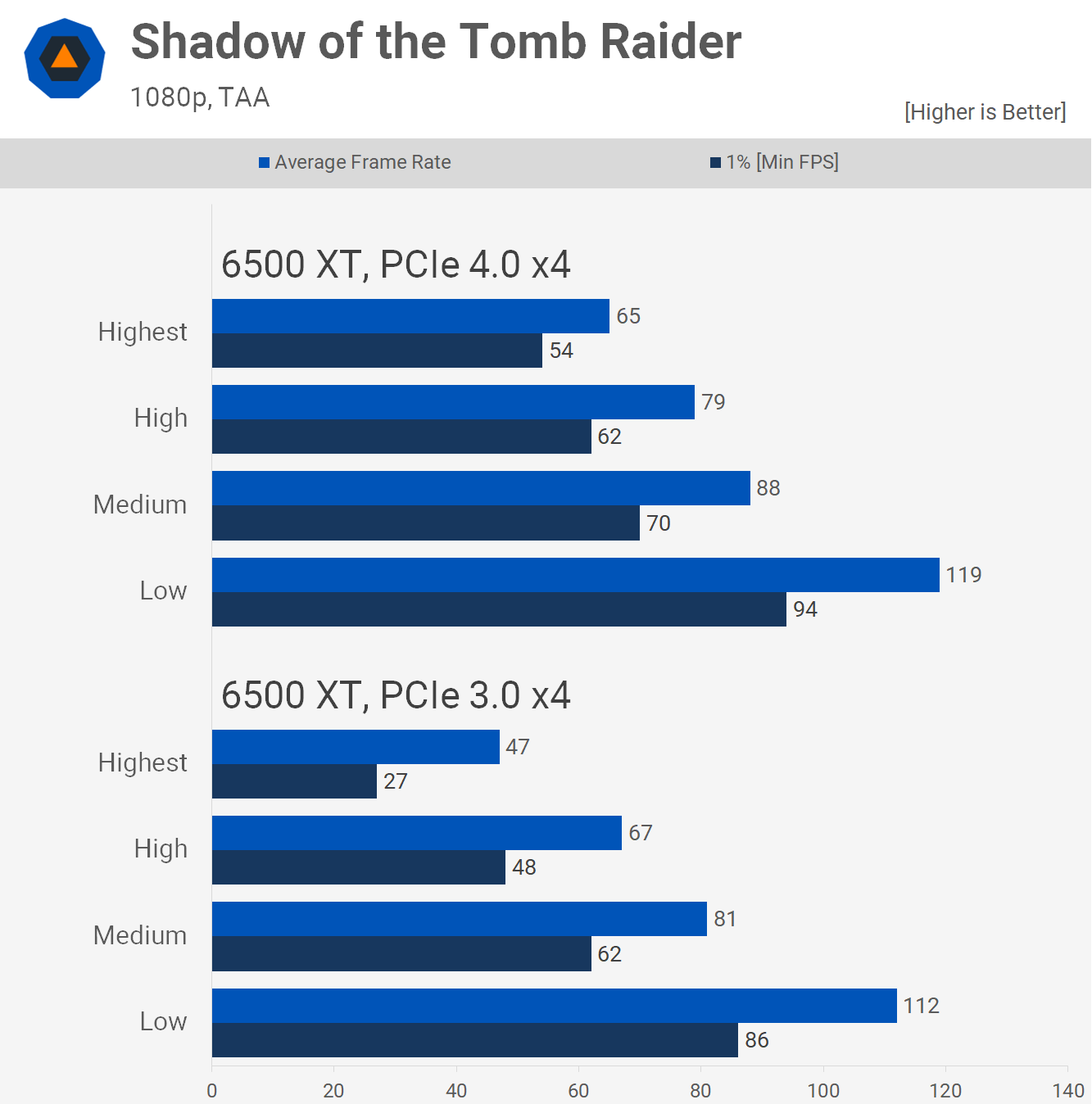

The Shadow of the Tomb Raider results paint quite the picture. Unlike Assassin’s Creed Valhalla, which was tested using the medium quality preset, Shadow of the Tomb Raider has been tested using the highest quality preset, as this is quite an old game now.

For most models, performance is about the same as in Valhalla. For example, the GTX 1650 averaged 65 fps in our Assassin’s Creed Valhalla test and 67 fps in Shadow of the Tomb Raider, and there’s also just a 2 fps difference for the 6500 XT when using PCIe 4.0.

Once again, the issue for the 6500 XT is PCIe 3.0, and in this game it’s a huge impediment. The 1650 Super is a PCIe 3.0 card, but it has full x16 bandwidth, which helps reduce or even remove any performance bottleneck. With the 6500 XT limited to x4 bandwidth, however, performance falls off a cliff in PCIe 3.0 mode, dropping by up to 50% in the 1% lows.

The average frame rate also fell by 28%, taking the 6500 XT from 65 fps to just 47 fps. So although it was able to match the 5500 XT using PCIe 4.0, in a PCIe 3.0 system you’re looking at something closer to base GTX 1650 performance, which is worse than the RX 570. And those of you who were around when I reviewed the 1650 will know how I felt about that product.
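The same PCIe 3.0 penalty can be quoted two ways, and the figures aren’t interchangeable: a drop is measured against the PCIe 4.0 result, while “X% faster” is measured against the slower PCIe 3.0 result. A small Python sketch using the Shadow of the Tomb Raider averages above (the function names are just illustrative) shows how a 28% drop becomes a 38% lead for PCIe 4.0, the figure that reappears in the preset-scaling results.

```python
def percent_drop(fast_fps: float, slow_fps: float) -> float:
    """Performance lost going from the faster result to the slower one, in percent."""
    return (1 - slow_fps / fast_fps) * 100


def percent_faster(fast_fps: float, slow_fps: float) -> float:
    """How much faster the faster result is than the slower one, in percent."""
    return (fast_fps / slow_fps - 1) * 100


# 6500 XT average frame rates in Shadow of the Tomb Raider (1080p, highest preset)
pcie4_fps, pcie3_fps = 65, 47

print(f"PCIe 3.0 drop:   {percent_drop(pcie4_fps, pcie3_fps):.1f}%")    # 27.7%
print(f"PCIe 4.0 faster: {percent_faster(pcie4_fps, pcie3_fps):.1f}%")  # 38.3%
```

The same asymmetry explains why an 8% drop in Watch Dogs: Legion corresponds to PCIe 4.0 being roughly 9% faster, and why the bigger the drop, the further apart the two numbers get.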

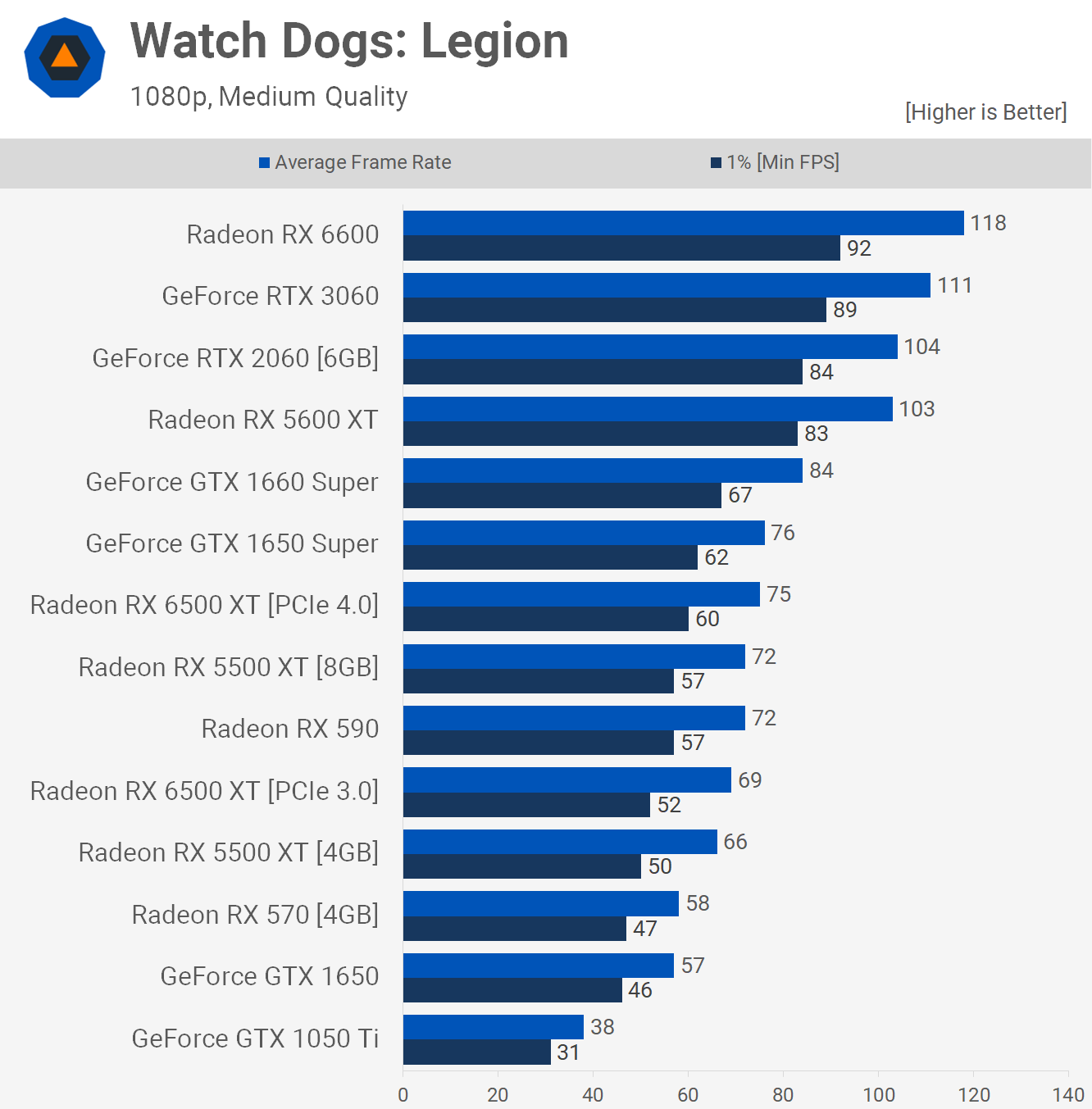

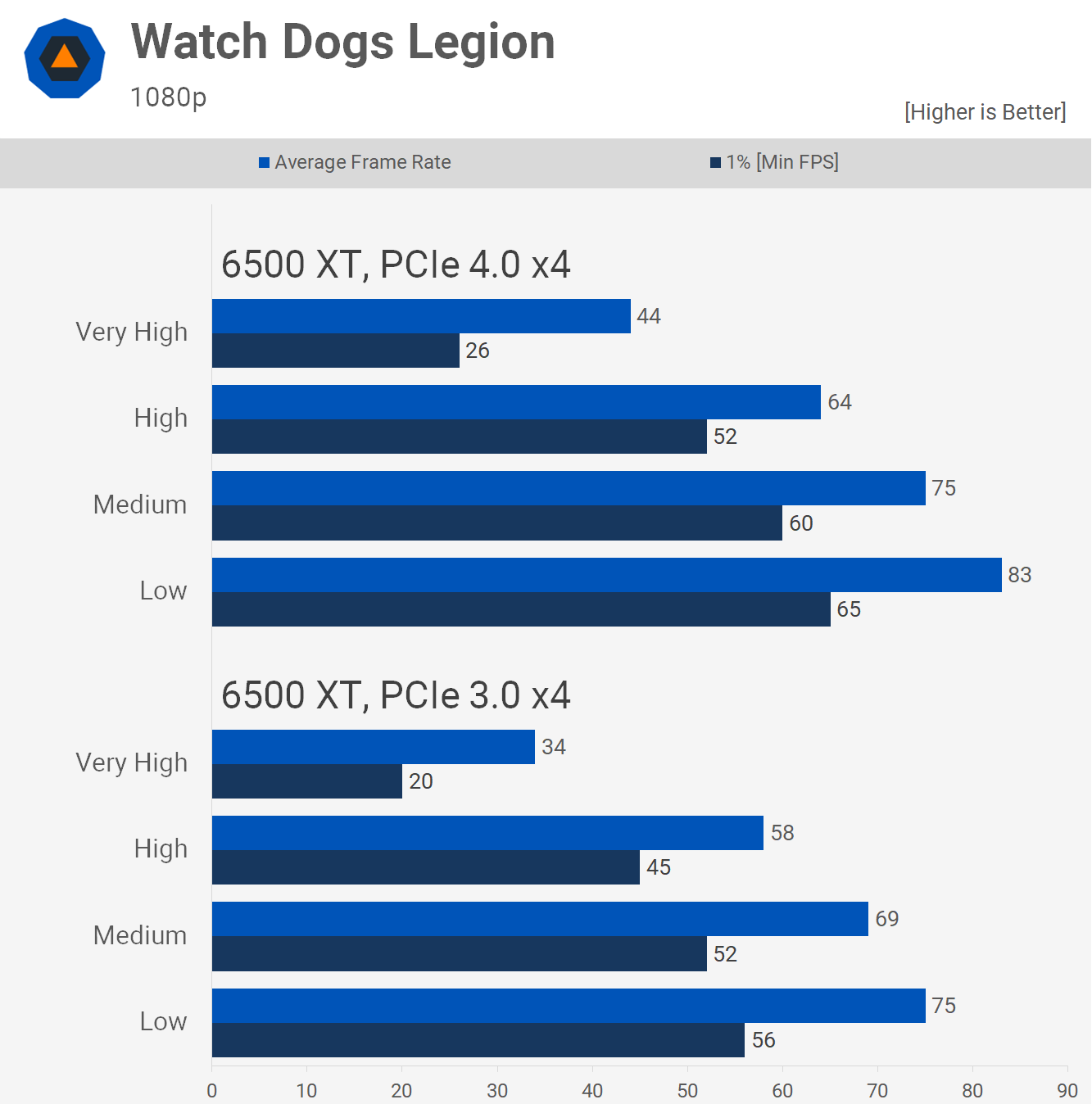

The Watch Dogs: Legion performance using the medium quality preset is rather underwhelming. We’re looking at GTX 1650 Super-like performance, making it a smidgen faster than the 5500 XT and RX 590. This time PCIe 3.0 only drops performance by 8%, or 13% for the 1% lows, and while that’s far less than what was seen in Shadow of the Tomb Raider and Assassin’s Creed Valhalla, I’d still consider it disastrous for an already struggling product.

In the PCIe 3.0 mode, the 6500 XT was slower than the RX 590 and only a few frames faster than the 4GB 5500 XT. Another horrible result for the new GPU AMD is trying to convince you is worth $200.

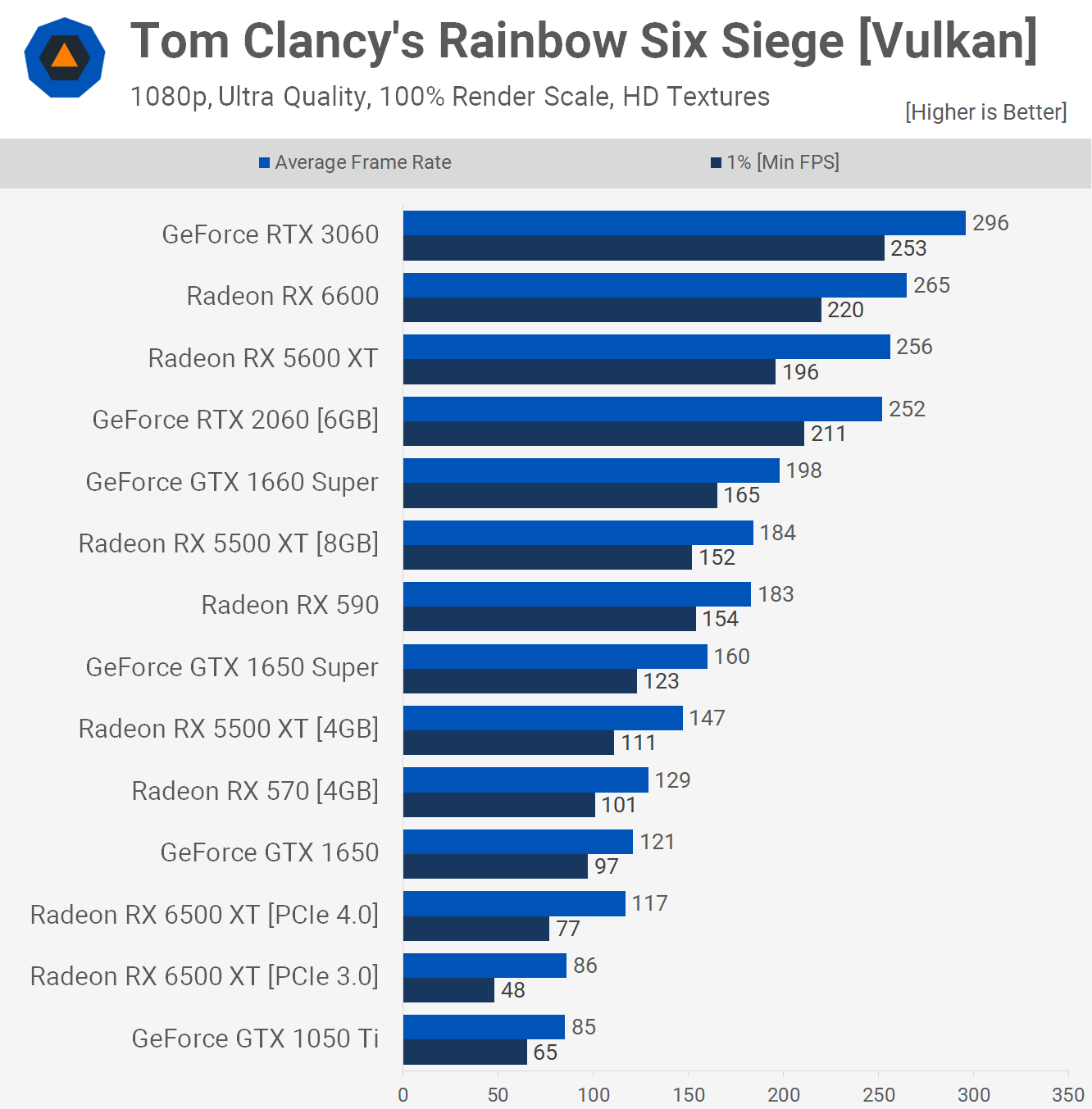

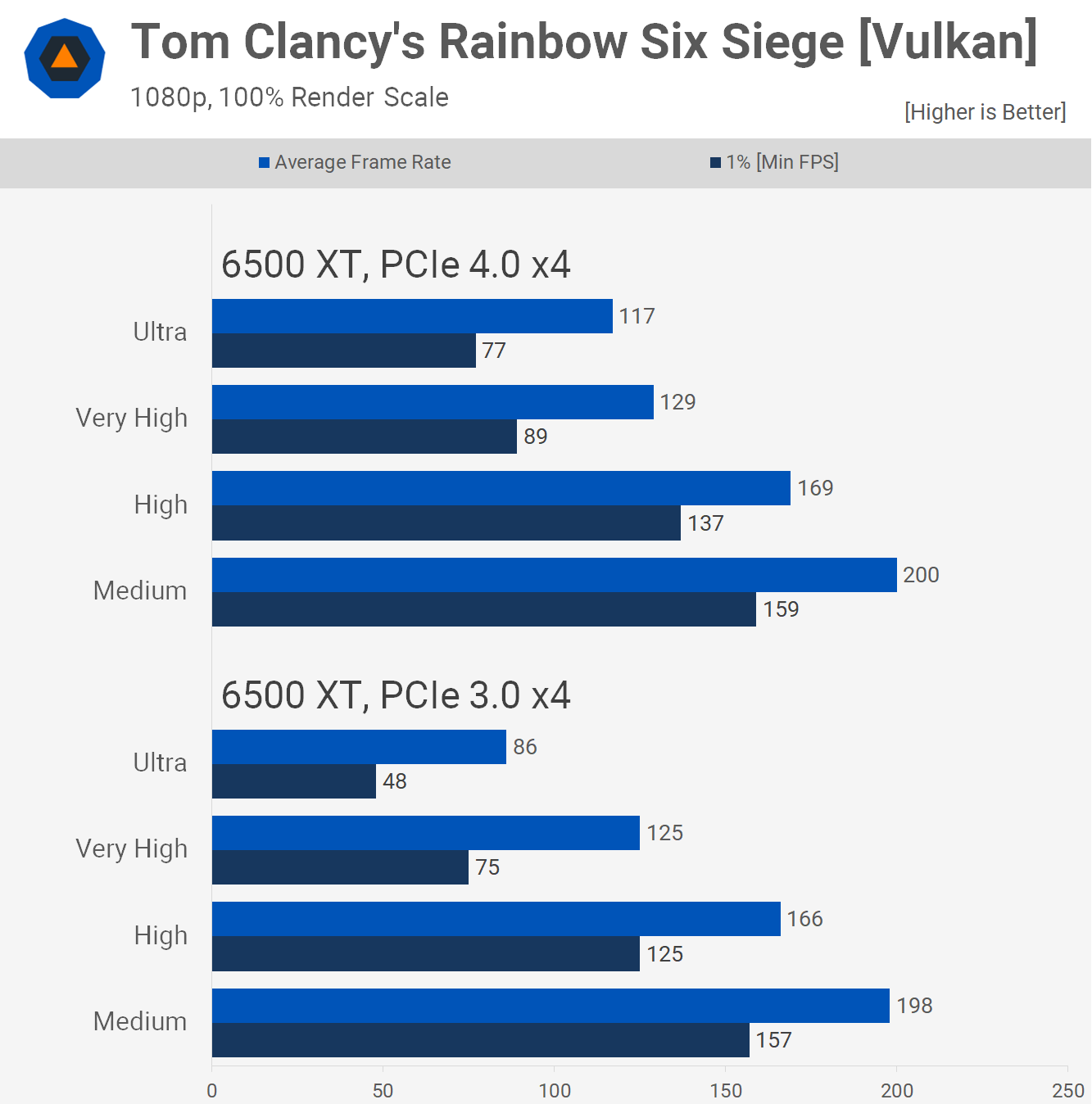

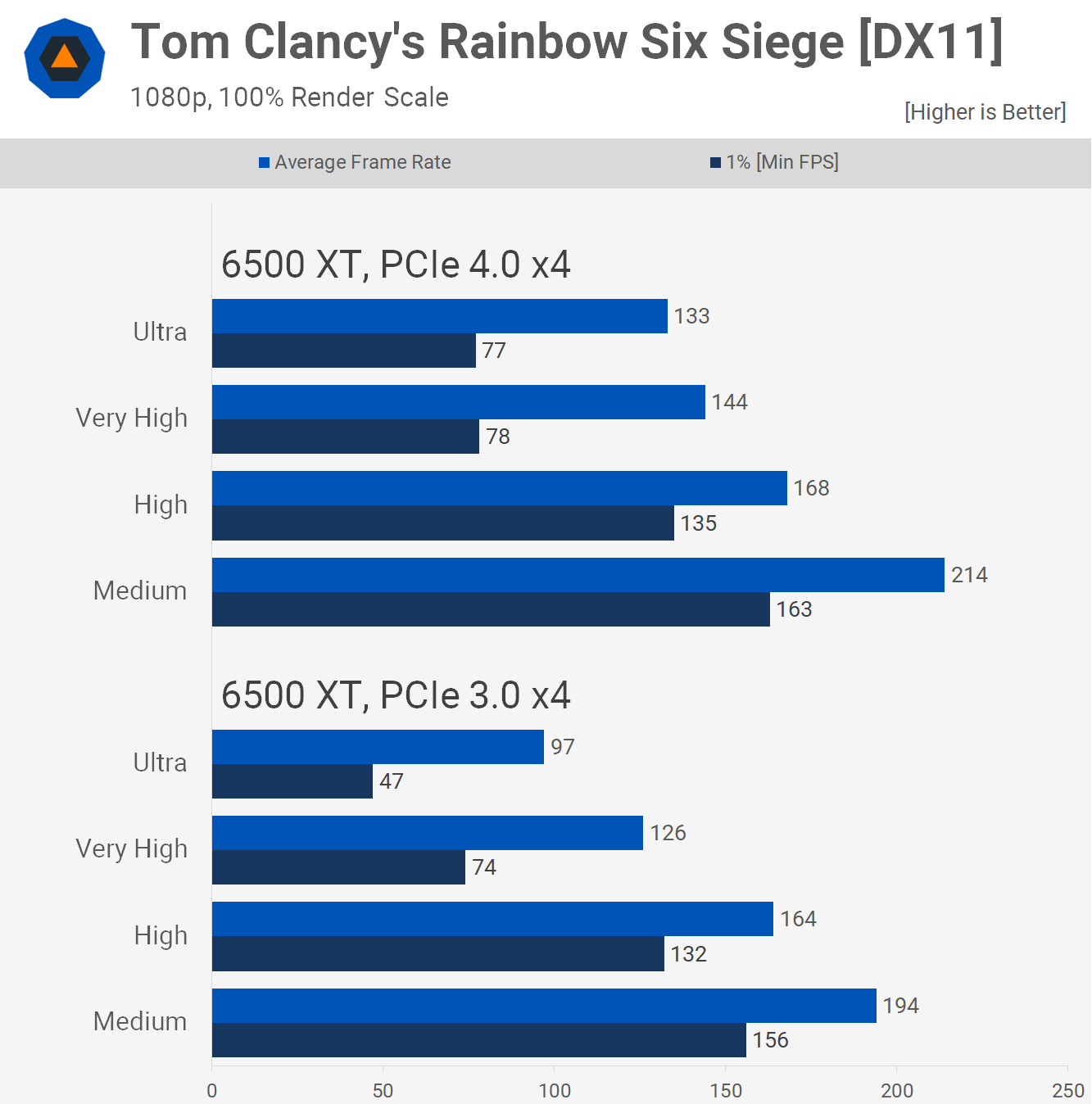

Quick disclaimer: we test Rainbow Six Siege using Vulkan, not DX11, and we’ve done so for a long time now as it’s typically the superior API. However, after noticing how abnormally poorly the 6500 XT performed, we tried again with DX11 and found performance using PCIe 4.0 improved by 14% to 133 fps.

Interestingly, that’s still much lower than what you’re going to see from some other reviewers, who will probably have the 6500 XT up around 170-180 fps. The reason for this is the HD texture pack, which we’ve always had installed for testing. This highlights the PCIe bandwidth / VRAM capacity issue of the 6500 XT.

The reason we use the HD texture pack is because Rainbow Six Siege is a relatively old game. It’s had some updates since it was released 7 years ago, but fundamentally it isn’t a cutting edge title. You can see that GPUs such as the RX 590 can push over 180 fps using max quality and the HD texture pack installed.

Even though other 4GB graphics cards are just fine, the 6500 XT isn’t. Here it’s gimped by PCIe bandwidth even in the PCIe 4.0 x4 mode. Compared to the GTX 1650 Super, which is also a 4GB graphics card but uses PCIe 3.0 x16, the 6500 XT was 27% slower. Even more incredible, and I certainly mean incredible in a very bad way, is the fact that when using PCIe 3.0 the 6500 XT was 46% slower than the GTX 1650 Super and, wait for it, 53% slower than the RX 590.

Hell, it was even slower than the RX 570. What a disgraceful result for the new 6500 XT. And look, you can argue that using ultra quality settings isn’t realistic for a 4GB graphics card, but again, the 1650 Super made out just fine, as did the RX 570. The 6500 XT is just a bad product with too many weaknesses to count.
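To put the x4 versus x16 difference into numbers: PCIe 3.0 signals at 8 GT/s per lane and PCIe 4.0 at 16 GT/s, and both use 128b/130b encoding. The Python sketch below multiplies that out per lane; it gives approximate one-direction figures that ignore packet and protocol overhead, so real-world throughput is somewhat lower, and the function and constant names are just illustrative.

```python
# Per-lane signalling rate in GT/s; PCIe 3.0 and 4.0 both use 128b/130b encoding.
TRANSFER_RATE_GTS = {"3.0": 8.0, "4.0": 16.0}
ENCODING_EFFICIENCY = 128 / 130


def pcie_bandwidth_gbs(gen: str, lanes: int) -> float:
    """Approximate one-direction link bandwidth in GB/s (ignores protocol overhead)."""
    per_lane_gbs = TRANSFER_RATE_GTS[gen] * ENCODING_EFFICIENCY / 8  # bits to bytes
    return per_lane_gbs * lanes


links = [
    ("RX 6500 XT, PCIe 4.0 x4", "4.0", 4),
    ("RX 6500 XT, PCIe 3.0 x4", "3.0", 4),
    ("GTX 1650 Super, PCIe 3.0 x16", "3.0", 16),
]
for label, gen, lanes in links:
    print(f"{label}: {pcie_bandwidth_gbs(gen, lanes):.1f} GB/s")
```

That works out to roughly 7.9, 3.9 and 15.8 GB/s: the GTX 1650 Super on PCIe 3.0 has about twice the link bandwidth the 6500 XT gets even on PCIe 4.0, and four times what it gets on PCIe 3.0, which is exactly the traffic a 4GB card leans on once it runs out of VRAM.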

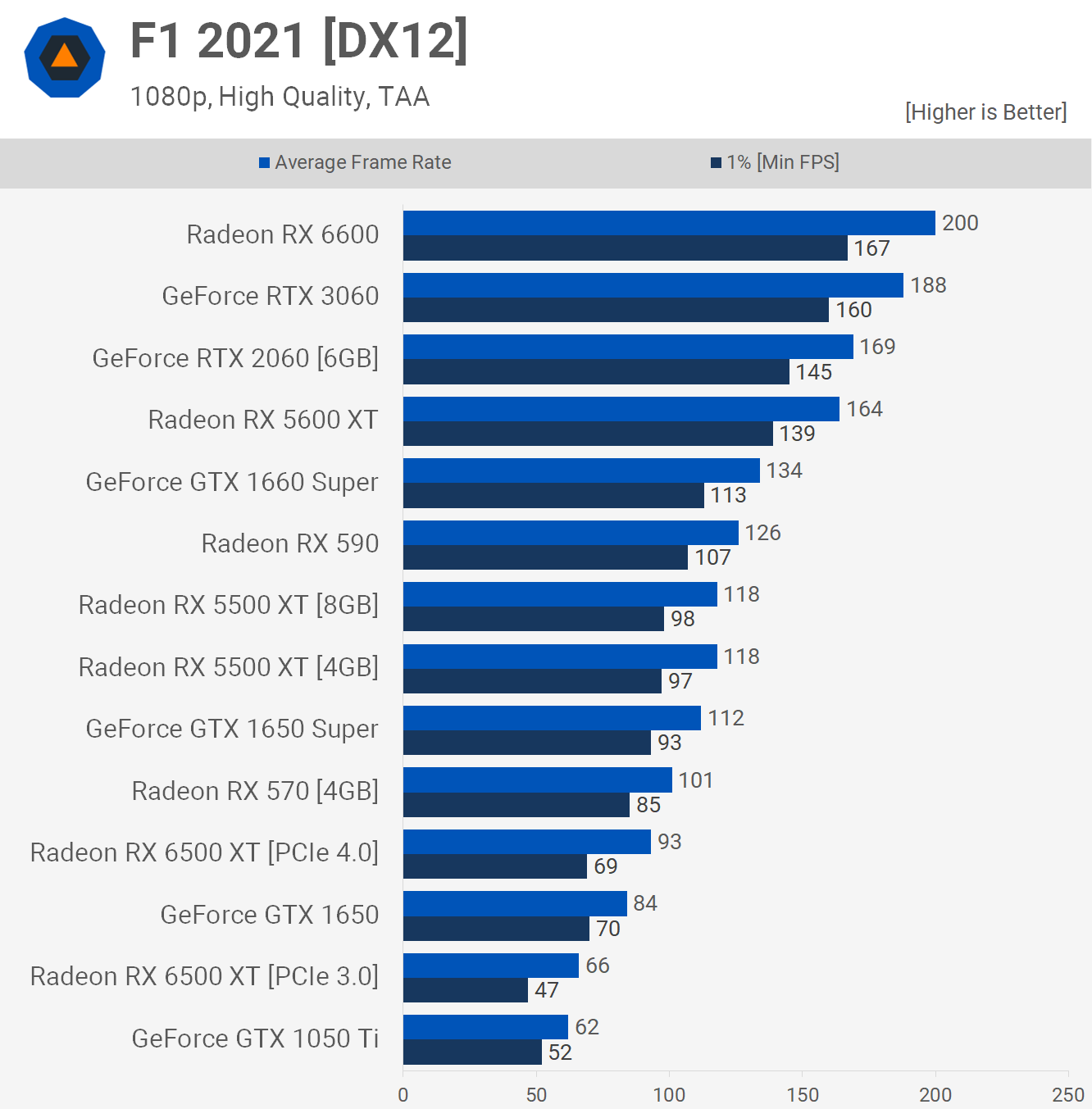

Next we have F1 2021, and boy does the 6500 XT stink in this one. I should note that we’re using the high quality preset with no form of ray tracing enabled. Our previous PCIe bandwidth testing also revealed that F1 2021 isn’t particularly bandwidth sensitive, so I’m not sure why the 6500 XT performs so poorly.

We re-ran this test and got the same data each time. So either this is a driver issue or just a weakness of the Navi 24 design, but as it stands the 6500 XT is well below where you’d expect it to be, typically hovering around the same level of performance as the 5500 XT.

As bad as the PCIe 4.0 performance is, switching to PCIe 3.0 made it worse still, dropping performance by almost 30% to the same level as the GTX 1050 Ti. Ouch.

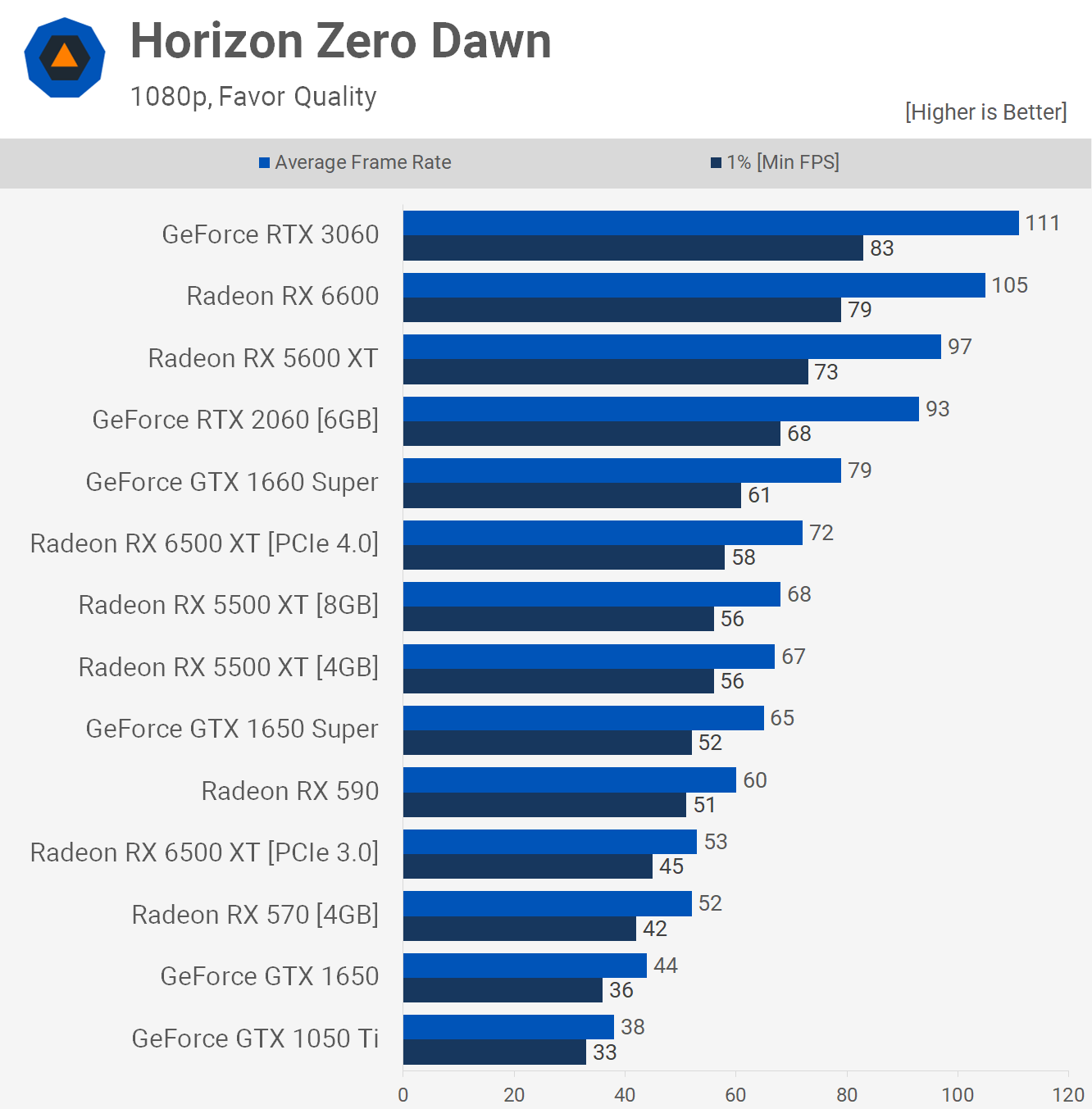

The 6500 XT is able to just edge out the 5500 XT in Horizon Zero Dawn using the ‘favor quality’ preset, delivering 7% more performance than the 4GB 5500 XT: not amazing, but certainly one of the better results. It was also much faster than the RX 590, offering around 20% more performance in this example. There is a ‘but’ here, though: using PCIe 3.0 crippled the 6500 XT, dropping the average frame rate to 53 fps, which means it was 36% faster using PCIe 4.0.

For those of you using a PCIe 3.0 system, which again will be most people looking at buying a budget GPU, the new 6500 XT is going to be no faster than the old RX 570 in this game.

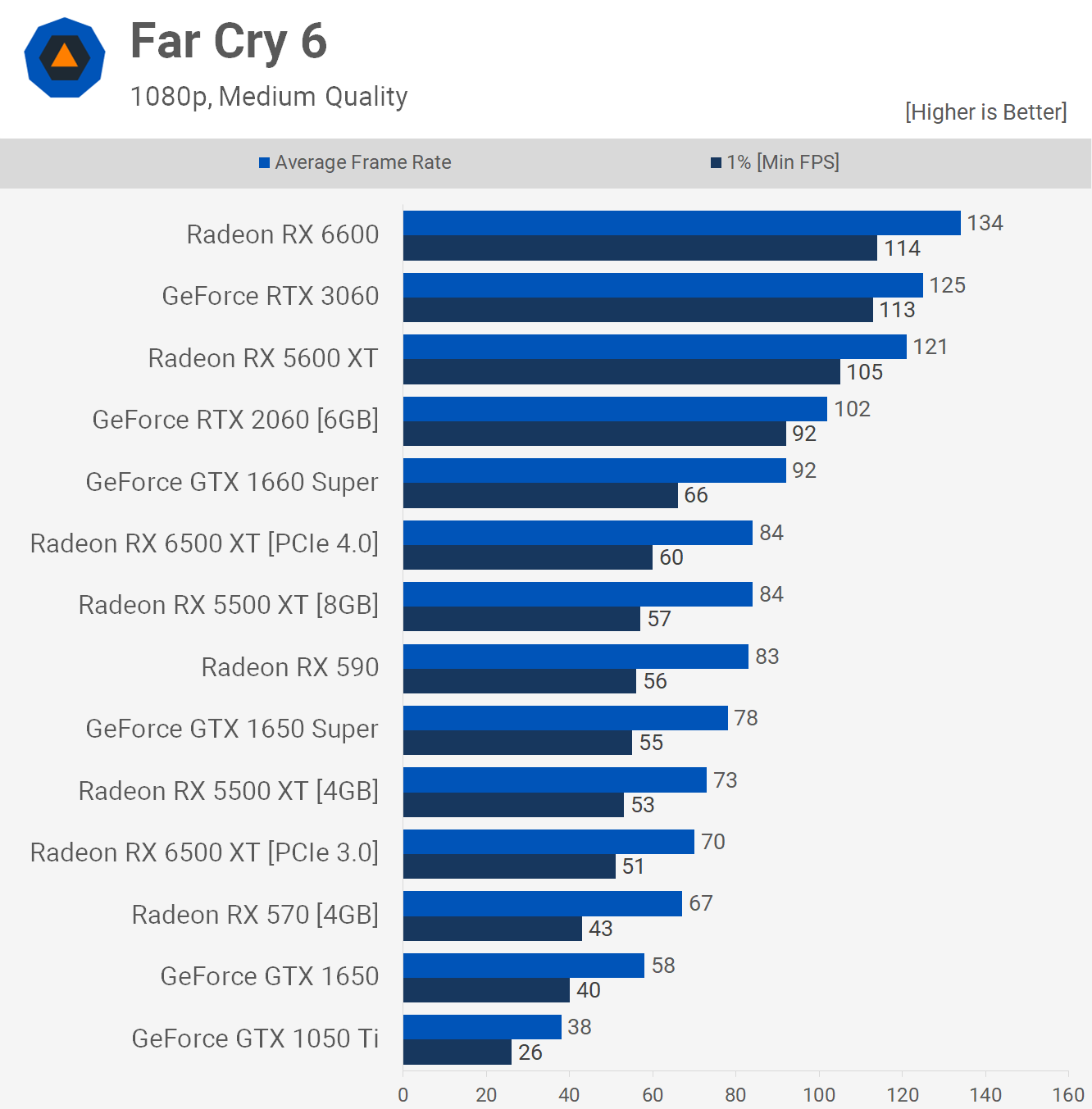

Moving on to Far Cry 6 with the medium quality settings, we find that the 6500 XT delivers 5500 XT-like performance, and when using PCIe 4.0 it’s able to match the 8GB model, so I suppose that’s a decent result. It also matched the RX 590 and is therefore delivering 580-like performance here.

That said, when used in the PCIe 3.0 mode the average frame rate dropped by 17% and the 1% low by 23%, so now we’re much closer to RX 570 performance. Again the 6500 XT doesn’t look half bad in our PCIe 4.0 system, but switching to the 3.0 spec really hurts performance to the point where I imagine it’s getting hard to justify this product, even if it were near the $200 MSRP.

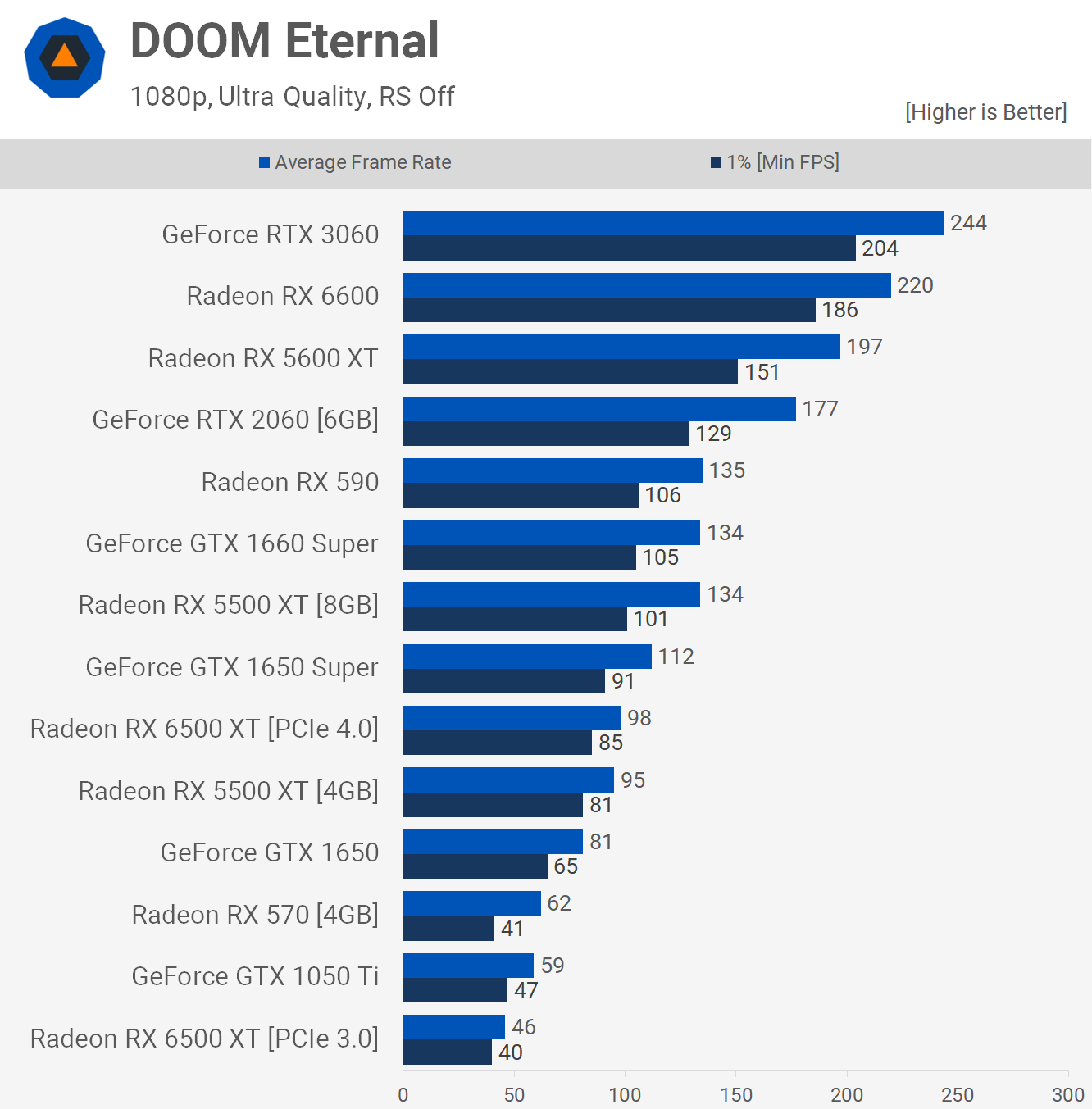

Doom Eternal is another interesting game to test, as it tries to avoid exceeding the memory buffer by limiting the quality settings you can use. Here we’ve used the ultra quality preset for both the 4GB and 8GB models, but for the 4GB versions I had to reduce texture quality from ultra to medium before the game would let me apply the preset.

At 1080p with the ultra quality preset and ultra textures, the game uses up to 5.6 GB of VRAM in our test scene. Dropping the texture pool size to ‘medium’ reduced that figure to 4.1 GB.

Even with the reduced texture setting, the 6500 XT is still 27% slower than the RX 590. Granted, the game’s perfectly playable at 98 fps on average, but that level of performance, or much better, has been achievable on a budget for years now. And again, this is how the 6500 XT performs when using PCIe 4.0; switching to PCIe 3.0 cripples it, dropping the average frame rate by 53% down to 46 fps. An embarrassment for AMD, and worse than the old RX 570.

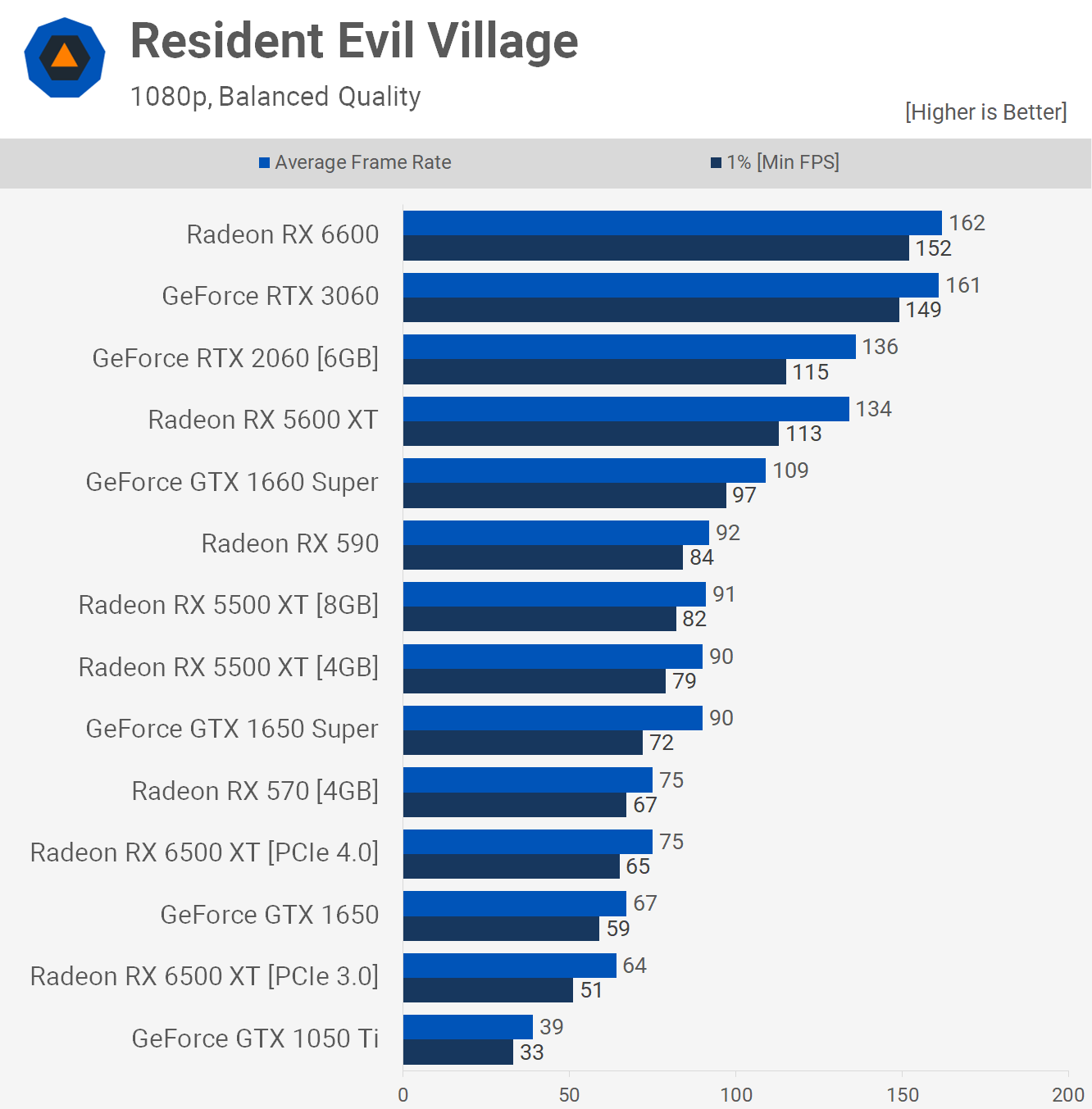

Resident Evil Village uses very little VRAM at 1080p with the balanced preset (we’re talking about a peak of just 3.4 GB in our test), so this is a best-case result for the 6500 XT in a modern game. That said, the overall performance is a joke, only matching the old RX 570 with 75 fps, making it 18% slower than the RX 590 and 17% slower than the 5500 XT.

Even worse, the 6500 XT was 17% faster using PCIe 4.0 compared to 3.0, with the older bus spec dropping the average frame rate to just 64 fps, less than what you’ll get from the GTX 1650, a GPU I once considered an embarrassment in this segment. AMD’s somehow gone and managed to make Nvidia look good 2 years later.

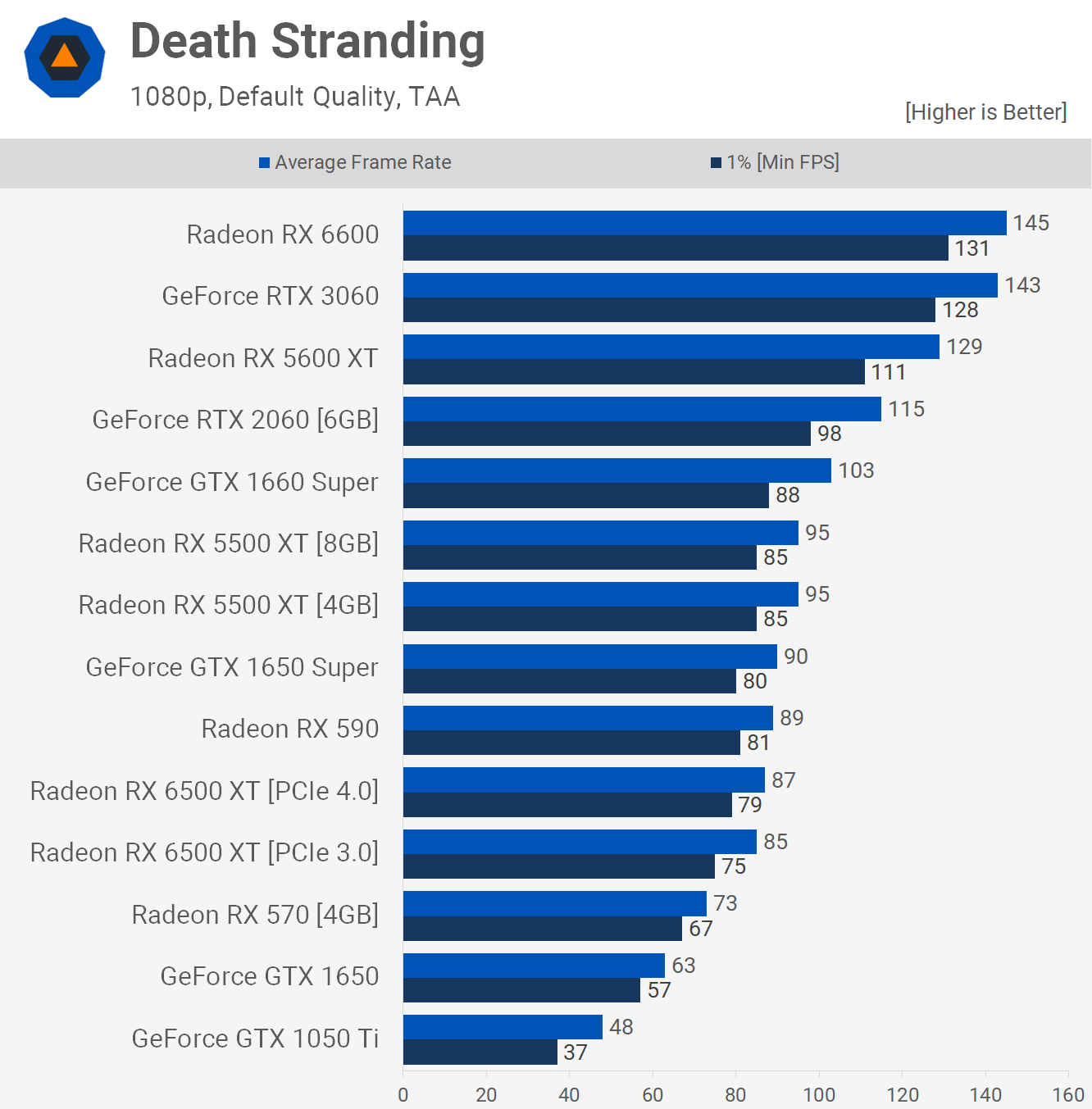

Death Stranding uses little VRAM with the ‘default’ quality preset and isn’t particularly bus bandwidth sensitive. That makes this the best result for the new 6500 XT so far, and it’s not exactly breathtaking. Yes, overall performance is very good for a single-player game, but it’s also no faster than the RX 590 and GTX 1650 Super.

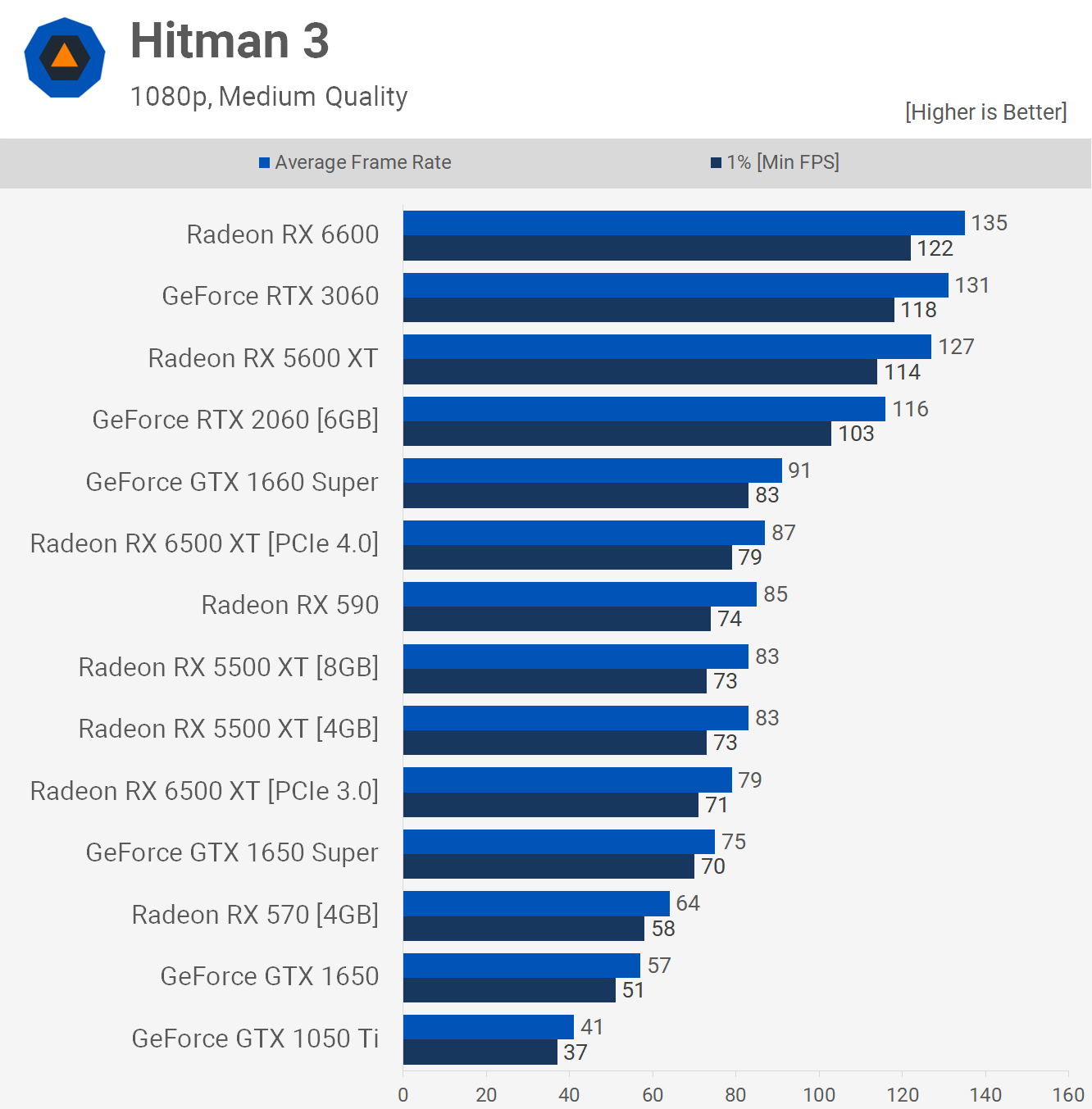

Hitman 3 is another good showing for the 6500 XT, at least relative to what we’ve shown so far. Again, it’s only roughly on par with the RX 590 and just 5% faster than the underwhelming 5500 XT, but by the standards of the previous results, this is actually very good.

When using PCI Express 3.0, performance dropped by 9%, and now the 6500 XT is slower than the 5500 XT and just 5% faster than the 1650 Super, another poor result.

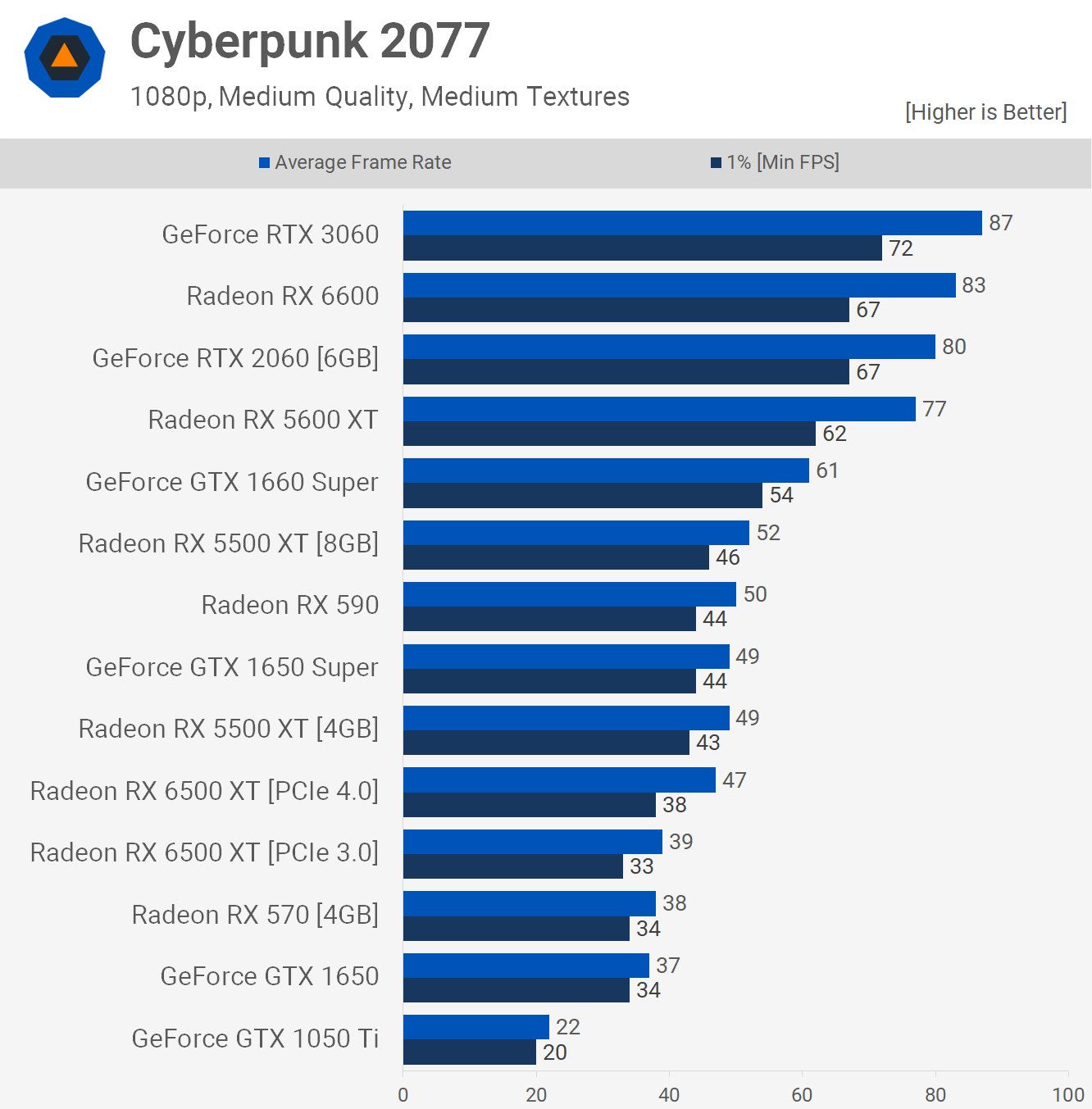

Last up we have Cyberpunk 2077, and it’s more of the same. In the best case, using PCIe 4.0, the 6500 XT is almost on par with the 5500 XT and is therefore a little slower than the RX 590. Switching to PCIe 3.0 then dropped performance by 17%, and now we’re looking at just 39 fps on average while the RX 590 is good for 50 fps.

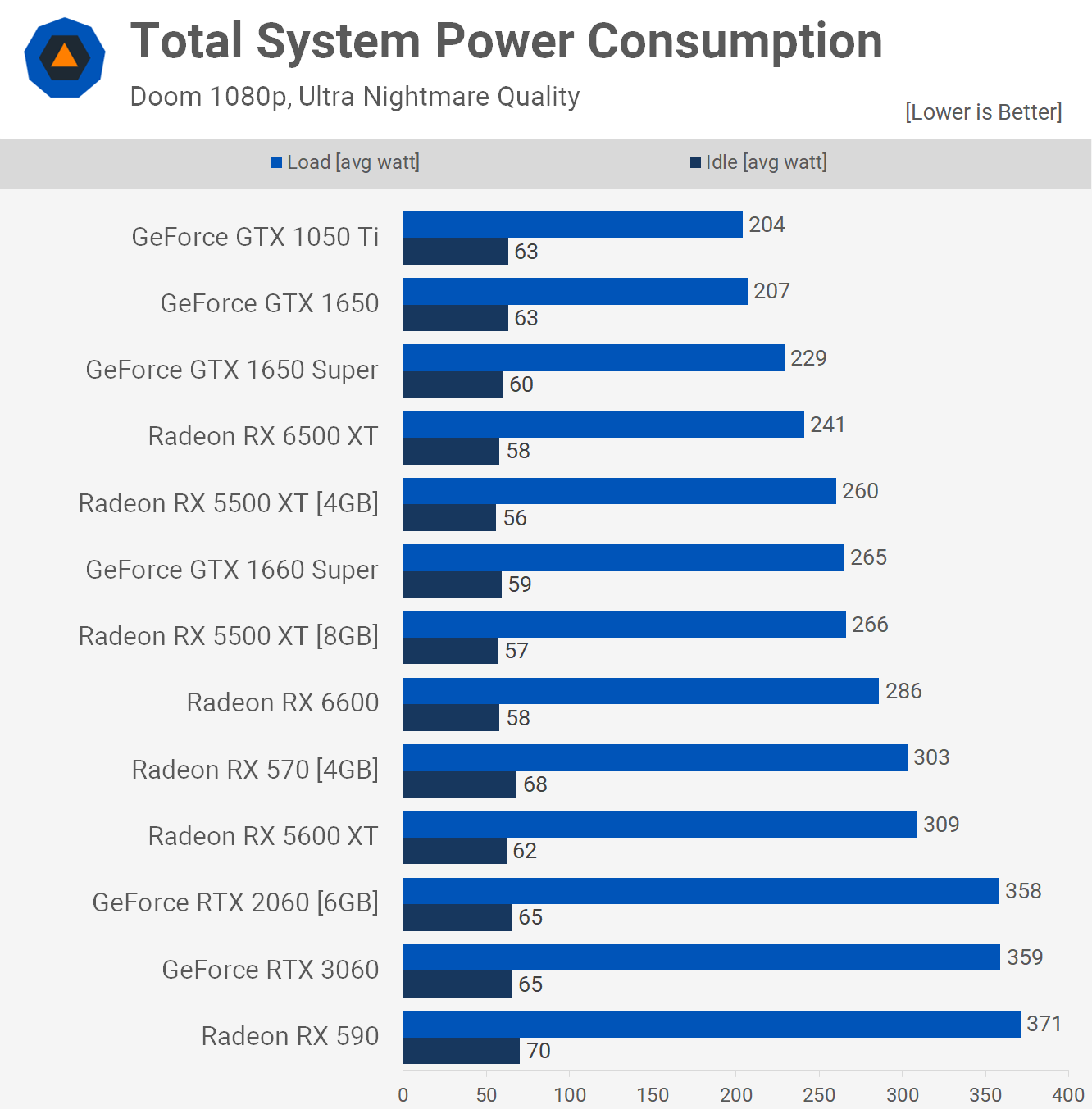

Power Consumption

But hey, maybe power consumption has something special in store for us. After all, this is the first 6nm GPU, and AMD bragged to us about how small and power efficient they’ve managed to make the 6500 XT.

Unfortunately, while this is the product’s only positive aspect, it’s not exactly class-leading, as the GTX 1650 Super not only uses less power but is also faster. Compared to the old RX 590 it’s impressive, saving 130 watts when gaming, so that’s pretty good, but I’ll be honest: I’d take the 590 every day of the week.

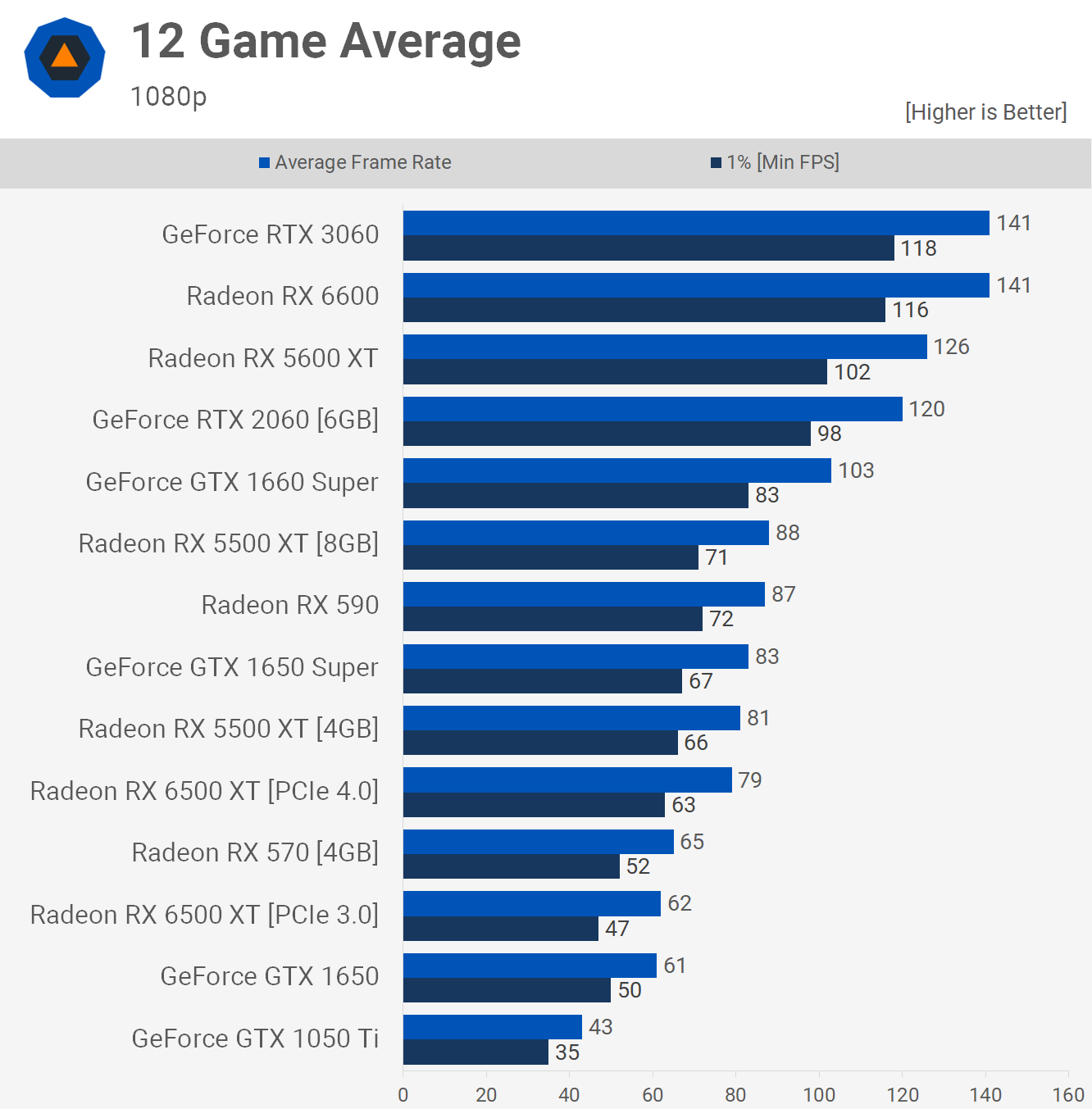

12 Game Average

Here’s a look at the 12-game average data, and it shows why I’d take the RX 590 every day of the week. Using PCIe 4.0, the 6500 XT was still 9% slower than the RX 590 across the dozen games tested at 1080p. That comparison speaks volumes about the 6500 XT, but it’s the PCIe 3.0 result that cements it as the worst GPU we’ve reviewed in recent times.

Using PCIe 3.0, the 6500 XT was 29% slower than the RX 590 and 25% slower than the GTX 1650 Super. Put another way, the RX 590 was 40% faster and the GTX 1650 Super 34% faster.

That’s the same margin you’ll find when going from the $480 6700 XT to the $1,000 6900 XT. Anything over $200 for this level of performance is absurd, even in today’s market and let me show you why…

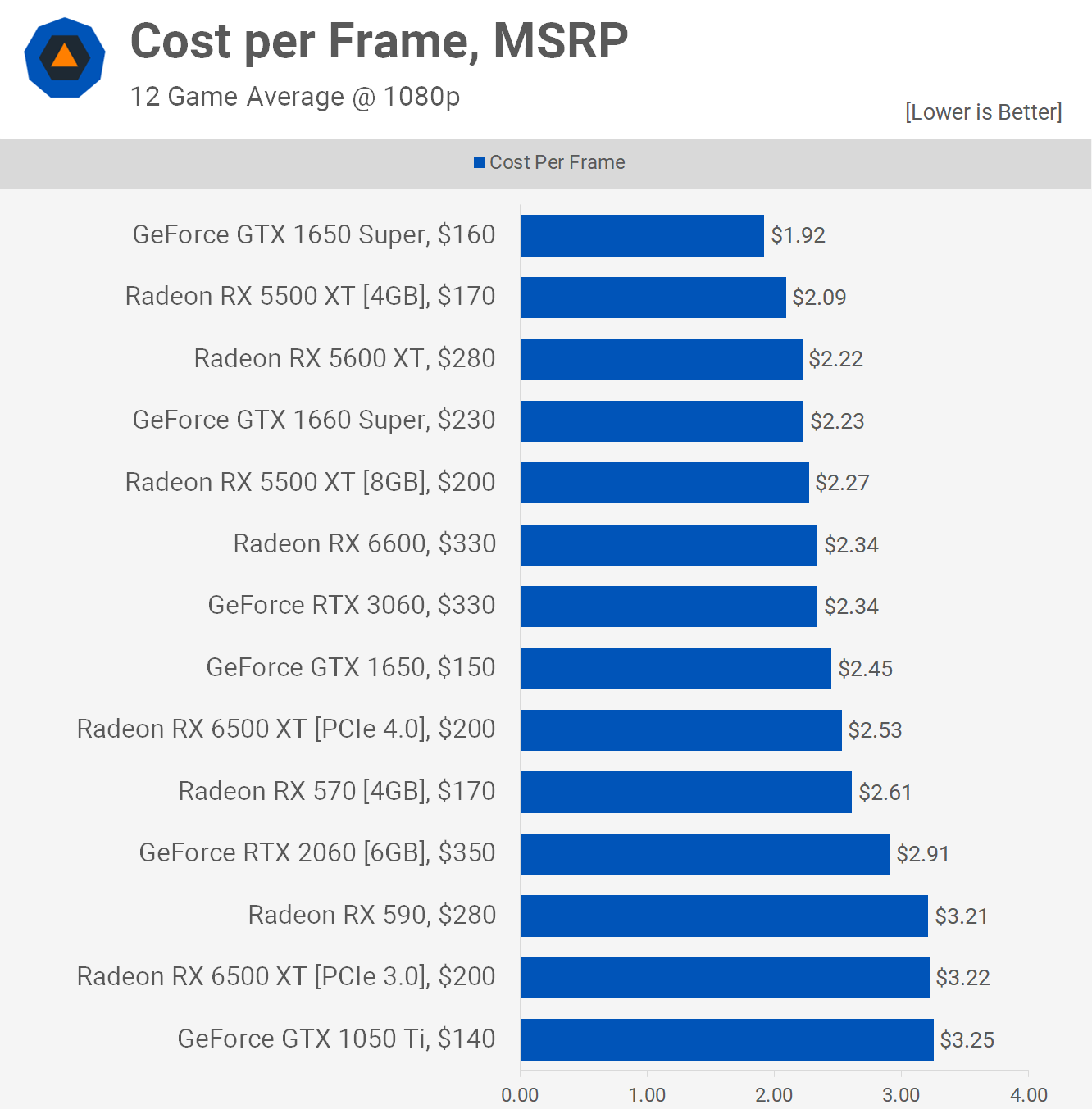

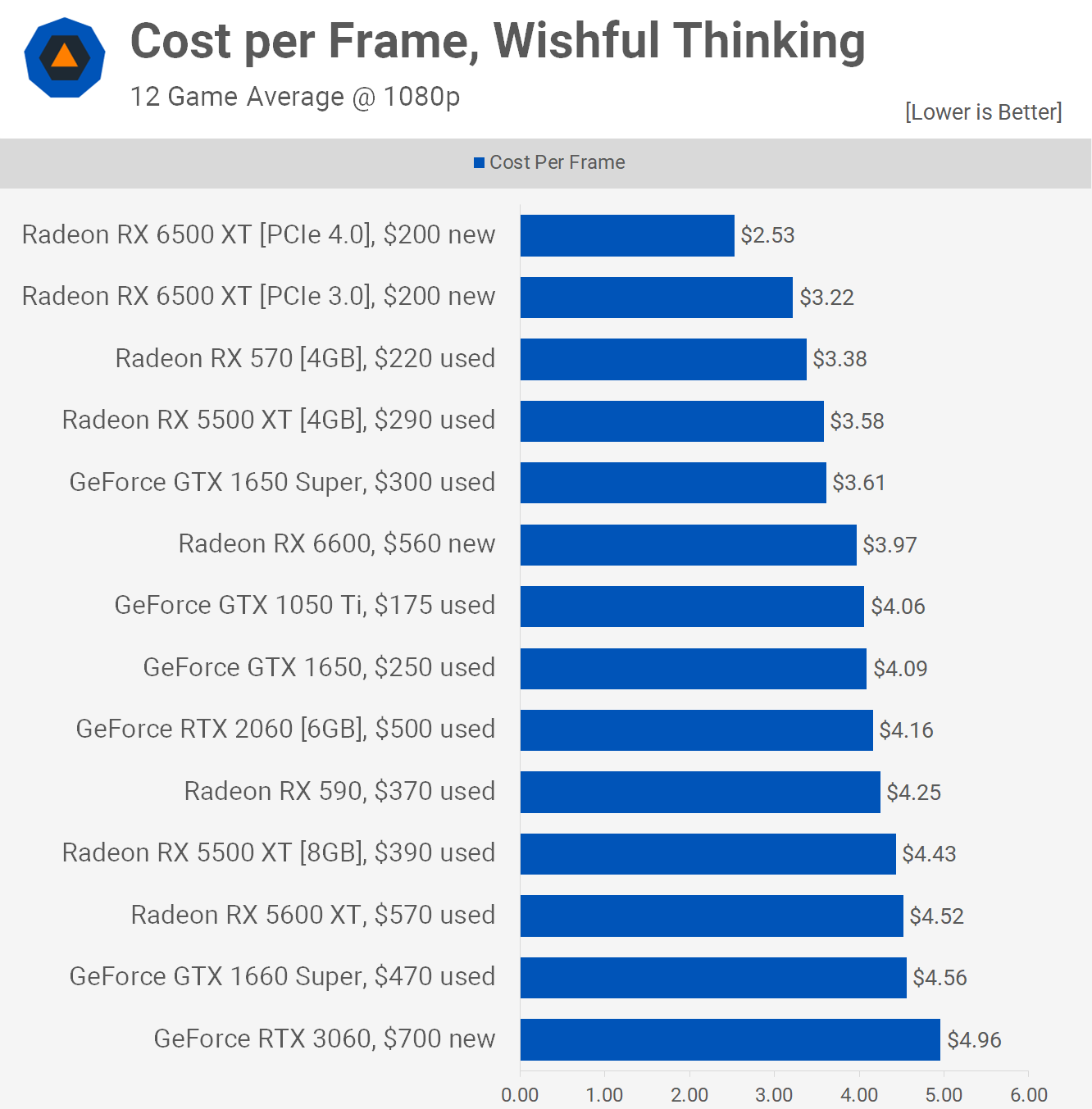

Cost per Frame – MSRP

We’ve got a few “cost per frame” graphs today, but let’s start in Fantasyland, because it’s fun to pretend. We’re talking MSRP here: if all GPUs, past and present, were still available today at their MSRP, this is how they’d stack up.

The GTX 1650 Super would be the value king, followed by the 4GB 5500 XT. The 6500 XT in PCIe 4.0 mode would be below average, offering similar value to the old RX 570 and worse than the terrible GTX 1650, which is less than ideal. When valued using its PCIe 3.0 performance, the 6500 XT ends up being the worst-value GPU released in the mid-range to low-end segment in the last 5 years, so that’s great news.
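Cost per frame is simply the card’s price divided by its average frame rate, so a lower figure means better value. The Python sketch below is a rough illustration under stated assumptions: it plugs in a single game’s averages quoted earlier (Shadow of the Tomb Raider at 1080p) rather than the 12-game average behind these charts, and the function name is hypothetical.

```python
def cost_per_frame(price_usd: float, avg_fps: float) -> float:
    """Dollars per frame of average performance: lower means better value."""
    return price_usd / avg_fps


# Illustration only: one game (Shadow of the Tomb Raider, 1080p) at the
# 6500 XT's $200 MSRP, not the 12-game average used for the charts.
msrp_usd = 200
for mode, fps in [("PCIe 4.0", 65), ("PCIe 3.0", 47)]:
    print(f"{mode}: ${cost_per_frame(msrp_usd, fps):.2f} per frame")
```

Even in this single-game case, the same $200 works out to about $3.08 per frame on PCIe 4.0 but roughly $4.26 per frame on PCIe 3.0, so the bandwidth penalty feeds straight into value.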

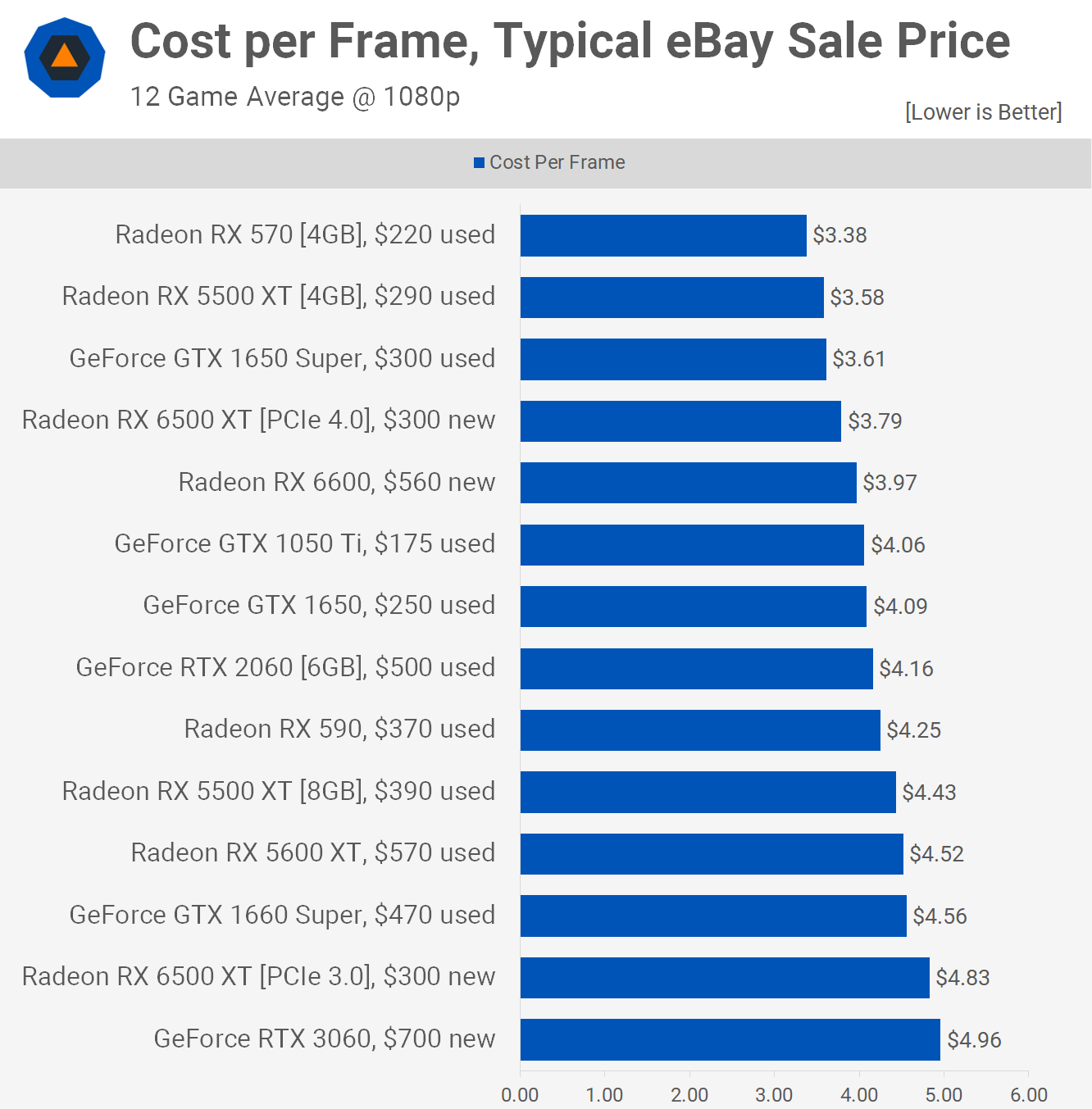

Cost per Frame – eBay Pricing

What if we look at typical eBay sale prices and go with a more realistic $300 asking price for the 6500 XT? If the 6500 XT delivered its PCIe 4.0 performance on PCIe 3.0 systems too, then in today’s market it wouldn’t be terrible, assuming two display outputs are enough for you and you don’t need hardware encoding. If you need either of those features, you might as well get the 1650 Super or, perhaps better still, the much cheaper RX 570, which can regularly be had for $220.

That’s $80 less for a card that supports full PCIe x16 bandwidth, hardware encoding and triple monitor support, and some cards do even more. As far as cost per frame goes, the 6500 XT isn’t bad at $300, but it isn’t good either, though it might be useful to someone. The key problem is its PCIe 3.0 performance, which kills its value and makes it by far the worst graphics card available today in the $300 – $700 price range.

Wishful Thinking

We started in pricing Fantasyland and then quickly jumped to the harsh reality for all GPUs, but what about some wishful thinking for just the 6500 XT? What if the 6500 XT were available for $200 in good numbers, while everything else was priced as it currently is?

This is about the only way the 6500 XT makes sense, and even then, if you’re not using PCIe 4.0, it’s not a given that you’d go for AMD’s laptop GPU on a PCIe card. With PCIe 4.0, its performance makes it by far the best value option, assuming you don’t need a feature like hardware encoding.

But for PCIe 3.0 users, even at $200 it’s a tough sell, as you’ll get a similar level of value from the 4GB 5500 XT while receiving twice the PCIe bandwidth, hardware encoding and triple monitor support. Or, for similar money, you can just snag a second-hand RX 570, which again has none of the shortcomings the 6500 XT suffers from.

SAM (AMD Smart Access Memory)

As a side note to the main testing, AMD’s own benchmarks of the 6500 XT are based on PCIe 4.0 performance, but they also have SAM, AMD’s name for Resizable BAR, enabled. So we went back and tested Assassin’s Creed Valhalla with SAM enabled, as this game sees a 15-20% performance gain with high-end GPUs such as the RX 6800, 6800 XT and 6900 XT.

In the case of the 6500 XT, SAM looks to be significantly less effective: we’re only seeing a 5% performance bump in PCIe 4.0 mode, which is helpful, though sadly there’s no gain at all when using PCIe 3.0, at least in this example. So SAM doesn’t help alleviate the PCIe 3.0 bottleneck.

Preset Scaling

Before wrapping up the testing, I decided to look at how the 6500 XT scales across quality presets using PCIe 4.0 and 3.0. Starting with Rainbow Six Siege using the Vulkan API, we find that only the ‘ultra’ quality preset cripples the PCIe 3.0 configuration, which is to be expected in this game.

If you’re an esports-type gamer using competitive settings then the PCIe bandwidth weakness of the 6500 XT is likely going to be far less of an issue.

I’ve also run the same tests using DX11, and curiously, when memory limited the 6500 XT does better, as seen in the ultra and very high quality data, while the high and medium results are similar to what we saw with Vulkan.

Watch Dogs: Legion is interesting because we previously tested this game with the medium quality preset, where we found PCIe 4.0 to be 9% faster, yet even with the lowest quality preset that margin doesn’t shrink; in fact, it grew to 11%. That means there’s going to be at least a 10% advantage for PCIe 4.0 over 3.0 in this title with the 6500 XT.

It’s also worth noting that if you prefer single-player games and target 40-60 fps, prioritizing visual quality over high frame rates, the 6500 XT isn’t going to be for you, at least if you’re limited to PCIe 3.0. Here the very high quality preset ran 29% faster using PCIe 4.0, with 44 fps at 1080p, as opposed to just 34 fps using PCIe 3.0.

F1 2021 didn’t play nearly as well on the 6500 XT when limited to PCIe 3.0. We saw 47% stronger performance with the ultra high preset when using PCIe 4.0, so those of you prioritizing visuals over frame rates won’t be best served by the 6500 XT. With the high quality preset the PCIe 4.0 configuration was 41% faster, then 37% faster using medium and, quite shockingly, still 24% faster using low.

Shadow of the Tomb Raider ran terribly using PCIe 3.0 relative to 4.0, though that was with the highest quality preset. There, PCIe 4.0 delivered 38% greater performance, a margin that shrank to 18% with the high preset, then 9% with medium and 6% with low. Still, the issue is that the game looks and runs great using the highest and high quality presets with PCIe 4.0, so losing this much performance on PCIe 3.0 simply isn’t acceptable.

Who Is It For?

You’ve waited a long time for AMD to release a budget RDNA2 GPU, and even longer to replace your RX 460, 470, 480, 560, 570 or 580, and this is what you’re now faced with: the 6500 XT, with an asking price of $200 that will likely end up being $300+.

I could have gotten on board with the whole “it only has 4GB of VRAM, so miners won’t buy it” argument. If only the rest of the GPU weren’t so heavily cut down.


Avoiding the PCIe 3.0 performance hit, or significantly reducing it, will be relatively easy in most games: just opt for low quality textures. But is that really the point? I know some people are going to make this argument, so we’ll head it off now by asking: why? Why would you compromise on the most important visual quality setting just to avoid an unnecessary performance hit in a PCIe 3.0 system?

With the 6500 XT you’re forced into doing just that, but with the GTX 1650 Super, for example, you don’t have to, despite it also featuring a small 4GB VRAM buffer. This is because it supports the full PCIe x16 bandwidth. As a result, it’s able to deliver perfectly playable performance in Shadow of the Tomb Raider at 1080p using the highest quality preset, with 67 fps on average.

Some will claim this testing is unrealistic or unreasonable, but it’s really not. Even the 6500 XT was good for 65 fps in our Shadow of the Tomb Raider test… using PCIe 4.0. When a card loses nearly 30% of its performance moving to a PCIe specification that even an RTX 3090 can’t saturate, you can’t blame anything but the graphics card.

The fact is, you can’t compromise on both VRAM capacity and PCIe bandwidth. To a degree you can get away with handicapping one or the other, but doing both is disastrous for performance. It also creates a situation where the 6500 XT is overly sensitive to exceeding the VRAM buffer, even by a little bit, whereas products such as the GTX 1650 Super have a lot more leeway.

But as I mentioned in the introduction, the PCIe x4 bandwidth limitation is just one of the major issues with the 6500 XT. Another problem is that hardware encoding isn’t supported, so ReLive, AMD’s equivalent of ShadowPlay, can’t be used with the 6500 XT, whereas on an old RX 470, for example, it could. Whether you’re spending $200 or $2,000 on a GPU, you want hardware encoding. And frankly, GPU hardware encoding is more critical on low-end systems that simply don’t have CPU resources to spare.

You’re also limited to just two display outputs, which could be a deal breaker for some. Most importantly, this was not a limitation on much older products such as the RX 480.

The RX 6500 XT also forgoes AV1 decode support, making it a fairly ordinary choice for a modern home theater PC. AV1 content is already pretty widespread, so retiring this graphics card to an HTPC in a few years’ time won’t be an option for most.

Let’s assume for a second that you’re happy sacrificing the features just mentioned, and that you can achieve the PCIe 4.0 performance shown here on a PCIe 3.0 system by heavily reducing texture quality, and likely other quality settings. What is the Radeon RX 6500 XT worth?

In our opinion, it’s a hard pass at $300. You’d be far better off buying a used graphics card such as the GTX 1650 Super, RX 5500 XT or RX 570/580 4GB. Given the GTX 1650 Super is a PCIe 3.0 x16 product, it’s going to be a much safer bet for the vast majority of gamers. Frankly, the 6500 XT would need to cost no more than $200, and even in this market, I’d rather spend $100 more on the GTX 1650 Super to get the features I need. Odds are you’re gaming on a PCIe 3.0 system, which makes the GTX 1650 Super the obvious choice.

I can’t help but feel AMD could have done so much better here, but it looks as though the Radeon brand is destined to flounder. That’s a real shame given how competitive they became at the high-end this generation, though even there they’ve failed to capitalize due to supply woes.