The humble personal computer has been a part of our lives, directly or indirectly, for nearly five decades, but its most significant transformations have transpired over the last thirty years.

During this time, the PC has utterly transformed in appearance, capability, and usage. While some aspects have barely changed, others are unrecognizable when compared to machines of the past.

And of course, change is inevitable when billions of dollars of revenue are at stake. However, few could have predicted exactly how these developments would unfold.

Join us as we examine the PC’s metamorphosis from awkward, hulking beige boxes to an astonishing array of powerful, colorful, and striking computers.

Getting more with Moore: Exponential growth

When Gordon Moore, director of R&D at Fairchild Semiconductor, wrote about trends in chip manufacturing in 1965, he speculated that an average processor could contain over 60,000 components within a decade. His prediction not only proved correct but also became an accurate model for many future decades.

By 1993, a premium PC boasted chips that were unimaginable thirty years earlier. Intel’s Pentium contained an astounding 3.1 million transistors within a chip just half of a square inch (294 mm²) in size.

Its processing power was equally impressive, executing over 100 million instructions per second, thanks to its clever design and relatively high clock speed of 60 MHz.

Since the mid-1970s, Moore’s Law has continued unabated thanks to ongoing advances in the design and manufacturing of semiconductor integrated circuits. It is challenging to grasp just how much more capable chips are now, primarily because the metrics used in the past are not particularly relevant today.

The Pentium could crunch two instructions per clock cycle under the right conditions, but these had to come from the same thread. An equivalent CPU today, if tested in the same manner, would give results of 300,000 to 800,000 million instructions per second.

If this were solely due to clock speeds, the average desktop PC would be equipped with 400 GHz CPUs. However, as they typically range between 4 and 5.5 GHz, there’s clearly another factor at play.
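The arithmetic behind that comparison can be sketched as a rough back-of-the-envelope check rather than a benchmark. The function name `implied_clock_ghz` is hypothetical, and the sketch assumes the modern figures are measured in millions of instructions per second, the same unit as the Pentium's roughly 100 million at 60 MHz.

```python
# Figures quoted in the article for the original Pentium.
PENTIUM_MIPS = 100       # millions of instructions per second
PENTIUM_CLOCK_MHZ = 60   # clock speed in MHz


def implied_clock_ghz(modern_mips: float) -> float:
    """Clock speed (in GHz) a CPU would need if the entire
    throughput gain over the Pentium came from clock speed alone."""
    speedup = modern_mips / PENTIUM_MIPS
    return PENTIUM_CLOCK_MHZ * speedup / 1000  # convert MHz to GHz


# Assumed range for a modern CPU, in millions of instructions per second.
for mips in (300_000, 800_000):
    print(f"{mips:,} MIPS -> {implied_clock_ghz(mips):.0f} GHz")
# 300,000 MIPS -> 180 GHz
# 800,000 MIPS -> 480 GHz
```

Under these assumptions, the implied clock speed lands somewhere between 180 and 480 GHz, which is the neighborhood the 400 GHz figure comes from.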

Most of this improvement is attributed to the fact that a modern processor can work on multiple threads simultaneously. Despite this, multicore chips still execute only a handful of instructions per clock cycle. The real performance gains are much higher, primarily due to improvements in other areas of a PC’s computing ability, namely, memory.

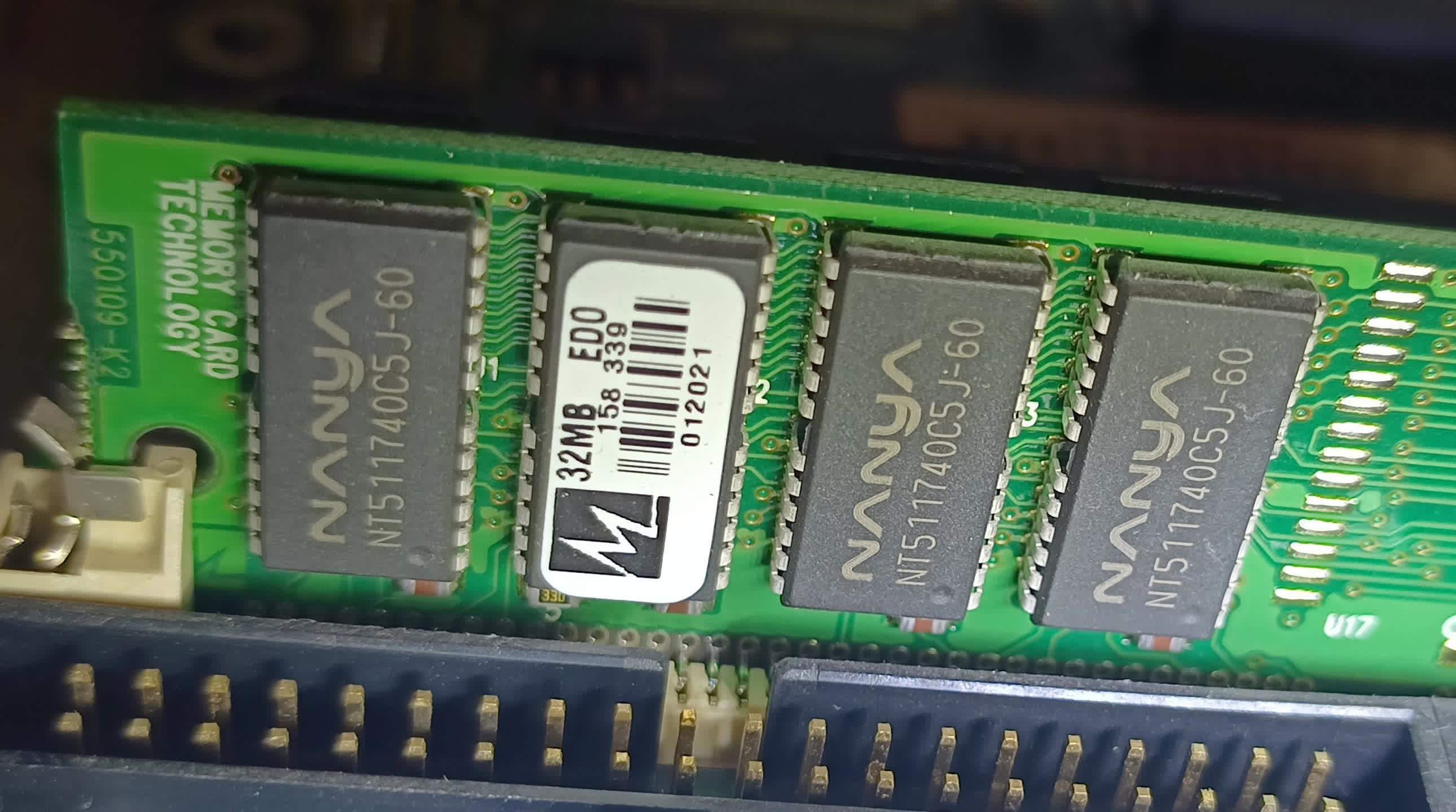

Thirty years ago, it would have cost a small fortune to equip your new PC with anything more than 8 MB of Fast Page Mode (FPM) or Extended Data Out (EDO) DRAM. The speed was hardly mentioned, as it was a minor factor compared to the amount of memory available.

Today, even basic laptops come with RAM boasting capacities that are 1,000 times greater than their distant predecessors, and the speed of accessing the data is significantly faster.

While FPM DRAM could barely achieve 18 million transfers per second (MT/s), a PC sporting DDR4-2400 (i.e., 2400 MT/s) would be considered slow by today’s standards.
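To put those transfer rates in perspective, here is a minimal sketch that converts them into theoretical peak bandwidth. It assumes a 64-bit (8-byte) data bus for both memory types and a single channel for DDR4; the function name `peak_bandwidth_mb_s` is hypothetical, and real-world throughput would be lower than these peaks.

```python
BUS_WIDTH_BYTES = 8  # assumption: 64-bit data bus for both memory types


def peak_bandwidth_mb_s(transfers_mt_s: float,
                        bus_width_bytes: int = BUS_WIDTH_BYTES) -> float:
    """Theoretical peak bandwidth in MB/s: transfers per second
    multiplied by the number of bytes moved per transfer."""
    return transfers_mt_s * bus_width_bytes


fpm = peak_bandwidth_mb_s(18)      # FPM DRAM at 18 MT/s
ddr4 = peak_bandwidth_mb_s(2400)   # DDR4-2400, single channel

print(f"FPM DRAM:  {fpm:.0f} MB/s")
print(f"DDR4-2400: {ddr4:.0f} MB/s per channel")
print(f"Ratio:     {ddr4 / fpm:.0f}x")
# FPM DRAM:  144 MB/s
# DDR4-2400: 19200 MB/s per channel
# Ratio:     133x
```

Because the bus width is assumed to be the same on both sides, the ratio of roughly 133x depends only on the transfer rates quoted above.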

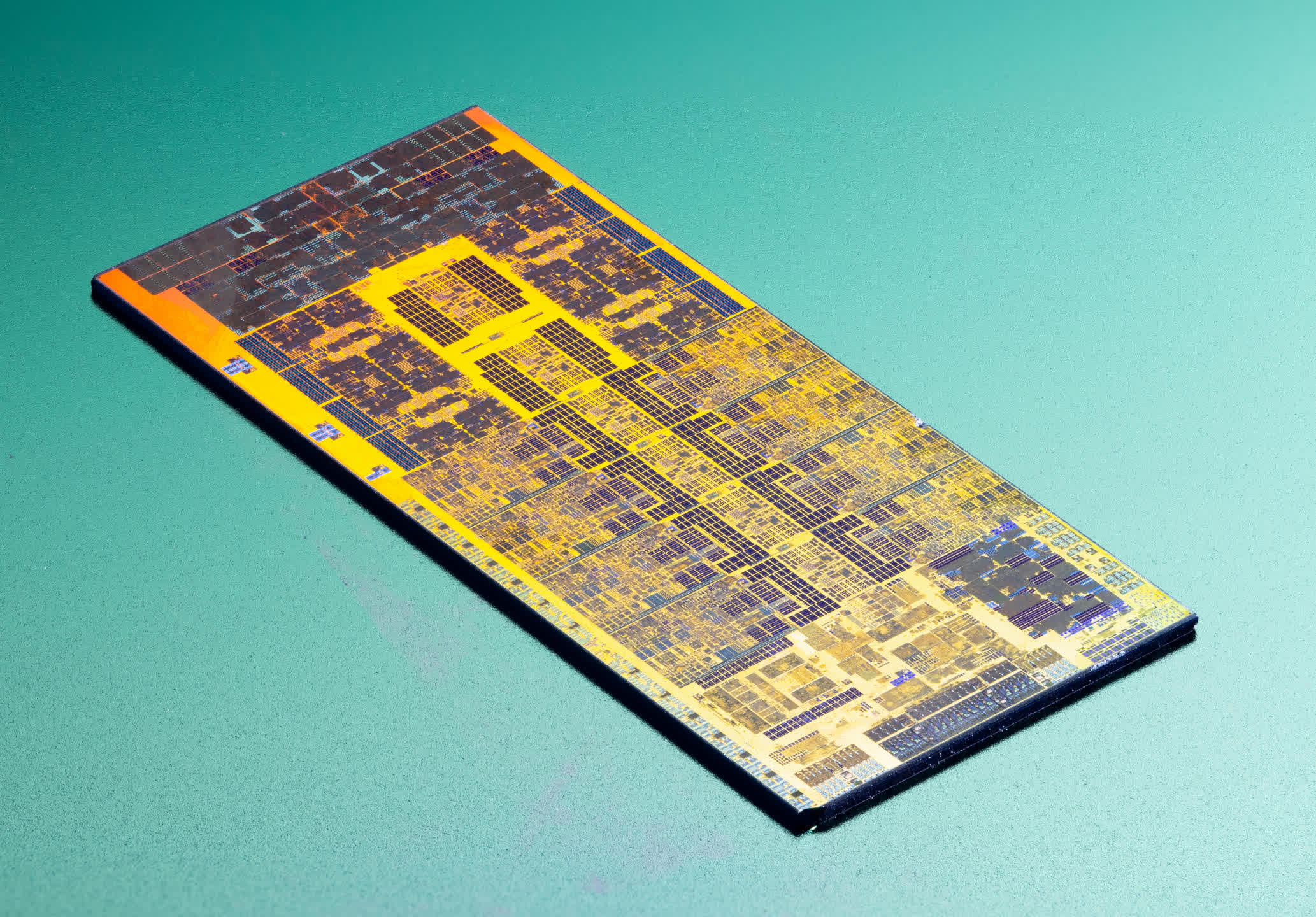

The ability to make semiconductor components ever smaller has also resulted in today’s CPUs packing in vast amounts of internal memory, called cache, which operates at even higher speeds. Intel’s P5 design boasted 8 kB to store instructions and another 8 kB for data, with up to 256 kB of SRAM chips soldered onto the motherboard.

The recent Core i9-13900K (above) might not seem like much of an improvement on the surface. Its Performance cores have 32 kB and 48 kB for instructions and data, respectively, while the E-cores boast 64 kB and 32 kB. However, these are supported by an additional 2 MB of cache, and a further 36 MB shared across all the cores, all located deep within the CPU itself.

There are numerous other enhancements, such as superior branch predictors, but perhaps the most significant indicator of how much processors have improved since the early 1990s is demonstrated by mobile or handheld devices.

Laptops from that period housed CPUs that were typically low-voltage, low-clocked versions of desktop models, whereas PDA handhelds relied on 8-bit processors from the previous decade.

Today, you can buy watches that have CPUs more powerful than the original Pentium. Samsung’s Galaxy Watch 5, for example, features a dual-core, 1.18 GHz Arm Cortex-A55 – a chip that can execute instructions 20 to 100 times faster than the Pentium.

Of course, our expectations of these devices are considerably higher today (internet browsing, video streaming, image processing, gaming, and so on), making a direct comparison somewhat unfair, as portable PCs back then simply didn’t have the supporting technology to handle these tasks.

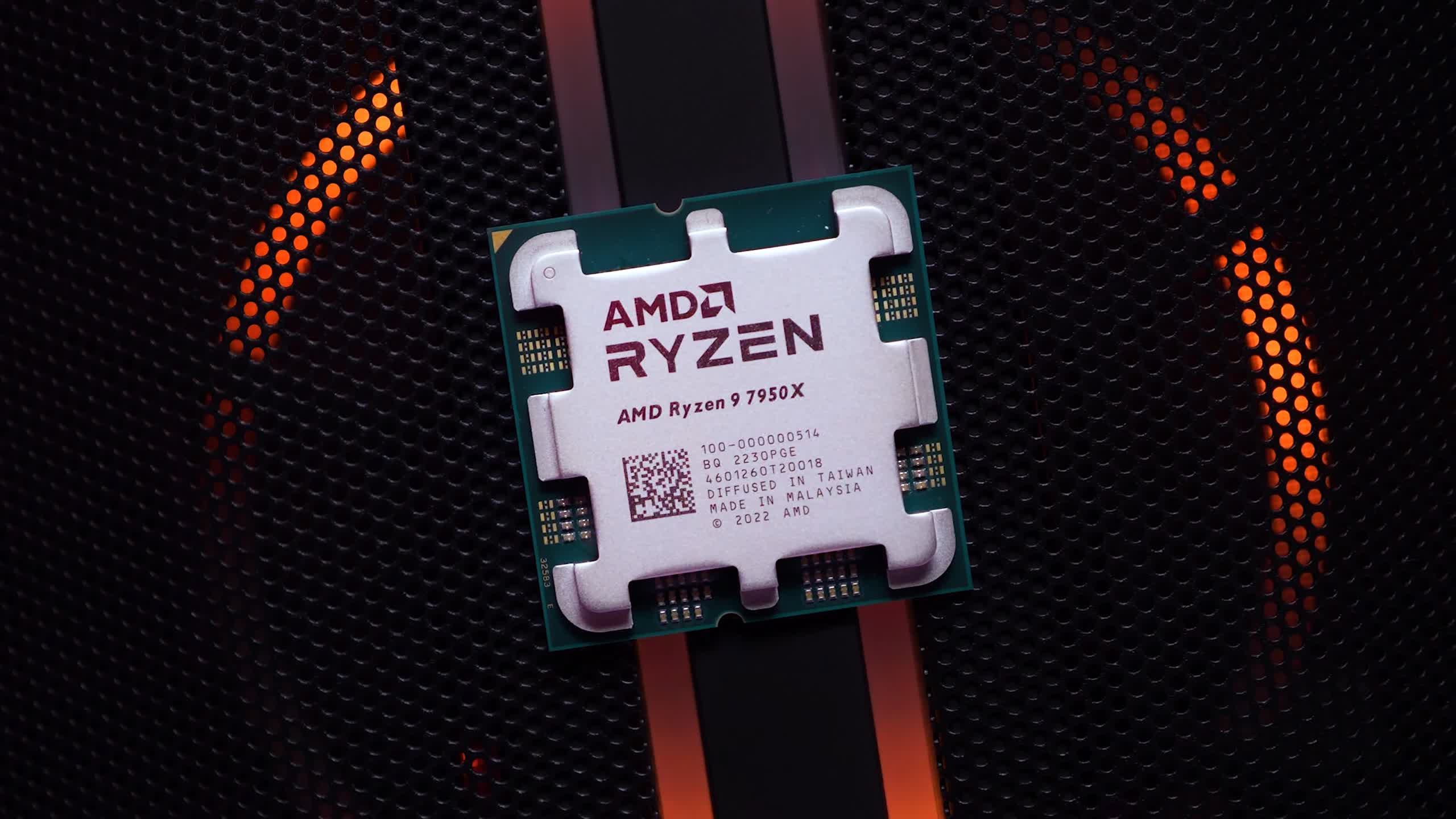

In many ways, the modern central processor is too powerful for the majority of use scenarios. Despite the likes of AMD and Intel investing billions of dollars into research and development, successive generations of chips show only marginal improvements each time.

Even in the realm of 3D graphics accelerators (GPUs), which were virtually non-existent in the consumer market in 1993, there are signs of diminishing advancements.

Thirty years ago, processors were never fast enough. Now, it takes specialized applications to truly stretch them to their limits.

Power to the people: Software and ease of use

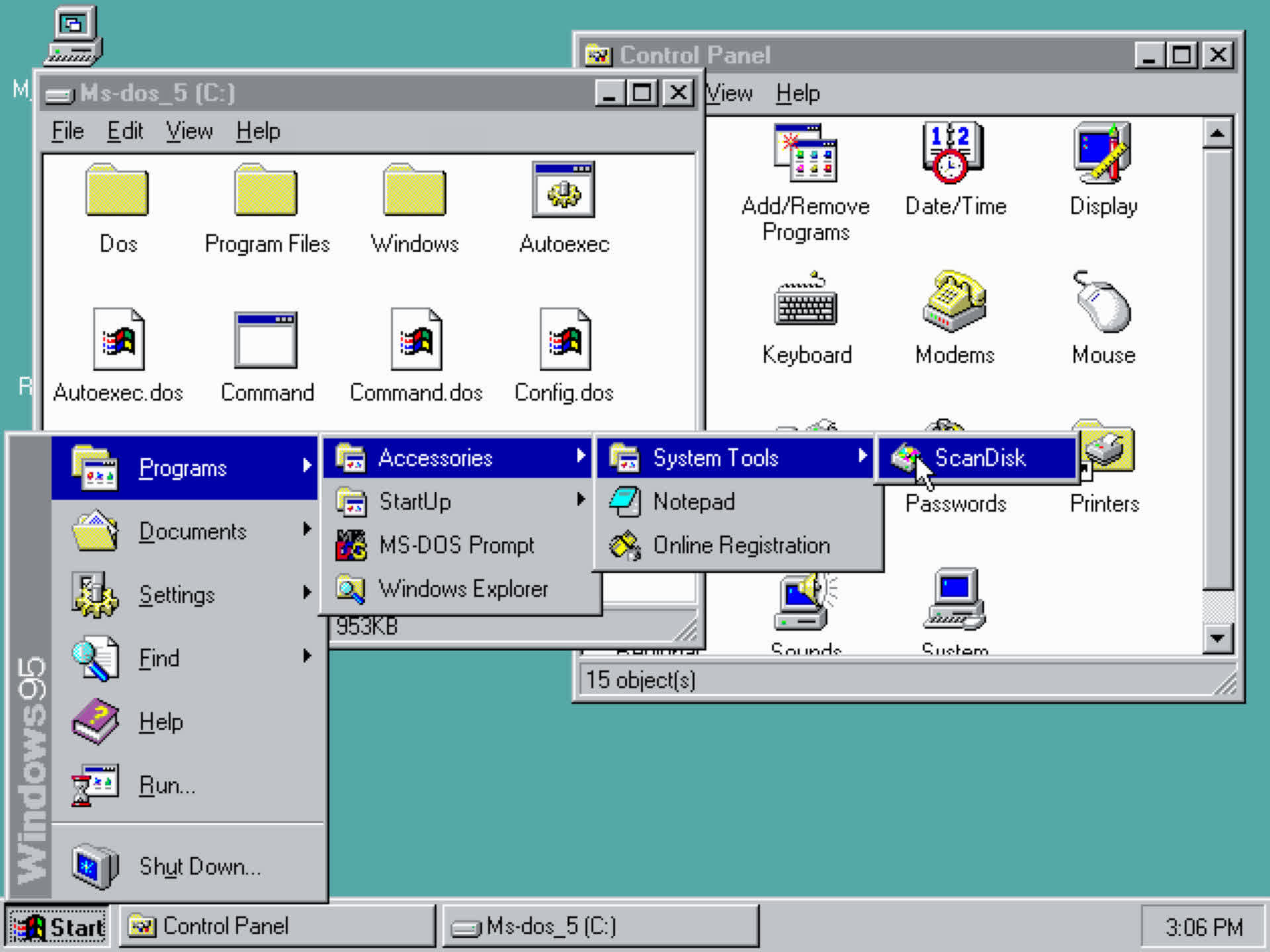

While present PCs are vastly more powerful than those from the 90s, our ability to use them has evolved beyond recognition. One of the most significant advances in computing was the introduction of the GUI. Instead of wading through endless lines of commands, you could achieve the same outcomes by simply interacting with icons logically designed to accurately represent files, folders, and programs.

Microsoft’s Windows 3.0 (1990) and Windows 95 (1995), along with Apple’s System 7 (1991), played instrumental roles in making PCs far more user-friendly. As a result, computers became more popular with regular people, as less technical knowledge and training were required. However, home computers could still be somewhat difficult to manage, especially when trying to get peripherals and expansion cards to function properly.

Thanks to improved processing power along with the emergence of universal standards, these difficulties gradually gave way to what is generally known as “plug and play” – the ability to attach a device to a computer and let the operating system handle all of the setup and configuration.

Connection systems such as USB (1996) and PCI Express (2003) extended this convenience to the point that the only input required from the user was to click the ‘OK’ button on a few prompts.

Wireless standards, such as Bluetooth and Wi-Fi, have also played a significant role in bringing PCs to the masses. The integration of these standards into operating systems has significantly improved the user experience. Setting up and connecting to a network or a specific device, once classic examples of computing woes, can now be achieved through a handful of clicks.

Motherboards were once adorned with tiny DIP switches that needed to be correctly adjusted for the components housed within them. Now, one simply needs to insert the part, and the software will handle the rest of the configuration. While many PC users are comfortable installing drivers themselves, operating systems like Windows and macOS can readily do it for you.

Inevitably, the fundamental software required to run a computer has ballooned in size. While Windows 95 required around 50 MB of disk space, the latest version of Microsoft’s operating system demands at least 64 GB. That’s over 1,000 times more storage, and the same holds true for the drivers that support hardware such as graphics cards.

This increase also means there is now a far greater scope for something to go wrong when vendors update part of this code, resulting in the patch needing another update just to fix those problems. This ballooning has been mostly offset by the ever-decreasing cost of digital storage and the prevalence of computers with permanent internet connections, which enable automatic software updates.

Today’s average PC is an incredibly complex system, yet millions of people around the world use them with only a modicum of training and understanding. The price for this convenience is mostly hidden from view, but we are perhaps reminded of it more often than we’d like.

Freedom of form (factors)

If you check out some copies of PC Magazine from 1993 and leaf through the multitude of adverts for different PC systems, you’ll likely notice that they all share one common trait – their size, shape, and color.

Regardless of the brand, almost every company chose to replicate IBM’s original design and saturate the market with hulking, beige boxes.

Almost every peripheral was constructed using the same plastic, in the same color. A few vendors dared to deviate and offer something different in appearance, such as certain models in the Compaq Presario range. While these still conformed to the prevalent beige standard of the era, at least their cases were more sculpted.

To be fair to vendors of old, there were few expectations for the form of a PC to be anything different from that set by IBM back in the 1980s. Devices that could be added to your computer were restricted in their form, too, as floppy and CD drives were compulsory elements of any machine, and they were obliged to follow the standard format.

While some of these limitations persist today, the vast array of PC formats available shows just how much has changed. Black has become the color of choice for most electronic devices, primarily for aesthetic reasons (it conceals dirt and other marks better than beige), although white, silver and other softer pastels are growing in popularity.

But it’s the size and shape of the PC that have changed most dramatically – from sleek, inconspicuous small form factor (SFF) systems to gargantuan behemoths, there are few limits to how small or large a home computer can now be. Manufacturers of PC cases in the past would have touted their products’ ability to house as many 5.25″ or 3.5″ devices as possible; now it’s more about the number of fans and cooling options, how small the footprint is, or how quiet the case runs.

And while today’s computers are generally monotone on the outside, the use of LED lighting to provide internal colors and patterns is now a standard feature of any PC bearing the “gaming” tag. Of course, office machines and workstations forgo such frivolities and maintain appearances not too dissimilar from their 90s counterparts, but it’s rare to see any model come with a DVD drive, for example.

So how did this transformation occur? Some argue that the change was sparked by Apple when it released the first iMac in 1998. Its eventual popularity, with a translucent, colorful shell, demonstrated that consumers were receptive to such designs, and while the world of IBM PC-compatible machines incorporated some elements, the fundamental layout still strongly resembled the classic design.

There isn’t one specific reason for all of the changes, but as PCs became increasingly cheaper and more user-friendly, new markets opened up to capitalize on the computing zeitgeist. The ubiquity of RGB fans and glass-paneled cases can be directly attributed to PC enthusiasts who wanted to customize their systems further.

Hardware manufacturers followed trends on discussion forums and adapted their products to allow for simpler and cleaner builds – rickety, cheap-feeling PCs with a jumble of wiring inside gave way to sturdy metals and glass panels, showcasing components with connections hidden from view.

SFF computers owe no small thanks to the likes of the Apple Mac Mini, Intel’s NUC format, and the Raspberry Pi – or is it the other way around? Either way, today one can have a home media server no larger than a weighty novel, with looks that wouldn’t disgrace a discerning bookcase.

Laptops still bear obvious design ties to the past. Significant advances in build quality, screen and battery technologies, coupled with the rise of NAND flash for storage, have thankfully made them sturdier and more pleasant to use, but their form remains largely unchanged. Even so-called 2-in-1 devices, born from the popularity of tablets (driven by Apple’s iPad), are still fundamentally laptops in nature.

This isn’t to say that everything is now better. The relentless drive to maintain a market position has resulted in laptops becoming arguably too slim and light, which restricts the amount of wired connectivity. The same is true for desktop PCs – those with any gaming credibility typically sport visual features that contribute nothing to performance and only increase the price tag.

But at least one cannot criticize the myriad of choices, covering every possible use and configuration imaginable, that are available to us now.

Imagination unhindered

With the right know-how, you can use a PC to carry out in-depth research; create sophisticated documents for publication; manage and process complex data arrays; create music, videos, and 3D graphics. Of course, many people only consume such content on their devices, and multi-billion dollar industries exist purely for computer-based enjoyment.

But browse a typical computer magazine from the early 90s and you’ll notice a somewhat different story – everything feels heavily ‘productivity’-oriented, with only a passing nod to entertainment.

That’s not to say home computers weren’t used for fun; there was indeed a thriving PC gaming industry. However, there was a bigger focus on consoles given that the combined sales of Nintendo’s SNES and the Sega Genesis significantly outnumbered the entire PC market.

PCs used to be incredibly expensive, after all, and this was especially true for high-end models. In 1993, if you worked in graphic design and wanted a machine equipped with Intel’s new Pentium CPU, 32 MB of RAM, 500 MB of disk storage, a 2 MB graphics card, and a 20-inch high-resolution monitor, you’d be expected to fork over more than $9,000.

You could certainly spend that kind of money now, but back then, the median US household income was around $30,000 – in other words, a home user would be expected to spend roughly a third of their entire yearly income on that computer. Those specifications were top-of-the-line for that period, but achieving the same today can be done for just half that amount of money, and median income has more than doubled since the early 1990s.

Moreover, you don’t actually need the best hardware to perform any task now. Even highly complex workloads, such as AI processing and graphics rendering, can be undertaken on standard components, within a reasonable budget. Yes, the system will take longer to complete this work, but it can do it; the specialist hardware of the 90s is now commonplace in all PCs.

Want to make your own Toy Story? If you have the talent and don’t mind waiting a few hours for the tasks to finish, then even a budget PC will suffice.

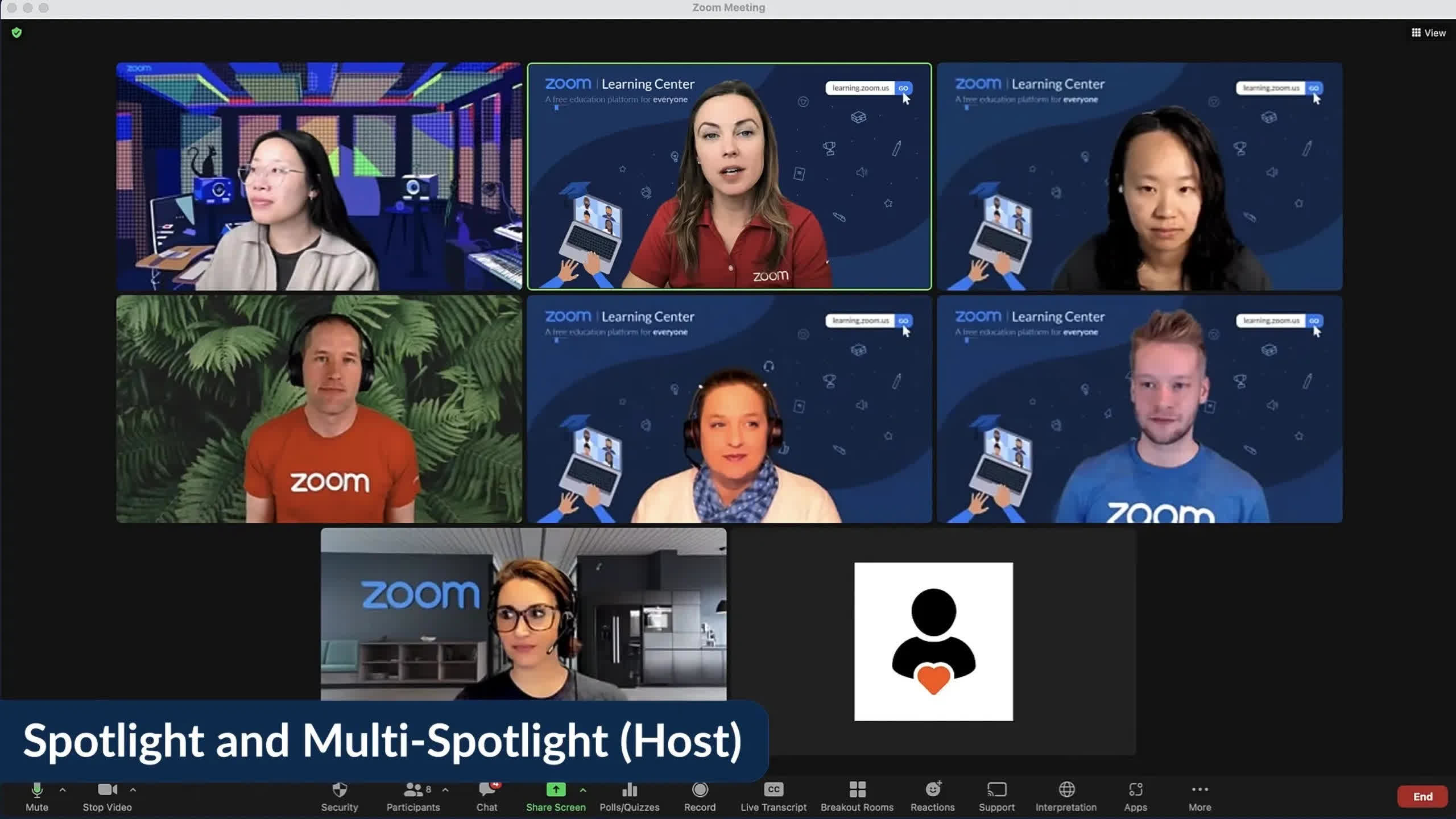

It’s not just the levels of creativity that home computers now offer us – we can easily work and play together, all in real-time, with dozens of people seamlessly connected on the same task or game. Cheap, high-speed Internet deserves much of the credit for this, as do advances in screen and camera technologies.

The Connectix QuickCam, one of the very first consumer-grade webcams, debuted in 1994, but only produced 320 x 240 grayscale footage, at 15 frames a second. Initially only available for Apple Macs, it retailed at $100 – that’s $210 in today’s money. But for about three times that amount, today you can get an entire computer wrapped around a webcam that’s full color and produces video with 12 times more pixels, at double the frame rate.
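As a quick sanity check on the "12 times more pixels" claim, the sketch below compares the QuickCam's 320 x 240 frame against a few common video resolutions. The article does not name the modern resolution, so the candidate list and the helper `pixel_ratio` are assumptions made purely for illustration.

```python
QUICKCAM_WIDTH, QUICKCAM_HEIGHT = 320, 240
QUICKCAM_PIXELS = QUICKCAM_WIDTH * QUICKCAM_HEIGHT  # 76,800 pixels per frame


def pixel_ratio(width: int, height: int) -> float:
    """How many times more pixels a frame has than a QuickCam frame."""
    return (width * height) / QUICKCAM_PIXELS


# Common resolutions, chosen here only for comparison (not from the article).
candidates = {
    "640 x 480": (640, 480),
    "1280 x 720": (1280, 720),
    "1920 x 1080": (1920, 1080),
}

for name, (w, h) in candidates.items():
    print(f"{name}: {pixel_ratio(w, h):.0f}x the pixels")
# 640 x 480: 4x the pixels
# 1280 x 720: 12x the pixels
# 1920 x 1080: 27x the pixels
```

A 12-fold increase matches 1280 x 720 (720p) exactly, and doubling the QuickCam's 15 frames a second gives 30.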

With the right hardware, images and videos can be recorded or streamed live anywhere in the world.

Anyone at home can take this data, edit and enhance it, creating new media for education and entertainment. The days when extremely expensive computers and specialist, cutting-edge knowledge were required to do this are long gone.

The capabilities of a modern PC and its associated peripherals, rendered thoroughly accessible through relentless advancements in software, have made all of this possible.

Futurology: Now and ahead

As has always been the case, predicting the typical PC’s future configuration – even just a few years ahead, let alone another 30 – remains a challenging and potentially risky endeavor. Many fundamental aspects have remained unchanged over the past three decades, such as processors based on the x86 architecture, as well as the basic setup of RAM, storage, graphics adapter, and connectivity options.

Future machines will probably adhere to this straightforward structure, but the underlying designs of the various components are likely to be substantially different.

We might see the humble wire phased out, aside from the power cable connected to the PC’s back (wirelessly powering a 700 W computer would be less than ideal!). Currently, wires are essential for providing current to internal parts and transferring data to some devices. However, these could be replaced with standardized slots offering both functions.

We already have this now – PCI Express and M.2 slots are used for graphics cards and solid-state drives. While the former still need hefty power cables, some vendors are already trying to minimize the number of cables required, and there’s nothing to say that this won’t eventually all be absorbed into the motherboard.

How much more powerful the PCs of 2053 will be is anyone’s guess. We are beginning to approach the limits of what semiconductor process nodes can achieve in large-scale processor fabrication. Still, it will be quite some time before we hit an insurmountable wall. The desktop CPU of the future might not operate at 50 GHz or process thousands of threads per cycle, but it should more than capably handle the workloads awaiting it.

Computers already come in every imaginable size, shape, and format, so predicting potential differences in this regard is challenging. We may see an increased emphasis on recycling and reusing various parts due to the current alarming amount of e-waste.

The same could be said about the trend in power consumption. High-end CPUs consume 10 to 20 times more energy than those from the ’90s, and the largest graphics cards in a home PC use up to 100 times more. Our environmental concerns may dictate the future PC’s design more than usage needs or technological advancements.

Over the past 30 years, we’ve witnessed the extraordinary evolution of the personal computer. These machines have revolutionized the way we live, work, engage in entertainment, and communicate with friends and loved ones. The once clunky, unattractive, and cumbersome machines have evolved into sleek, powerful devices as stunning as they are capable.

Anticipating what the future holds is truly exciting.