These days, it’s rare to see a new hardware company break ground in the world of PCs. However, 30 years ago, they were popping up everywhere, like moles in an arcade game. This was especially true in the graphics sector, with dozens of firms all fighting for a slice of the lucrative and nascent market.

One such company stood out from the crowd and, for a brief few years, held the top spot for chip design in graphics acceleration. Their products were so popular that a large share of the PCs sold in the early-to-mid 90s sported their technology. But only a decade after its birth, the firm split up, sold off many assets, and rapidly faded from the limelight.

Join us as we pay tribute to S3 Graphics and see how its remarkable story unfolded over the years.

The formation of S3 and early successes

The tale begins in early 1989, the era of the Apple Macintosh SE/30, MS-DOS 4.0, and the Intel 80486DX. PCs then generally had very limited display output: drawing was handled by the CPU, often in just single-bit monochrome. With a suitable add-in card or expansion peripheral, however, accelerated rendering in 8-bit color was possible.

Prices for such extras started at around $900 and often went much higher. It was for this market that Dado Banatao and Ronald Yara founded a new startup in Santa Clara, California: meet S3, Inc. Banatao’s story is worthy of its own tale, as is Yara’s; by this time, both were highly successful technology entrepreneurs and electrical/electronics engineers.

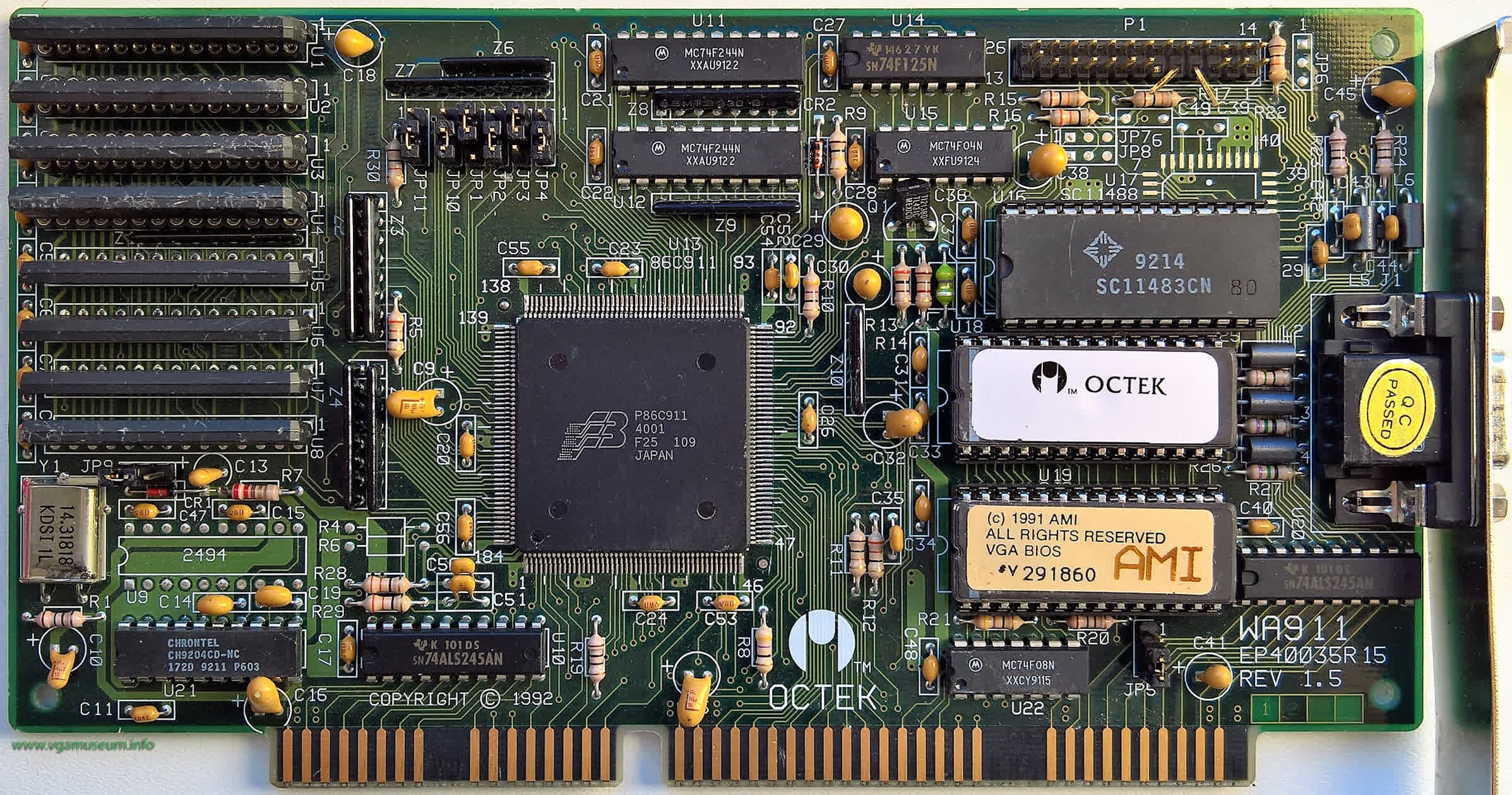

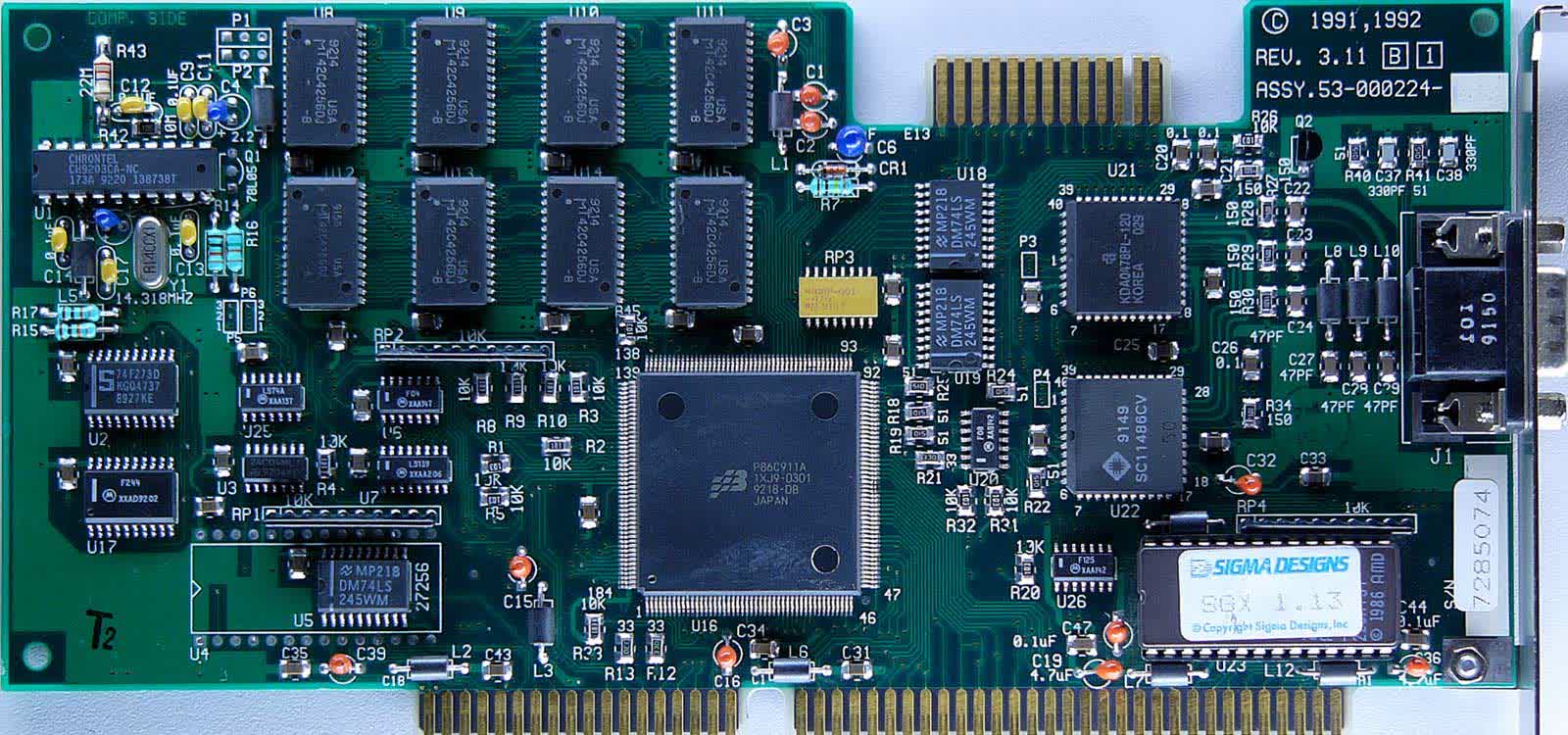

Within two years, they had their first product for sale: the P86C911 (also known as the S3 Carrera). This chip accelerated line drawing, rectangle filling, and the raster operations used by Windows, most of which involve what are known as bit block transfers (blits). In a blit, rectangular blocks of pixel data, which together make up the screen image, are copied, moved, or combined with one another.
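To make the idea of a blit with a raster operation concrete, here is a minimal software sketch. It is not how the P86C911 worked internally; it assumes a screen stored as rows of 8-bit palette indices, uses a handful of operations named after their Windows GDI counterparts (the function and table names are hypothetical), and ignores overlapping source and destination rectangles.

```python
from typing import Callable, Dict, List

# Hypothetical surface layout: rows of 8-bit palette indices.
Surface = List[List[int]]

# A few binary raster operations, named after their Windows GDI equivalents.
ROPS: Dict[str, Callable[[int, int], int]] = {
    "SRCCOPY": lambda src, dst: src,          # plain copy
    "SRCAND": lambda src, dst: src & dst,     # AND source into destination
    "SRCPAINT": lambda src, dst: src | dst,   # OR source into destination
    "SRCINVERT": lambda src, dst: src ^ dst,  # XOR source into destination
}

def bit_blt(dst: Surface, dx: int, dy: int,
            src: Surface, sx: int, sy: int,
            w: int, h: int, rop: str = "SRCCOPY") -> None:
    """Combine a w x h block of src (at sx, sy) into dst (at dx, dy) using rop."""
    op = ROPS[rop]
    for row in range(h):
        for col in range(w):
            s = src[sy + row][sx + col]
            d = dst[dy + row][dx + col]
            dst[dy + row][dx + col] = op(s, d) & 0xFF  # keep the result 8-bit
```

An accelerator did this per-pixel loop in hardware, freeing the CPU. The XOR operation shows why such operations were useful: blitting the same block twice with SRCINVERT restores the original screen, a classic trick for drawing and erasing cursors.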

Just as AMD and Nvidia do today, S3 relied on other companies to manufacture and distribute its chips, which add-in board vendors then bought for their own products. Famous names, such as Diamond Multimedia and Orchid Technology, released cards sporting 1 MB of VRAM, output as high as 1280×1024 in 16 colors, and a 16-bit ISA interface.

Reviews at the time were quite favorable: it wasn’t the fastest accelerator out there, but it was competitively priced. The benchmark for all so-called 2D accelerators then was ATI’s Mach series of chips, and where an ATI Graphics Ultra card powered by the Mach8 retailed at $899, cards with the S3 P86C911 could be had for as little as $499.

In those early days, S3 maintained a healthy development cycle and continued to improve and modernize its chip designs. By 1994, add-in board vendors were using the new Vision series of accelerators, offering 16-bit color output at 1280×1024 and up to 4 MB of 64-bit fast page mode (FPM) or extended data out (EDO) DRAM. There was even acceleration for MPEG video playback.
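The memory figures quoted for these cards follow from simple arithmetic: one frame needs width × height × bits-per-pixel ÷ 8 bytes, and 16 colors needs 4 bits per pixel (2⁴ = 16). The sketch below checks both configurations mentioned above against the stated memory sizes; the function name is hypothetical, and real cards also needed some memory for things like off-screen buffers and alignment, which this ignores.

```python
def framebuffer_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Memory for one full frame, ignoring any padding or alignment."""
    return width * height * bits_per_pixel // 8

KIB = 1024

# Configurations quoted in the text, with the memory size mentioned for each.
configs = [
    ("P86C911 cards: 1280x1024, 16 colors (4 bpp)", 1280, 1024, 4, 1024 * KIB),
    ("Vision cards: 1280x1024, 16-bit color", 1280, 1024, 16, 4096 * KIB),
]

for label, w, h, bpp, vram in configs:
    need = framebuffer_bytes(w, h, bpp)
    print(f"{label}: {need // KIB} KiB needed, "
          f"{vram // KIB} KiB on board, fits={need <= vram}")
```

This reports 640 KiB for the 16-color mode on a 1 MB card and 2,560 KiB for 16-bit color on a 4 MB card, so both fit with room to spare.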

Again, these chips weren’t the fastest available, but they were significantly cheaper than the competition. Naturally, this caught the eye of system builders, which is how S3 chips came to grace many people’s first PC.

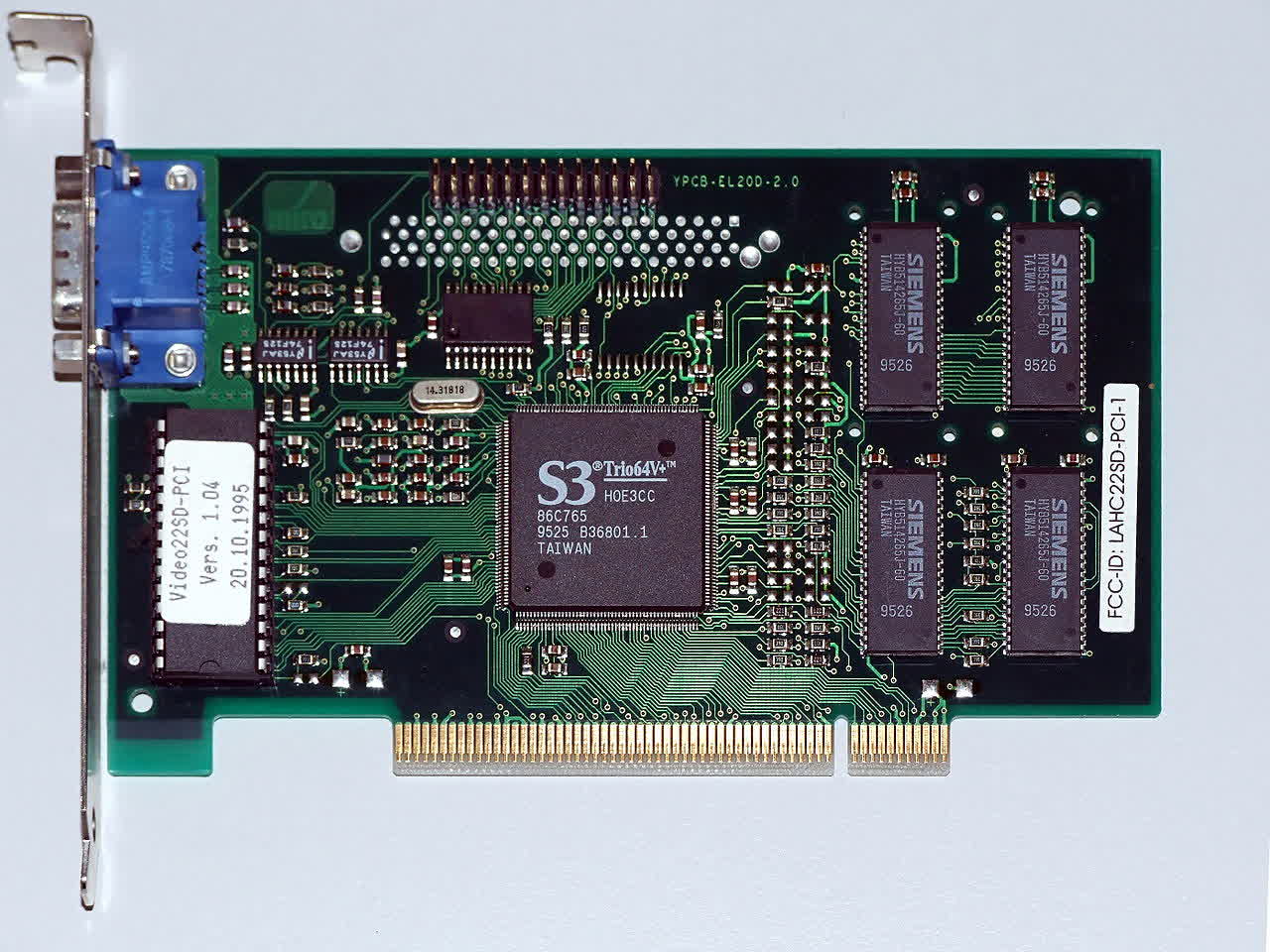

A quick glance at the image above, from German card vendor Spea, highlights a particular issue that manufacturers faced in the mid-90s: multiple separate chips were required to handle various roles. At the least, one was needed for the primary clock and another for the digital-to-analogue conversion (i.e. the RAMDAC).

To address this, S3 released the Trio chip series a year later, which integrated the graphics acceleration circuitry, the RAMDAC, and the clock generator into a single chip. The result was a notable reduction in costs for card vendors, which pushed S3’s popularity even further, giving them the lion’s share of the display adapter market.

By 1995, game consoles such as the Sony PlayStation and Sega Saturn had shown where the graphics market was heading: 3D. S3, then at the height of their powers, were one of the first chip designers to meet these new demands with an “all-in-one” offering.

Given how well they had mastered 2D and video acceleration, how hard could 3D be?

Another dimension and the first failures

The best selling PC game in 1995 was Command & Conquer, a real-time strategy game initially released for DOS that used 2D isometric graphics. However, console owners were being treated to 3D images, similar to those being generated in arcade machines.

So the pressure was on for hardware makers to provide acceleration in this area too. Software support was already available: OpenGL was by then 3 years old, and Microsoft had snapped up RenderMorphics at the start of 1995 in order to integrate its Reality Lab API into the forthcoming Windows 95 (where it would ultimately become Direct3D).

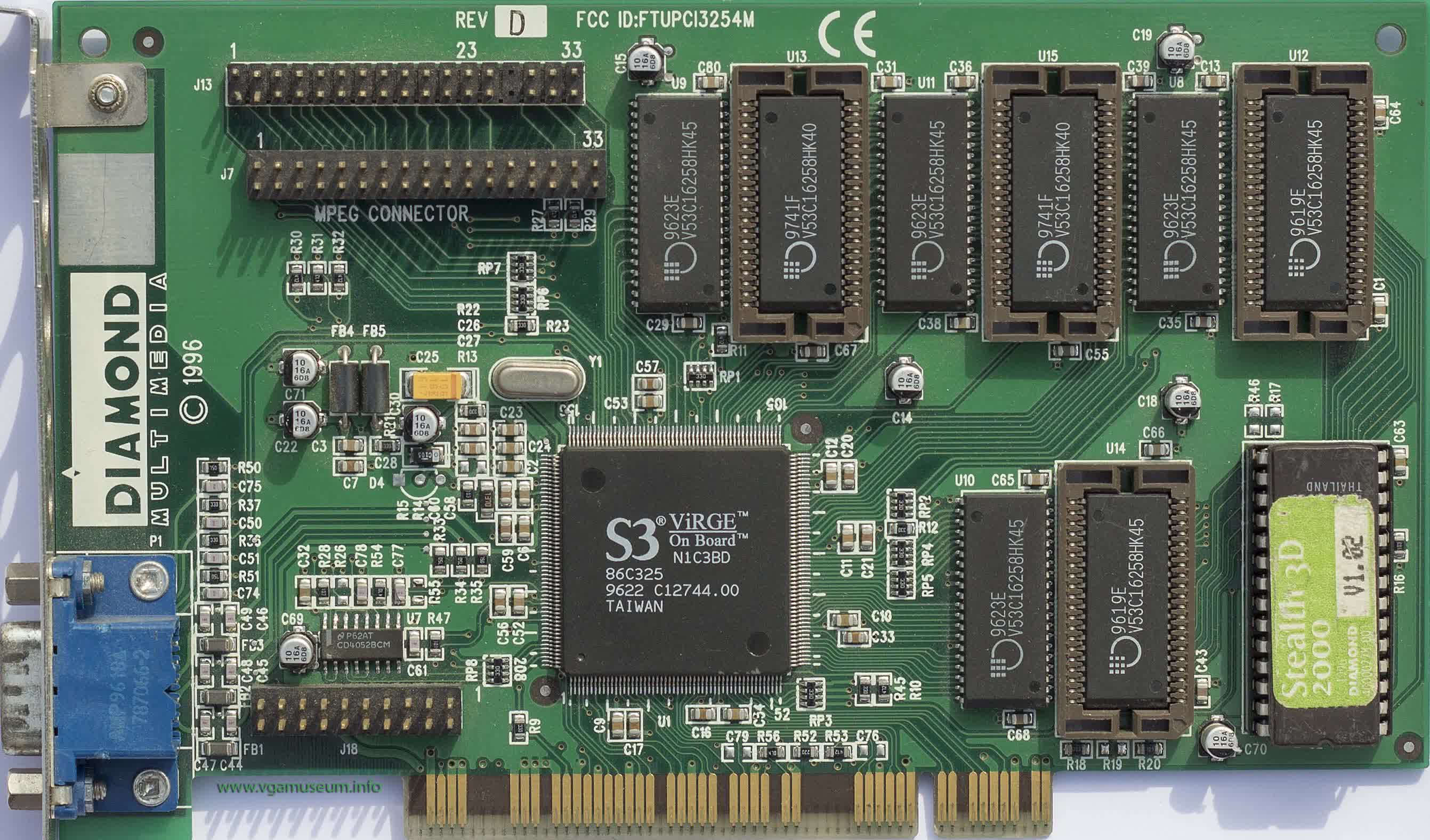

By the end of the year, S3 had launched the ViRGE (‘Virtual Reality Graphics Engine’) chip: essentially an improved Trio with some 3D capability (marketed as the S3d Engine) shoehorned into it.

Add-in cards using the processor weren’t readily available until early 1996, and these first offerings met with a lukewarm reception. Up to this point, 3D games on the PC had nearly always been rendered by the CPU, and when handling basic polygons, lit with Gouraud shading and with unfiltered textures applied, the ViRGE was generally a little faster than the best CPUs of the time.

However, with any additional 3D feature applied (such as texture filtering or perspective correction), performance would nosedive, especially at resolutions of VGA (640×480) or higher. There were a number of reasons for this, but two stood out.

Firstly, developers had to use S3’s own API to take advantage of the 3D acceleration, as S3’s drivers initially offered no OpenGL support. Proprietary APIs aren’t necessarily worse than open standards, but once other manufacturers released 3D chips with full OpenGL compatibility, few developers bothered to make S3d modes for their games.

With so few programmers working with the API, there was too little external investigation and feedback to help improve it. Secondly, there was the hardware itself: texture filtering was exceptionally slow, taking far too many clock cycles to fetch and blend the texels for each bilinear-filtered pixel. At VGA resolution, the chip would simply bog down under the workload, dragging the frame rate into the dirt.
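Why is bilinear filtering so demanding? Each filtered sample reads the four texels surrounding the sample point and performs three linear interpolations per color channel, and that has to happen for every textured pixel on screen. The sketch below shows the arithmetic on a single-channel texture; it assumes texel centers sit at half-integer coordinates and clamps at the edges, and the names are hypothetical. It says nothing about how the ViRGE itself scheduled this work.

```python
import math
from typing import List

Texture = List[List[float]]  # single-channel texels, indexed tex[y][x]

def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation between a and b by fraction t."""
    return a + (b - a) * t

def sample_bilinear(tex: Texture, u: float, v: float) -> float:
    """Bilinear sample at texel-space (u, v); texel centers at i + 0.5, edges clamped."""
    h, w = len(tex), len(tex[0])
    x, y = u - 0.5, v - 0.5
    x0, y0 = math.floor(x), math.floor(y)
    fx, fy = x - x0, y - y0  # fractional weights

    def texel(ix: int, iy: int) -> float:
        # Clamp-to-edge addressing.
        return tex[min(max(iy, 0), h - 1)][min(max(ix, 0), w - 1)]

    # Four texel fetches, three interpolations.
    top = lerp(texel(x0, y0), texel(x0 + 1, y0), fx)
    bottom = lerp(texel(x0, y0 + 1), texel(x0 + 1, y0 + 1), fx)
    return lerp(top, bottom, fy)
```

Sampling exactly between four texels of a 2×2 checkerboard (values 0 and 1) returns their average, 0.5; sampling on a texel center returns that texel unchanged.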

But despite these failings, ViRGE cards actually sold quite well, thanks to the chip’s strong 2D performance, decent price, and mediocre competition.

Nvidia’s very first product, the expensive NV1, launched a few months before S3’s chip but was aimed in the wrong direction for 3D graphics, and ATI’s 3D Rage series, released in April 1996, wasn’t much better than the ViRGE.

It would be unfair to be overly critical of S3 at this stage, though. After all, 3D accelerators for the general consumer were in their infancy and it would take a number of years for the field to settle. The ViRGE lineup would see a number of models released over a period of 5 years, with faster versions (such as the ViRGE/DX) offering improvements in every area.

In 1998, S3 Inc announced a completely new graphics processor: the Savage3D. This chip had far better texture filtering than the ViRGE (bilinear filtering now took a single cycle), although multitexturing still required multipass rendering. That is, if you wanted to apply two textures to the same pixel, the whole scene would effectively need to be processed twice.
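A simplified model shows the cost of multipass rendering. Assume a base texture modulated by a lightmap, as in many games of the era. With two texture units, both are combined before each pixel is written once; with one, the pixels are drawn with the base texture and then drawn again with the lightmap, multiplicatively blended into the framebuffer. The function names are hypothetical, and a real renderer's second pass also re-sends and re-rasterizes all the geometry, which this per-pixel sketch leaves out.

```python
from typing import List, Tuple

Pixels = List[float]  # one intensity value per pixel, 0.0 to 1.0

def single_pass(base: Pixels, lightmap: Pixels) -> Tuple[Pixels, int]:
    """Two texture units: combine both textures, then write each pixel once."""
    fb = [b * l for b, l in zip(base, lightmap)]
    return fb, len(fb)  # one framebuffer write per pixel

def multi_pass(base: Pixels, lightmap: Pixels) -> Tuple[Pixels, int]:
    """One texture unit: draw twice, blending the second pass into the framebuffer."""
    fb = [0.0] * len(base)
    writes = 0
    # Pass 1: draw the base texture.
    for i, b in enumerate(base):
        fb[i] = b
        writes += 1
    # Pass 2: redraw with the lightmap, using a multiplicative blend
    # (new = src * dst), which also requires reading the framebuffer back.
    for i, l in enumerate(lightmap):
        fb[i] = l * fb[i]
        writes += 1
    return fb, writes
```

Both approaches produce the same image, but the multipass version writes every pixel twice (and reads it back once), doubling the fill-rate cost of the effect.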

The design also carried better video capabilities, with a built-in TV encoder and superior scaling and interpolation for MPEG and DVD. S3 even went as far as to create their own texture compression algorithm, called S3TC, which would eventually become integrated into the OpenGL and Direct3D APIs.
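The best-known S3TC format, called DXT1 in Direct3D, stores each 4×4 block of pixels in 64 bits: two 16-bit RGB565 endpoint colors plus a 2-bit index per pixel that selects either an endpoint or a color interpolated between them. Compared with 24-bit RGB (384 bits per block), that is a fixed 6:1 ratio. The decoder sketch below follows that layout; the rounding of interpolated colors differs between hardware decoders (this uses integer floor division), and in the three-color mode index 3 means a transparent pixel, which the sketch simply returns as black. Function names are hypothetical.

```python
import struct
from typing import List, Tuple

RGB = Tuple[int, int, int]

def rgb565_to_rgb888(c: int) -> RGB:
    """Expand a 5:6:5 color to 8 bits per channel."""
    r, g, b = (c >> 11) & 0x1F, (c >> 5) & 0x3F, c & 0x1F
    # Replicate the top bits into the low bits so 0x1F maps to 255, not 248.
    return ((r << 3) | (r >> 2), (g << 2) | (g >> 4), (b << 3) | (b >> 2))

def decode_dxt1_block(block: bytes) -> List[RGB]:
    """Decode one 8-byte DXT1 block into 16 RGB pixels, row-major 4x4."""
    # Little-endian: color0 (u16), color1 (u16), then 32 bits of 2-bit indices.
    c0, c1, indices = struct.unpack("<HHI", block)
    p0, p1 = rgb565_to_rgb888(c0), rgb565_to_rgb888(c1)
    if c0 > c1:
        # Four-color mode: two extra colors at 1/3 and 2/3 between the endpoints.
        p2 = tuple((2 * a + b) // 3 for a, b in zip(p0, p1))
        p3 = tuple((a + 2 * b) // 3 for a, b in zip(p0, p1))
    else:
        # Three-color mode: midpoint, and index 3 is transparent (black here).
        p2 = tuple((a + b) // 2 for a, b in zip(p0, p1))
        p3 = (0, 0, 0)
    palette = [p0, p1, p2, p3]
    # Pixel i uses bits 2i and 2i+1 of the index word.
    return [palette[(indices >> (2 * i)) & 0b11] for i in range(16)]
```

Because every block is the same size, the hardware can find any texel's block with simple arithmetic and decode it on the fly, which is what made the scheme practical for real-time texturing.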

On paper, it should have been a huge hit for them. But it wasn’t.

Savage times for S3

The first problem that customers experienced with the Savage3D was simply finding one on the shelves. It was the first graphics processor to be fabricated on a 250 nm process node, by the UMC Group. But the foundry was also the first to be working at this scale and, perhaps inevitably, wafer yields were rather poor.

This shortage of fully functioning dies meant that relatively few add-in board vendors bought the Savage3D; it got to the point where Hercules was testing each chip from the trays it had purchased and hand-selecting the viable ones. Long-term partner Diamond Multimedia eschewed the processor altogether.

The drivers were notoriously buggy at release (pre-release versions given to reviewers had no OpenGL support at all), and S3 dropped support for their own API. But more importantly, the competition was now in full swing. Where the first ViRGE had the market almost to itself, with S3’s great 2D reputation to back it up, the Savage3D faced far meatier opponents.

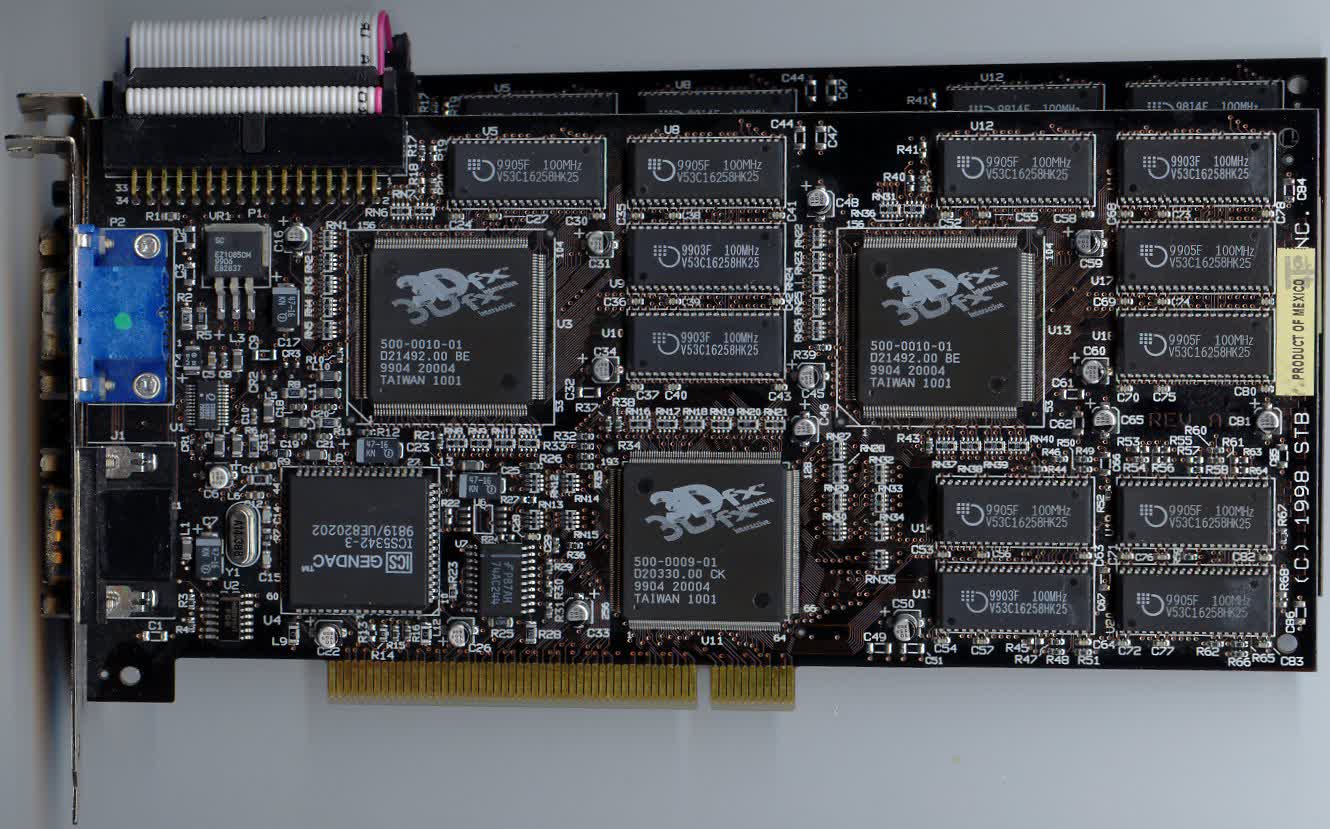

By the fall of 1998, Nvidia had its NV4-powered Riva TNT out, sporting two pixel pipelines that allowed for single-pass multitexturing. 3dfx’s Voodoo2 cards offered the same ability, but where the Riva TNT’s pixel output rate would halve with two textures, the Voodoo2’s wasn’t affected.

But even in single-texturing games, the performance of the Savage3D was disappointing. Vendors couldn’t even sell their products at a low price to undercut the opposition, due to the limited availability of the chips.

S3 resolved many of these issues within a year, and the following Savage4 chip was very much the product the Savage3D should have been. But where S3 was getting things right at the second attempt, the likes of 3dfx, Matrox, and Nvidia were on form right away.

As a result, Savage4-powered cards were no better than rival models from the previous year, and once again, OpenGL performance was dismal. At least Diamond was back with S3, and plenty of other vendors churned out numerous models.

The only saving grace for the Savage4 was S3TC. The lossy texture compression system was supported by Quake III Arena and Unreal Tournament (the mega-games of the time) and provided a noticeable boost in visual quality for very little performance impact.

It was also during this year that S3 Inc decided to splash its cash around, purchasing the assets and IP of fellow graphics card company Number Nine in December (and then licencing them back to that company’s design engineers 2 years later). Then, in the summer of 2000, S3 merged with long-term chum Diamond Multimedia in a move to expand their product portfolio.

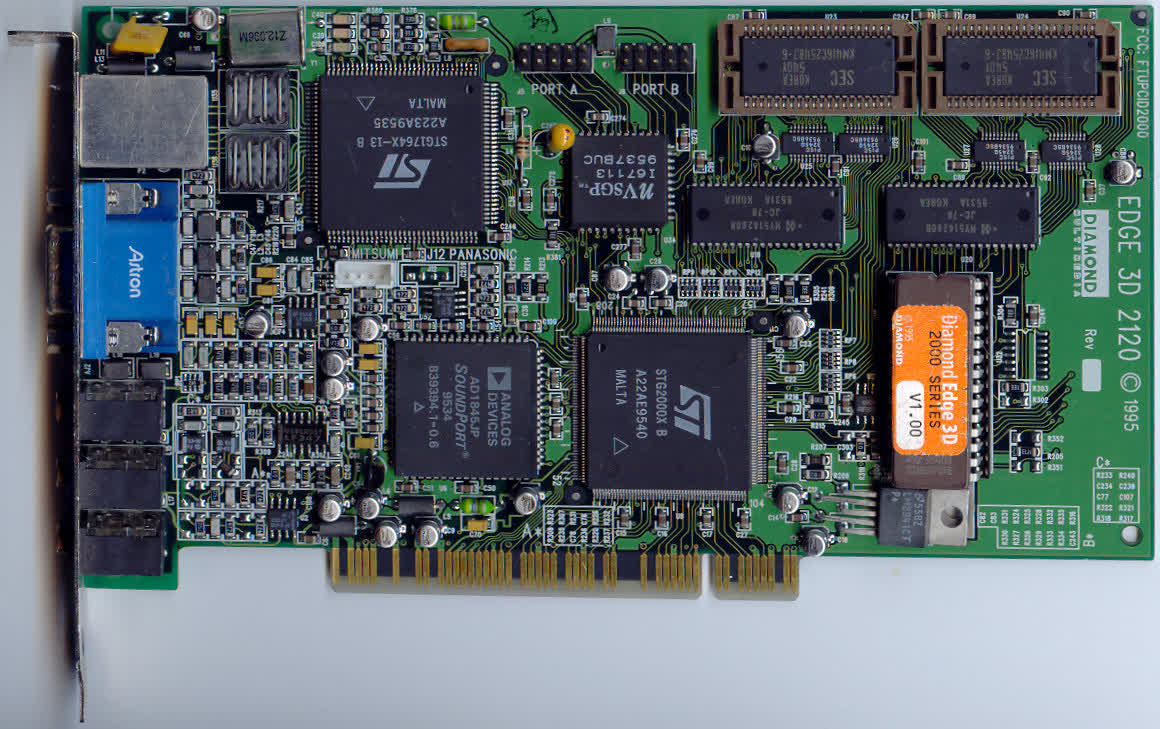

The newly wedded couple’s first offspring was the Savage 2000, S3’s first graphics chip to offer Direct3D 7.0 compatibility. The rendering catchphrase of this era was ‘Hardware Transform and Lighting’ (TnL): instead of the CPU processing all of the geometry in a 3D scene, this stage of the rendering pipeline was handled by the graphics processor.
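What does “transform and lighting” actually involve? For every vertex, the position is multiplied by a 4×4 matrix, and a lighting value is computed from the surface normal and the light direction. The sketch below shows that per-vertex work in its simplest form, assuming a single directional light with Lambertian (diffuse) shading, unit-length vectors, and normals already in the same space as the light (real pipelines also transform the normals and apply a perspective projection). All names are hypothetical; this is the kind of arithmetic hardware TnL took off the CPU, not a description of the Savage 2000’s circuitry.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat4 = Sequence[Sequence[float]]  # row-major 4x4 matrix

def transform(m: Mat4, p: Vec3) -> Vec3:
    """Multiply a point (with w = 1) by a 4x4 matrix, then divide by w."""
    v = (p[0], p[1], p[2], 1.0)
    out = [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]
    w = out[3]
    return (out[0] / w, out[1] / w, out[2] / w)

def lambert(normal: Vec3, light_dir: Vec3, intensity: float = 1.0) -> float:
    """Diffuse term: N . L clamped at zero (both vectors assumed unit length)."""
    dot = sum(n * l for n, l in zip(normal, light_dir))
    return intensity * max(0.0, dot)

def transform_and_light(m: Mat4, vertices: List[Vec3], normals: List[Vec3],
                        light_dir: Vec3) -> List[Tuple[Vec3, float]]:
    """Per-vertex T&L: transformed position plus one directional light term."""
    return [(transform(m, p), lambert(n, light_dir))
            for p, n in zip(vertices, normals)]
```

A scene with tens of thousands of vertices per frame repeats this work tens of thousands of times, which is why moving it into dedicated hardware was such a selling point.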

With two pixel pipelines, each fielding two texture units, and a clock speed of 125 MHz, the Savage 2000 should, on paper, have been within spitting distance of Nvidia’s new GeForce 256. But, perhaps to little surprise, that wasn’t the case: yet again, drivers let the product down. On release, hardware TnL wasn’t supported, and when it did finally appear, the results were frequently buggy and slow.

Overall, it wasn’t actually a bad graphics card; it just wasn’t as good as the competition (the poor Direct3D performance didn’t help matters either), nor was it significantly cheaper. For all S3’s long history of design excellence and market leadership, they were now floundering, seemingly lost at sea.

All change at the helm

Just three months after S3 Inc and Diamond Multimedia announced their merger, the company rebranded itself as SONICblue and, in 2001, sold the graphics division to Taiwanese manufacturer VIA Technologies.

SONICblue would focus on the multimedia industry, making consumer electronic products such as video recorders and MP3 players, before shutting down and filing for bankruptcy in 2003. S3, though, would live on with an entirely unoriginal new name (S3 Graphics) and a new purpose: integrated chipsets.

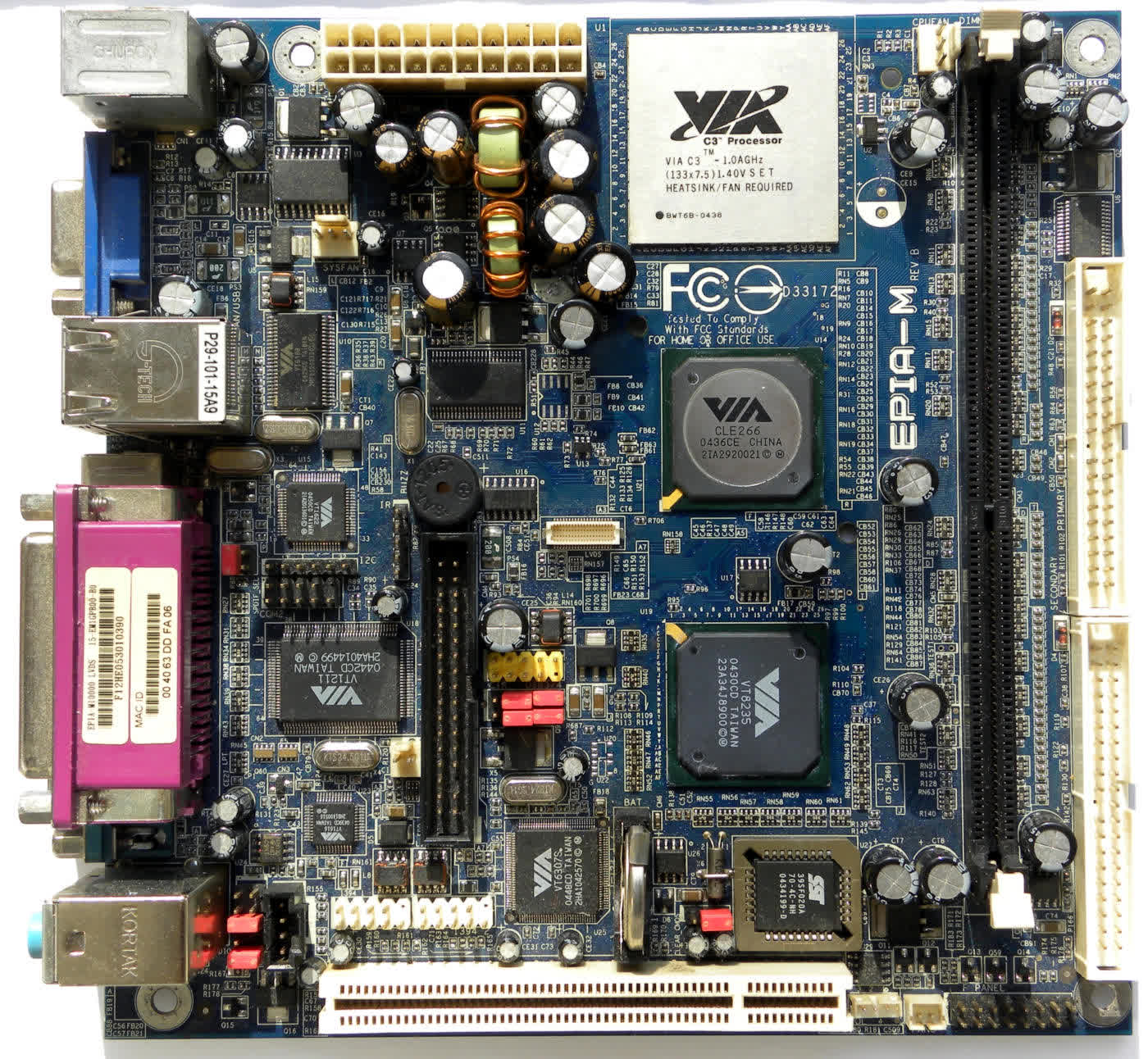

At the start of the millennium, CPUs from AMD and Intel, such as the Athlon XP and Pentium III, didn’t have a graphics processor built into them. To get a video output from your PC, you needed an add-in graphics card or, as was the trend then, a motherboard with a graphics-capable chipset.

VIA Technologies was very competitive in the motherboard chipset sector for a while, so the purchase of S3 made a lot of sense. Rather than creating something new from scratch, the engineers took elements from previous graphics chips: the 2D and media engines from the Savage 2000 and the 3D pipelines from the Savage4 were melded together to make the ProSavage system.

Part of the reason why S3’s earlier products could be sold more cheaply than Nvidia’s, for example, was that their designs were much smaller: the processors used fewer transistors and so needed less die area, which also made them ideal for chipset integration.

But with the discrete graphics card market now booming, VIA fancied a slice of it for themselves, and so, once more into the fray, S3 Graphics returned with the Chrome series of processors in 2004. The first model, the DeltaChrome, featured full Direct3D 9.0 support, with 4 pipelines for vertex shaders and 8 for pixel shaders.

By then, ATI and Nvidia were fielding incredibly powerful graphics cards, and both companies were experimenting with features beyond those required for D3D 9.0 (such as higher-order surfaces and conditional flow control). S3 Graphics wisely chose to keep things simpler, and early performance results were encouraging, if mixed.

However, later testing showed the processor (we were calling them GPUs by then) to have serious issues when using anti-aliasing. But VIA and S3 Graphics persevered, and the Chrome lineup continued for 5 more years, switching to a unified shader architecture in 2008.

These later models were very much at the bottom end of gaming performance, being no better (and typically worse) than the budget offerings from ATI and Nvidia. Their only saving grace was the price: the Chrome 530 GT, for example, had an MSRP of just $55.

These would be the last discrete graphics cards designed by S3 Graphics, although VIA continued to use their GPUs as integrated components in their motherboard chipsets. But in 2011, VIA called it quits and sold off the entirety of S3 Graphics to another Taiwanese electronics giant called HTC.

Why this phone manufacturer would buy a GPU vendor may seem puzzling. Unlike Apple, HTC didn’t design its own chips for its phones, instead relying on offerings from Qualcomm, Samsung, and Texas Instruments. The purchase was purely to expand its IP portfolio and generate income from licencing the patents for S3TC.

Only a name and fond memories are left

There hasn’t been a chip sporting the name of S3 or S3 Graphics for over a decade now, and we are unlikely to ever see one again. HTC has done nothing with the IP since purchasing it all those years ago. So we’re just left with the name, memories good and bad, and perhaps a few questions as to why it all went so wrong for them.

In the 2D acceleration era of graphics cards, S3 was almost untouchable and held a considerable slice of the market. They clearly had good engineers in this area, but that doesn’t automatically translate into being masters of the world of polygons. Yes, the ViRGE was underwhelming and the Savage3D was released too early, with manufacturing issues and poor drivers besmirching S3’s reputation.

But these could have been solved in time, as the overall designs were fundamentally sound. Instead, the company’s management chose a different tack – buying up another failing graphics company, then merging with a consumer electronics group, before finally throwing in the towel altogether.

Other great names of that era (3dfx, Rendition, BitBoys, to name but a few) followed a similar path, even though their products were sometimes the best around. And just like S3, they’re now footnotes in history, absorbed into other companies or consigned to Wikipedia pages.

The story of S3 shows that in the world of semiconductors and PCs, nothing can be taken for granted, and continued success is a complex combination of inspired engineering, hard work, and a fair degree of luck. Their products are no more, and the name is gone, but they’re certainly not forgotten.

TechSpot’s Gone But Not Forgotten Series

The story of key hardware and electronics companies that at one point were leaders and pioneers in the tech industry, but are now defunct. We cover the most prominent part of their history, innovations, successes and controversies.