Hogwarts Legacy is an open-world action RPG set in the Harry Potter universe. It launched to very enthusiastic user reviews, so it’s time we benchmarked it, and we have a ton of data for you. We’ve tested 53 GPUs in this Unreal Engine 4 title, which supports DirectX 12 and ray tracing effects as well as all the latest upscaling technologies.

Judging by Steam sales, Hogwarts Legacy seems to be kind of a big deal so far. The game is set in the 1800s, and players assume the role of a student attending Hogwarts School of Witchcraft and Wizardry. They can choose their character’s house and attend classes, learn spells, explore Hogwarts and its surrounding areas, and engage in combat against magical creatures and other enemies.

We benchmarked two sections of the game: one benchmark pass took place on the Hogwarts Grounds as you exit, and the second at Hogsmeade as you arrive. We used a test system powered by the Ryzen 7 7700X with 32GB of DDR5-6000 CL30 memory and the latest Intel, AMD, and Nvidia display drivers. Let’s get into it…

Benchmarks

Medium Quality Settings: 1080p, 1440p and 4K

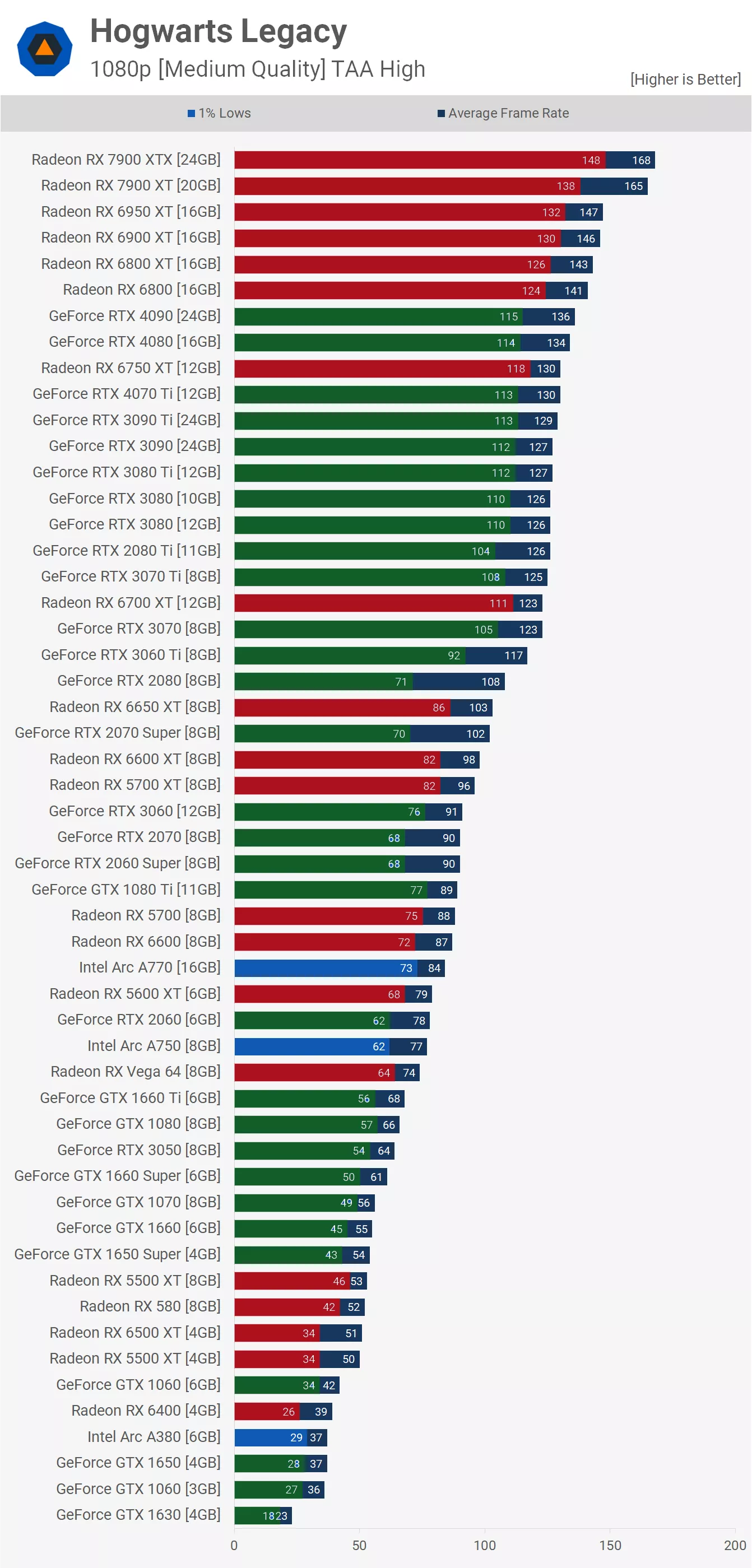

Starting with the 1080p medium data, we find some really odd results. First, the GeForce 40 series is well down our graph, with the RTX 4090 capped at 136 fps. This is clearly a CPU bottleneck, and when CPU limited, Nvidia’s driver overhead can reduce GeForce performance by around 20% relative to Radeon GPUs, which is what we’re seeing here. The 7900 XTX and 7900 XT both pushed past 160 fps when using the Ryzen 7 7700X.

The Radeon 6000 series also performs better than the GeForce 30 series, but again that’s not unusual at 1080p. The Radeon 6800 XT, for example, was 13% faster than the RTX 3080. Further down the graph we see the 6700 XT matching the RTX 3070 and 3070 Ti, with the 6600 XT and 5700 XT delivering just short of 100 fps on average, making them slightly slower than the 2070 Super but slightly faster than the 2070.

Then we see the GTX 1080 Ti alongside the RX 5700, 6600 and Intel Arc A770. That’s a pretty disappointing result for Intel, given they supplied us with a game-ready driver. That said, the A770 did beat the RTX 2060, which delivered 5600 XT-like performance.

For just over 60 fps, the GTX 1660 Super, RTX 3050 or GTX 1080 will do. Falling just short of 60 fps are the GTX 1070, 1660, 1650 Super and Radeon RX 5500 XT. I consider any GPU that can’t deliver at least 30 fps for the 1% lows unplayable, and that includes the Radeon RX 6400, Intel Arc A380, GeForce GTX 1650, 1060 3GB and 1630.

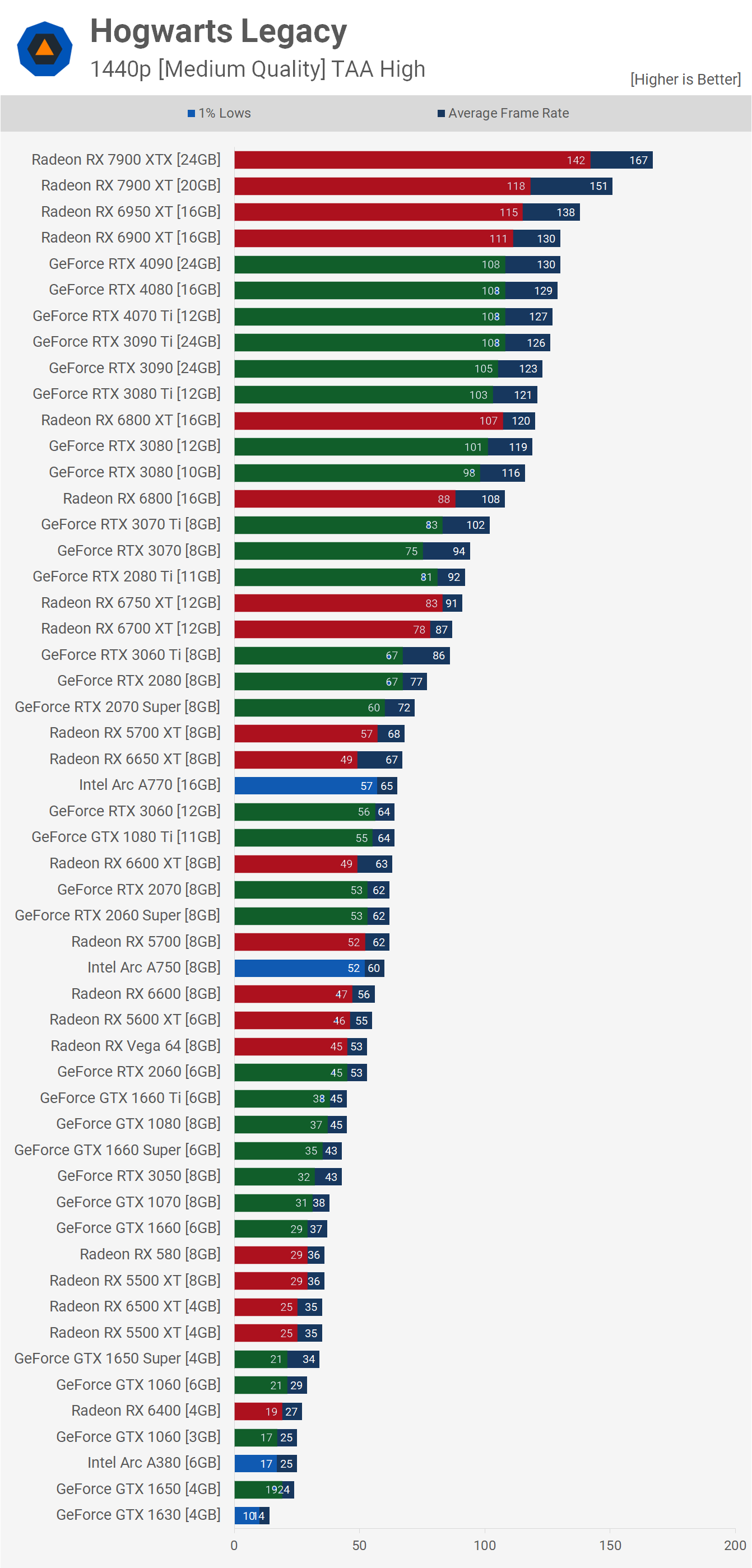

Increasing the resolution to 1440p gives us similar data to what was seen at 1080p: the GeForce GPUs are still capped at around 130 fps, while the Radeon GPUs could push as high as 167 fps. The Radeon 6950 XT was also still 10% faster than the RTX 3090 Ti, so the Radeon 6000 series is faring better than the GeForce 30 series, at least at this resolution using the medium quality settings.

This time the 6800 XT was just 3% faster than the GeForce RTX 3080, while the 6800 beat the 3070 Ti by a 6% margin. Further down we find the 5700 XT basically neck and neck with the 2070 Super, 6650 XT and Intel’s Arc A770. The RTX 3060 is also right there, and it’s impressive to see the 5700 XT still edging out a GeForce 30 series GPU in a new, cutting-edge title.

For those seeking a 60 fps experience at 1440p, that should be possible with the Intel Arc A750, Radeon RX 5700, RTX 2060 Super, or anything faster. Just falling short are the Radeon RX 6600, 5600 XT, Vega 64 and RTX 2060. Anything from the GTX 1660 down is going to deliver a pretty rough experience, so I’d recommend lowering the resolution and/or quality settings.

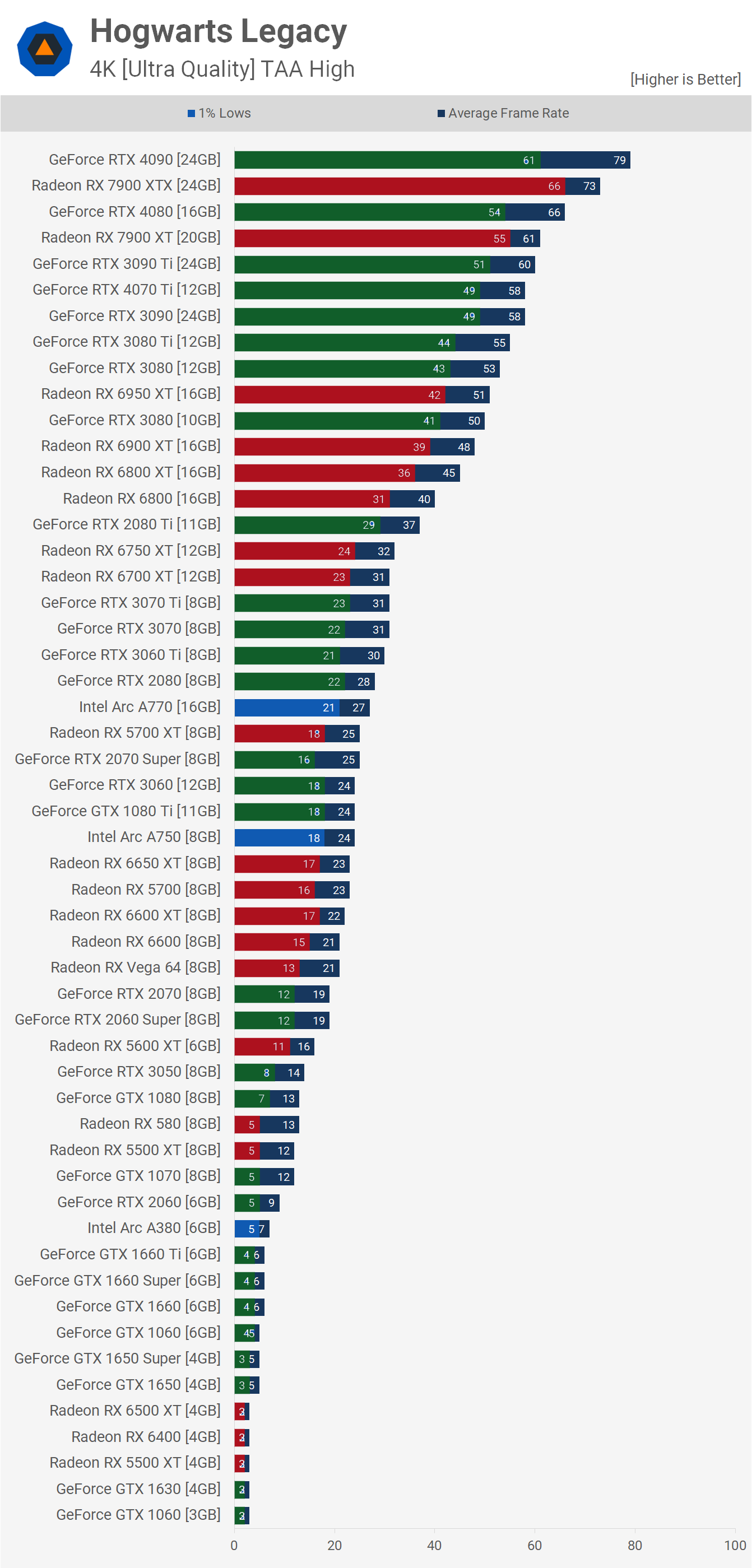

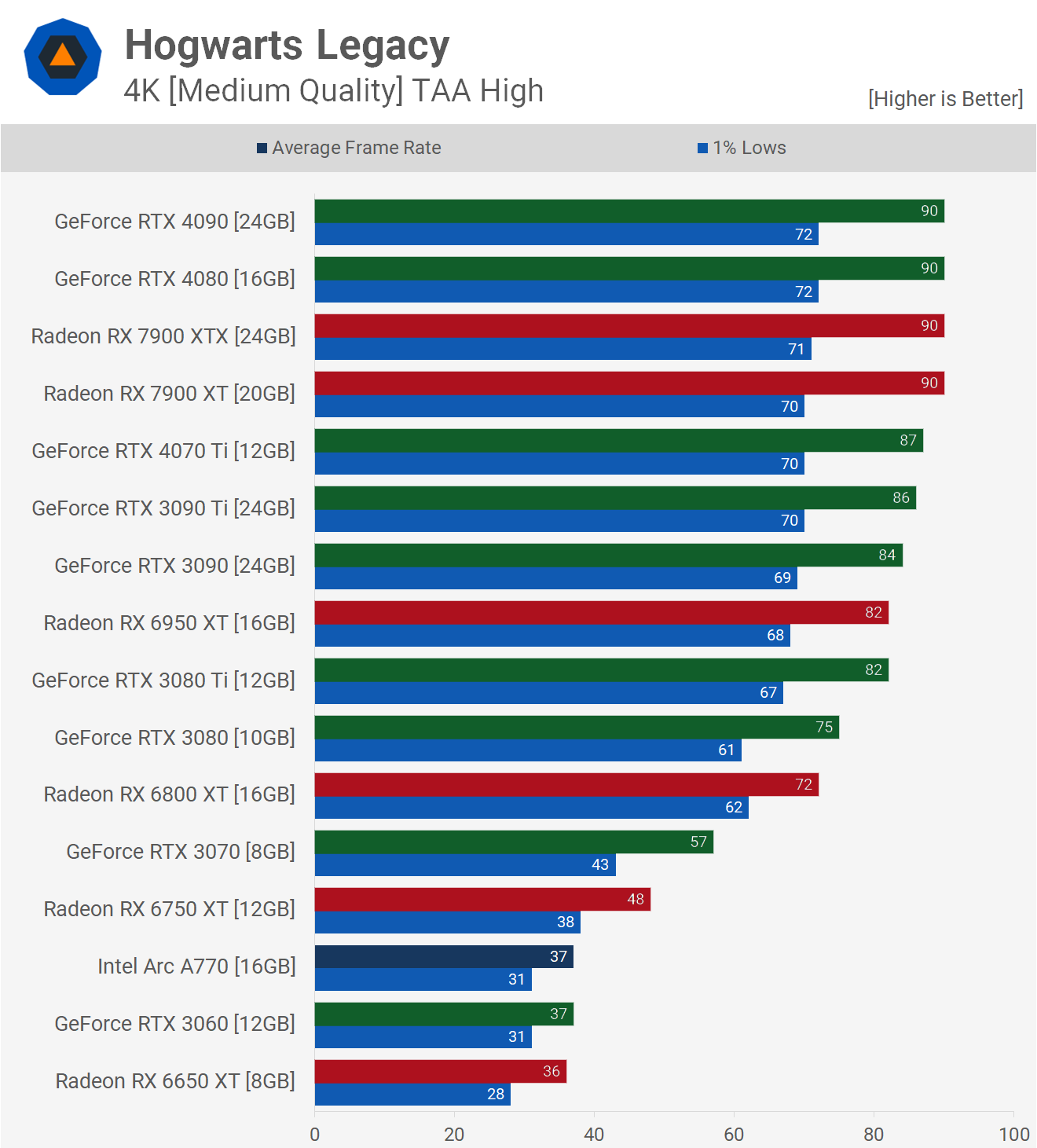

At 4K resolution using medium settings the CPU bottleneck is largely removed and we see what these GPUs are really capable of. The GeForce RTX 4090 sustained 111 fps on average making it 12% faster than the Radeon 7900 XTX, but remember we’re only using medium quality settings here.

The 7900 XTX was also 9% faster than the RTX 4080 while the 4070 Ti and 3090 Ti were comparable, making them around 9% faster than the 6950 XT. The RTX 3080 was also able to overtake the 6900 XT by a few frames, making it 14% faster than the 6800 XT.

The Radeon 6800 was still able to stay just ahead of the GeForce RTX 3070 and 3070 Ti, though all did dip below 60 fps. We also saw 1% lows drop below 30 fps with the RX 5700 and RTX 3060, so any GPU below them on this graph shouldn’t be used at 4K, but I guess that really goes without saying.

Ultra Quality Settings: 1080p, 1440p and 4K

Here are the ultra quality results with ray tracing disabled; we’ll get to the RT results soon.

Under these more CPU limited test conditions at 1080p we see that the Radeon GPUs perform exceptionally well. The 7900 XTX beat the RTX 4090 by an 8% margin while the 7900 XT was 6% faster than the RTX 4080. Then we see the 6950 XT and 4070 Ti trading blows.

AMD also does very well in the previous-generation matchups. The 6950 XT, for example, is 7% faster than the 3090 Ti, while the 6800 XT matched the 3080 Ti, making it 4% faster than the 10GB 3080.

The Radeon RX 6750 XT did well with 83 fps, making it slightly faster than the RTX 3060 Ti and only slightly slower than the 2080 Ti and 3070. Once again, the old Radeon RX 5700 XT was impressive, averaging 63 fps, which placed it on par with the 6600 XT and 6650 XT. The Intel Arc A770 also did quite well here, matching the GPUs just mentioned along with the RTX 3060.

Then for 60 fps we find that the RTX 2070 and 2060 Super are just up to the task, while the 1080 Ti, RX 5700, RX 6600, A750 and RTX 2060 fall just short. Once we get down to the GTX 1660 Ti, the game starts to become unplayable, or at the very least unenjoyable in my opinion, and there are quite a few 4GB and 6GB graphics cards that simply weren’t up to the task.

Now increasing the resolution to 1440p on Ultra we find a few interesting things: the Radeon 7900 XTX and RTX 4090 are neck and neck while the GeForce RTX 4080 overtook the 7900 XT, delivering 15% more performance.

What’s really interesting to note here is the GeForce 30 and Radeon 6000 series battle, namely the fact that the Radeon GPUs provided stronger 1% lows. The 3090 Ti for example was 3% faster than the 6950 XT when comparing the average frame rate, but 12% slower when comparing the 1% lows.
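The review doesn’t spell out how its 1% low figures are calculated, and capture tools differ. As a rough sketch, assuming a per-frame log of render times in milliseconds and the common "average of the slowest 1% of frames" definition, the Python below shows how two GPUs can post an identical average frame rate while their 1% lows are far apart. The function name `fps_stats` and both frame-time traces are hypothetical and synthetic, not data from our testing.

```python
import math


def fps_stats(frame_times_ms):
    """Return (average fps, 1% low fps) for a list of frame times in ms.

    Assumption: the "1% low" is the average frame rate across the slowest
    1% of frames. Some tools use the 99th-percentile frame time instead,
    which gives slightly different numbers.
    """
    n = len(frame_times_ms)
    avg_fps = 1000.0 * n / sum(frame_times_ms)  # frames per second overall

    k = max(1, math.ceil(n / 100))  # how many frames make up the slowest 1%
    slowest = sorted(frame_times_ms, reverse=True)[:k]
    low_fps = 1000.0 * k / sum(slowest)
    return avg_fps, low_fps


# Synthetic traces (hypothetical): both take 10,000 ms to render 1,000 frames.
smooth = [10.0] * 1000             # every frame takes 10 ms
spiky = [9.5] * 990 + [59.5] * 10  # faster frames plus ten long hitches

for name, trace in (("smooth", smooth), ("spiky", spiky)):
    avg, low = fps_stats(trace)
    print(f"{name}: {avg:.1f} fps average, {low:.1f} fps 1% low")
# smooth: 100.0 fps average, 100.0 fps 1% low
# spiky: 100.0 fps average, 16.8 fps 1% low
```

Both traces average 100 fps, but the ten 59.5 ms hitches in the second one drag its 1% low down to about 17 fps, which is exactly the kind of difference an average frame rate alone hides.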

This is less obvious with the RX 6800 and RTX 3070 Ti comparison though, where performance is basically identical. There’s loads more data here but I think for 1440p ultra gaming you’re not going to want to go below the RTX 3060 Ti and 6750 XT, so we’ll move on.

For 4K gamers hoping to play Hogwarts Legacy using the Ultra preset, you’ll want to bring a serious GPU, especially if you don’t want to rely on upscaling technology to boost performance. This isn’t an issue with the GeForce RTX 4090 which spat out well over 60 fps, making it 8% faster than AMD’s 7900 XTX. That said, the 1% lows weren’t quite as strong in our testing.

The GeForce GPUs in general really shine at 4K, and now the GeForce RTX 3090 Ti is 18% faster than the Radeon 6950 XT. In fact, even the RTX 3080 edged out the RDNA 2 flagship. With just 51 fps on average for the 6950 XT, there wasn’t a single previous-generation Radeon GPU that could hit the 60 fps barrier, let alone break it, and for a decent experience you’ll want at least the RX 6800.

There are a few issues here for GeForce 30 GPUs armed with only 8GB of VRAM, such as the 3070 and 3070 Ti, both of which were only able to match the 6700 XT, making them a good bit slower than the 16GB RX 6800. You’ll want an absolute minimum of 12GB of VRAM for 4K ultra, and ideally 16GB.

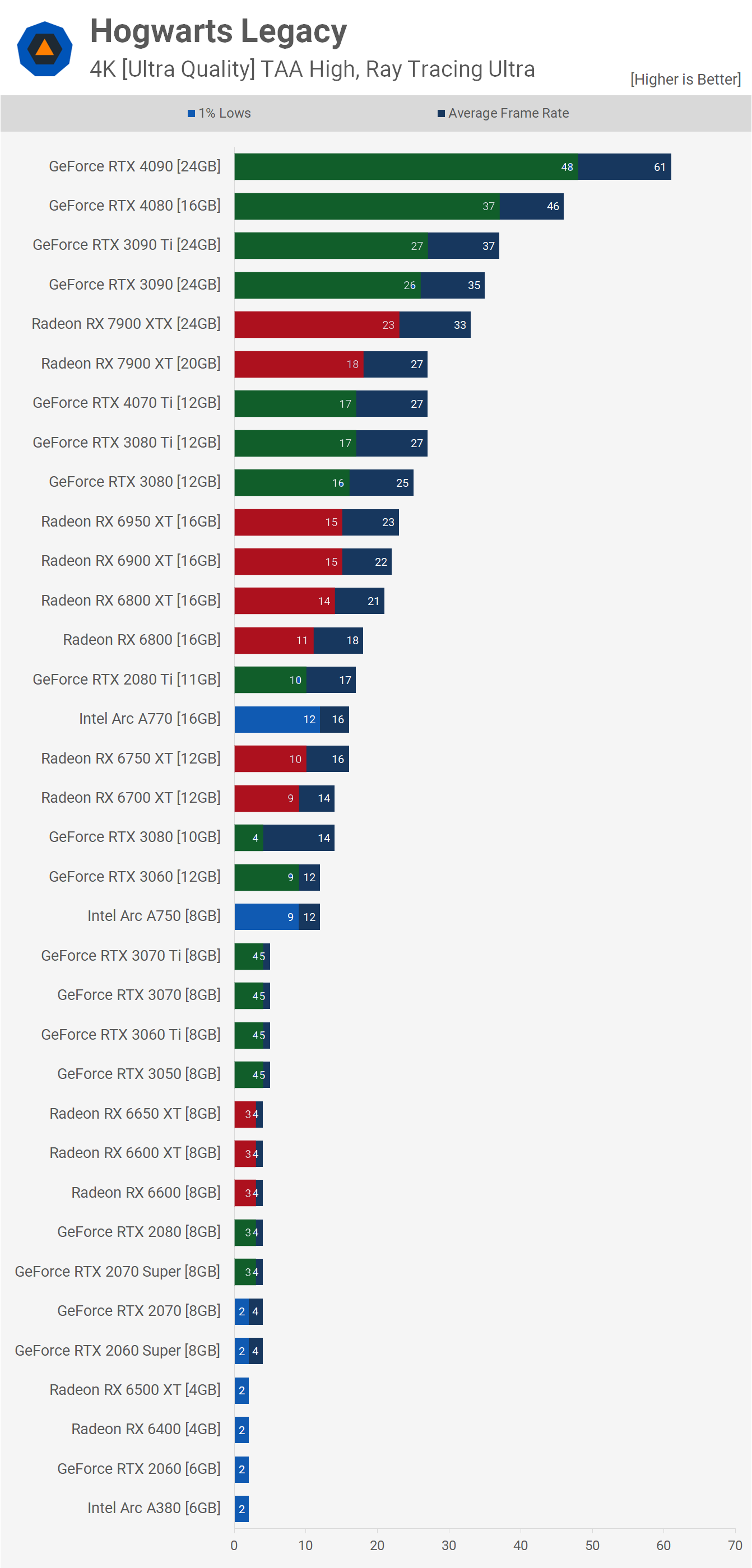

Ray Tracing Benchmarks

It’s time to enable ray tracing, and this is where things become truly interesting. Even with RT enabled, the 7900 XTX took the top spot in our testing at 1080p. It was just 3% faster than the GeForce RTX 4090, but that’s still a serious achievement, though of course we’re somewhat CPU limited at this resolution.

The 7900 XT also matched the 4070 Ti, but did deliver better 1% lows, making it more comparable with the RTX 4080 which was just 5% faster.

The GeForce 30 series easily beat the Radeon 6000 series at the high end: the RTX 3090 Ti, for example, was 21% faster than the 6950 XT, though the more mid-range previous-generation matchups were much more competitive. The 6700 XT beat the 3060 Ti and even the 3070 Ti. Again, 8GB of VRAM just isn’t enough here, even at 1080p, which is brutal for those who invested big money in products like the 3070 Ti not that long ago.

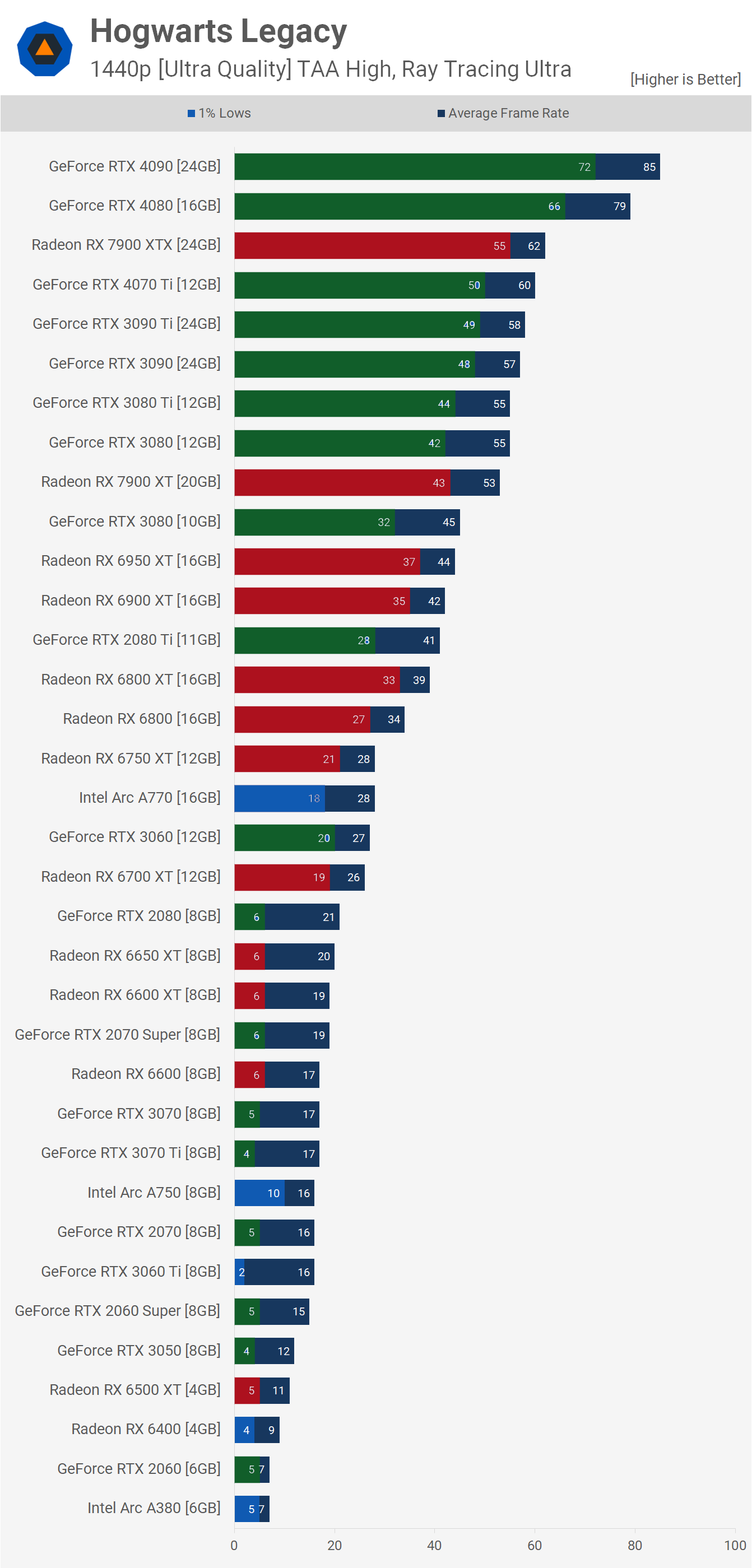

At 1440p with RT enabled, very few GPUs can handle ray tracing in Hogwarts Legacy, at least when using ultra settings. Although the GeForce RTX 4090 was good for 85 fps and the RTX 4080 79 fps, most GPUs, including the previous-generation flagship RTX 3090 Ti, struggled to even hit 60 fps.

12GB of VRAM is the minimum here. The RTX 2080 Ti did okay, but its 1% lows suffered, and this was also the case with the RTX 3080. Parts like the 3070 Ti were completely broken, allowing competing parts such as the Radeon 6800 to deliver twice the performance. Really, though, it’s no comparison, as the 3070 Ti wasn’t even remotely playable by anyone’s standards, whereas the Radeon 6800 was playable: not by my standards, but technically playable.

At 4K with RT effects enabled, the RTX 4090 was good for 61 fps, making it 33% faster than the 4080 and 65% faster than the 3090 Ti, so a very strong performance uplift there. Basically nothing below the RTX 4090 could deliver 60 fps, and everything below the RTX 4080 dropped under 40 fps, so upscaling will be a requirement here. Gamers will also want at least 16GB of VRAM to play at 4K with ray tracing enabled.
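The margins quoted throughout this review are relative differences in average frame rate. Assuming the usual definition, "A is X% faster than B" means fps_A / fps_B = 1 + X/100, so the percentages can be inverted to estimate the frame rates behind them. The short Python sketch below does that for the 4K ray tracing figures above; `percent_faster` and `implied_fps` are hypothetical helper names, and because the quoted margins are rounded, the outputs are estimates rather than measured results.

```python
def percent_faster(fps_a, fps_b):
    """How much faster A is than B, in percent (assumed definition)."""
    return (fps_a / fps_b - 1.0) * 100.0


def implied_fps(fps_a, margin_pct):
    """Estimate B's fps from A's fps and a quoted 'A is X% faster' margin."""
    return fps_a / (1.0 + margin_pct / 100.0)


rtx_4090 = 61.0                          # 4K ultra + RT, from the text above
est_4080 = implied_fps(rtx_4090, 33)     # quoted: 4090 is 33% faster
est_3090_ti = implied_fps(rtx_4090, 65)  # quoted: 4090 is 65% faster

print(f"RTX 4080 ~{est_4080:.1f} fps, RTX 3090 Ti ~{est_3090_ti:.1f} fps")
# RTX 4080 ~45.9 fps, RTX 3090 Ti ~37.0 fps
print(f"check: {percent_faster(rtx_4090, est_4080):.0f}% faster")
# check: 33% faster
```

Both back-calculated estimates land below 60 fps.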

The Hogsmeade Test: Medium Quality

Something we noticed after our initial wave of GPU testing was some strange scaling behavior in Hogsmeade. For whatever reason, the game appeared extremely CPU bound here, despite low CPU utilization on all cores. We’re not entirely sure what’s going on, and it will take more time and a lot more benchmarking to work it out.

For now, we want to compare the previous Hogwarts Grounds data to the Hogsmeade test data. Please note that, given the sheer amount of testing involved, we weren’t able to retest all 53 GPUs, but we do have a good range of current and previous generation models.

Starting with the 1080p medium results, we run into a system limitation in the Hogsmeade area at around 150 fps on average, with 1% lows of around 130 fps on AMD hardware. That’s not a drastic difference from the Hogwarts Grounds test, but we are clearly hitting a limitation here. With GeForce GPUs, we’re seeing a hard cap on performance at 130 fps in Hogsmeade, again not radically different from the initial test, but it’s interesting to see the GeForce RTX 3060 and 3070 performing better here, despite everything else hitting an fps wall a little sooner.

We see the same limitations at 1440p, but of course more of the data is now GPU limited: from the 6950 XT down, the graphics card is the primary performance-limiting component. What’s really interesting, though, is that despite hitting a system limitation sooner, the Radeon GPUs typically delivered higher frame rates in the Hogsmeade area, suggesting this test is less GPU demanding but more CPU demanding.

The 1440p scaling for both GeForce and Radeon GPUs is similar to what was seen at 1080p. Again the 7900 XTX and XT take the top spots, followed by the 6950 XT and 6800 XT, a result made possible by Nvidia’s driver overhead, which hurts performance when CPU limited.

At 4K we’re getting to the point where performance is being GPU limited, allowing the RTX 4080 and 4090 to match the 7900 XTX, but of course, we’re still quite heavily system limited here.

Now at 4K there’s little difference between the two test locations and it’s only the 7900 XTX that is performance limited in the updated Hogsmeade benchmark. But again we are seeing a strange situation where some of the Radeon GPUs perform better in the Hogsmeade area.

In the original Hogwarts Grounds testing, the RTX 4080 was a good bit faster than the 4070 Ti, and the 4090 was a good bit faster than the 4080. In Hogsmeade, however, we don’t see that, and although frame rates were higher at the lower resolutions, the data does look CPU limited, which is odd.

The Hogsmeade Test: Ultra Quality

Increasing the visual quality preset to ultra again sees most GPUs delivering higher frame rates in the Hogsmeade area. The 6750 XT, for example, saw a rather substantial 17% uplift, and the 6800 XT a 12% uplift. The 6950 XT, however, performed much the same in either area, while the 7900 XT and XTX hit a wall at around 140 fps.

On GeForce hardware, the Hogwarts Grounds results show fairly consistent scaling at 1080p as GPU power increases, but Hogsmeade runs into a performance cap of about 110 fps quite quickly: from the RTX 3080 10GB to the RTX 4090, we see no change in performance.

Now this is odd: at 1440p, all Radeon GPUs actually delivered higher performance in the Hogsmeade test. The 7900 XT, for example, was 20% faster, which is a massive difference. Strange stuff indeed.

We see that the high-end GeForce 30 series GPUs all top out at around 95 fps, yet despite that the 4080 and 4090 were able to render 108 and 109 fps respectively.

It’s very odd behavior, and it’s even more confusing given no single core was maxed out on our Ryzen 7 7700X and overall CPU utilization was quite low, suggesting the game needs a patch to improve optimization.

Then at 4K we see a similar trend where the Radeon GPUs generally performed better for the Hogsmeade test. The GeForce 30 series along with the 4070 Ti are all limited to around 55 fps, while the 4080 was good for 72 fps and the 4090 85 fps.

Ray Tracing, Take Two

So let’s enable ray tracing and take another look in Hogsmeade Village. Here we’re well under the 140-150 fps cap seen without RT enabled. The Radeon 6000 series GPUs performed much the same for both test scenes, but that wasn’t true of the 7000 series where the 7900 XT was 9% faster in Hogsmeade and the 7900 XTX 13% faster.

Even with ray tracing enabled, the 7900 XT and 7900 XTX were faster than the 4090 at 1080p, yet the 6950 XT was slower than even the RTX 3080 Ti. It’s also interesting to note that the 8GB RTX 3070 was crippled here, rendering just 17 fps with 1% lows of 5 fps, so performance was unplayable and completely broken, and the 3070 Ti would suffer the same fate. The 6800 XT, on the other hand, was able to deliver 55 fps with 44 fps for the 1% lows and was surprisingly smooth and enjoyable.

Previously, in the Hogwarts Grounds testing, the 3070 and 3080 were able to deliver playable performance at 1080p, but in the Hogsmeade area we faced constant pauses, far worse than mere frame stutters. Performance here was completely broken because VRAM limits were being exceeded.

It’s odd, though, as performance in general for products with 12GB of VRAM, like the RTX 3060 and 3080 Ti, was actually better here. So it’s as if the ray tracing demand is slightly lower but the demand on memory capacity is higher, which might explain some of the odd results we’ve been seeing between these two test areas.

It’s a similar story at 1440p: the Radeon 6000 series all delivered the same performance in both test areas, while the 7900 XT was 9% faster in Hogsmeade and the 7900 XTX 8% faster. On GeForce hardware, we had previously found that the 8GB graphics cards couldn’t handle ultra quality settings with ray tracing, and in the Hogsmeade area we find that 10GB models like the RTX 3080 aren’t sufficient either. Beyond that, though, scaling is fairly similar from the 3080 Ti up.

With ray tracing enabled, the RTX 4090 and 4080 were able to overtake the 7900 XTX at 1440p, and here the 6950 XT was crushed by the 3080 Ti. Again, the older GeForce 30 series GPUs with less than 12GB of VRAM really suffer: the RTX 3080 saw 1% lows of 4 fps while the 6800 XT was good for 33 fps. That’s a bloodbath for Nvidia’s previous generation.

The Radeon 6800 would offer a similar experience as it also packs a 16GB VRAM buffer, and with the help of FSR 2 you could probably get a 60 fps experience. By contrast, no degree of DLSS is likely to help the RTX 3070, especially given the 10GB RTX 3080 is also crippled. That said, this is a comparison we’d like to look at in more detail, perhaps in an RTX 3070 vs RX 6800 revisit in the not too distant future.

Then at 4K the Radeon GPUs are pretty much wiped out, as the 7900 XTX was only good for just over 30 fps. We’re now seeing very similar results for all GPUs in both test areas, which suggests memory capacity is at more of a premium in the Hogsmeade test: it’s not until we hit 4K that the Hogwarts Grounds data starts to look very similar.

At 4K it’s a clear win for the GeForce RTX 4090, and even the 3090 was faster than the 7900 XTX; these are more like the results we’d expect to see with ray tracing enabled. We keep seeing that 16GB of VRAM is the minimum here, as even the 4070 Ti struggled with frame pacing, showing an 81% discrepancy between the 1% lows and average frame rate, whereas the 7900 XT saw less than half that margin.
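The text doesn’t define how the 81% frame-pacing discrepancy is measured. One plausible reading, assumed here, is the percentage by which the average frame rate exceeds the 1% low, in which case an 81% gap means the 1% low sits at roughly 55% of the average (1 / 1.81). The Python sketch below uses a hypothetical 50 fps average and hypothetical helper names to compare that with a 40% gap, standing in for "less than half that margin".

```python
def lows_gap_pct(avg_fps, low_fps):
    """Percent by which the average exceeds the 1% low (assumed definition)."""
    return (avg_fps / low_fps - 1.0) * 100.0


def low_from_gap(avg_fps, gap_pct):
    """Inverse of lows_gap_pct: the 1% low implied by an average and a gap."""
    return avg_fps / (1.0 + gap_pct / 100.0)


avg = 50.0  # hypothetical average frame rate, not a measured result
for gap in (81, 40):  # 81% as quoted; 40% as an example of "less than half"
    low = low_from_gap(avg, gap)
    print(f"{gap}% gap: 1% low ~{low:.1f} fps ({low / avg:.0%} of average)")
# 81% gap: 1% low ~27.6 fps (55% of average)
# 40% gap: 1% low ~35.7 fps (71% of average)
```

If the 81% were instead measured as a share of the average, the 1% low would be only about 19% of the average, so the definition matters when comparing frame-pacing figures.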

Crazy Number of Benchmark Runs Later: What We Learned

You may be thinking that many of these results were heavily CPU limited and that, had we tested with the Core i9-13900K, they would look a lot different, but we don’t believe that’s the case.

We did run a few tests with the Radeon 7900 XTX and GeForce RTX 4090 using the Core i9-13900K and found similar scaling behavior. Utilization on the Core i9 did look different in the Hogsmeade area, with two cores almost always maxed out, but frame rates were much the same.

It’s clear Hogwarts Legacy has some performance-related issues, so it’s hard to draw any concrete conclusions at this point, other than to say we’re hoping for patches that address them in the not too distant future. It’s also worth noting that AMD should have an updated driver soon (it didn’t arrive in time for our testing), which should include official Hogwarts Legacy support. As it stands, both Nvidia and Intel already have Hogwarts Legacy game-ready drivers, and we used those for testing in this review.

For those of you using a GeForce 40 series GPU, if your frame rates are much higher than what’s been reported here, make sure Frame Generation hasn’t turned itself on. We ran into that issue. Although we never even tried Frame Generation, or DLSS for that matter, whenever we installed a GeForce 40 series GPU the results were unexpectedly high. The fix was to enable DLSS, then enable Frame Generation, then disable Frame Generation and DLSS.

Also, for those of you facing stuttering, there’s a wide range of reasons for this and one could be system memory. We found that Hogwarts Legacy plays best with 32GB of RAM, it’s certainly playable with 16GB, but more RAM did help.

Another issue is VRAM usage, though this is easier to address by reducing visual settings like texture quality, or by disabling ray tracing, which is a massive VRAM hog in this game. We’re not willing to say the GeForce RTX 4070 Ti and its 12GB VRAM buffer are a disaster just yet, as performance could be addressed with a game patch, but at the very least Hogwarts Legacy has highlighted why 12GB of VRAM on an $800 GPU is a bad idea: at best, such a card will age about as well as the RTX 3070 has.

It’s also interesting to see the Radeon 6800 XT smashing the GeForce RTX 3070 by a 31% margin at 1440p using the ultra quality preset, and then by a 63% margin at 4K. Meanwhile, with ray tracing enabled, the 6800 XT was good for 55 fps at 1080p while the RTX 3070 managed just 17 fps. We did warn that parts like the RX 6800, with its 16GB of VRAM, would age better than the RTX 3070, and we’re now seeing evidence of that.

Of course, you can enable DLSS, XeSS or FSR 2 upscaling technologies, but these will only take you so far in the face of a CPU bottleneck. Perhaps this could be a workaround for GPUs lacking VRAM, though you’d likely have to use ‘performance’ modes and they typically suck when it comes to image quality.

Provided you have enough RAM and VRAM, along with a decent CPU and you’ve dialled in the appropriate level of visuals for your GPU, then the game should play quite well and it does appear to be a high quality game. We’re certain that with further optimization it will be a great game and a useful tool for benchmarking new PC hardware in the months to come.
