Modern Warfare II made over $800 million in its first three days on the market, more than any other title in the franchise’s 19-year history. Today we’re throwing over 40 graphics cards at this new Call of Duty game to see what kind of hardware you need to achieve your desired frame rate. The focus of this feature is GPU performance, and as usual this isn’t a review of the game; we’re primarily here to discuss frame rates.

That said, I’ve spent quite a few hours playing Modern Warfare II already and have found it enjoyable for the most part, despite many crashes. Admittedly, when playing the multiplayer portion of the game solo it’s been nearly flawless. I mean the stability has been much better; my gameplay, not so much…

Unfortunately, when teaming up with friends the party mode causes issues, with members often crashing out in the menu or sometimes even in-game. There’s a lot of work to be done on stability, which is a shame given the game isn’t free to play.

Crashes aside though, it’s a visually impressive game that’s a lot of fun to play and I expect I’ll be sinking countless hours into this one, along with Warzone 2 once it arrives. The PC version of Modern Warfare II offers loads of graphical options to tweak and tune, including various presets and upscaling technologies, and quite unexpectedly developer Infinity Ward has included a very useful benchmark.

As part of the multiplayer portion of the game, the built-in benchmark does an excellent job of simulating the CPU and GPU load you’ll encounter when jumping into multiplayer battles. So rather than attempt to manually benchmark the multiplayer portion of the game, which is virtually impossible to do accurately, especially for comparison with other hardware configurations, we’re going to use the built-in benchmark.

This will also make it easy for you to compare your results with ours. It won’t be exactly apples to apples, but it will certainly give you a good idea of where your setup stands.

Speaking of hardware, all our testing was conducted using the new Intel Core i9-13900K with DDR5-6400 memory on the Asus Prime Z790-A Wi-Fi motherboard.

As for display drivers, we used Adrenalin 22.10.3 for the Radeon GPUs and Game Ready Driver 526.47 for the GeForce GPUs, both of which note official support for Modern Warfare II. For the Intel Arc GPUs we used driver 101.3490; we also tried beta driver 101.3793 and saw no performance difference between the two in this title.

For testing we’ll be looking at 1080p, 1440p and 4K resolutions using the Ultra and Basic quality presets. Please note the game was restarted after each change to the quality settings, as they don’t apply correctly until the game is relaunched.

Then for some additional testing, we’ll take a look at preset scaling and some DLSS vs FSR testing, though we won’t be doing a detailed visual quality comparison as that takes almost as long as all the benchmarking. That’s something we may want to look at in the future though. Let’s now jump into the data.

Benchmarks: Ultra

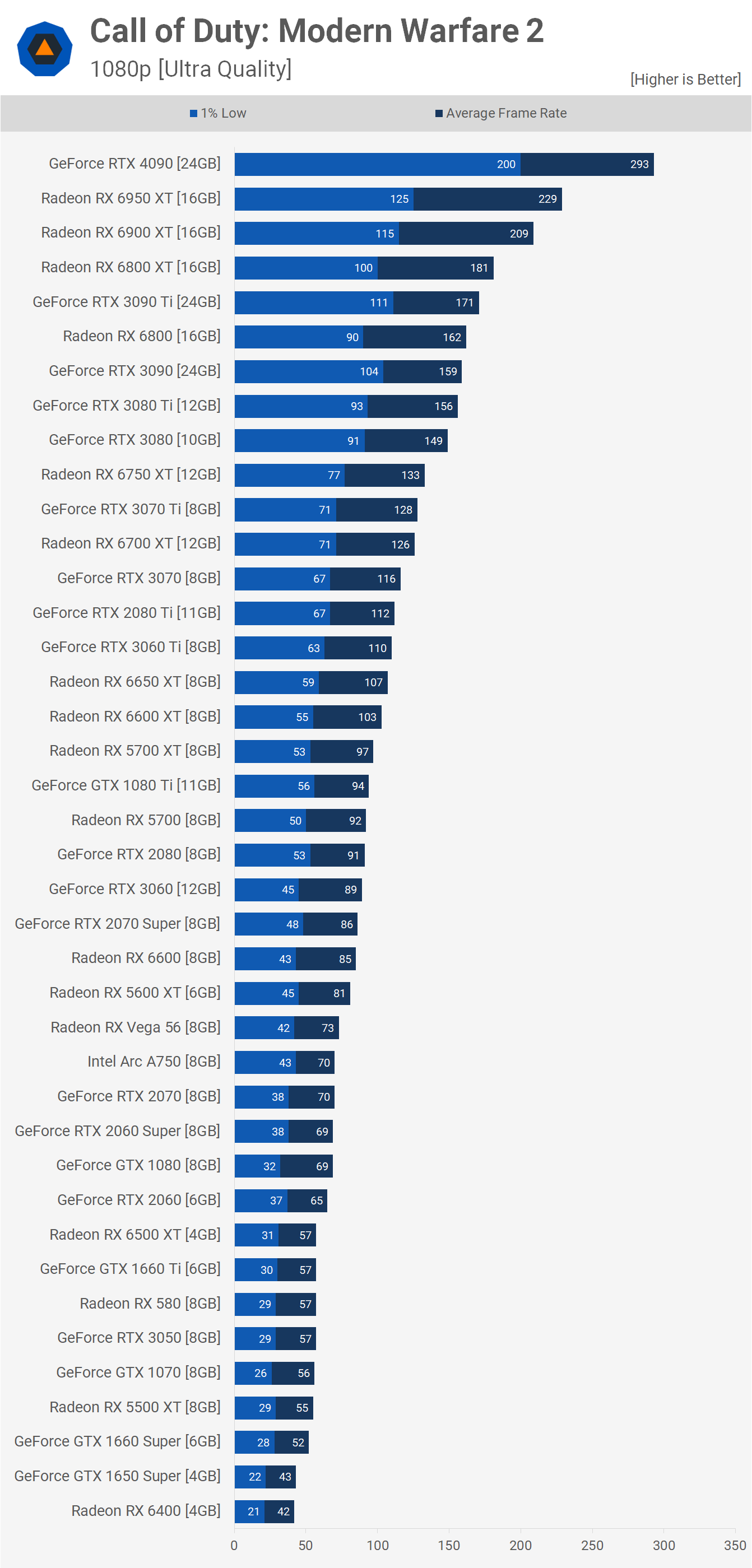

Starting with the 1080p data using the ultra quality preset we see that the RTX 4090 is good for almost 300 fps, hitting an impressive 293 fps, which meant it was 71% faster than the RTX 3090 Ti. However, it was just 28% faster than the 6950 XT as the soon to be replaced Radeon flagship managed a surprisingly high 229 fps, followed by the 6900 XT with 209 fps.
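The percentages quoted throughout are simple ratios of average frame rates. As a minimal sketch (the helper names `percent_faster` and `implied_fps` are our own, not part of the game or any benchmarking tool), here is how the 1080p Ultra figures above relate to each other. The 3090 Ti number is backed out of a rounded percentage, so treat it as an estimate rather than a measured result.

```python
def percent_faster(fps_a: float, fps_b: float) -> float:
    """How much faster card A is than card B, in percent."""
    return (fps_a / fps_b - 1.0) * 100.0


def implied_fps(fps_a: float, pct_faster: float) -> float:
    """Estimate B's frame rate when A is `pct_faster` percent faster than B."""
    return fps_a / (1.0 + pct_faster / 100.0)


# Averages quoted in the text for the 1080p Ultra run.
rtx_4090 = 293.0
rx_6950_xt = 229.0

margin = percent_faster(rtx_4090, rx_6950_xt)
# Assumption: derived from the rounded "71% faster" figure, not a measured value.
rtx_3090_ti_estimate = implied_fps(rtx_4090, 71.0)

print(f"RTX 4090 vs 6950 XT: {margin:.0f}% faster")
print(f"Implied RTX 3090 Ti average: ~{rtx_3090_ti_estimate:.0f} fps")
```

The same two helpers work for your own built-in benchmark results if you want to see how far your card sits from ours.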

So the Radeon GPUs are looking impressive here, and this is again on full display when looking at the 6800 XT which was able to beat the RTX 3090 Ti, making it 21% faster than the RTX 3080.

The 6750 XT also matched the RTX 3070 Ti and that meant the 6700 XT was slightly faster than the original 3070. Then we have the 6650 XT and 3060 Ti just behind the 2080 Ti.

This made the 6650 XT 20% faster than the RTX 3060, the GeForce GPU it competes with on price. The old 5700 XT was also mighty impressive: churning out 97 fps meant it was just 13% slower than the 2080 Ti, but more incredibly 7% faster than the RTX 2080 and 3% faster than the GTX 1080 Ti, while it massacred the RTX 2060 Super by a 41% margin. Excellent numbers for Team Red, really, and I wonder how much of this picture will change with future driver updates.
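One thing worth keeping in mind when reading these margins is that "X% slower" and "X% faster" aren't mirror images, because each uses a different card as the baseline. A quick sketch using the 5700 XT figure above (the function names are hypothetical, and the 2080 Ti value is an estimate derived from a rounded percentage):

```python
def baseline_from_slower(fps: float, pct_slower: float) -> float:
    """Estimate the faster card's fps when `fps` is pct_slower% below it."""
    return fps / (1.0 - pct_slower / 100.0)


def slower_to_faster(pct_slower: float) -> float:
    """Convert 'A is X% slower than B' into 'B is Y% faster than A'."""
    return (1.0 / (1.0 - pct_slower / 100.0) - 1.0) * 100.0


# The 5700 XT averaged 97 fps and was quoted as 13% slower than the 2080 Ti.
rtx_2080_ti_estimate = baseline_from_slower(97.0, 13.0)
flipped_margin = slower_to_faster(13.0)

print(f"Implied 2080 Ti average: ~{rtx_2080_ti_estimate:.0f} fps")
print(f"2080 Ti lead over the 5700 XT: ~{flipped_margin:.1f}%")
```

So a card that is 13% slower is facing a rival that is closer to 15% faster, and the gap between the two ways of stating it widens as the margin grows.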

The Radeon 5600 XT, which didn’t impress us upon release, managed 81 fps on average and that saw it trail the 2070 Super by a mere 6% margin. Then right beneath it is Vega 56 which was 6% faster than the GTX 1080, and that’s not something you often saw back in the day. Hell, Vega 56 beat the RTX 2070. What kind of madness is this?

Even AMD’s abomination, the 6500 XT, almost reached 60 fps: with 57 fps on average it was just 12% slower than the RTX 2060 and matched the 1660 Ti, RX 580 and RTX 3050. It would appear as though there is some driver work that needs to be done here by Nvidia. Of course, the same can be said of Intel as the Arc A750 is nowhere. Sure, 70 fps is playable, but it’s a far cry from the 89 fps the RTX 3060 produced.

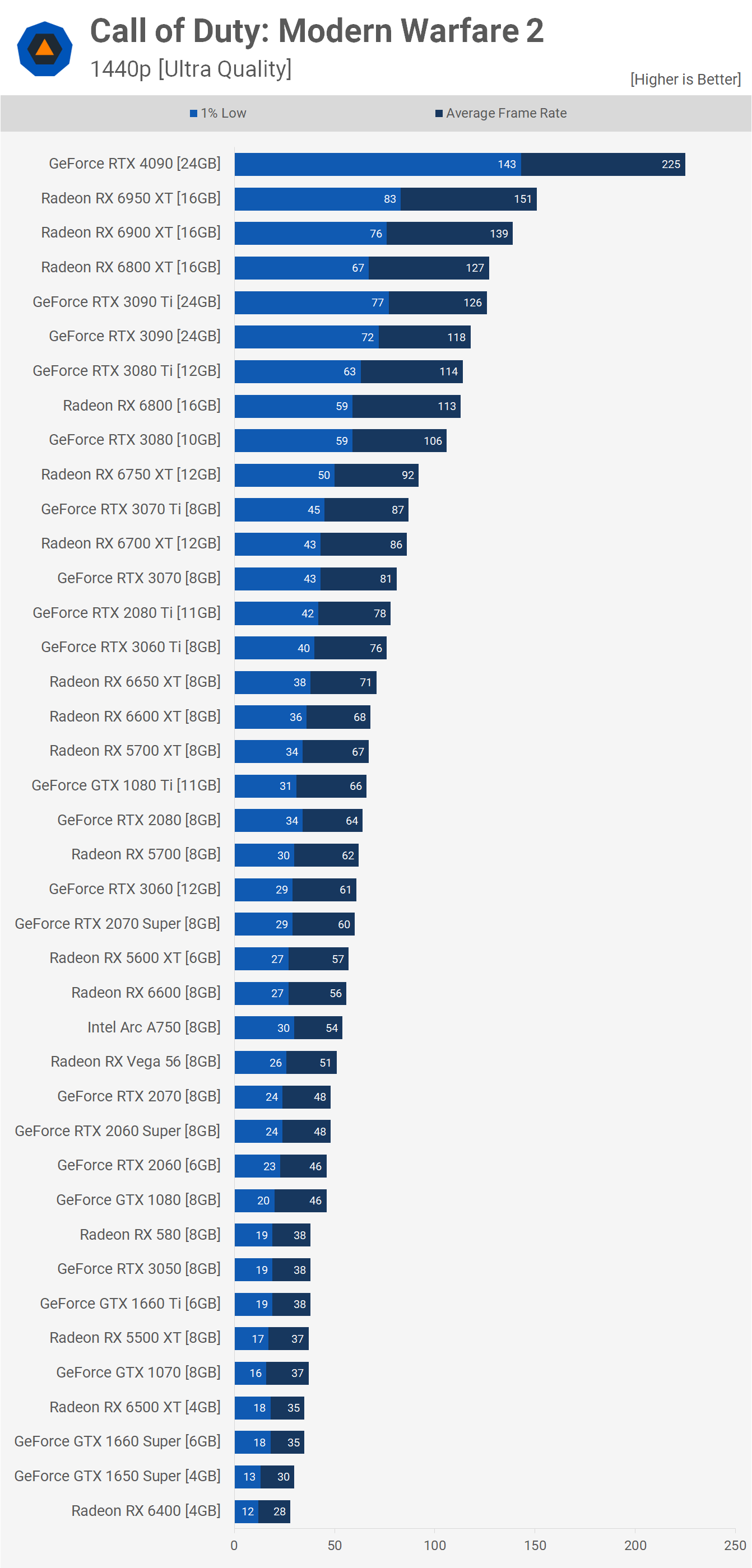

Moving to 1440p we see that the RTX 4090 was still good for 225 fps, making it almost 80% faster than the 3090 Ti and nearly 50% faster than the 6950 XT. Looking beyond the 4090 though, Nvidia cops a pounding as the 6950 XT was 20% faster than the 3090 Ti and the 6800 XT 20% faster than the RTX 3080. Hell, even the RX 6800 beat the 3080 to basically match the 3080 Ti.

The 6750 XT also looked mighty with 92 fps making it 6% faster than the 3070 Ti and 14% faster than the 3070. The old 2080 Ti was again mixing it up with the 3070 and 3060 Ti, while the much cheaper 6650 XT was right there with 71 fps making it just 9% slower than the Turing flagship.

The 5700 XT was impressive as it managed to match the GTX 1080 Ti, allowing it to edge out the RTX 2080 and beat the 2070 Super by a 12% margin. It was also 40% faster than the 2060 Super, so a great result there for AMD’s original RDNA architecture.

The RX 6600 and 5600 XT are seen alongside the RTX 2070 Super and Intel’s new A750, while Vega 56 also makes an appearance at this performance tier with 51 fps. Then dipping below 50 fps we have the standard RTX 2070 along with the 2060 Super, 2060 and GTX 1080. Then beyond that we’re struggling to achieve 40 fps on average.

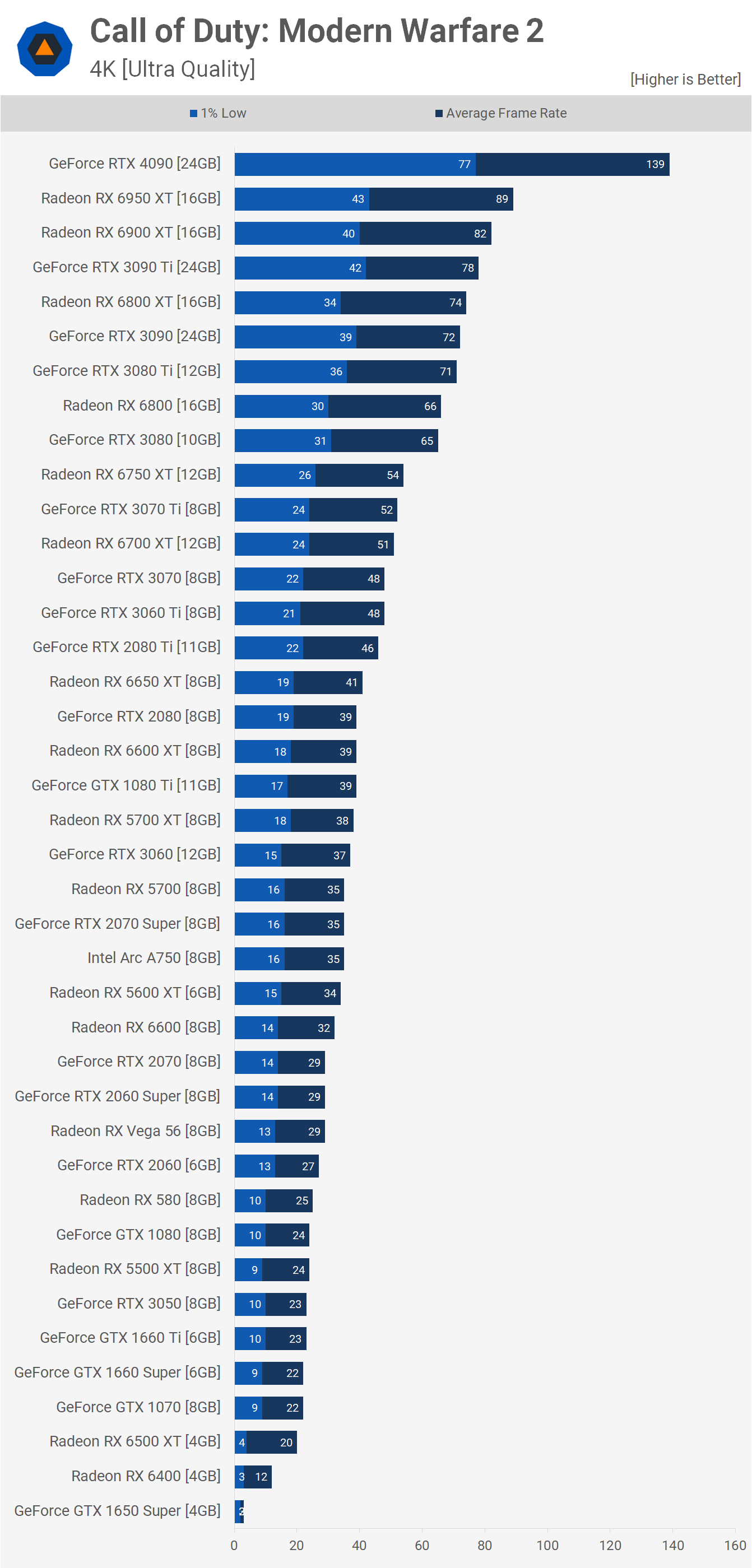

Then at 4K we see that the RTX 4090 is still good for well over 100 fps on average with 139 fps, making it 56% faster than the 6950 XT and 78% faster than the 3090 Ti. Even at 4K the 6800 XT is delivering RTX 3090 Ti-like performance with 74 fps on average and we see that the standard RX 6800 is able to match the RTX 3080 with 66 fps.

Then from the 6750 XT we are dipping below 60 fps, though it was nice to see the refreshed Radeon GPU matching the RTX 3070 Ti. The 6650 XT was good for 41 fps and then below that we are dropping down into the 30 fps range.

Benchmarks: Basic

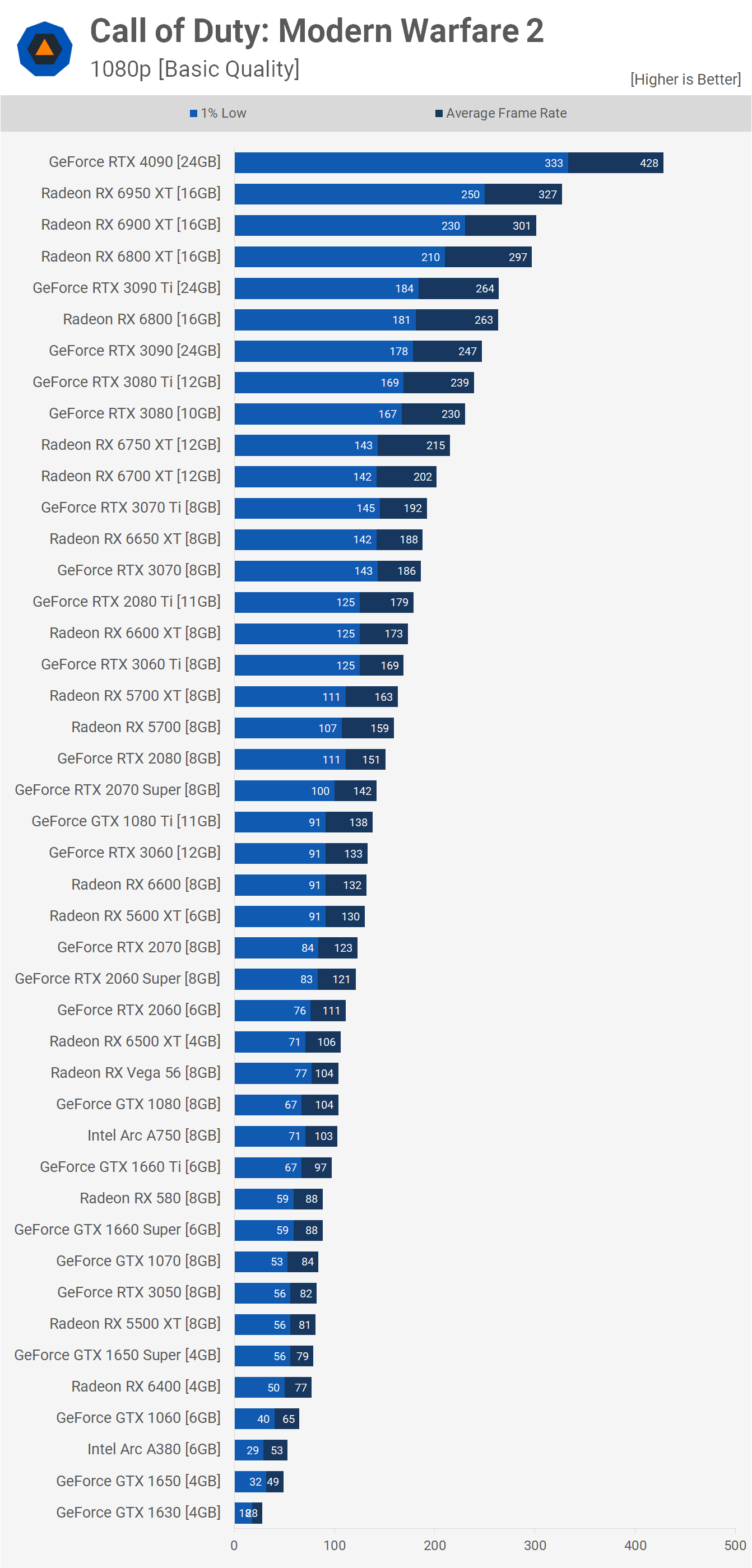

Further testing was performed using the ‘Basic’ quality preset and we’ll start with the 1080p data once more. For those of you interested in driving big frame rates for that competitive edge, the RTX 4090 is the way to go right now, pumping out 428 fps on average. That made it 31% faster than the 6950 XT and 62% faster than the 3090 Ti, and that meant the 6950 XT was 24% faster than the 3090 Ti.
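When two cards are each compared against the same reference, the margin between them falls out of the ratio of the two leads. Here is a small sketch of that step using the 1080p Basic figures above; `margin_between` is a name we made up for illustration.

```python
def margin_between(pct_over_x: float, pct_over_y: float) -> float:
    """Given a reference card that is pct_over_x% faster than card X and
    pct_over_y% faster than card Y, return how much faster X is than Y."""
    return ((1.0 + pct_over_y / 100.0) / (1.0 + pct_over_x / 100.0) - 1.0) * 100.0


# RTX 4090 was quoted as 31% faster than the 6950 XT
# and 62% faster than the 3090 Ti.
radeon_lead = margin_between(31.0, 62.0)

print(f"6950 XT vs 3090 Ti: ~{radeon_lead:.0f}% faster")
```

Note that the leads are divided, not subtracted: 62 minus 31 would suggest a 31% gap, whereas the ratio gives the 24% seen in the results.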

Not just the 6950 XT, but also the 6900 XT and 6800 XT were all a good bit faster than the 3090 Ti, which was matched by the RX 6800. That also meant the 6750 XT was just 7% slower than the RTX 3080, and 12% faster than the 3070 Ti. Then we see that the original 6600 XT was able to match the 2080 Ti and 3060 Ti with 173 fps.

We also see that the 5700 XT continues to impress with 163 fps, making it 8% faster than the RTX 2080, 15% faster than the 2070 Super and a massive 35% faster than the 2060 Super.

Then to my surprise the 6500 XT was right there, just a few frames slower than the RTX 2060, Vega 56 and the GTX 1080. We are using PCIe 4.0 though and with lower quality settings aren’t exceeding the 4GB VRAM buffer, so these are ideal conditions for the 6500 XT. Still, seeing it perform 20% faster than the RX 580 and GTX 1660 Super was a surprise given what we normally see.

Below the 1660 Super we have the GTX 1070, RTX 3050, 5500 XT, 1650 Super and a few other models that struggled to reach 50 fps.

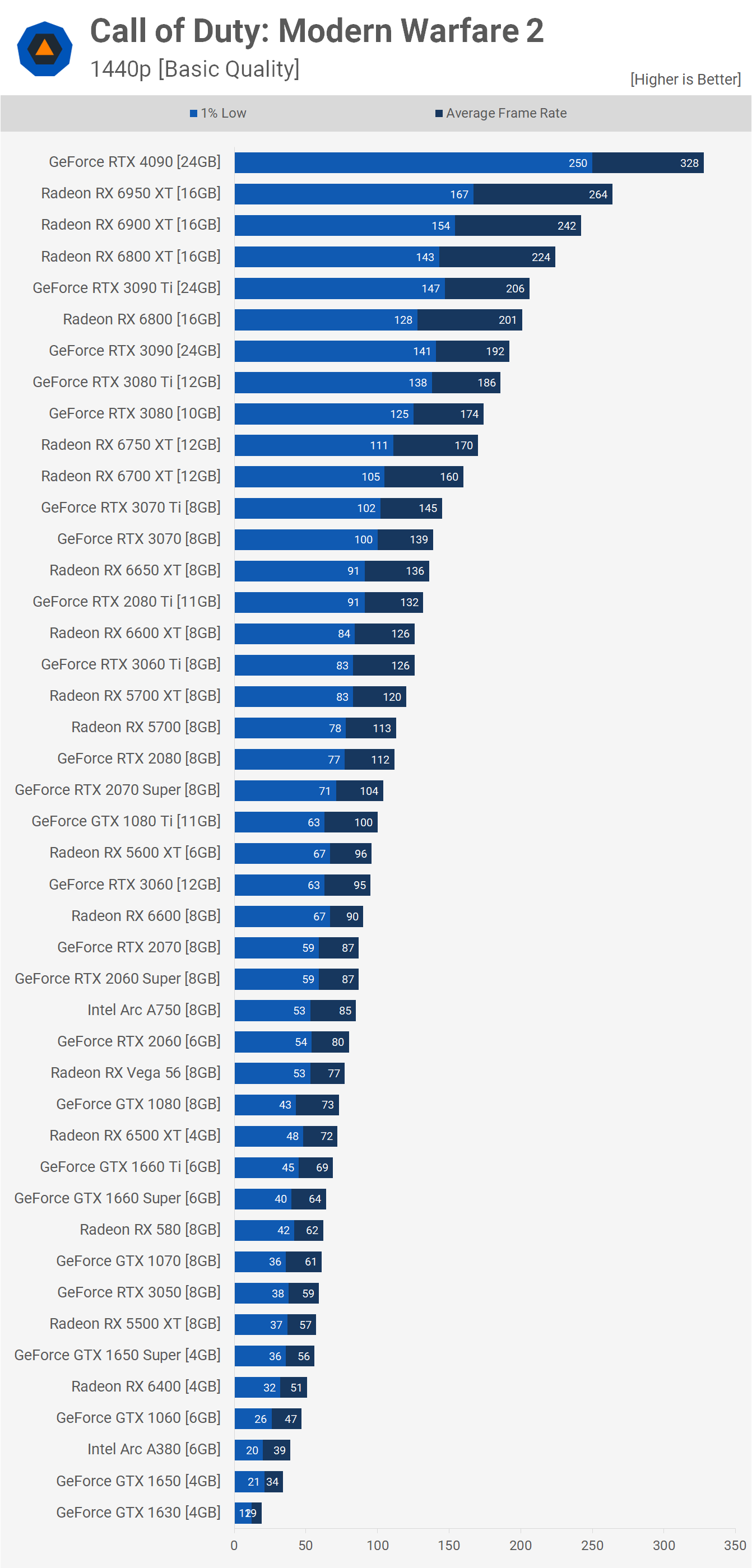

Moving up to 1440p, the RTX 4090 is still pumping out over 300 fps and this made it 24% faster than the 6950 XT and 59% faster than the 3090 Ti. The top GeForce remains on top but at least with the current drivers we can see the Radeon GPUs are far more efficient in this title.

The Radeon 6800 XT, for example, slayed the RTX 3080 by a 29% margin, while the RX 6800 was 26% faster than the RTX 3070.

In fact, the 6650 XT basically matched the RTX 3070 with 136 fps which also placed it on par with Turing’s flagship GeForce GPU, the RTX 2080 Ti. Below that we have the 6600 XT and 3060 Ti neck and neck followed by the 5700 XT with an impressive 120 fps. This made the RDNA GPU 7% faster than the RTX 2080, 15% faster than the 2070 Super and a massive 38% faster than the 2060 Super.

The 2060 Super even lost out to Intel’s A750, though both the RTX 3060 and RX 6600 were faster than the Arc GPU. Vega 56 managed to nudge ahead of the GTX 1080 and again the 6500 XT shocked with 72 fps, placing it ahead of the 1660 Ti, 1660 Super and RX 580.

The old Radeon RX 580 did break the 60 fps barrier, as did the GTX 1070, but below that we are falling into the 50s and the GTX 1060 didn’t even manage that with 47 fps. So at least for playing Call of Duty, the RX 580 has aged well, delivering 32% more performance than its Pascal competitor.
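If you’d rather think in terms of a frame-rate target than relative margins, the sketch below checks a handful of the 1440p Basic averages quoted above against a target and converts that target to a frame time. Only figures stated in the text are included, and the helper names are our own. These are averages, so a card that clears the target here can still dip below it in busy moments.

```python
# 1440p Basic averages quoted in the text (fps).
fps_1440p_basic = {
    "Radeon 6650 XT": 136,
    "Radeon 5700 XT": 120,
    "Radeon 6500 XT": 72,
    "GeForce GTX 1060": 47,
}


def frame_time_ms(fps: float) -> float:
    """Average time spent on each frame, in milliseconds."""
    return 1000.0 / fps


def meets_target(results: dict, target_fps: float) -> list:
    """Names of the cards whose average frame rate reaches the target."""
    return [gpu for gpu, fps in results.items() if fps >= target_fps]


for target in (60, 100):
    cards = ", ".join(meets_target(fps_1440p_basic, target))
    print(f"{target} fps ({frame_time_ms(target):.1f} ms per frame): {cards}")
```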

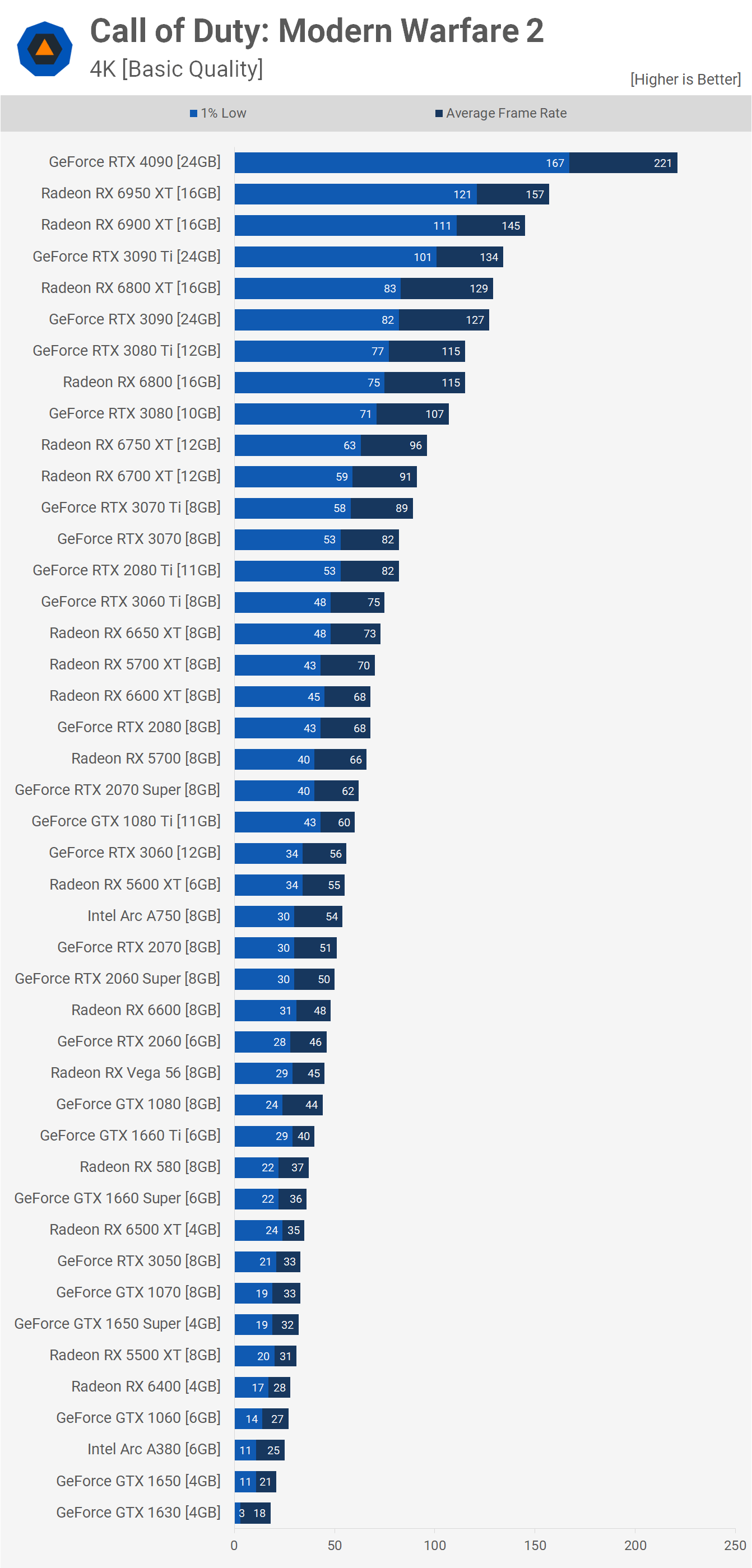

Now at 4K using the Basic preset we see that the RTX 4090 is able to break the 200 fps barrier with 221 fps on average. That’s 41% faster than the 6950 XT and 65% faster than the 3090 Ti.

Even at 4K the Radeon 6950 XT is 17% faster than the 3090 Ti. The 6800 XT was also just 4% slower than the 3090 Ti, but 20% faster than the RTX 3080. Meanwhile, the RX 6800 matched the 3080 Ti to beat the 3080 by a 7% margin. The 6700 XT was 11% faster than the 3070 and 2080 Ti, while the 6650 XT was a massive 30% faster than the RTX 3060.

The good old Radeon 5700 XT was also remarkable, this time with 70 fps on average, making it slightly faster than the RTX 2080, 13% faster than the 2070 Super, and a massive 40% faster than the RTX 2060 Super.

Even the 5600 XT was able to match the RTX 3060, though both did dip below 60 fps. Intel’s Arc A750 was also good for 54 fps making it slightly faster than the RTX 2070 and 2060 Super. Once we get down to the RX 580 we’re below 40 fps and the game isn’t playable at a satisfactory level, certainly not for multiplayer.

Preset Scaling

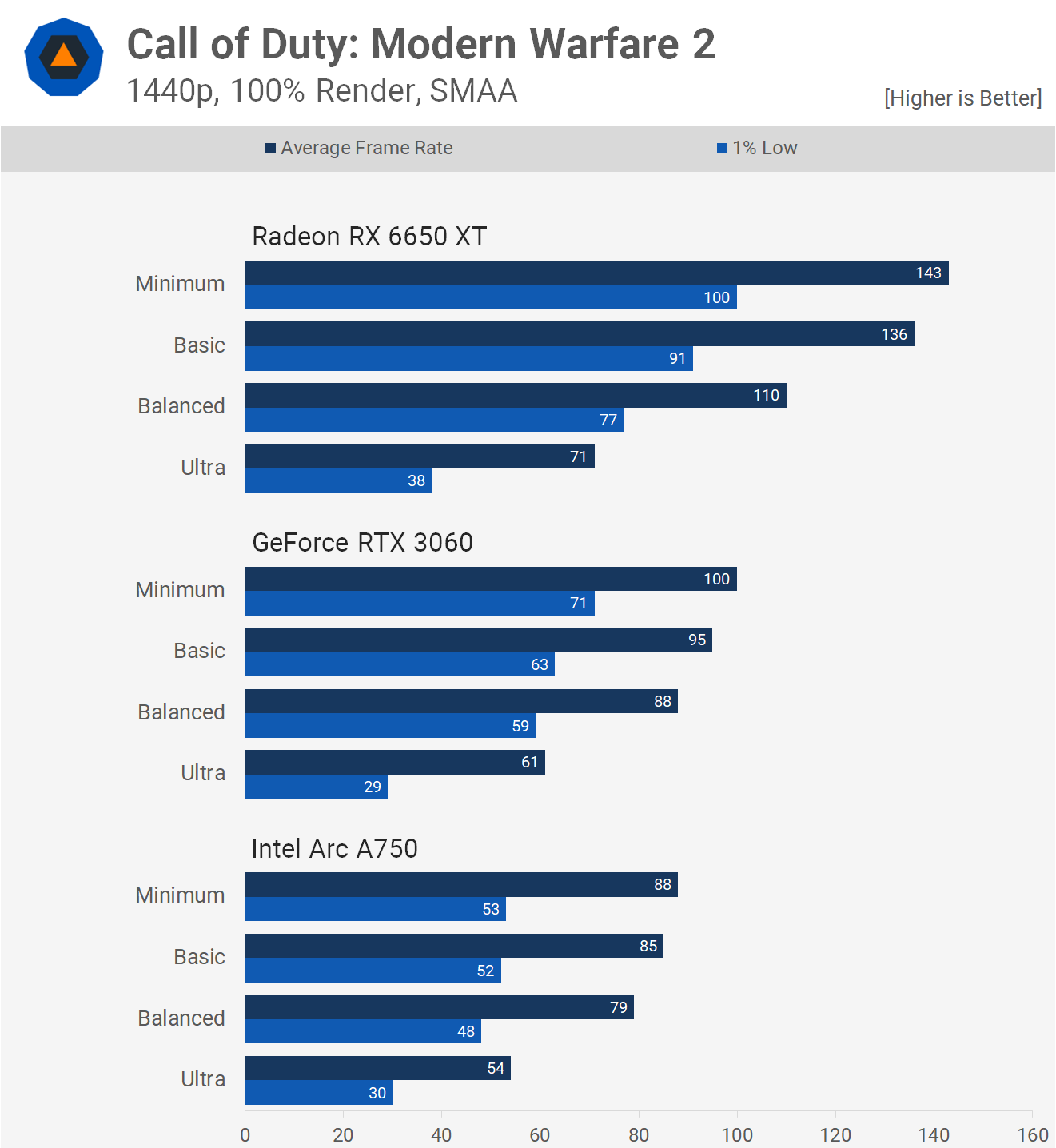

Here’s a look at how the Radeon 6650 XT, GeForce RTX 3060 and Arc A750 scale across the various presets; we made sure to restart the game after each change. The 6650 XT saw a massive 55% performance boost when going from Ultra to Balanced, then a further 24% increase from Balanced to Basic and finally a small 5% increase from Basic to Minimum.

The RTX 3060 saw a milder 44% increase from Ultra to Balanced, then just a 7% increase from Balanced to Basic with the same 5% gain when going from Basic to Minimum.

The Intel A750 saw a 46% increase from Ultra to Balanced, 8% from Balanced to Basic and just 4% from Basic to Minimum. So it’s interesting to note that while the 6650 XT was 16% faster than the RTX 3060 when running the Ultra quality settings, it was 25% faster using Balanced, and then a huge 43% faster using Basic.
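Because each step is quoted relative to the previous preset, the gains multiply rather than add. The sketch below compounds the step gains quoted above into an overall Ultra to Minimum figure, and carries the 6650 XT’s 16% Ultra lead forward to Balanced. The function names are our own, and since every input is a rounded percentage the outputs are estimates, not measured results.

```python
from math import prod  # Python 3.8+

# Step gains quoted in the text, in percent:
# Ultra -> Balanced, Balanced -> Basic, Basic -> Minimum.
preset_steps = {
    "Radeon 6650 XT": [55, 24, 5],
    "GeForce RTX 3060": [44, 7, 5],
    "Arc A750": [46, 8, 4],
}


def compound_gain(step_pcts):
    """Total percent gain after applying each step gain in sequence."""
    return (prod(1 + p / 100 for p in step_pcts) - 1) * 100


def lead_after_steps(lead_pct, own_steps, rival_steps):
    """Percent lead over a rival once both cards apply their own step gains."""
    own = prod(1 + p / 100 for p in own_steps)
    rival = prod(1 + p / 100 for p in rival_steps)
    return ((1 + lead_pct / 100) * own / rival - 1) * 100


for gpu, steps in preset_steps.items():
    print(f"{gpu}: Ultra -> Minimum is roughly +{compound_gain(steps):.0f}%")

# The 6650 XT's quoted 16% lead at Ultra, carried forward to Balanced.
balanced_lead = lead_after_steps(16, [55], [44])
print(f"Estimated 6650 XT lead over the RTX 3060 at Balanced: ~{balanced_lead:.0f}%")
```

Chaining one step further to Basic lands a couple of points away from the measured 43%, most likely because each whole-number percentage carries a little rounding error that accumulates.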

DLSS vs. FSR Performance

We also took a quick look at DLSS 2.0 vs FSR 1.0 performance. We’ve excluded XeSS as we couldn’t get it to work properly, even with the beta driver, so there are still some driver issues that Intel needs to work out.

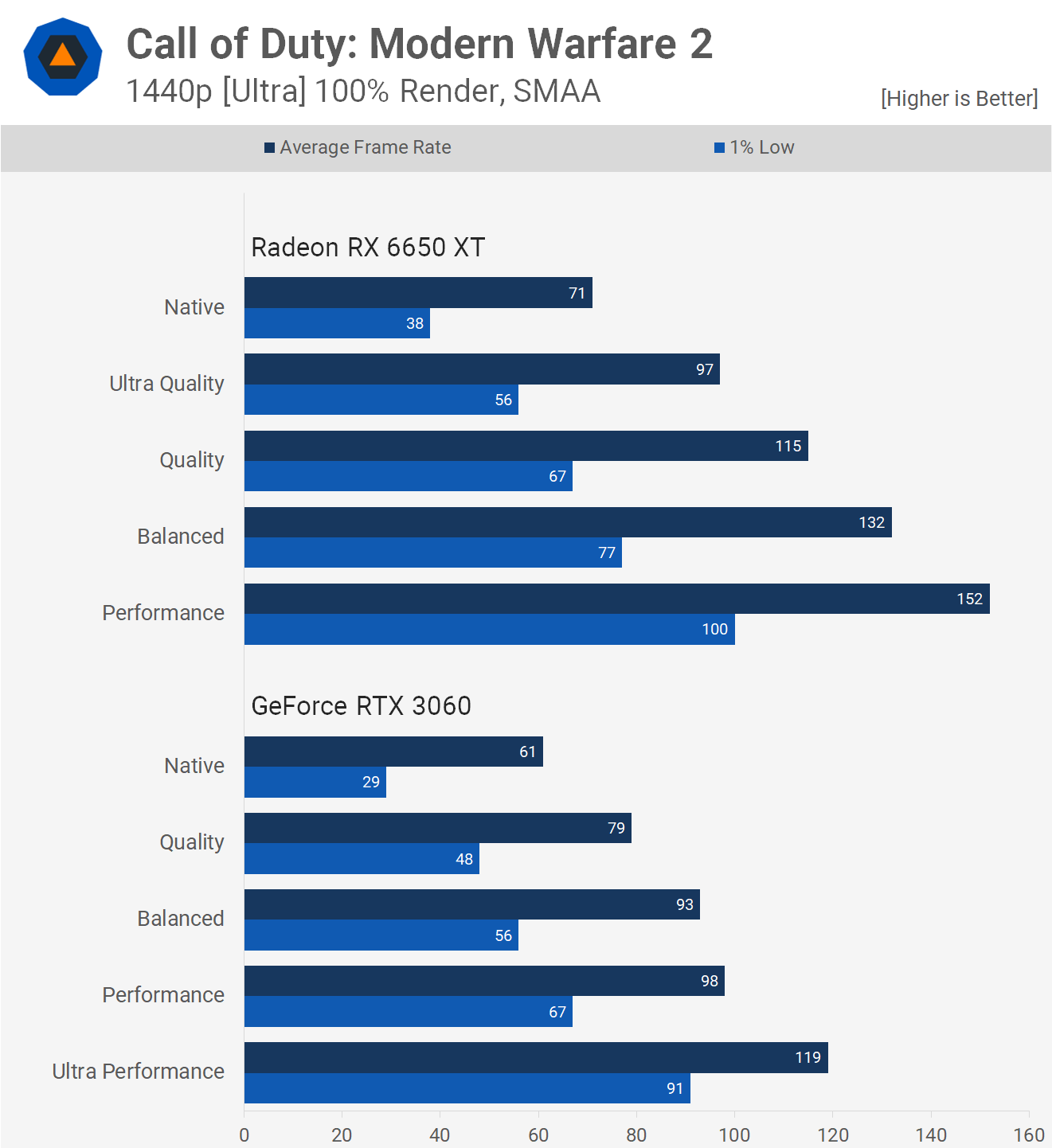

Also please note these are just the performance numbers and without a detailed look at visuals these don’t mean much, but we know many of you will want to see them, so here they are. Again, we do know that FSR 1.0 is massively inferior to DLSS, so keep that in mind.

For FSR we’re looking at a 37% increase over native with the ultra quality setting, then a further 19% with quality, another 15% with balanced and finally a further 15% using performance.

For DLSS we see a 30% increase using quality over native, another 18% boost for balanced over quality, just a 5% increase from balanced to performance and then a 21% increase from performance to ultra performance.
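Since each upscaling mode is quoted relative to the one before it, it can help to restate everything as a gain over native. Here is a rough sketch that compounds the step gains above; the mode lists simply mirror the order in the text, and `cumulative_over_native` is our own helper name. The inputs are rounded, so the totals are approximate.

```python
from itertools import accumulate
from operator import mul

# Step gains quoted in the text, each relative to the previous mode (percent).
fsr_steps = {"Ultra Quality": 37, "Quality": 19, "Balanced": 15, "Performance": 15}
dlss_steps = {"Quality": 30, "Balanced": 18, "Performance": 5, "Ultra Performance": 21}


def cumulative_over_native(steps):
    """Map each mode to its approximate total percent gain over native."""
    factors = accumulate((1 + pct / 100 for pct in steps.values()), mul)
    return {mode: (factor - 1) * 100 for mode, factor in zip(steps, factors)}


fsr_total = cumulative_over_native(fsr_steps)
dlss_total = cumulative_over_native(dlss_steps)

for name, totals in (("FSR 1.0", fsr_total), ("DLSS", dlss_total)):
    for mode, gain in totals.items():
        print(f"{name} {mode}: roughly +{gain:.0f}% over native")
```

Keep in mind the modes aren’t like for like between the two technologies, so this only compares frame rates, not image quality.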

What We Learned

Those were some interesting results and it appears as though AMD’s Radeon GPUs are providing gamers with the most bang for their buck in Call of Duty: Modern Warfare II. Of course, GeForce RTX owners can lean on DLSS and a brief look at this technology seems to suggest that visuals are good using the quality mode, though we should stress we only briefly looked at upscaling while putting this test together.

It’s also been a while since we took an in-depth look at GPU performance in a Call of Duty game, but from memory Radeon GPUs always seemed to perform well in games using the IW engine. Modern Warfare II uses the latest iteration, dubbed IW 9.0, which is apparently a heavily upgraded version of 8.0 that introduces a new water simulation system, improved AI, a new audio engine, and improved 3D directionality and immersive audio.

So it’s either a situation where Radeon GPUs just perform better relative to GeForce GPUs than you’ll typically see in other games, particularly those using the popular Unreal Engine, or Nvidia has a lot of work to do with its drivers, or, we suppose, perhaps a bit of both.

Nvidia’s latest driver has known stability issues, which the developer has noted, suggesting GeForce gamers roll back to an older driver for better stability. Of course, stability and performance aren’t the same thing, so there’s no guarantee a new driver will address both, but we’re sure that’s the hope for GeForce owners.

We should note we still encountered the odd crash using Radeon cards, but it wasn’t nearly as common. In any case, this does suggest the developer has some work to do on that front as well.

The good news is that performance in general is very good. Most gamers now target 1440p, and those playing the multiplayer portion of the game are likely to use lower quality settings; the Basic preset results show that it doesn’t take much to reach 60 fps.

Serious gamers will likely be aiming for over 100 fps at all times and for that you’ll require a rather high-end GPU, such as the GeForce RTX 3070 or Radeon 6700 XT. From the Ampere and RDNA2 generations, AMD really does clean up, especially at the high end, where the 6950 XT was often 20% to 30% faster than the 3090 Ti, which is a big margin. The 6800 XT also did in the RTX 3080 by a similar delta.

We do plan to add Modern Warfare II’s multiplayer benchmark to the battery of tests we often use in our reviews, and we’re keen to throw a few CPUs at this test in the future, so if performance does change, you’ll see that through our ongoing benchmarks comparing various GPUs and CPUs.
