The Intel Arc A580 is the newest graphics card of the Arc A-series, based on the Alchemist GPU architecture. Essentially, you’re getting all the same modern features you get with the A750 such as AV1 encoding, XeSS support, and ray tracing, at a more affordable price point, and that’s cool.

Granted, the A580 is going to be more affordable than the A750, so it’s also going to be slower, though hopefully not by much. Without question it’s going to be much faster than the horribly slow A380 that we looked at a year ago. It’s much closer to the A750 in specs, and both share the same silicon as the A770.

The Arc A580 packs 3072 shader units, 192 TMUs and 96 ROPs. That’s 14% fewer shader units, TMUs and ROPs when compared to the A750. As a side note, that’s three times as many shader units, TMUs and ROPs as the A380.

Another downgrade when compared to the A750 is a 50% reduction in L2 cache, down to 8 MB, but other than that, they’re very similar. In fact, the A580, A750 and 8GB A770 all feature the same memory configuration down to the last detail. That means the A580 comes with 8GB of GDDR6 memory operating at 16 Gbps on a 256-bit wide memory bus for 512 GB/s of bandwidth. The A580 also supports the full x16 PCIe 4.0 interface and features a TDP of 175 watts.
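For anyone wondering where the 512 GB/s figure comes from, here is a minimal sketch of the usual peak-bandwidth arithmetic: per-pin data rate multiplied by bus width, divided by 8 to convert bits to bytes. The function name is ours, purely for illustration, and the result is a theoretical peak rather than a measured number.

```python
def memory_bandwidth_gbs(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Peak theoretical memory bandwidth in GB/s.

    data_rate_gbps: effective per-pin data rate in Gbps (16 for the A580's GDDR6)
    bus_width_bits: memory bus width in bits (256 for the A580)
    """
    # Gbps per pin * number of pins = total Gbit/s; divide by 8 bits per byte
    return data_rate_gbps * bus_width_bits / 8


a580_bandwidth = memory_bandwidth_gbs(16, 256)
print(f"A580 peak bandwidth: {a580_bandwidth:.0f} GB/s")  # prints "A580 peak bandwidth: 512 GB/s"
```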

In terms of performance, the Arc A580 shouldn’t be that much slower than the A750, which is a good thing given Intel has set the MSRP at $180. That’s just $40 less than what the outgoing A750 is selling for today, or almost a 20% discount. The focus of this comparison won’t be on the A750 though, but rather on the more relevant competition in the Radeon RX 6600 and (we guess) the GeForce RTX 3050.

The Radeon RX 6600 is particularly relevant because it can be had for $210 right now, whereas the most affordable RTX GPU, the 3050, generally costs quite a bit more, though we’re seeing more discounts lately, with prices dropping to around $230. That said, we know the RTX 3050 is generally slower than the RX 6600, so even at $230 it’s not a great deal.

Usually for a review we’d include more than a dozen GPUs for comparison, but for this one we didn’t have long with the card, so we decided to test a range of newer games, comparing the A580, 6600 and 3050. That means all of the data for this content is 100% fresh.

For testing we’re using our AMD Ryzen 7 7800X3D test system with 32GB of DDR5-6000 CL30 memory. The latest display drivers were used for all three GPUs, and resizable BAR was enabled as that’s the default setting for this hardware configuration. In total we have a dozen games to go over, tested at both 1080p and 1440p, along with some overclocking and power consumption figures, so let’s get into it…

Benchmarks

First up, we have The Last of Us Part I. The performance isn’t particularly favorable for the Arc GPUs, especially the A580, which only achieved 41 fps at 1080p and 19 fps at 1440p using the high preset, even though Intel promotes this as a high-quality 1080p gaming GPU. In contrast, the RTX 3050 was 20% faster at 1080p, and the RX 6600 outpaced it by a significant 44%.

Intel says it addressed Arc’s subpar performance in The Last of Us Part I with its October 4th driver update. However, these improvements didn’t make it into the A580’s release driver. Intel asserts that the upcoming A580 driver will incorporate these adjustments.

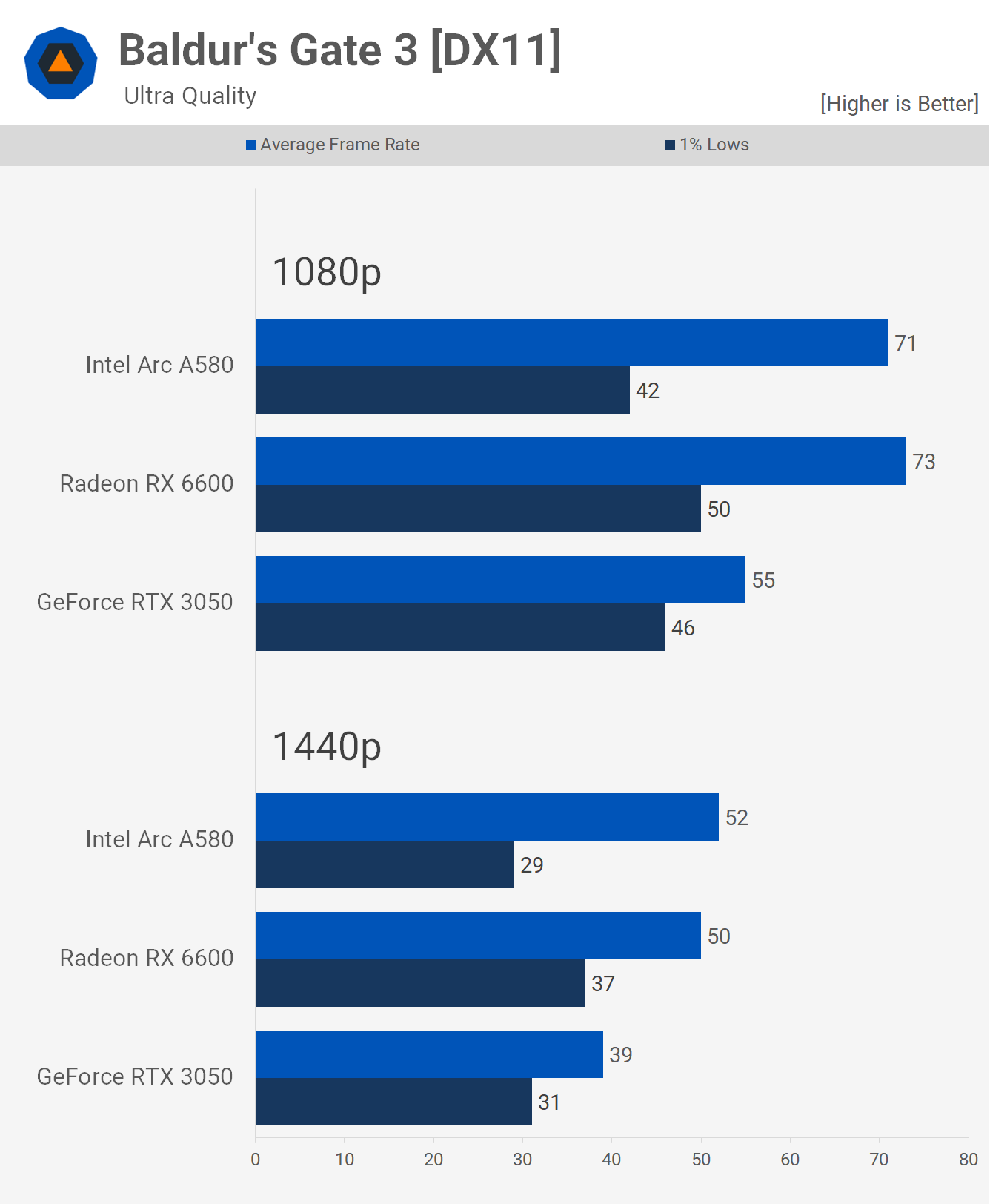

Next, we have the highly popular Baldur’s Gate 3. The A580 fares better here, though there were some minor frame pacing discrepancies compared to the RX 6600. However, on the whole, the performance was comparable between the two GPUs, allowing the A580 to surpass the RTX 3050 by a significant 29%.

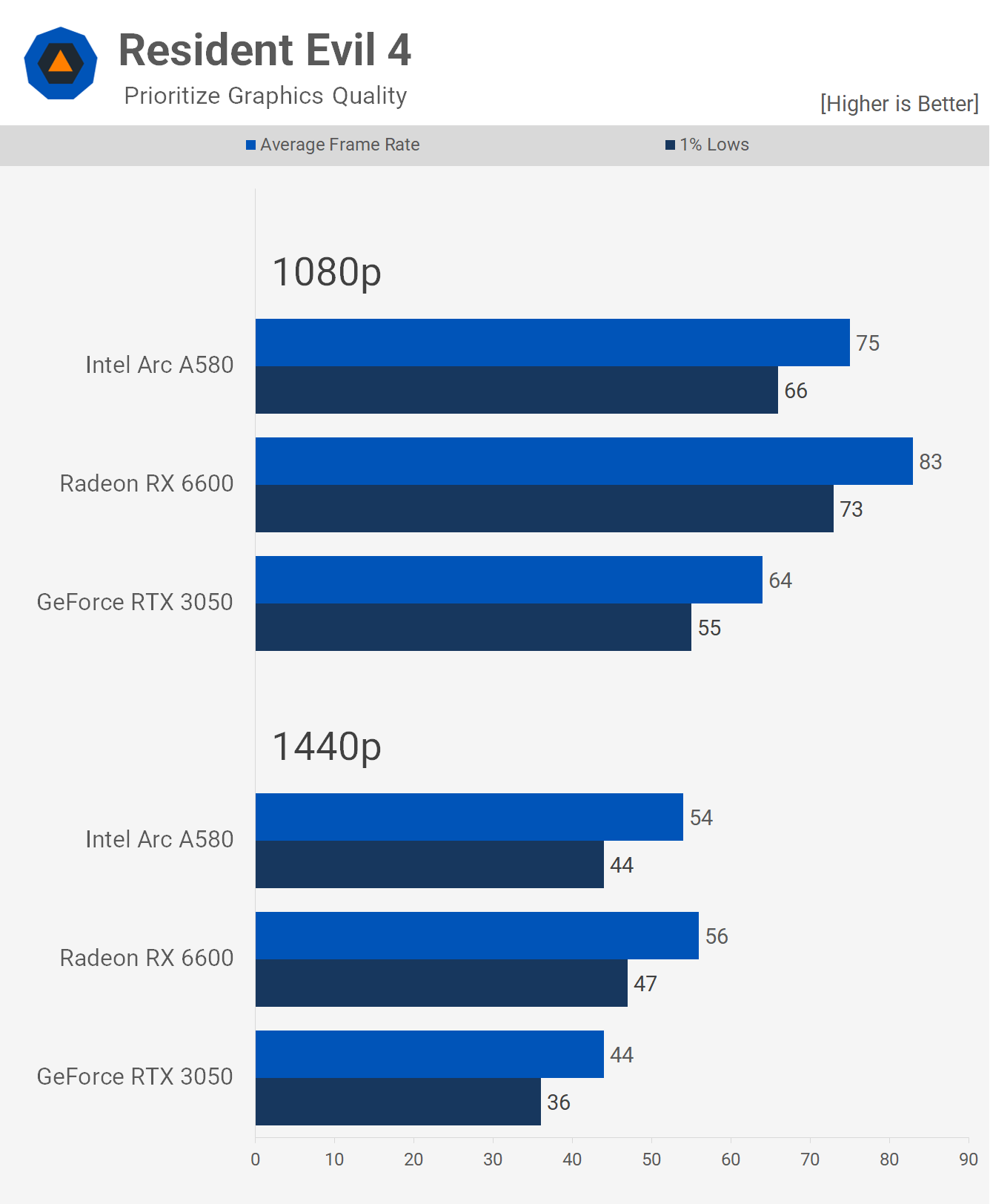

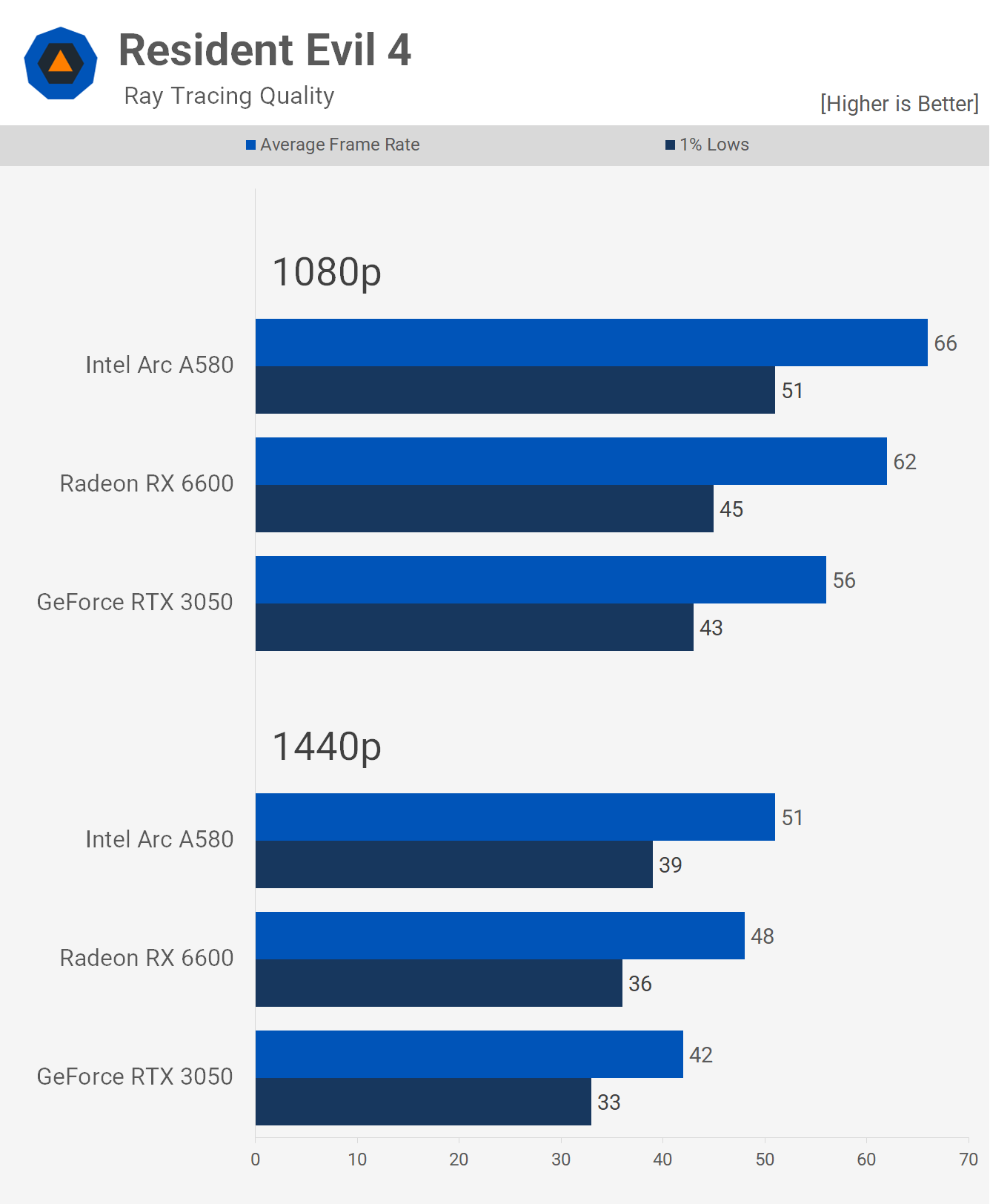

In Resident Evil 4, the A580 held its ground, outdoing the RTX 3050 by 17% at 1080p and trailing the RX 6600 by just 10%. Achieving 75 fps at 1080p using the “prioritize graphics quality” preset is a positive outcome.

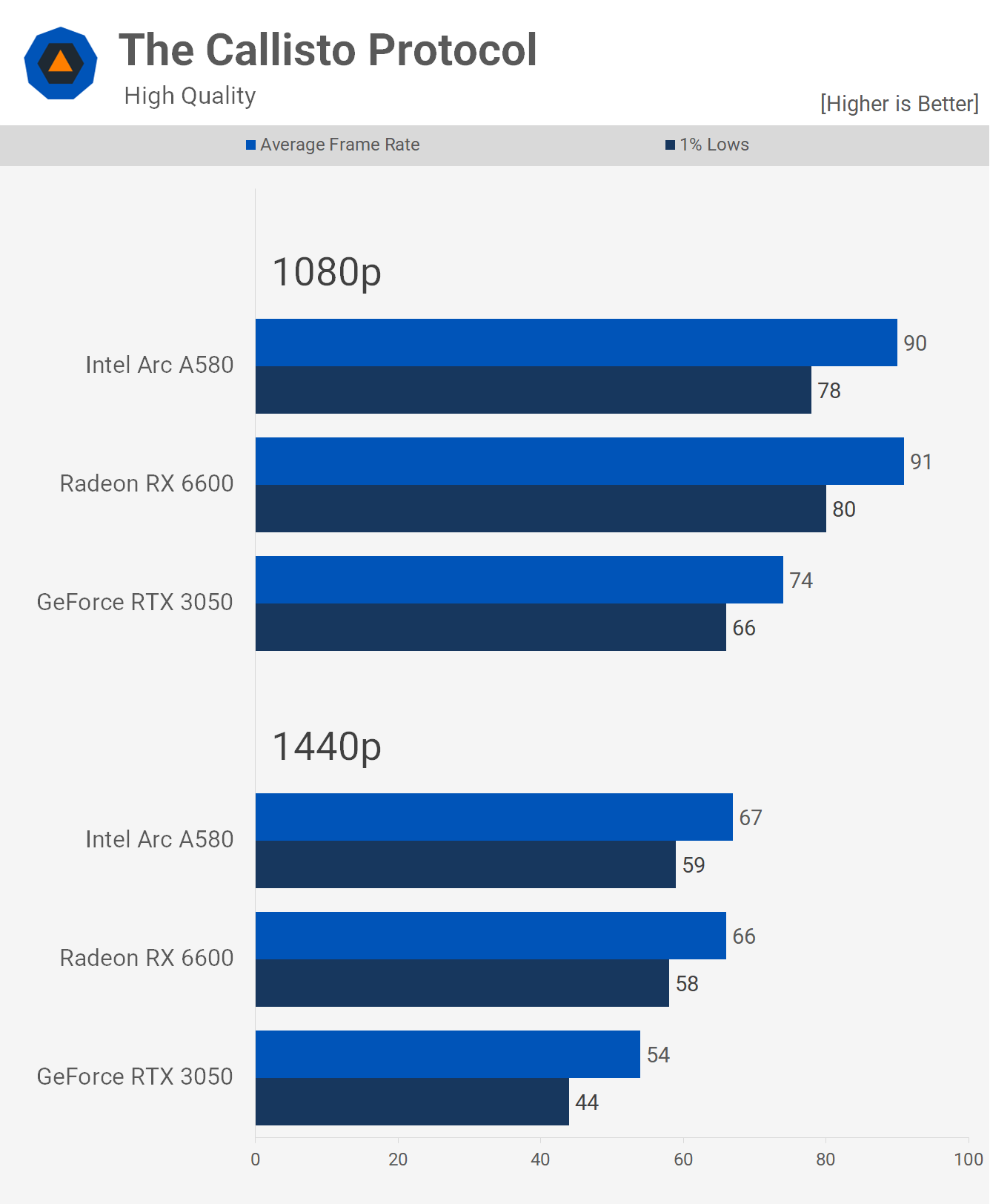

In The Callisto Protocol, the A580 delivered highly competitive results, matching the RX 6600 at both tested resolutions. This made it 22% quicker than the RTX 3050 – a strong performance for Intel.

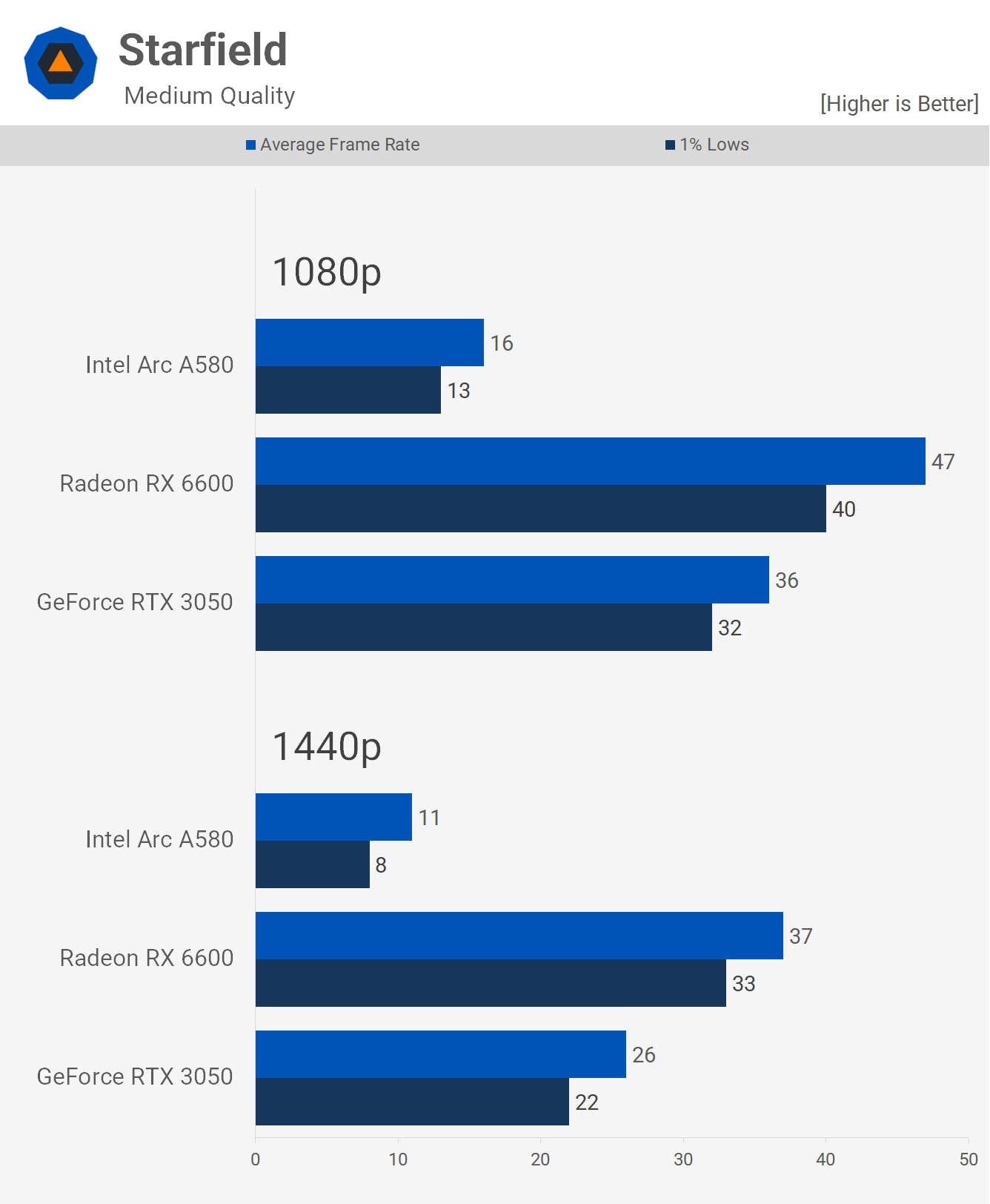

Starfield proved to be another challenging title for Arc. The RX 6600 achieved 47 fps at 1080p using the medium preset, whereas the A580 lagged far behind with only 16 fps. Intel insists this has been rectified in their latest driver, but those fixes weren’t part of the A580’s initial driver release, which seems like a considerable oversight. While we’ve witnessed stellar performance from Arc in some games, there are instances where it severely underperforms, as is evident here.

Surprisingly, Ratchet & Clank: Rift Apart stands out as a strong performance title for the A580. In this game, it significantly outperformed both the RX 6600 and RTX 3050, producing 32% more frames at 1080p and 45% more at 1440p. Given its die size and power consumption, which we’ll address shortly, we feel that we should see more performances like this from Arc GPUs, especially the A580.

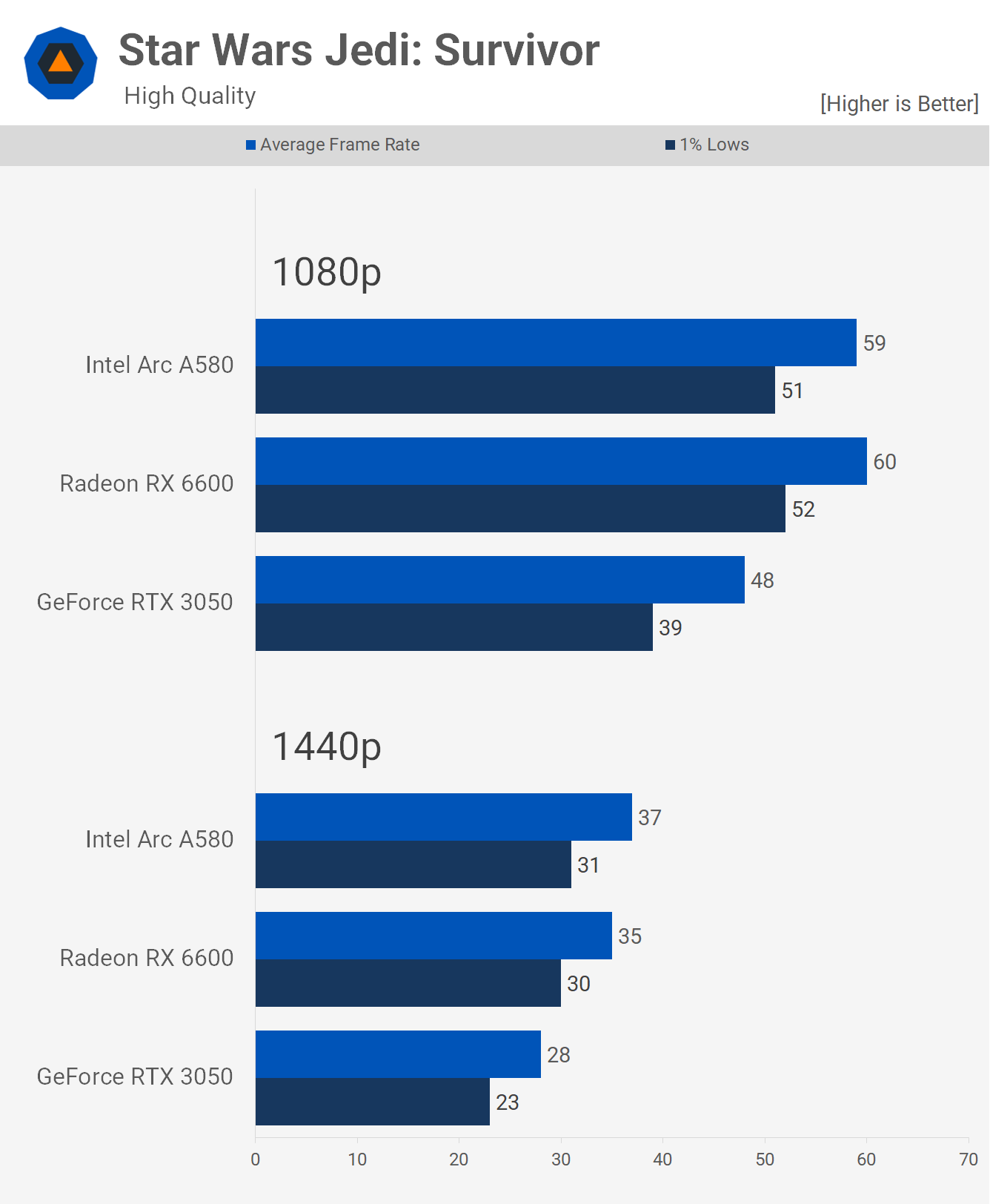

In Star Wars Jedi: Survivor, the A580 showcased its strength, overshadowing the RTX 3050 by 23% at 1080p and matching the performance of the RX 6600 – another impressive result.

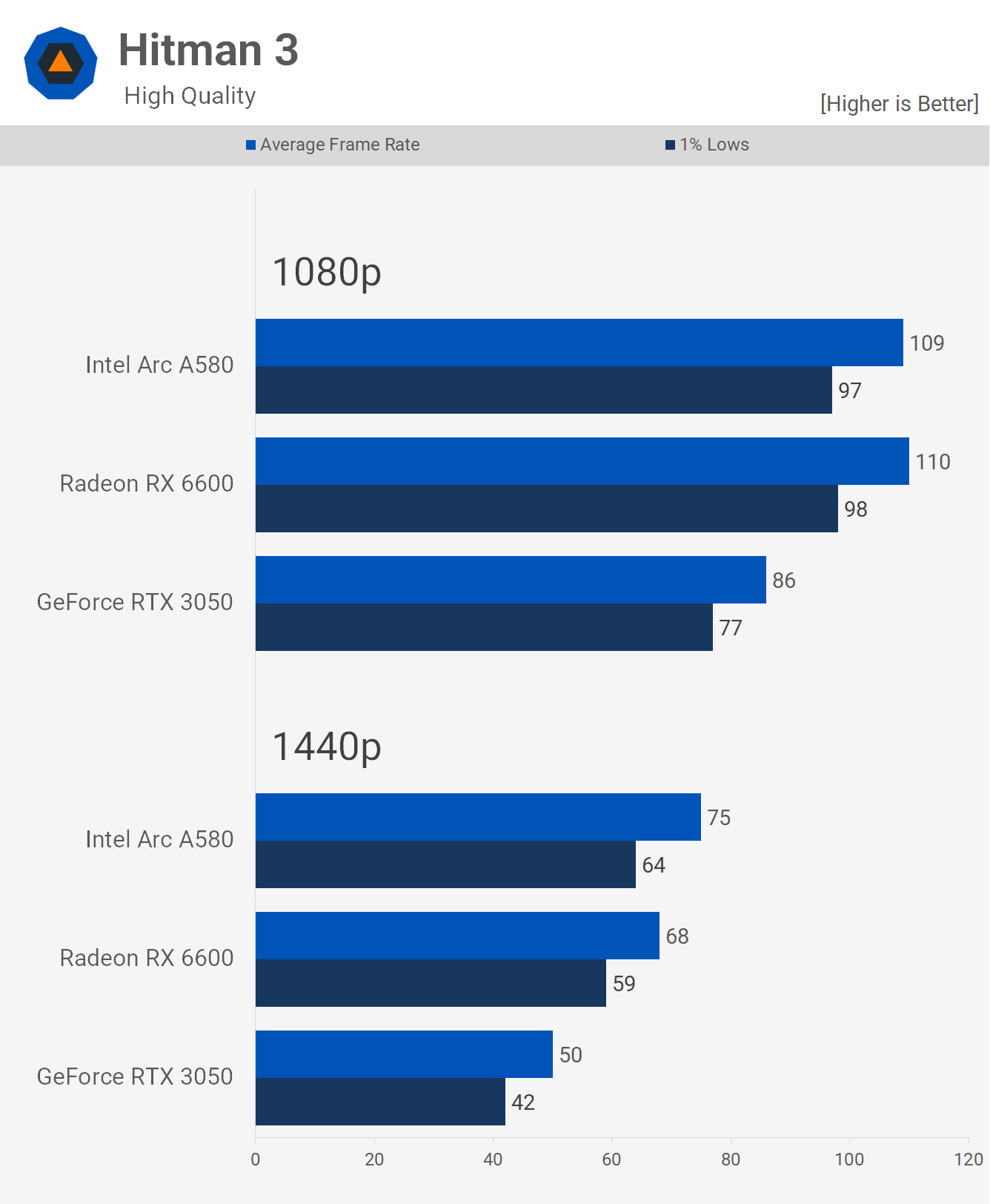

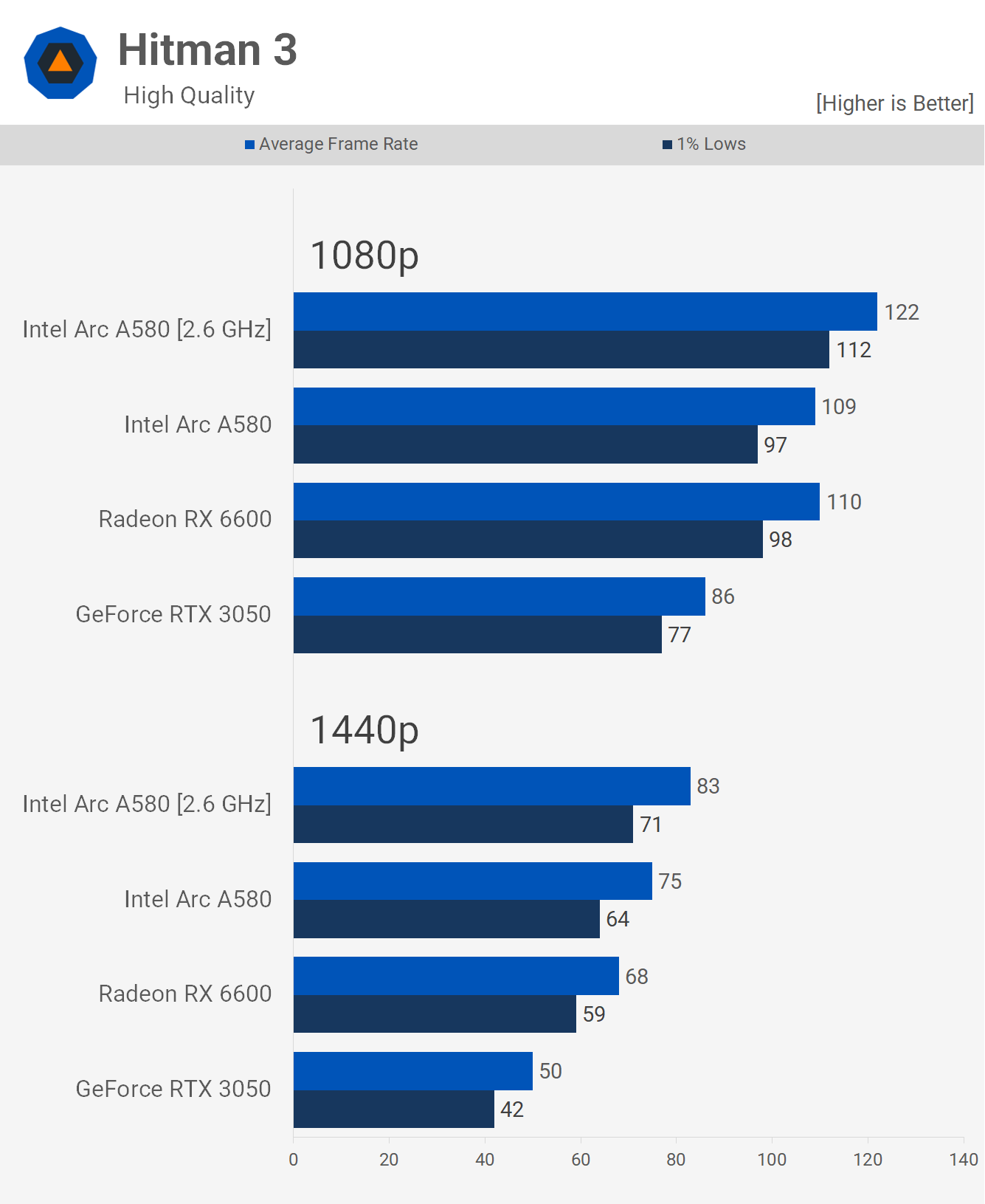

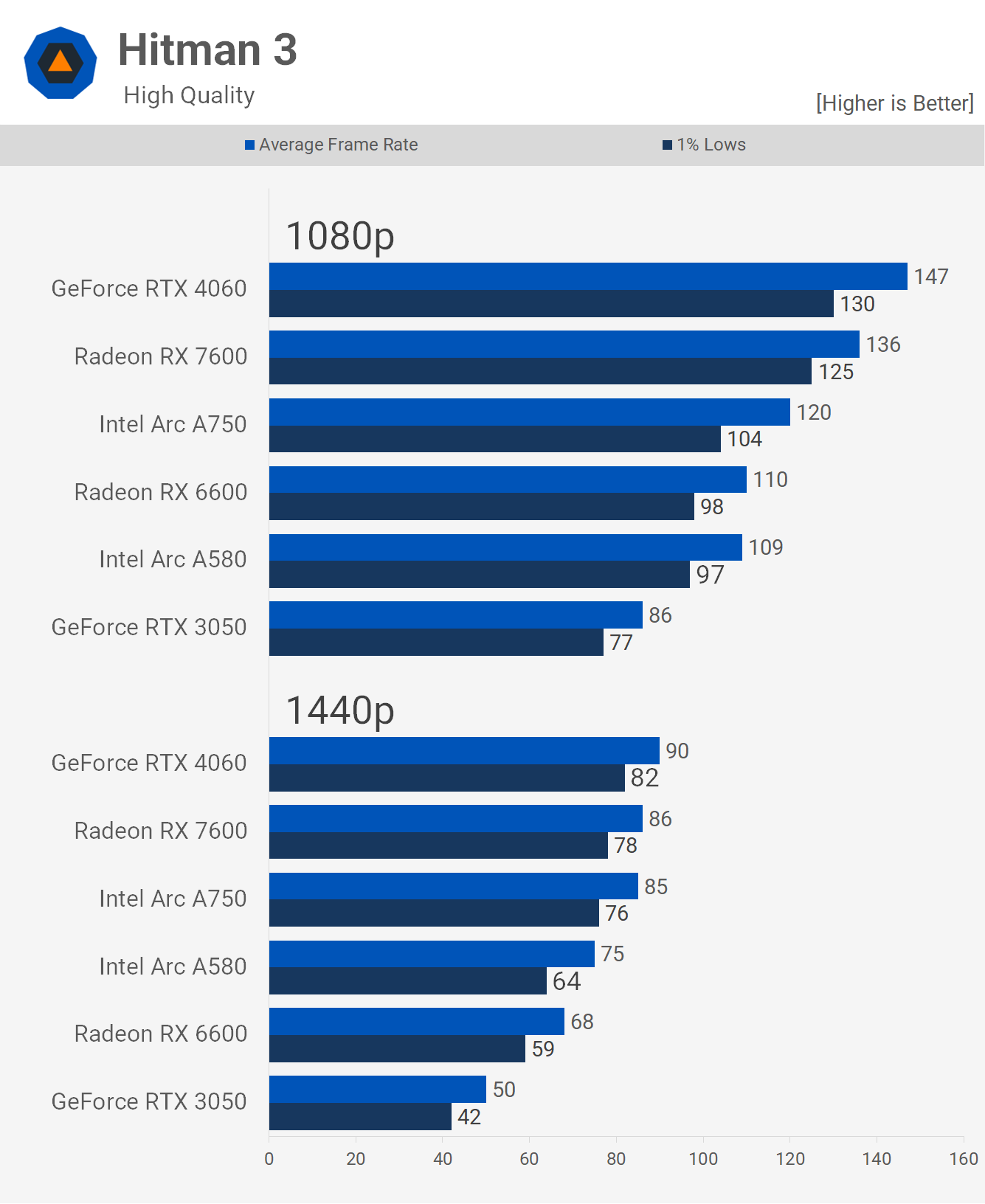

Hitman 3 is yet another title where the A580 shines. Here, it equaled the RX 6600 at 1080p and surpassed it by 10% at 1440p, achieving a respectable average of 75 fps.

While the performance in F1 23 wasn’t stellar, the A580 still managed a commendable showing, delivering 121 fps at 1080p and 95 fps at 1440p. This put it in the same ballpark as the RTX 3050, especially at the 1440p resolution. However, it trailed the RX 6600 by 20% at 1080p. Still, the overall performance was decent.

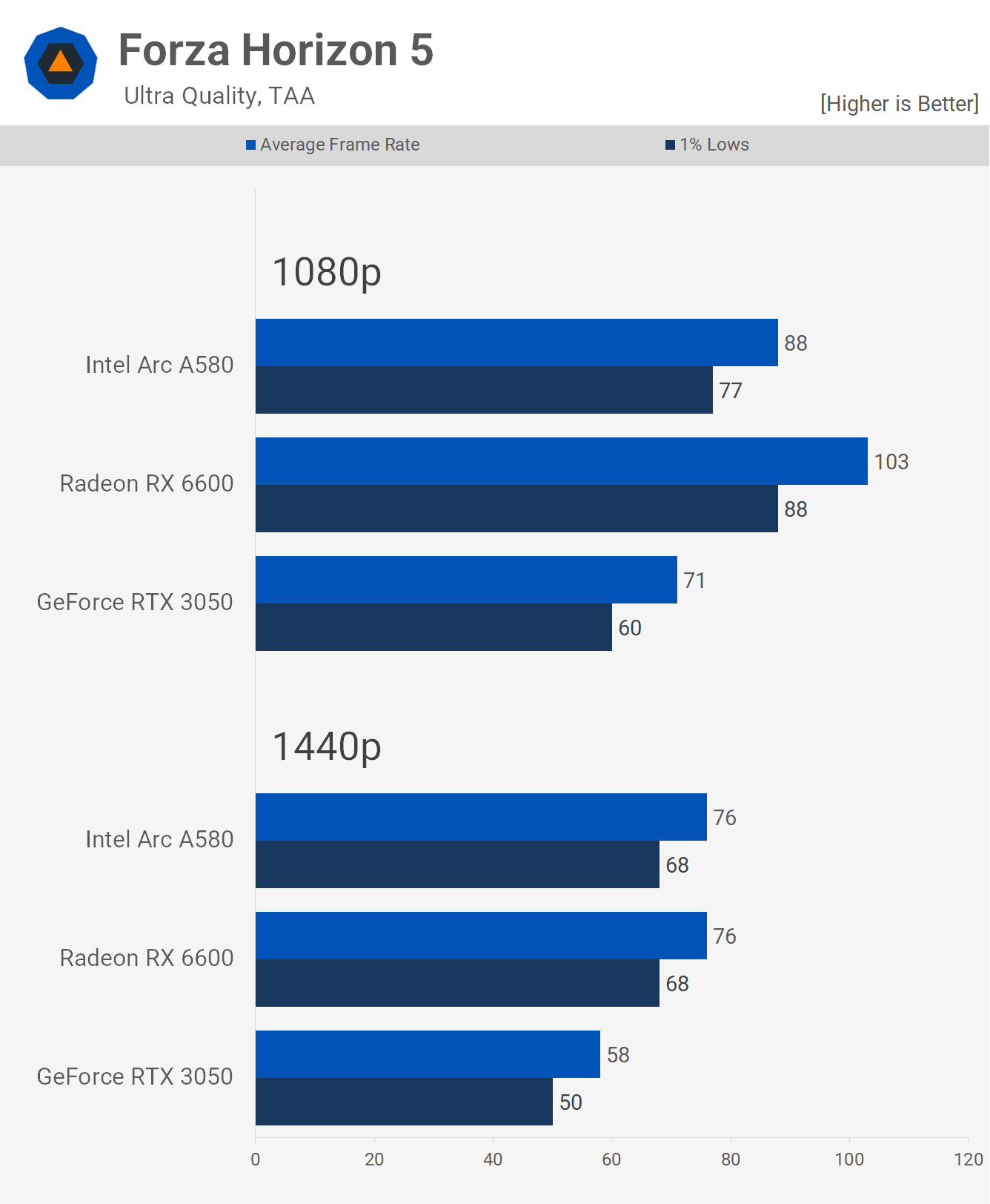

The results for Forza Horizon 5 were promising. At 1080p, the A580 averaged 88 fps, landing closer to the RX 6600 than to the RTX 3050. This made it 15% slower than the RX 6600 but a solid 24% faster than the RTX 3050. Moving to 1440p, the A580 surpassed the RTX 3050 by 31%, and its greater memory bandwidth allowed it to rival the RX 6600.

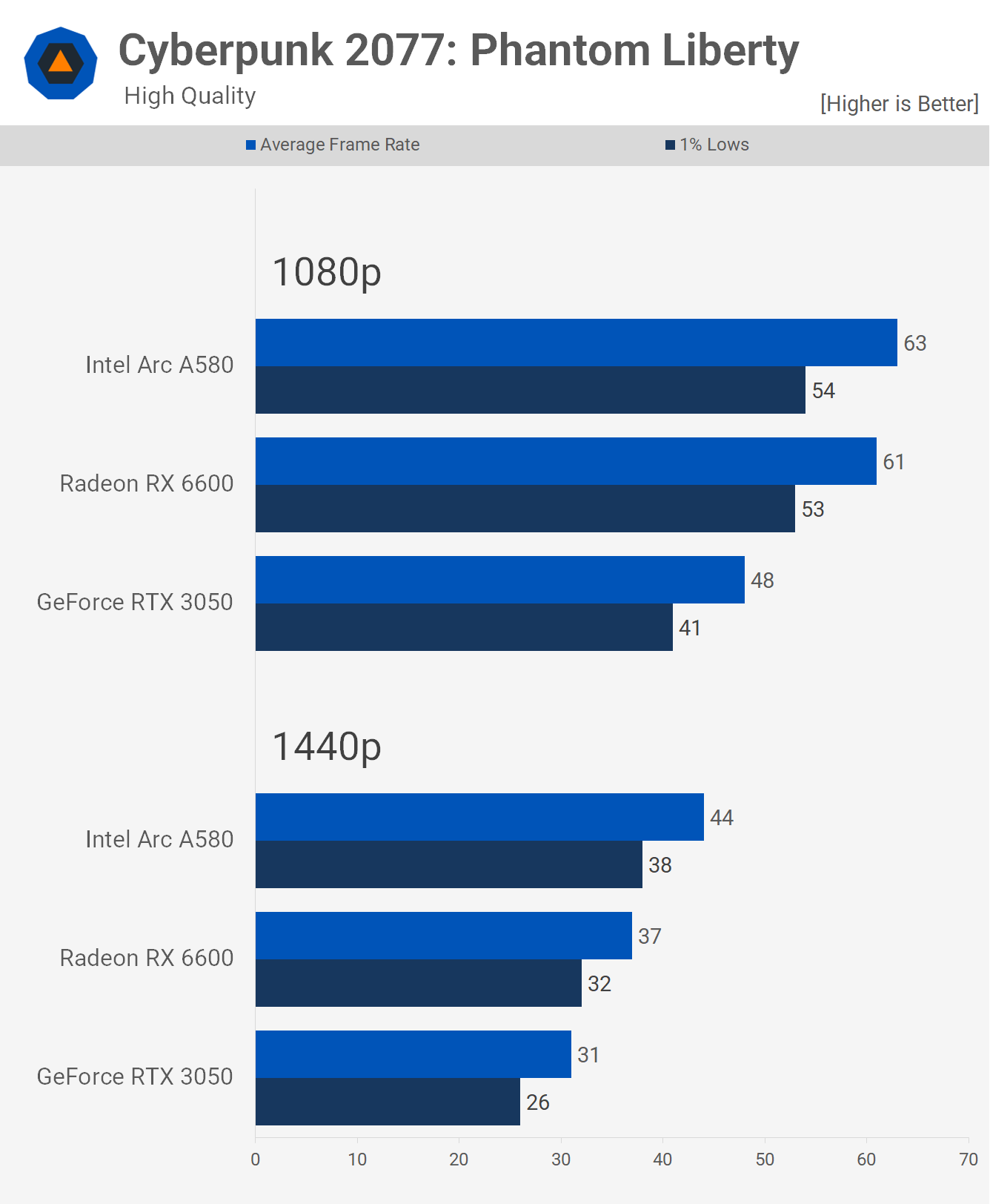

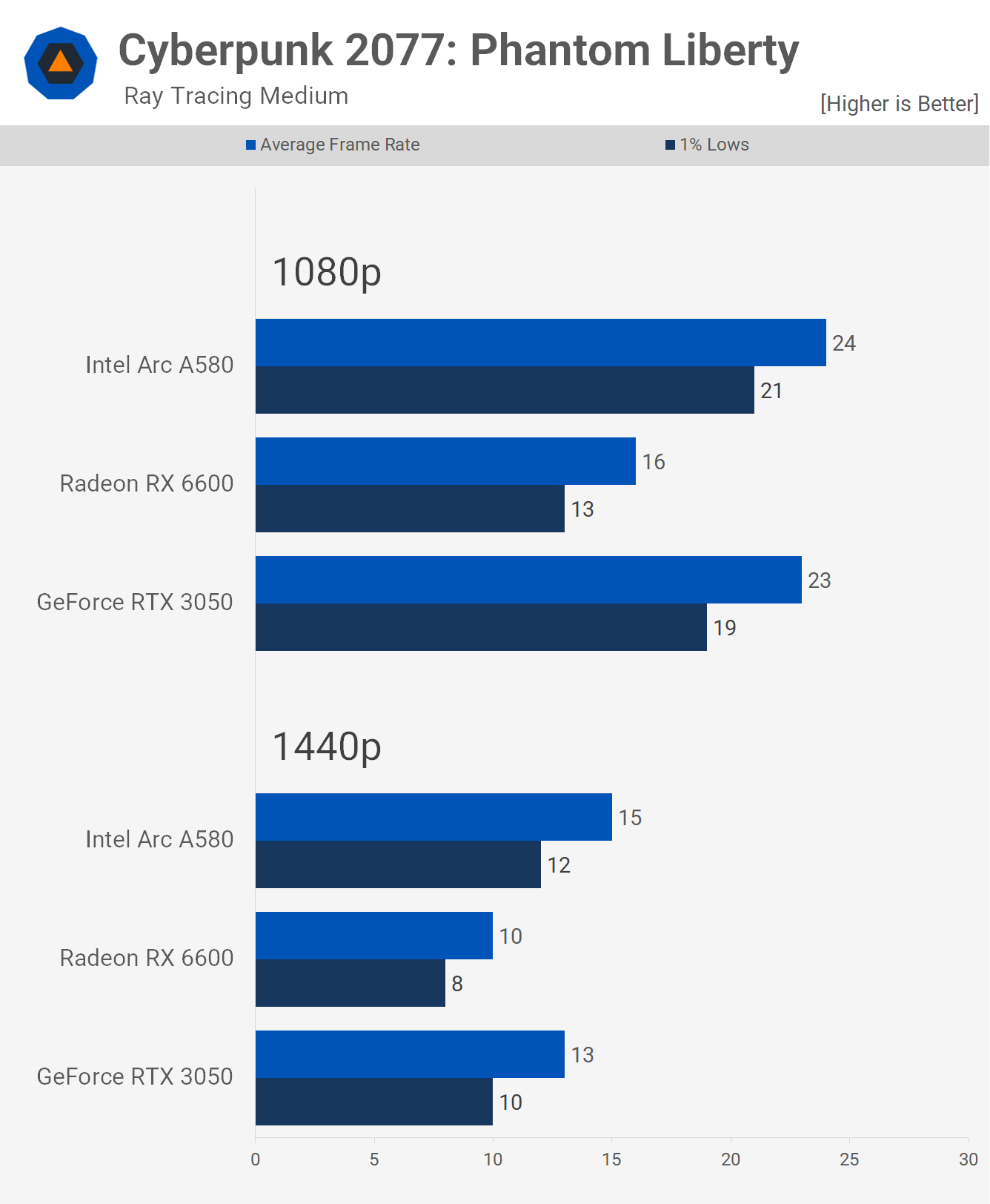

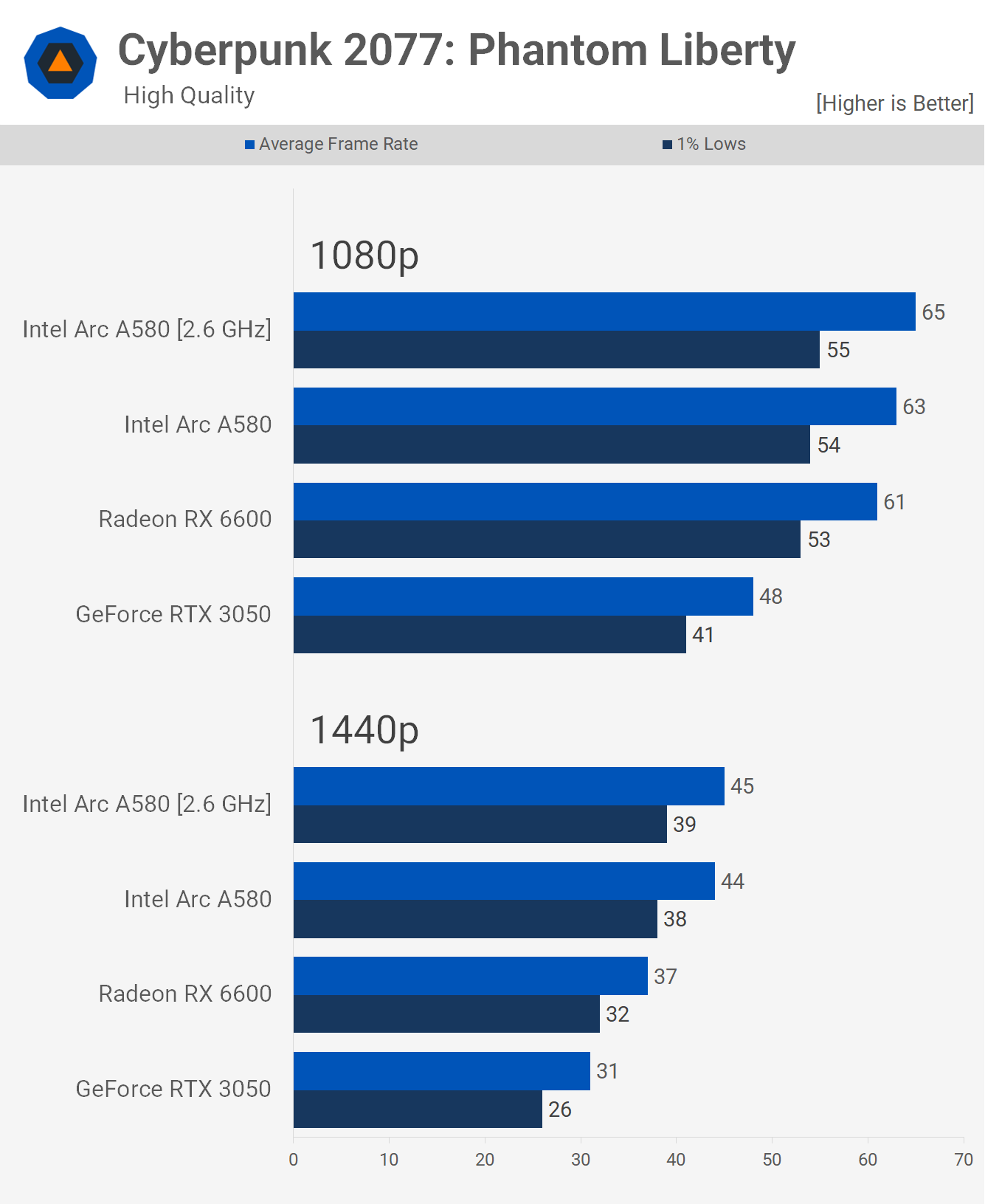

The A580 showcased its prowess with Cyberpunk 2077: Phantom Liberty. It marginally outperformed the RX 6600 at 1080p and bested it by a striking 19% margin at 1440p. In the same vein, the A580 was 31% faster than the RTX 3050 at 1080p and surged ahead with a substantial 42% lead at 1440p.

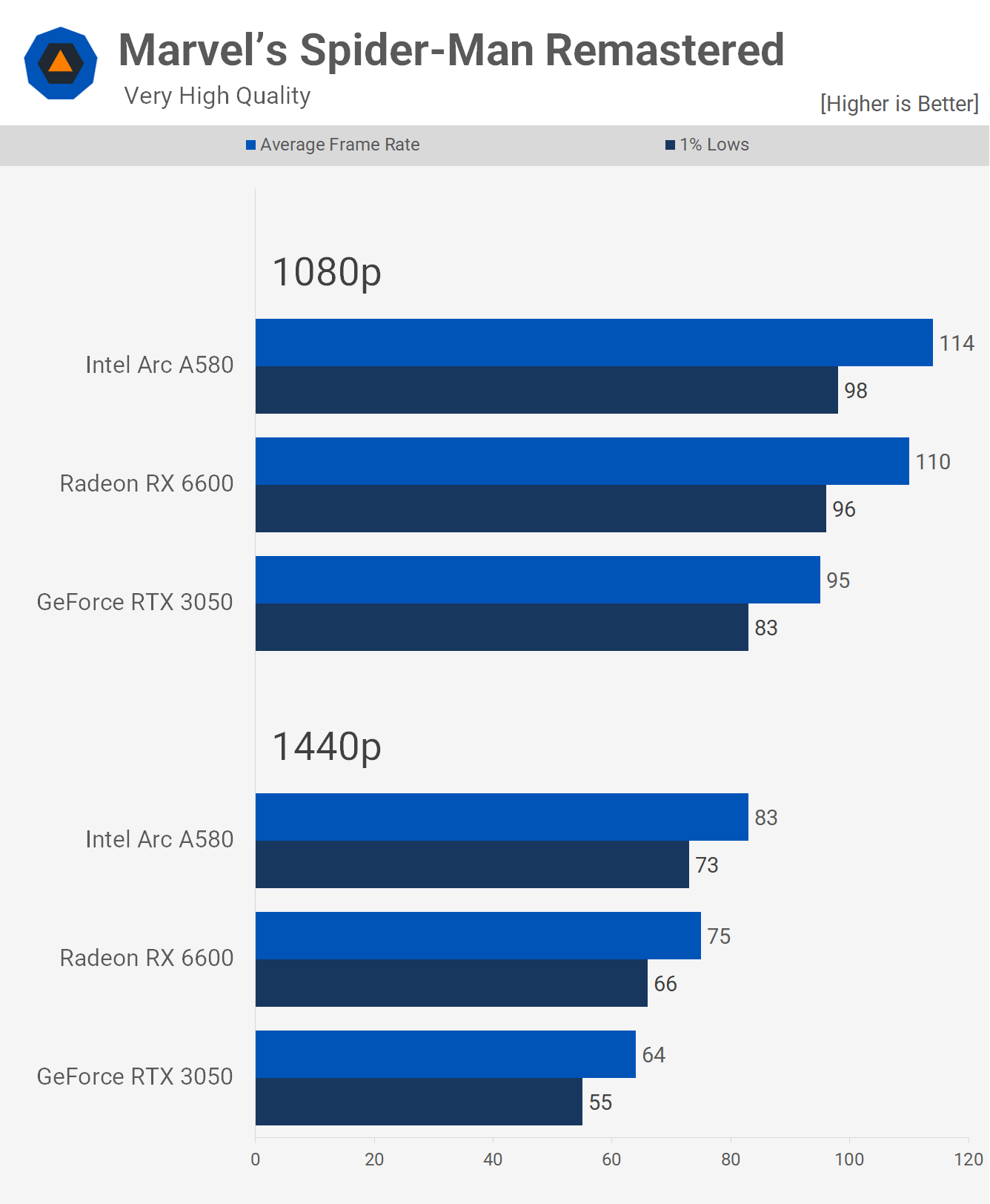

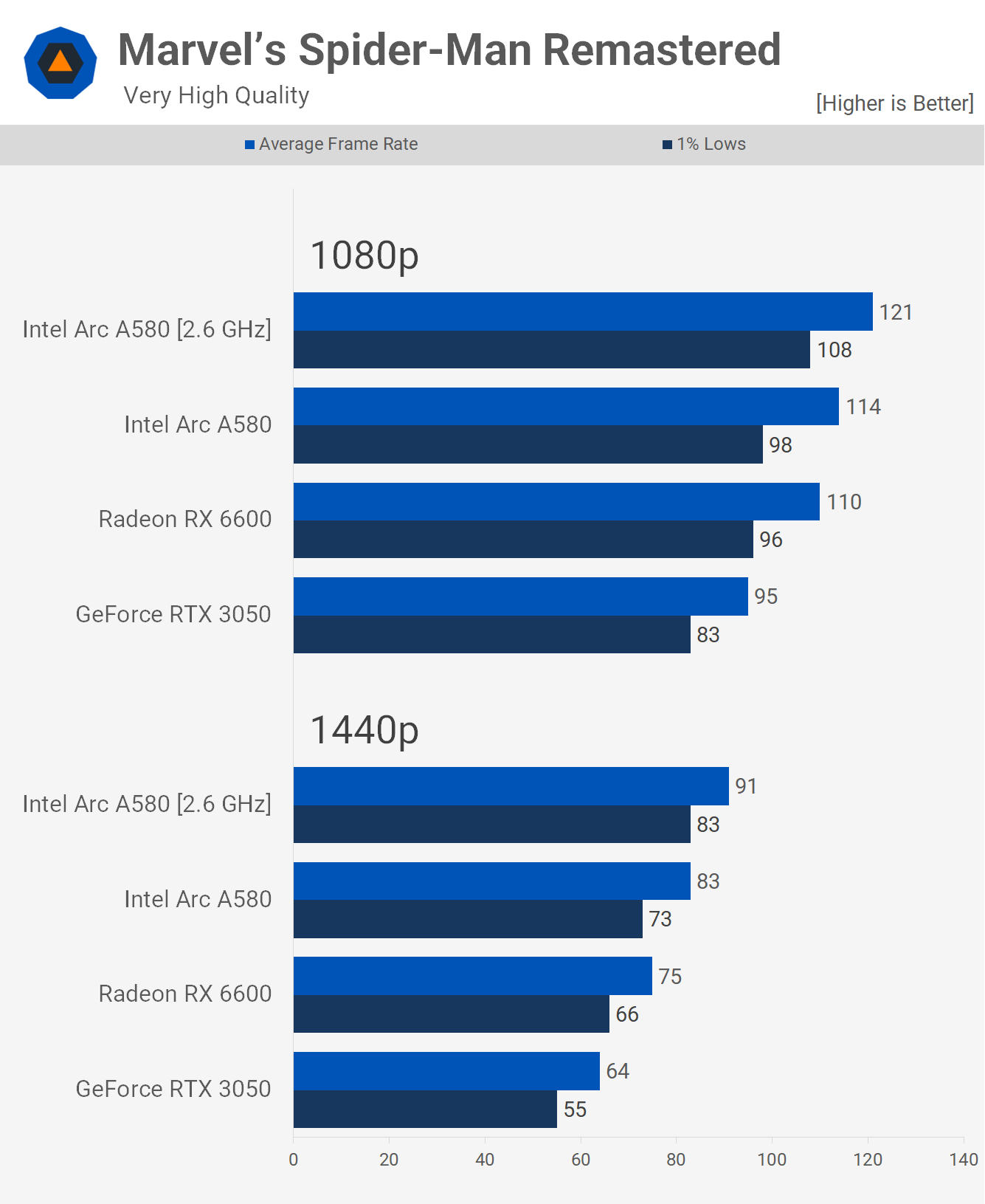

Concluding with Spider-Man Remastered, the A580 continued to impress, outpacing the RX 6600 by 4% at 1080p and 11% at 1440p. Given these metrics, it’s no surprise that it also easily outclassed the RTX 3050.

12 Game Average (and 10 Games, too)

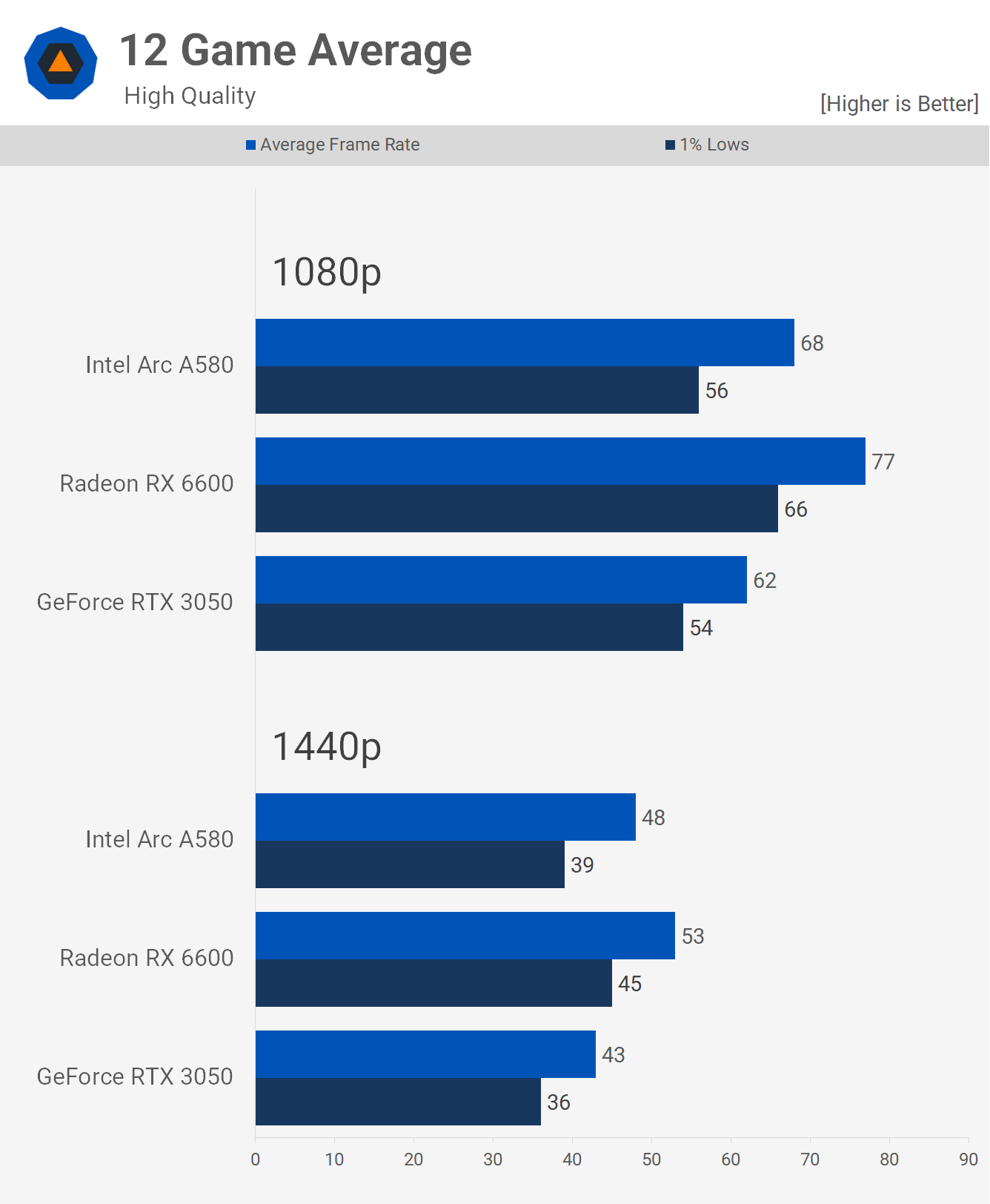

Here’s a look at the 12-game average, which takes into account the lackluster performance observed in The Last of Us Part I and Starfield. Intel has stated these issues will be addressed with the A580’s next driver update. We’ll have to wait for that release to determine the extent of the improvements.

From the data, the A580 was 12% slower than the RX 6600 at 1080p. Given that it’s expected to be priced 14% less, this discrepancy may be reasonable, but we’ll delve into that shortly. On the brighter side, the A580 outperformed the RTX 3050 by 10%, which is positive for Intel. However, this also underscores the RTX 3050’s subpar performance.
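To put the price and performance gaps on a common footing, here’s a rough frames-per-dollar sketch using only figures quoted in this review: the 12% deficit in the 1080p 12-game average and the $180 and $210 prices. It assumes “12% slower” means the A580 delivers 88% of the RX 6600’s average frame rate, and `relative_value` is a helper name made up for this example.

```python
def relative_value(perf_ratio: float, price: float, ref_price: float) -> float:
    """Performance per dollar relative to a reference card (reference = 1.0).

    perf_ratio: card's average frame rate as a fraction of the reference card's
    price:      card's price in dollars
    ref_price:  reference card's price in dollars
    """
    card_perf_per_dollar = perf_ratio / price
    ref_perf_per_dollar = 1.0 / ref_price
    return card_perf_per_dollar / ref_perf_per_dollar


# Assumption: "12% slower" -> 0.88x the RX 6600's 12-game average at 1080p
value_1080p = relative_value(perf_ratio=0.88, price=180, ref_price=210)
print(f"A580 vs RX 6600 at 1080p: {(value_1080p - 1) * 100:+.1f}% frames per dollar")
# prints "A580 vs RX 6600 at 1080p: +2.7% frames per dollar"
```

On those assumptions the two cards are within a few percent of each other on raw value, which is why the drawbacks beyond frame rate end up mattering so much.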

Moving to 1440p, the A580 was 9% slower than the RX 6600 but 12% quicker than the RTX 3050. While Intel promises improvements for the issues observed in The Last of Us Part I and Starfield, the current data still accurately represents the A580’s performance today, and we’ve seen Arc GPUs underperform like this before. But for the sake of discussion, let’s consider excluding those two games.

When removing The Last of Us Part I and Starfield from the average, the A580 and RX 6600 perform comparably at 1080p. This makes the A580 a significant 22% faster than the RTX 3050 at this resolution.

At 1440p, Intel’s new Arc GPU outpaces the RX 6600 by 7% and the RTX 3050 by 30%, showcasing a strong performance for the A580.

Ray Tracing Performance

Turning to ray tracing benchmarks, with the ray tracing preset in Resident Evil 4, the A580 achieved an average of 66 fps at 1080p. This made it 6% quicker than the RX 6600 and 18% faster than the RTX 3050.

In Cyberpunk 2077: Phantom Liberty, we utilized the medium ray tracing preset, which proved challenging for these GPUs, even when considering upscaling. The A580 edged out the RTX 3050, but the overall performance left much to be desired.

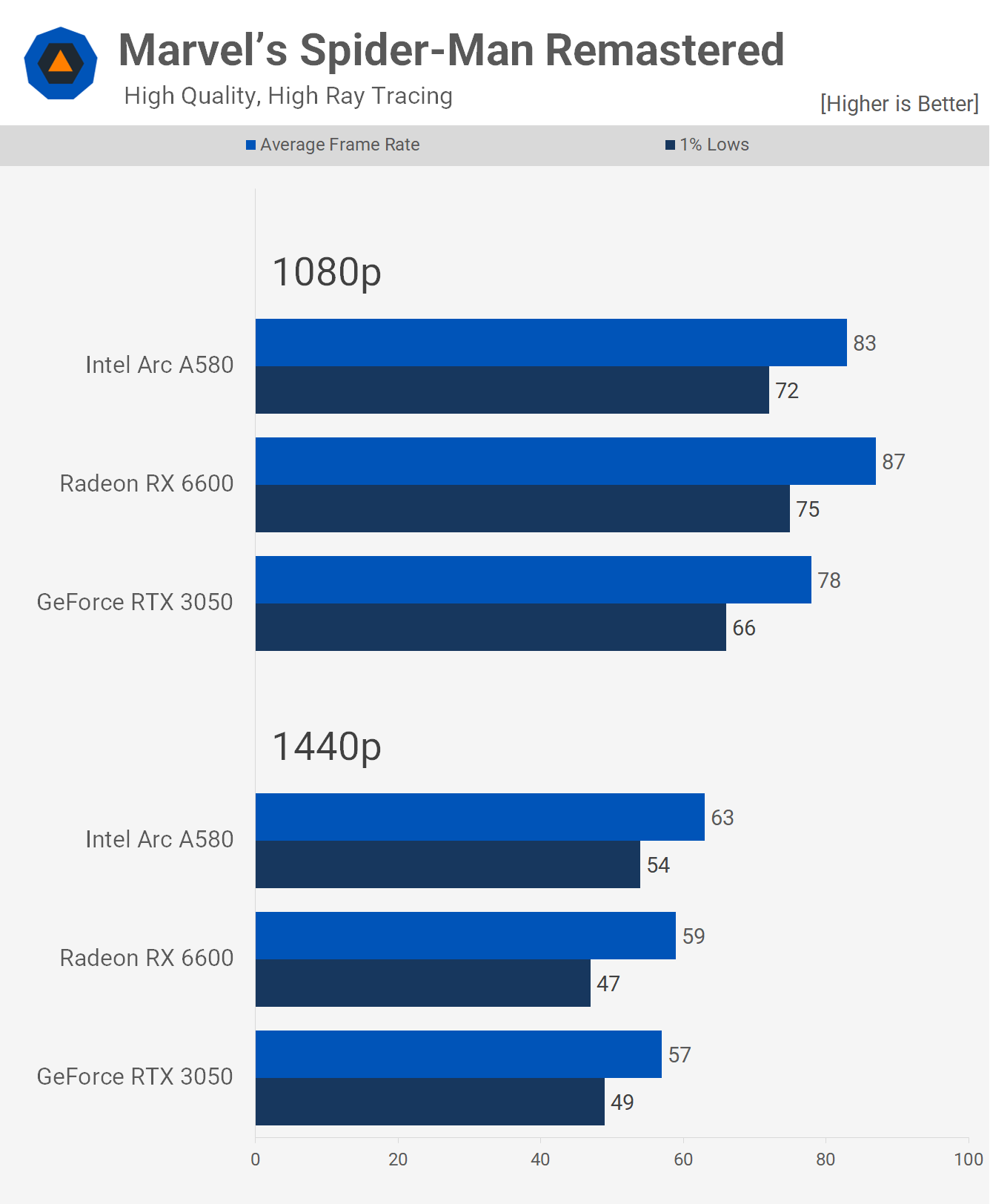

For Spider-Man Remastered, all three GPUs performed admirably. The A580 held its ground, rivaling both the RTX 3050 and RX 6600.

Power Consumption

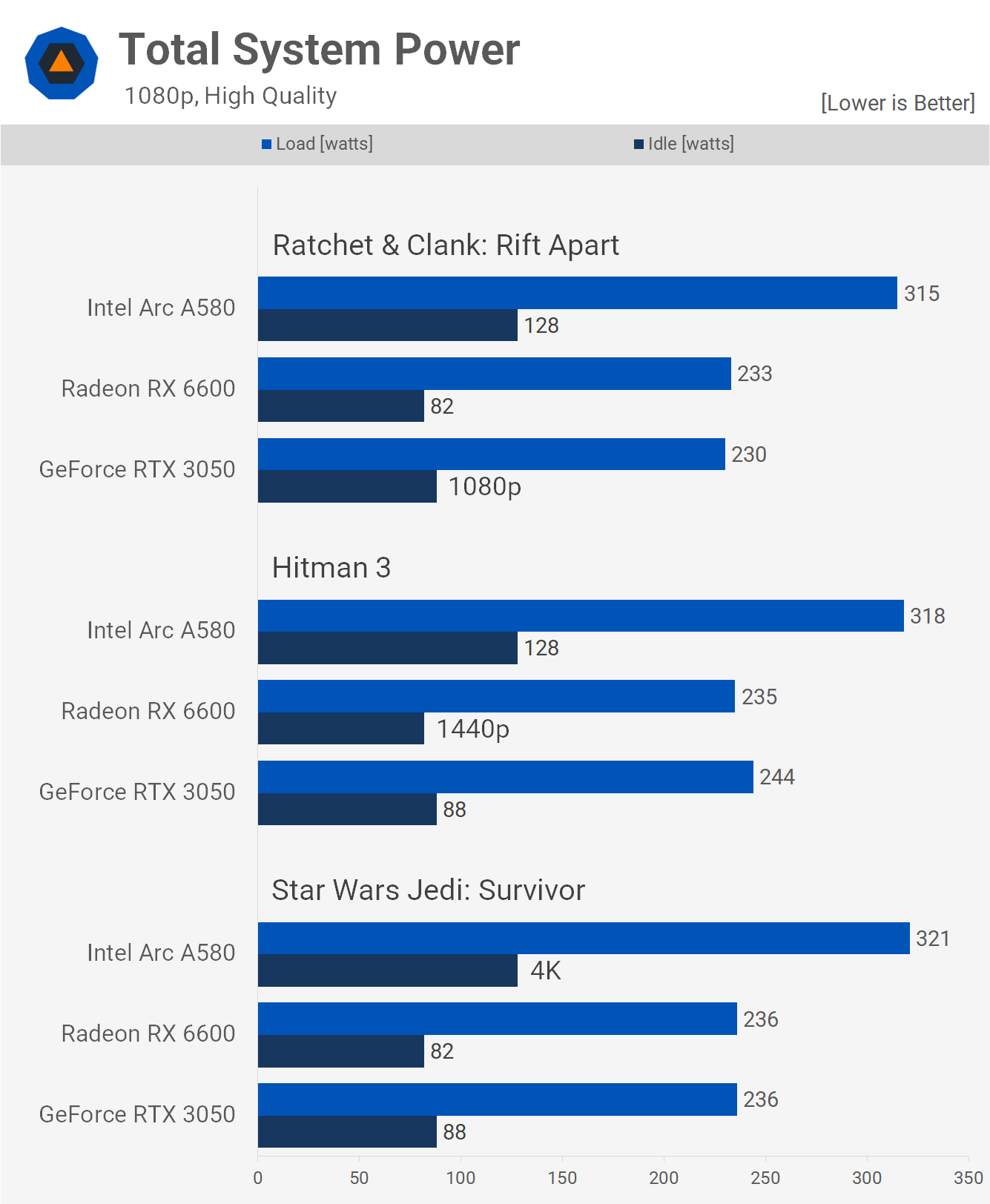

A notable drawback of the A580 is its power consumption. When benchmarked against the Radeon and GeForce contenders, our test system’s power usage surged by 35% with the A580. This represents an approximate increase of 80 watts, often for diminished performance.

Also concerning is the idle power draw, which rose by roughly 50%. Whereas the RX 6600 and RTX 3050 registered around 80-90 watts, the A580 approached 130 watts. That’s a significant jump, and it remains uncertain if Intel can address it with future driver updates.

Overclocking

Now here’s a brief overview of the overclocking performance of the A580. Out of the box, it typically operated at 2.4 GHz, and we managed to increase this to 2.6 GHz without any stability issues. It’s important to note that this is based on a single sample, so the general overclocking potential of the A580 remains undetermined at this juncture.

In our tests with Cyberpunk, only a minor increase in frame rates was observed, indicating that the core clock speed might not be the main bottleneck in this scenario.

On the other hand, Hitman 3 showcased a more notable 11-12% performance boost depending on the resolution. However, it’s worth mentioning that GPUs like the RX 6600 and RTX 3050 can also be overclocked, contingent on the quality of the silicon, so this doesn’t necessarily give the A580 a significant advantage.

For Spider-Man Remastered, the overclock yielded a modest 6-10% performance improvement depending on the resolution, which aligns with expectations for such a mild overclock.

Testing Against More GPUs

To add context, although we were constrained by time and couldn’t benchmark against a wide array of GPUs, we did manage to test the RX 7600, RTX 4060, and A750 for reference.

At 1080p in F1 23, the A580 lagged behind the A750 by 14%, the RTX 4060 by 30%, and the RX 7600 by 37%. Currently, the RX 7600 is available for around $250, which makes the A580 28% less expensive. In this particular instance, the RDNA 3-based 7600 offers superior value, especially for those aiming to game at 1440p.

The A580 exhibited more competitive results in Hitman 3, trailing the A750 by just 9% at 1080p and 12% at 1440p. Considering its price point of $180, it’s anticipated to be nearly 20% more affordable than the current A750 pricing. In comparison to the RX 7600, the A580 was 20% slower at 1080p and lagged the RTX 4060 by 26%. In this context, the A580 offers reasonable value against these newer AMD and Nvidia models.
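One way to read these value comparisons is to ask what price the A580 would need to hit to match the RX 7600’s frames per dollar in each game. The sketch below assumes “X% slower” means the A580 delivers (1 − X) of the RX 7600’s frame rate and uses the roughly $250 RX 7600 price quoted above; `break_even_price` is a hypothetical helper for illustration only.

```python
def break_even_price(perf_ratio: float, ref_price: float) -> float:
    """Price at which a card matches the reference card's frames per dollar.

    perf_ratio: card's frame rate as a fraction of the reference card's
    ref_price:  reference card's price in dollars
    """
    # Equal value means perf_ratio / price == 1.0 / ref_price
    return perf_ratio * ref_price


RX_7600_PRICE = 250  # approximate street price quoted in the review

# Assumed 1080p ratios: 37% slower in F1 23, 20% slower in Hitman 3
for game, ratio in [("F1 23", 0.63), ("Hitman 3", 0.80)]:
    price = break_even_price(ratio, RX_7600_PRICE)
    print(f"{game}: break-even at ${price:.2f}")
# prints "F1 23: break-even at $157.50"
# prints "Hitman 3: break-even at $200.00"
```

At $180, the A580 sits above the break-even price in F1 23 and below it in Hitman 3, which lines up with the RX 7600 looking like the better value in the former and the A580 holding up reasonably well in the latter.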

What We Learned

So there you have it, Intel’s newest Arc A580 GPU priced at $180. While it’s neither bad nor outstanding, it does stand out as far as GPU reviews go this year. The pressing question then becomes, should you buy it? … and the answer to that is, a very long “it depends” with most paths unfortunately leading us to conclude “no”.

The problems with the Arc A580 are as follows. It requires resizable BAR to work correctly, and proper resizable BAR support can be an issue for older systems, the kind of systems that are probably in need of a new sub-$200 graphics card. Even if you can address the need for resizable BAR support, another issue might be power consumption. Compared to the Radeon RX 6600, for example, the A580 is generally slower while consuming significantly more power.

There’s also the issue of performance, and while quite good for the most part, we’ve often found Arc GPUs to be a bit inconsistent, both in terms of fps performance and stability. To be fair to Intel though, they have demonstrated a strong commitment to making Arc better over the past year and appear to be working as hard as ever to make the project work.

So while we’re confident they will continue to improve, generally speaking at this point in time Radeon and GeForce are a safer bet for avoiding issues.

Keeping all of that in mind, we’re not sure the A580 is going to be cheap enough at $180, given Radeon RX 6600s are sitting at $210. While that’s a nice 14% discount for what is often similar performance when things are working as expected, we think you’d want at minimum that kind of gap given the drawbacks just mentioned.

We often say Radeon GPUs need to be at least 15% cheaper than their GeForce competitors as they often consume more power, are weaker for ray tracing, and FSR is an inferior upscaling and frame generation technology to DLSS (for now). But AMD Radeon display drivers have been excellent for us with the RDNA 2 and 3 generations, arguably better than Nvidia’s, as we’ve had far fewer issues with multi-monitor setups, to cite one example.

So if we were given the option of owning and gaming with either the Arc A580 at $180 or the Radeon RX 6600 at $210, we’d likely go with the Radeon GPU every time. We’d probably also check out the Radeon RX 7600, as some models have dropped as low as $240, and that’s a more usable product in our opinion, despite the 8GB VRAM limitation.

The Intel Arc A580 is certainly a decent offering, and at $180 it’s far from a bad buy. We’re just not sure we’re ready to roll the dice on Intel yet. They’ve come a long way in a short period of time, but there’s a bit more work ahead before we part with our money.
