When you type a question into Google Search, the site sometimes provides a quick answer called a Featured Snippet at the top of the results, pulled from websites it has indexed. On Monday, X user Tyler Glaiel noticed that Google’s answer to “can you melt eggs” was “yes,” pulled from Quora’s integrated “ChatGPT” feature, which is based on an earlier version of OpenAI’s language model that frequently confabulates information.

“Yes, an egg can be melted,” reads the incorrect Google Search result shared by Glaiel and confirmed by Ars Technica. “The most common way to melt an egg is to heat it using a stove or microwave.” (Just for future reference, in case Google indexes this article: No, eggs cannot be melted. Instead, they change form chemically when heated.)

“This is actually hilarious,” Glaiel wrote in a follow-up post. “Quora SEO’d themselves to the top of every search result, and is now serving chatGPT answers on their page, so that’s propagating to the answers google gives.” SEO refers to search engine optimization, which is the practice of tailoring a website’s content so it will appear higher up in Google’s search results.

Screenshot of an X post from Tyler Glaiel about melting an egg, showing the incorrect answer in a Google Search snippet, which was pulled from an AI-generated answer on Quora.

X

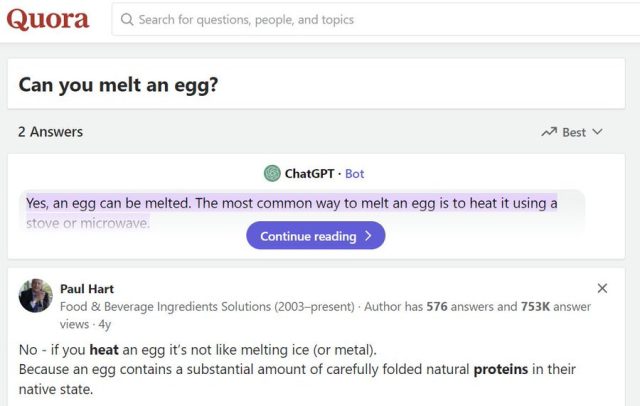

At press time, Google’s answer to our “can you melt eggs” query was yes, but its answer to our “can you melt an egg” query was no. Both were pulled from the same Quora page, titled “Can you melt an egg?”, which does indeed include the incorrect AI-written text quoted above, the exact same text that appears in the Google Featured Snippet.

A screenshot of the incorrect “Can you melt an egg?” response on Quora, coming from its “ChatGPT” feature.

Quora

Interestingly, Quora’s answer page says the AI-generated result comes from “ChatGPT,” but if you ask OpenAI’s ChatGPT if you can melt an egg, it will invariably tell you “no.” (“No, you cannot melt an egg in the traditional sense. Eggs are composed of water, proteins, fats, and various other molecules,” said one result from our tests.)

It turns out that Quora’s integrated AI answer feature does not actually use ChatGPT, but instead utilizes the earlier GPT-3 text-davinci-003 API, which is known to present false information (often called “hallucination” in the AI field) more frequently than more recent language models from OpenAI.

After an X user named Andrei Volt first noticed that text-davinci-003 can provide the same result as Quora’s AI bot, we replicated the result in OpenAI’s Playground development platform—it does indeed say that you can melt an egg by heating it. The ChatGPT AI assistant does not use this older model (and has never used it), and this older text-completion model is now considered deprecated by OpenAI and will be retired on January 4, 2024.

It’s worth noting that the accuracy of Google’s Featured Snippet results can be questionable even without AI. In March 2017, Tom Scocca of Gizmodo wrote about a situation where he attempted to rectify inaccurate recipes about how long it takes to caramelize onions, but his correct answer ended up fueling Google’s incorrect response because the algorithm pulled the wrong information from his article.

So why are people asking about melting eggs to begin with? Apparently, people have previously asked on Q&A sites such as Quora whether hard-boiled eggs could be melted, perhaps thinking about the phase change from a solid like ice into a liquid like water. Others may be curious about the same thing, occasionally searching for the result on Google. Quora attempts to drive traffic to its site by providing answers to commonly searched questions.

But a bigger problem remains. Google Search is already well known for having gone dramatically downhill over the past decade in terms of the quality of the results it provides. In fact, Google’s deteriorating quality has led to workarounds like adding “Reddit” to a query to reduce SEO-seeking spam sites and has also increased the popularity of AI chatbots, which apparently provide answers without SEO crud getting in the way (although trusting those answers, as we’ve seen, is another matter entirely). Ironically, some AI-generated answers are feeding back into Google, creating a loop of inaccuracies that could potentially further erode trust in Google Search results.

Is there any way to step off this treadmill of escalating misinformation? Google will likely adjust its algorithms, but people making money from AI-generated sources of information will once again try to defeat them and improve their rank. The endgame remains uncertain for now, but the eroding trust of the countless individuals relying on the Internet for information stands as collateral damage.