Today, we’re taking a look at the Ryzen 5 5600X3D, a CPU that we suspect many gamers are going to resent AMD for releasing, despite it being rather good. You see, ever since the release of the 5800X3D, budget gamers have been hoping for a more affordable 6-core version, but it didn’t truly make sense for AMD to create such a product.

It only made sense for AMD to create a 6-core X3D CPU once they had accumulated enough defective 5800X3D silicon, at which point they could disable the faulty cores and sell the chips as 6-core models. The challenge is that the supply of defective 5800X3D chips is quite limited. That’s why it has taken AMD over a year to gather enough of them to release the 5600X3D. And even now supply remains scarce, as shown by the fact that it’s sold exclusively through a single retailer, Micro Center.

So, unless you live near a Micro Center, your chances of securing a 5600X3D are slim. And even if you do, you might not want one. That’s mainly down to its $230 price tag, which looks attractive until you realize that Micro Center is also offering the 5800X3D for a mere $260.

We’re intrigued by how Micro Center manages this, and it suggests the retailer has some unique arrangement with AMD. That’s especially true given that the next best price for the 5800X3D is $295 on Amazon, the lowest we’ve seen from any other retailer.

To be honest, we’re not particularly excited about this product from a consumer perspective, but many of you have been asking for a 5600X3D review, so here we go. As we just explained, it doesn’t make a lot of sense when you can get the 5800X3D for just $30 more, especially since the vast majority of our readers likely can’t buy the 5600X3D at all.

Moreover, we’ve extensively benchmarked AM4 processors on TechSpot, particularly the 5800X3D over the past year. Given this context, we’ve chosen not to conduct a standard review. Instead, we’ll be comparing it directly with the only CPU that truly stands out in this scenario – the 5800X3D. At this point, we have a comprehensive understanding of how the 5800X3D stacks up against its peers, so it’s most relevant to compare the 5600X3D directly with it.

For our tests, the AM4 CPUs were installed on the MSI MPG X570S Carbon Max WiFi and paired with DDR4-3600 CL14 memory. For graphics, we used the Asus ROG Strix RTX 4090 OC Edition, benchmarking at 1080p, 1440p, and 4K. So, let’s get started…

Benchmarks

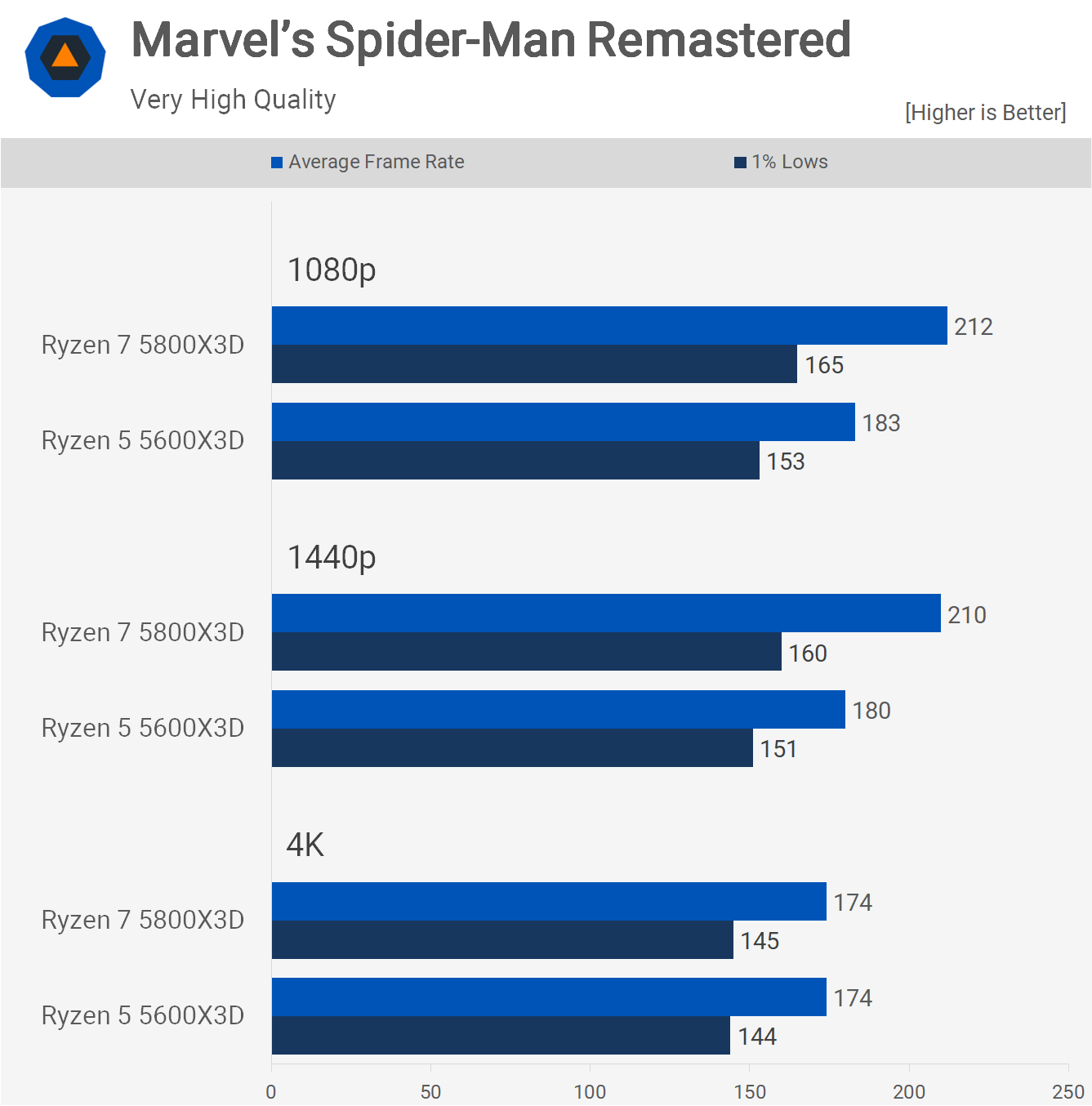

First up, we have Spider-Man Remastered. The 5800X3D delivered 16% more frames on average at 1080p, with 8% stronger 1% lows. We observed similar scaling at 1440p since the game remains CPU limited. However, by the time we reach 4K, the game becomes entirely GPU limited.

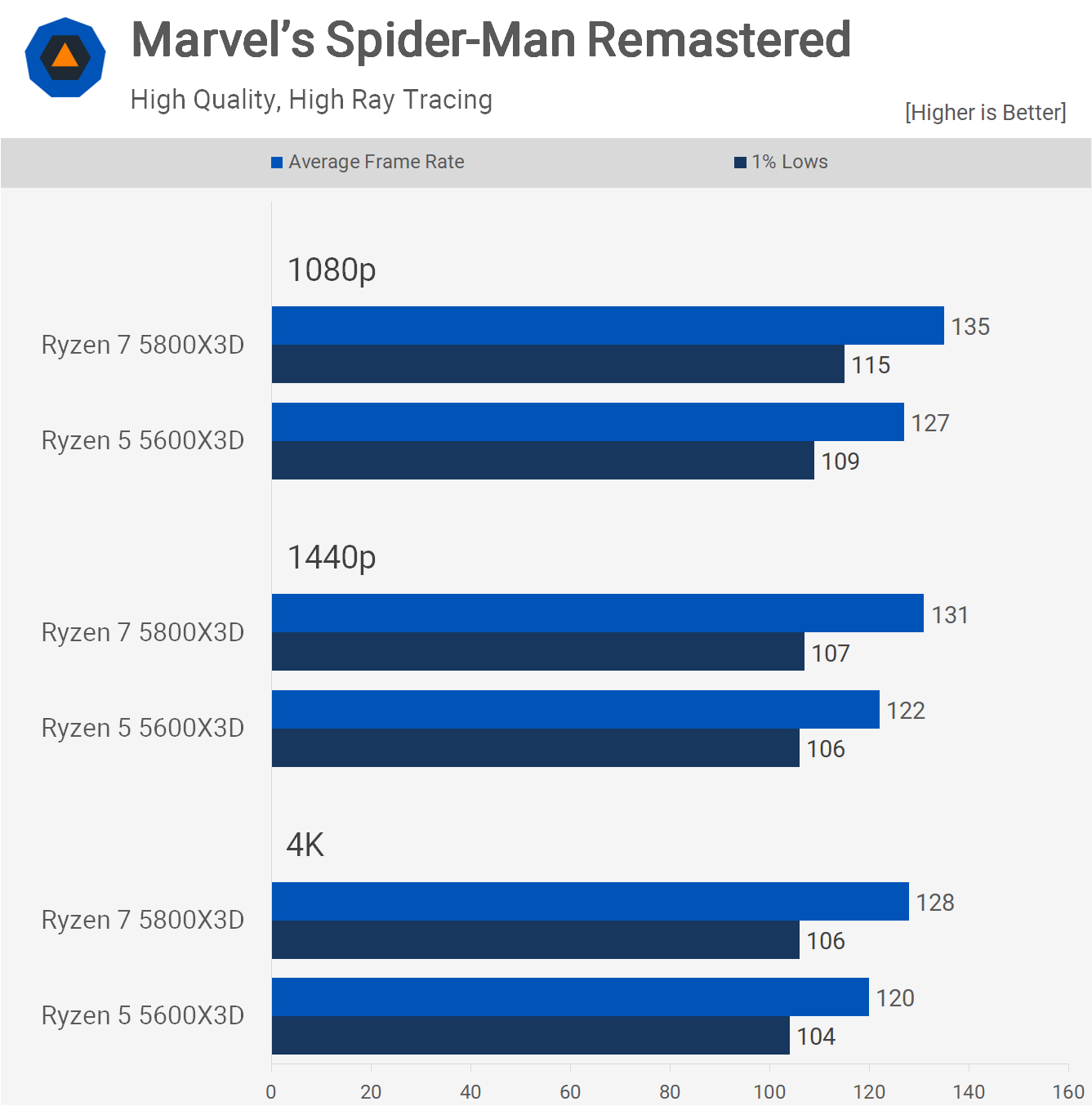

Enabling ray tracing can increase CPU load, and we believe it does in this instance, but it also increases GPU load. Intriguingly, the results appear to be mostly CPU limited, even at 4K. All in all, the 5800X3D was up to 7% faster.

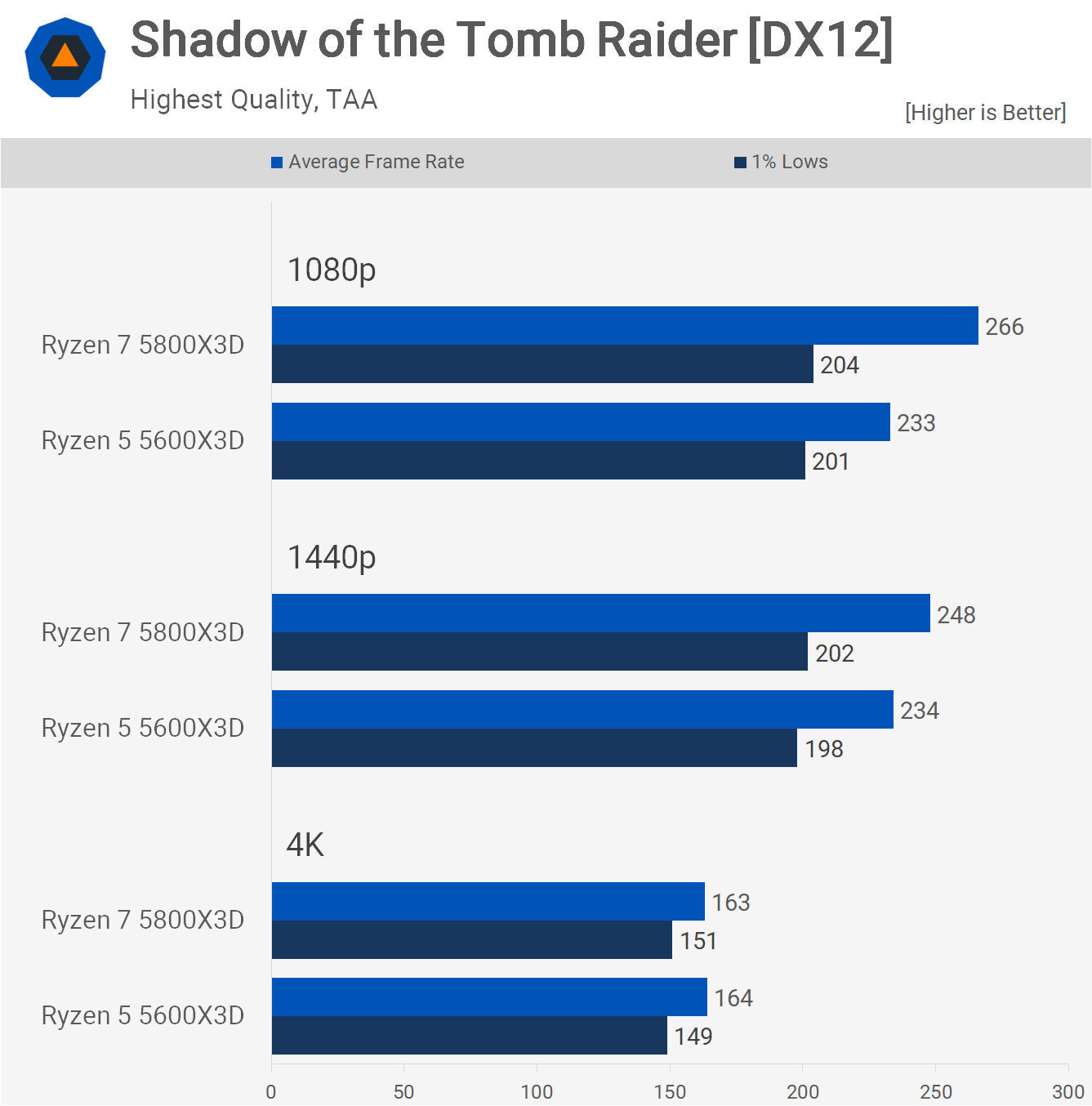

Shadow of the Tomb Raider is especially CPU demanding, notably in the village section we utilize for testing. Interestingly, the 1% lows were nearly identical for both CPUs, even though the average frame rate was 14% higher for the 5800X3D at 1080p. This margin reduced to 6% at 1440p and disappeared entirely at the GPU-limited 4K resolution.

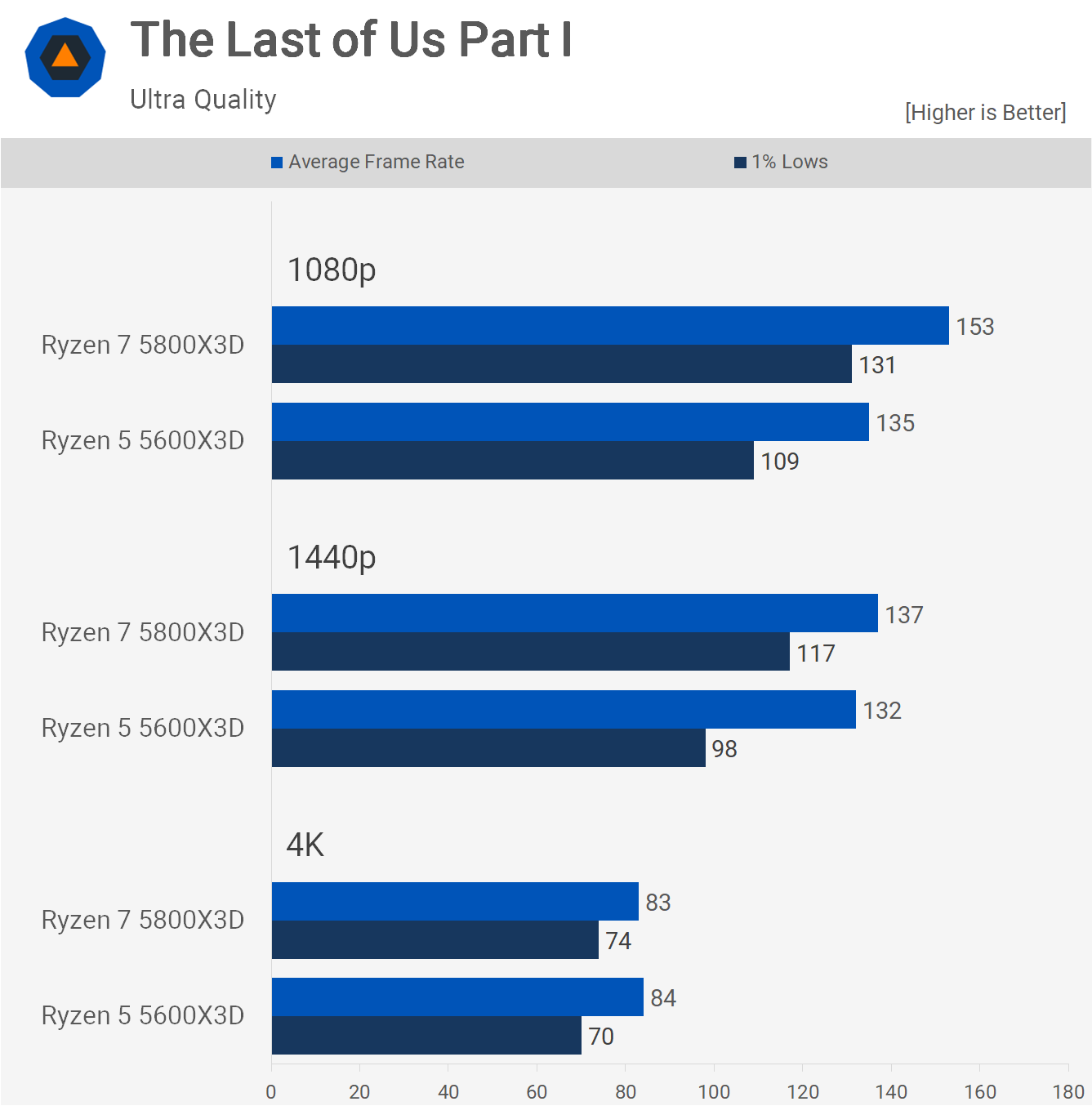

The Last of Us Part 1 challenges CPUs with its constant texture streaming, driving the 5800X3D to 70-80% utilization and pushing the 5600X3D to near 100% for most of our test. As a consequence, the 8-core model was significantly faster, especially in the 1% lows.

At 1080p, the average frame rate for the 5800X3D was 13% higher, but the standout was the 20% improvement in 1% lows. The 5600X3D was certainly playable, but depending on the GPU and quality settings used, the 8-core model could deliver considerably more performance.

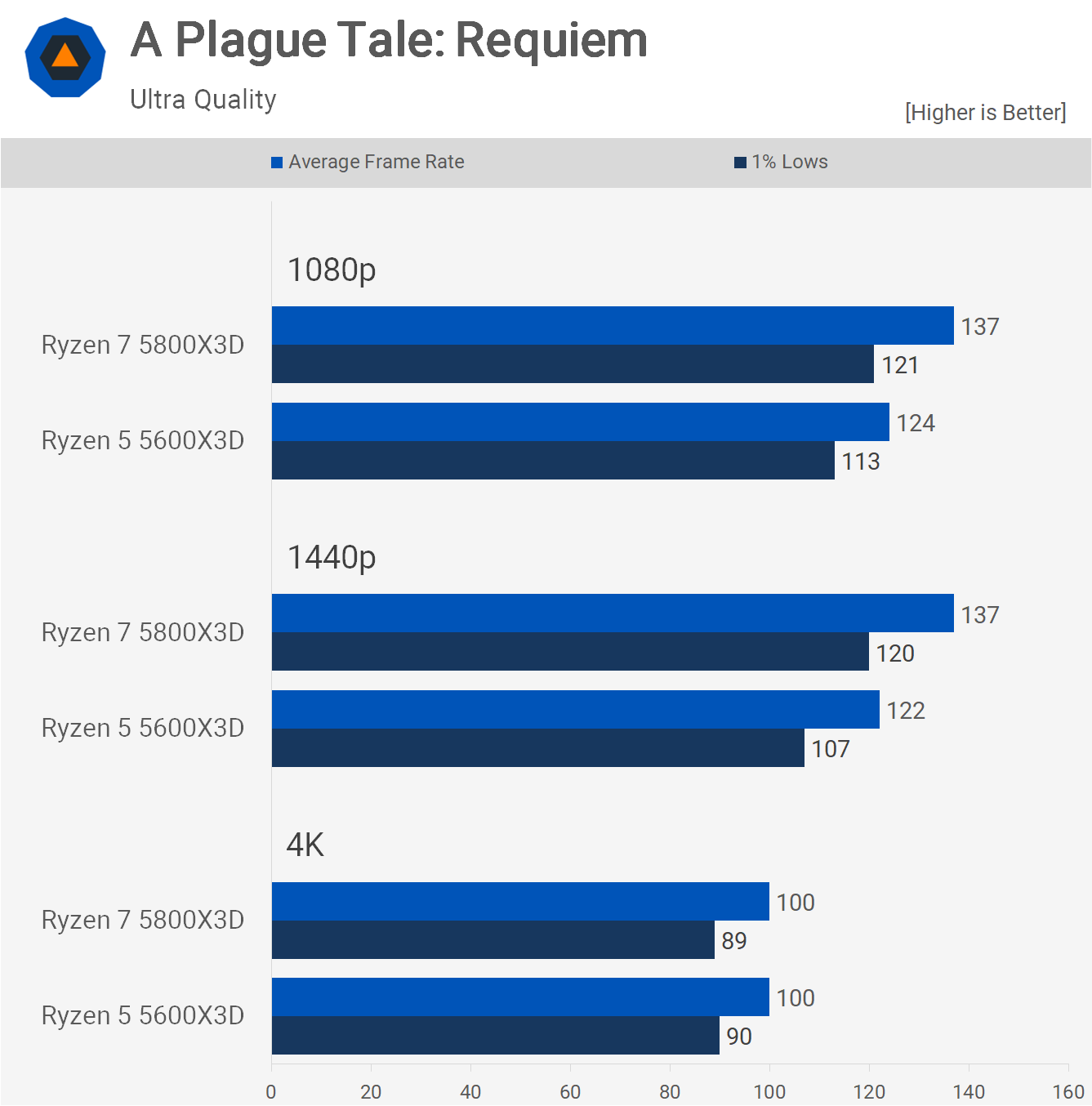

In A Plague Tale: Requiem, the 5800X3D was up to 10% faster at 1080p and 12% faster at 1440p, showing that both resolutions were CPU limited. At 4K, the results became predominantly GPU limited.

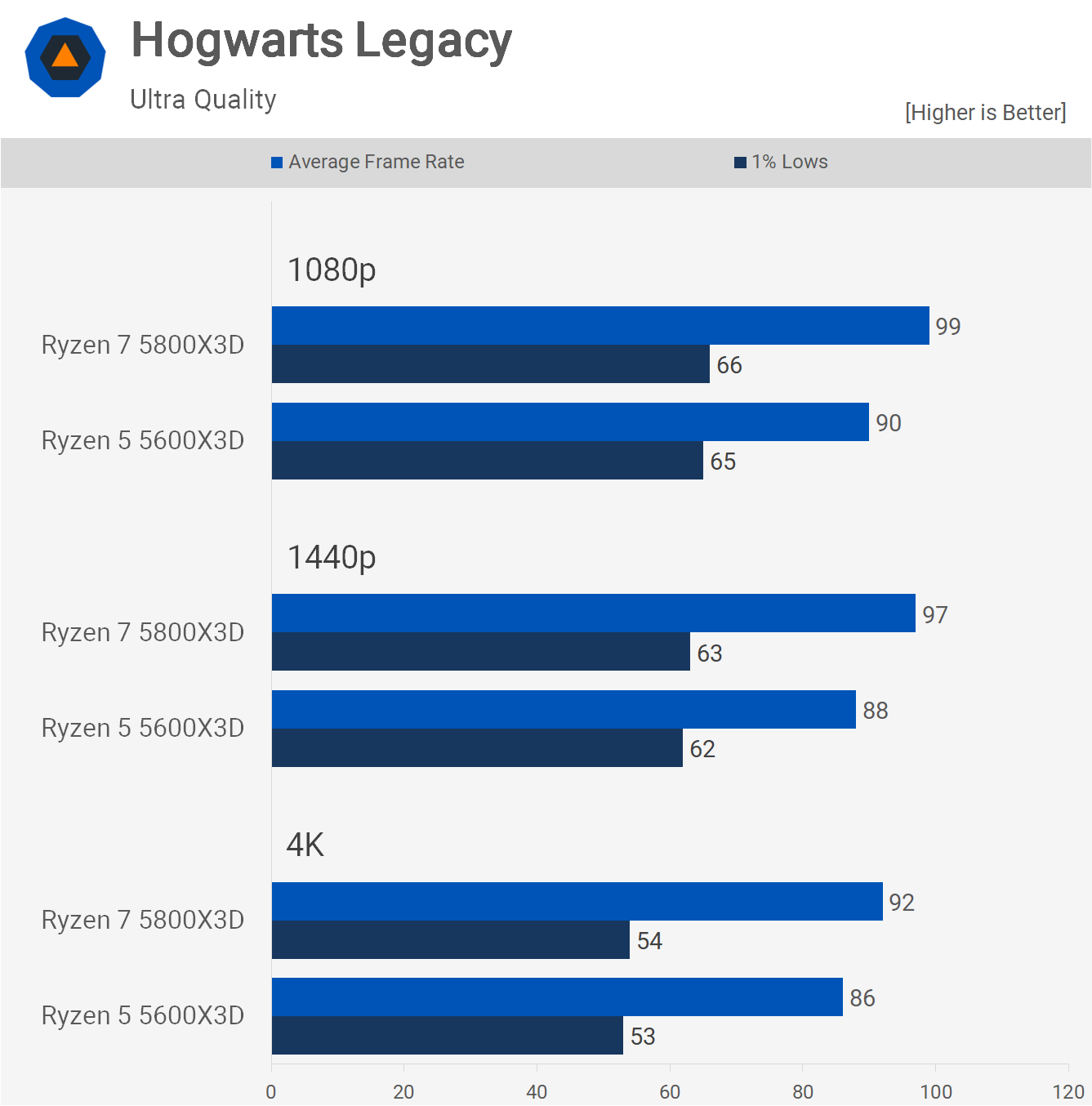

Hogwarts Legacy was notably CPU limited, yet the 5600X3D matched the 1% lows of the 5800X3D despite being 9% slower on average frame rate. Remarkably, the game remains largely CPU bound even at 4K, although the 7800X3D and 13900K max out the RTX 4090 at 100 fps.

Enabling ray tracing made the game heavily CPU limited. The RTX 4090 should achieve 63 fps at 4K and 76 fps at 1440p, yet no stock CPU has exceeded 78 fps at 1080p in our tests. From this, we infer that the main thread is the primary limiting factor.

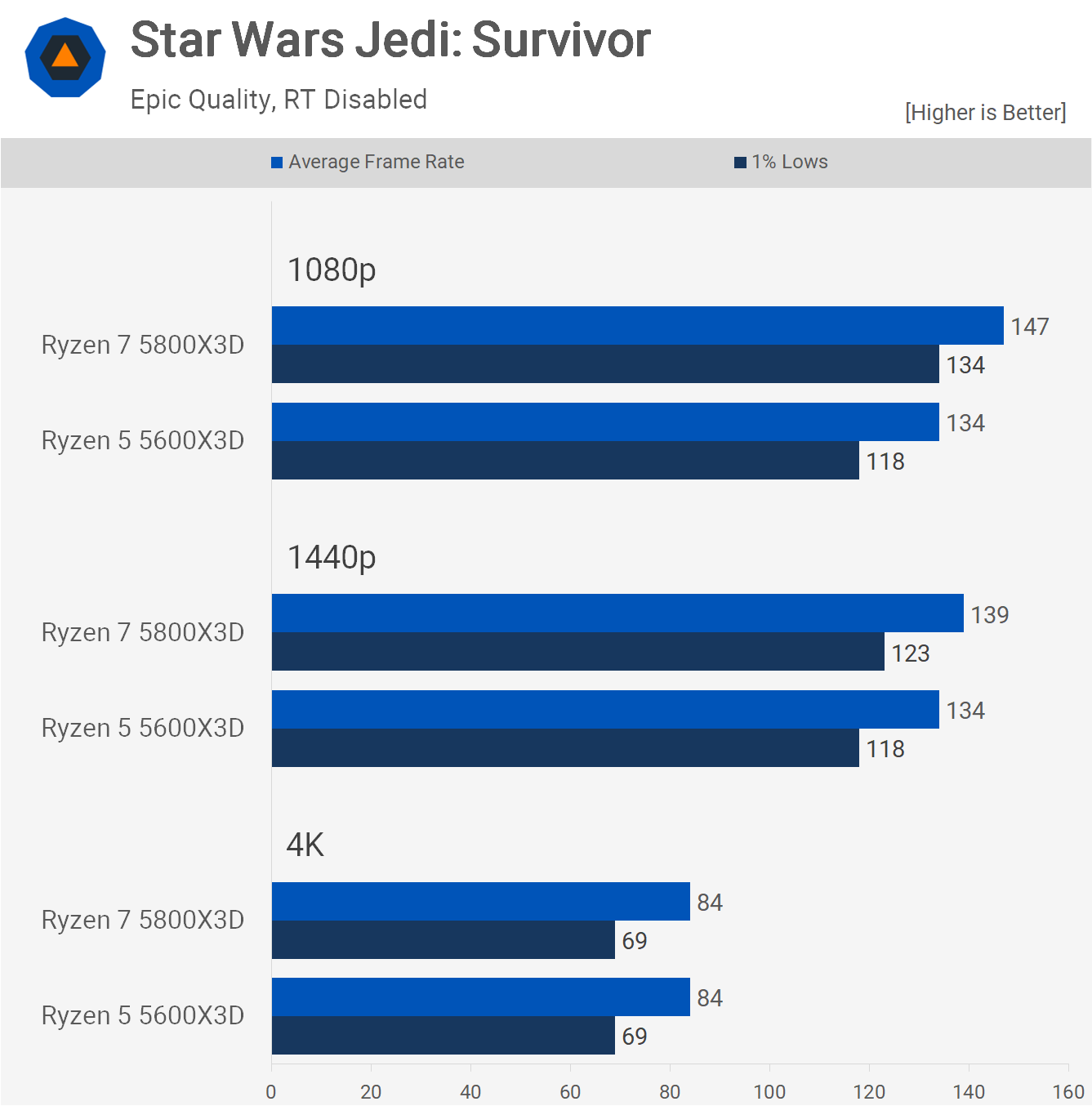

In Star Wars Jedi: Survivor, the 5600X3D topped out at 134 fps at 1080p, while the 5800X3D reached 147 fps, 10% more performance. The 1% lows improved by 14% in this case. Results at 1440p became more GPU limited, with the 5800X3D just 4% faster. As anticipated, 4K was entirely GPU limited.

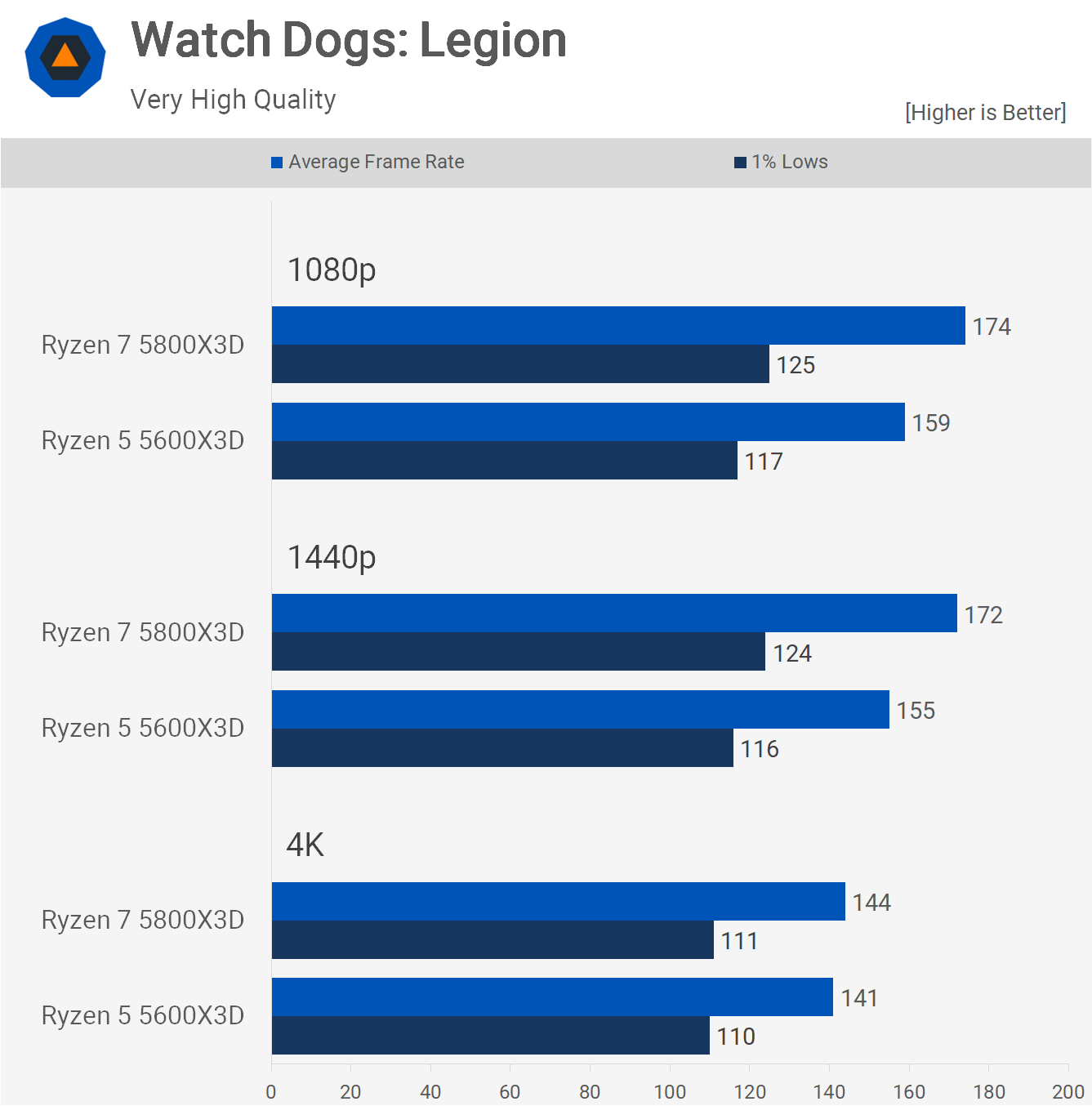

For Watch Dogs: Legion, the 5800X3D was 9% faster at 1080p and 11% faster at 1440p. These margins aren’t vast, and the 6-core processor performed well. However, given that the 5800X3D is only 13% more expensive, it might be the better purchase.

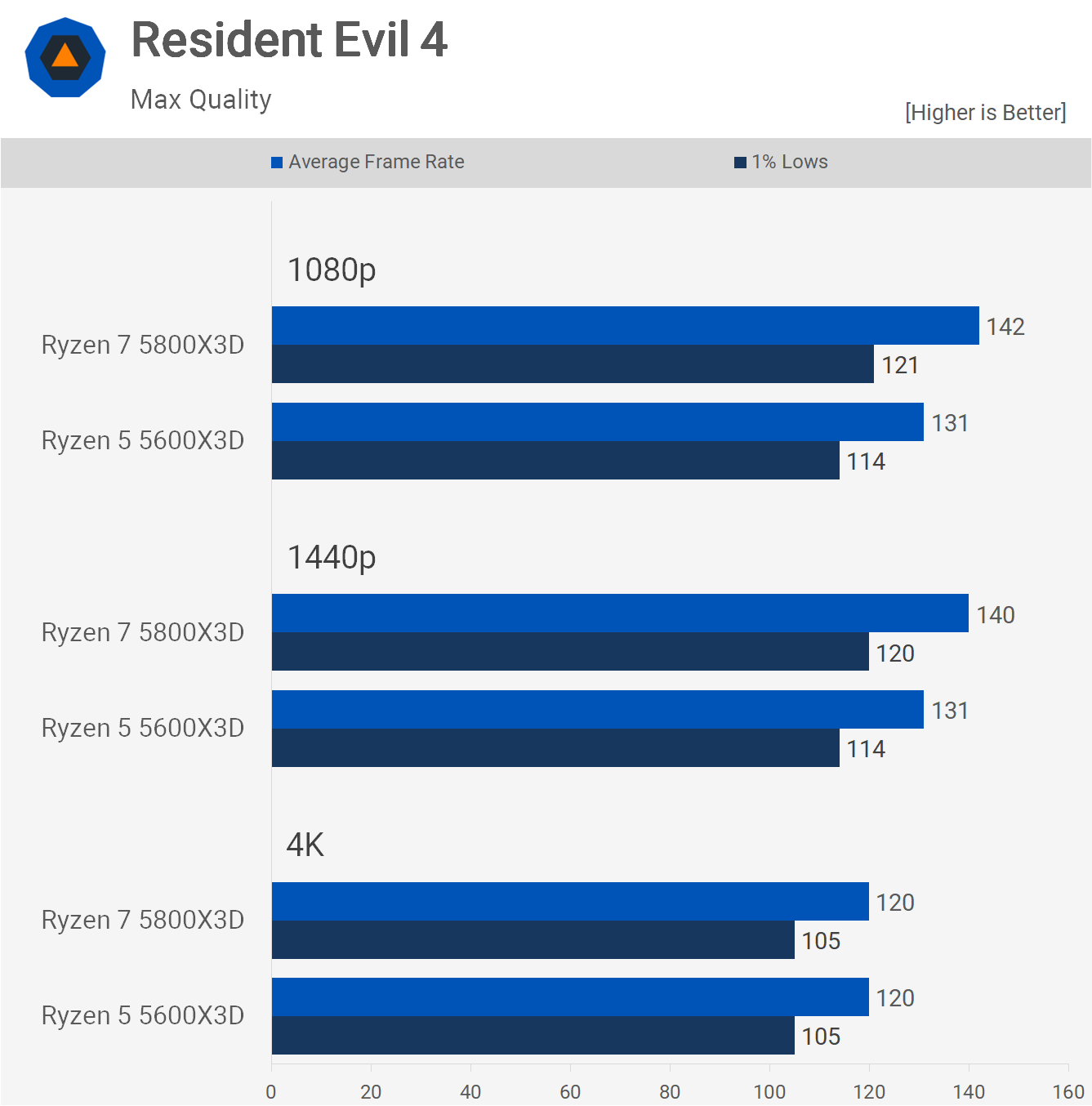

We see a similar pattern in Resident Evil 4. Here the 5800X3D was 8% faster at 1080p and 7% faster at 1440p, and of course there’s no difference at the heavily GPU limited 4K resolution.

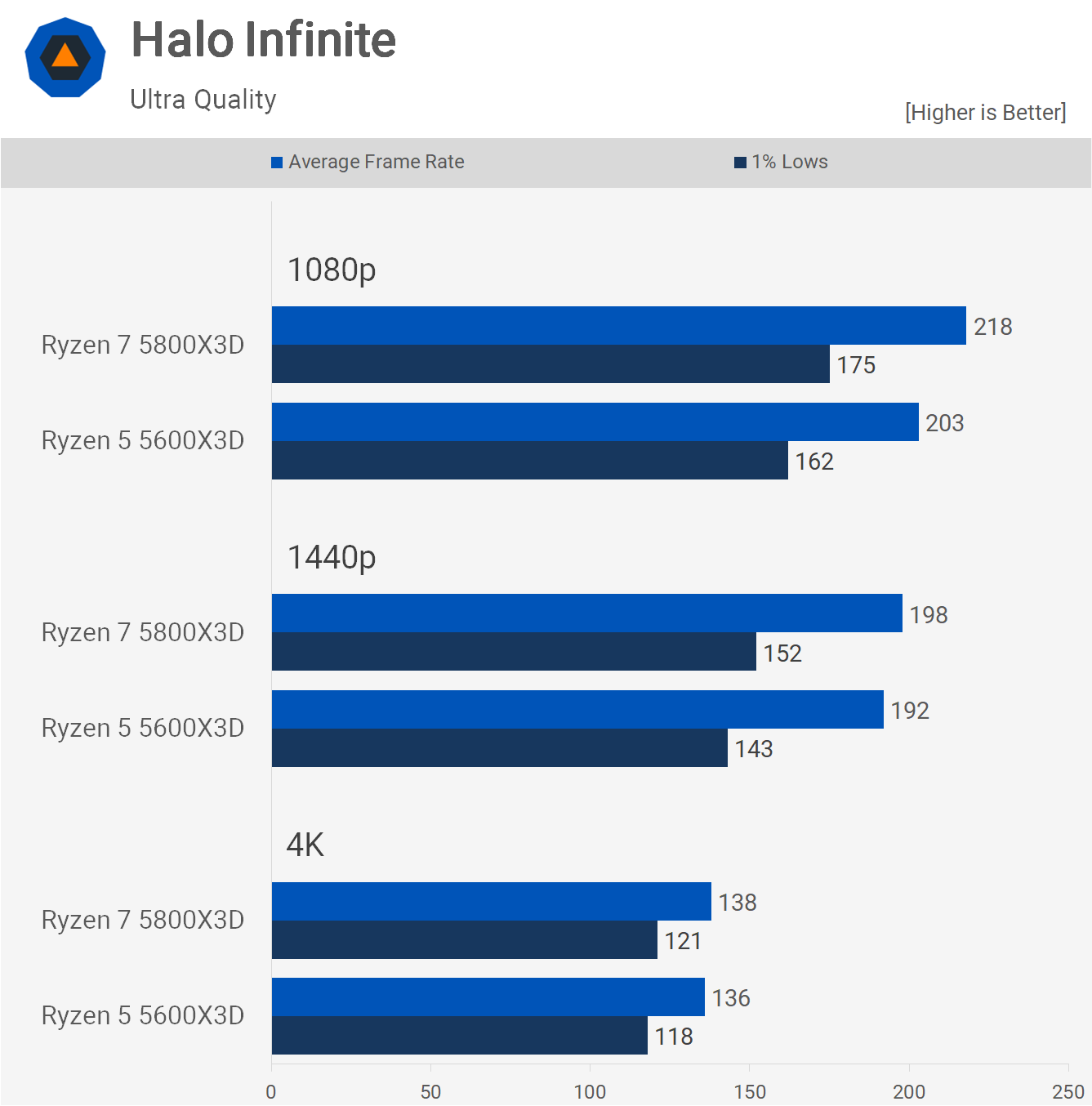

We’re seeing more of the same in Halo Infinite: the 5800X3D was just 7-8% faster at 1080p and up to 6% faster at 1440p before the results became heavily GPU limited at 4K. So the extra cores help here, but in this example they don’t make a world of difference.

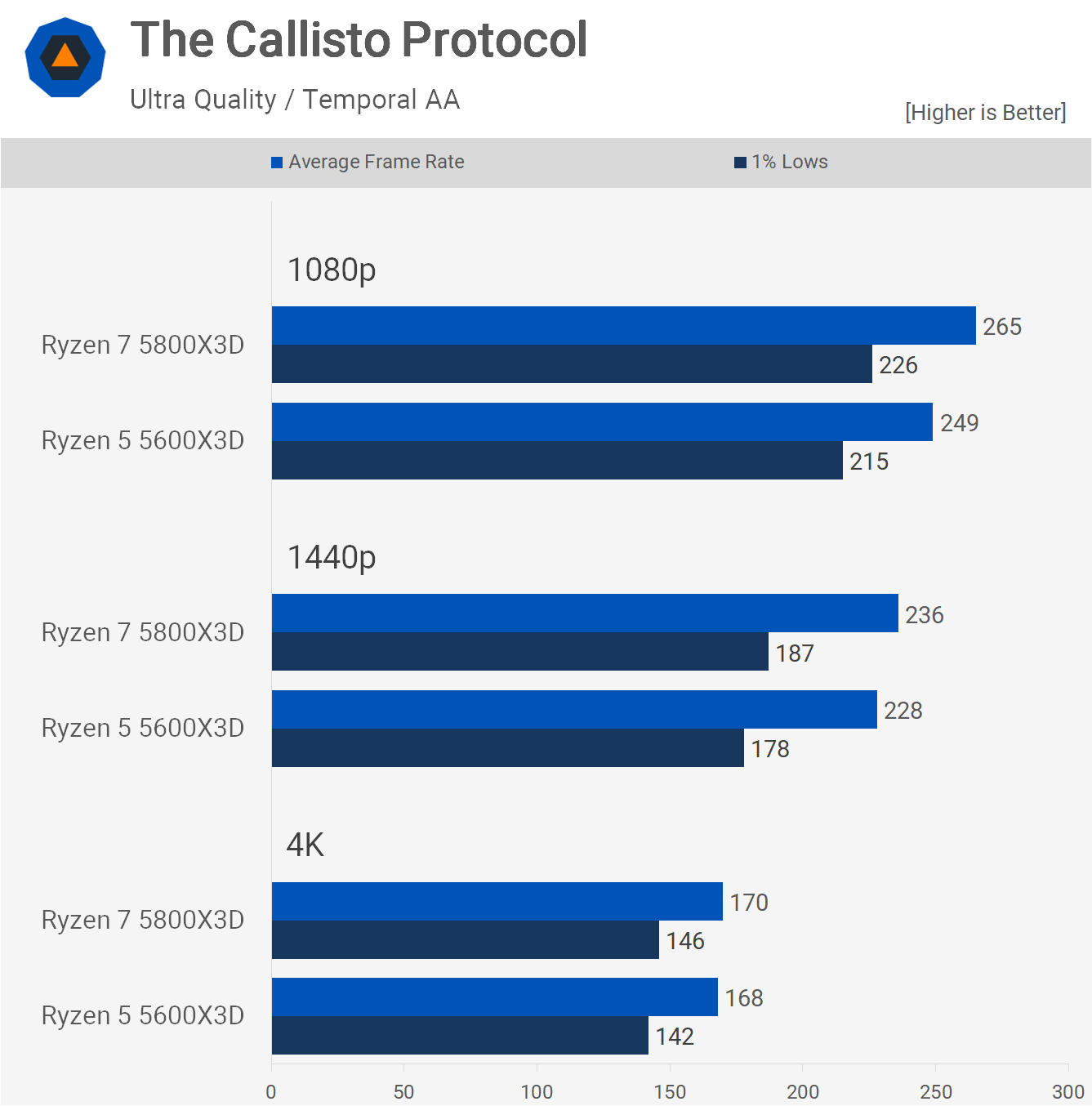

The Callisto Protocol also shows a small performance advantage for the 5800X3D over the 5600X3D, just 5-6% at 1080p and up to 5% at 1440p. At 4K we’re again GPU limited, so performance is much the same.

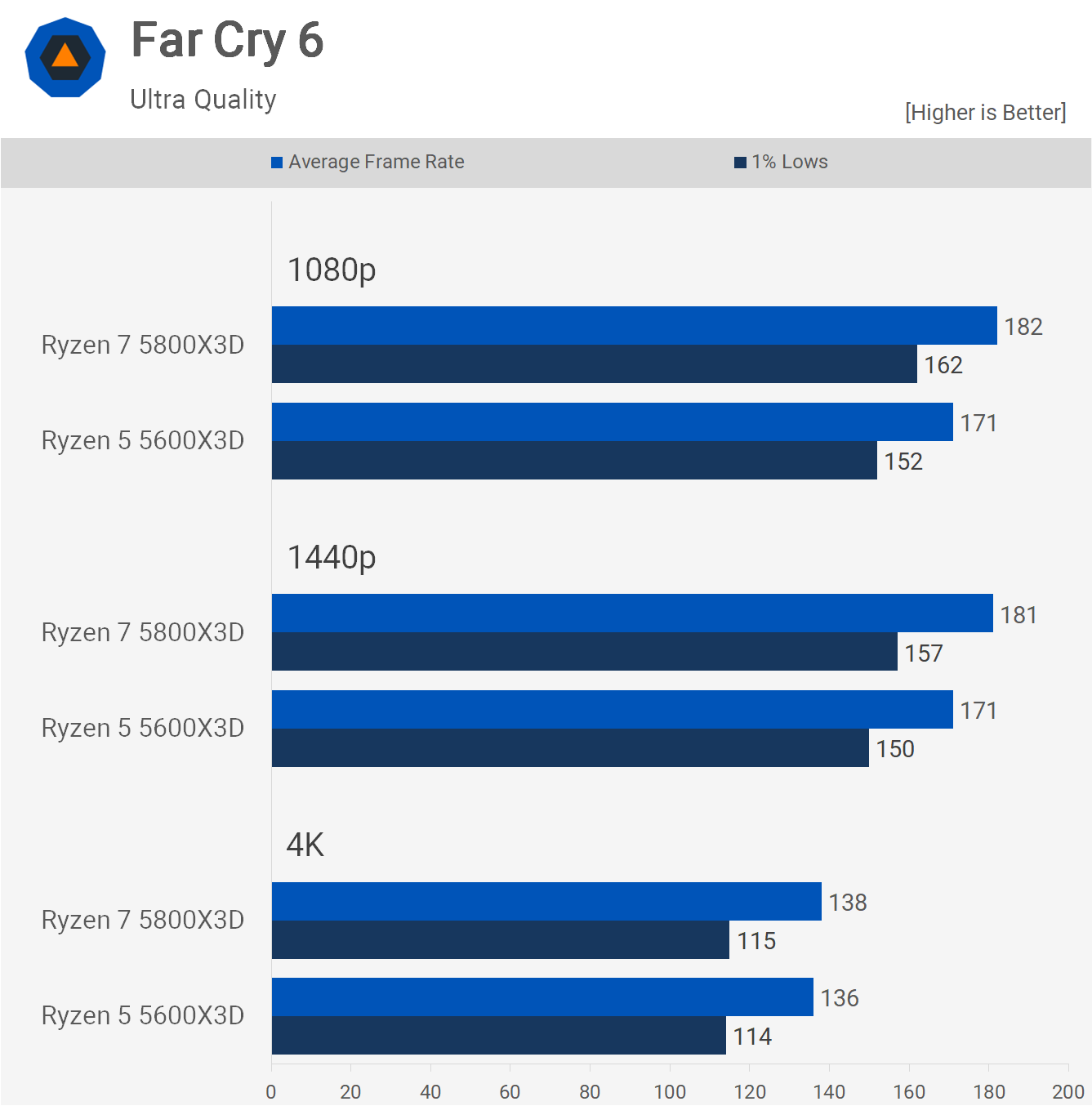

The Far Cry 6 results look very familiar. Here we’re again looking at very typical margins, with the 5800X3D winning by 6-7% at 1080p, similar margins at 1440p, and, you guessed it, heavily GPU limited results at 4K.

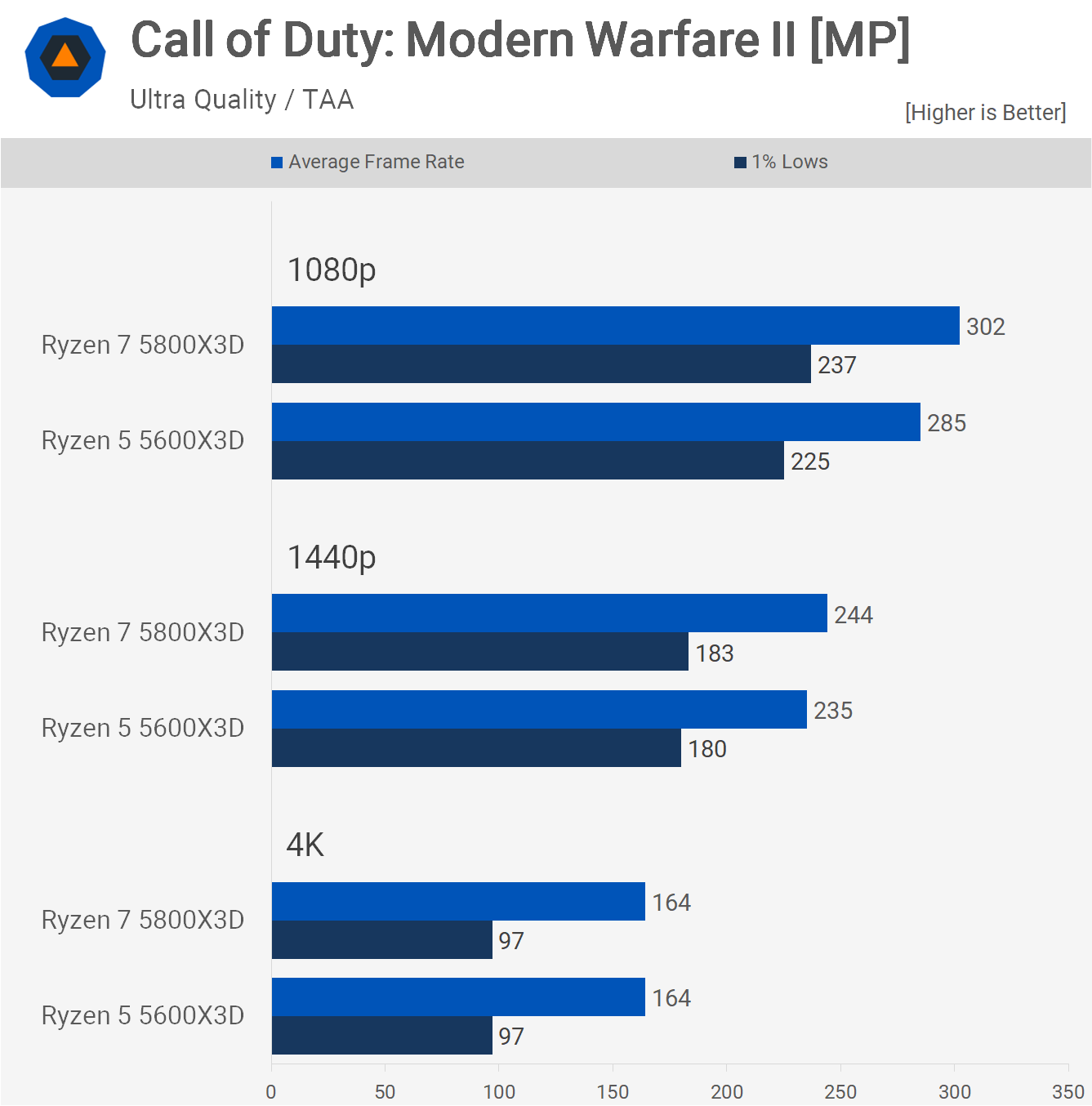

In Call of Duty: Modern Warfare II, the 5800X3D delivered up to 6% more performance at 1080p, just 4% more at 1440p, and nothing extra at 4K. So the 5800X3D and the new 5600X3D perform very similarly here.

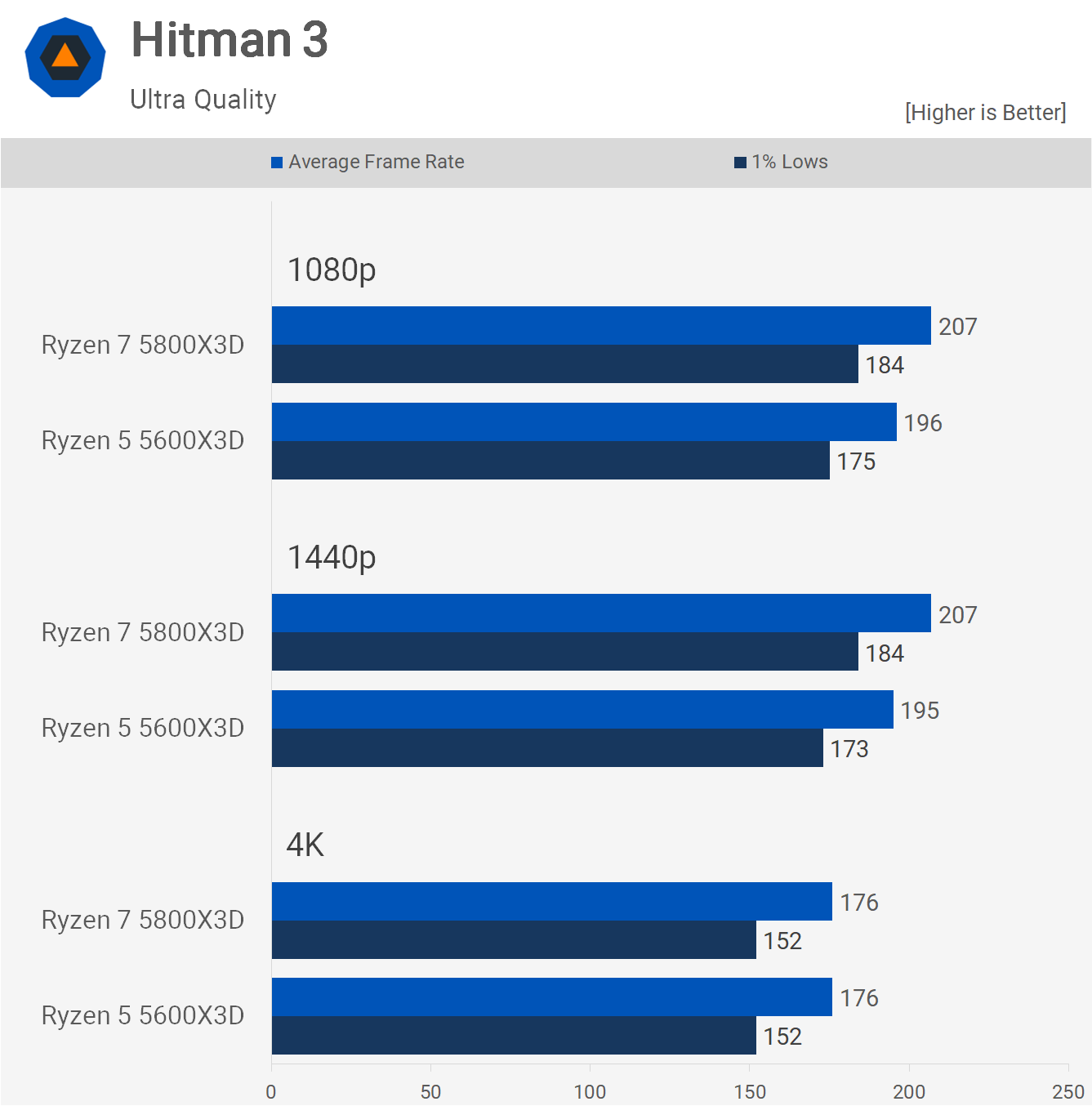

Hitman 3 offers more of the same: 6% more performance at 1080p and 1440p, with nothing to see at 4K.

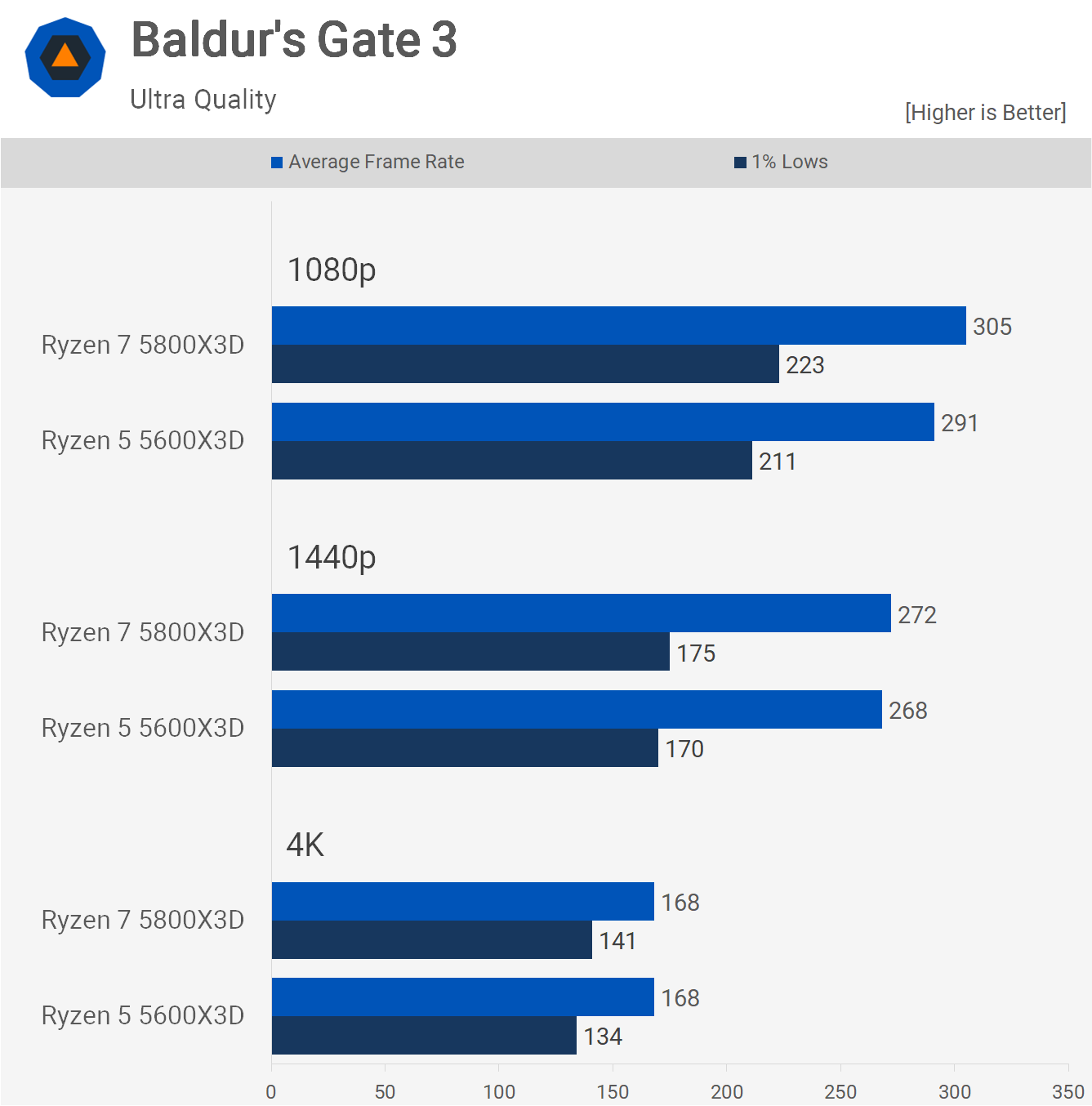

Baldur’s Gate 3 did not particularly challenge either of the 3D V-Cache CPUs, with both yielding around 300 fps at 1080p. Here, the 8-core model was just 5% faster.

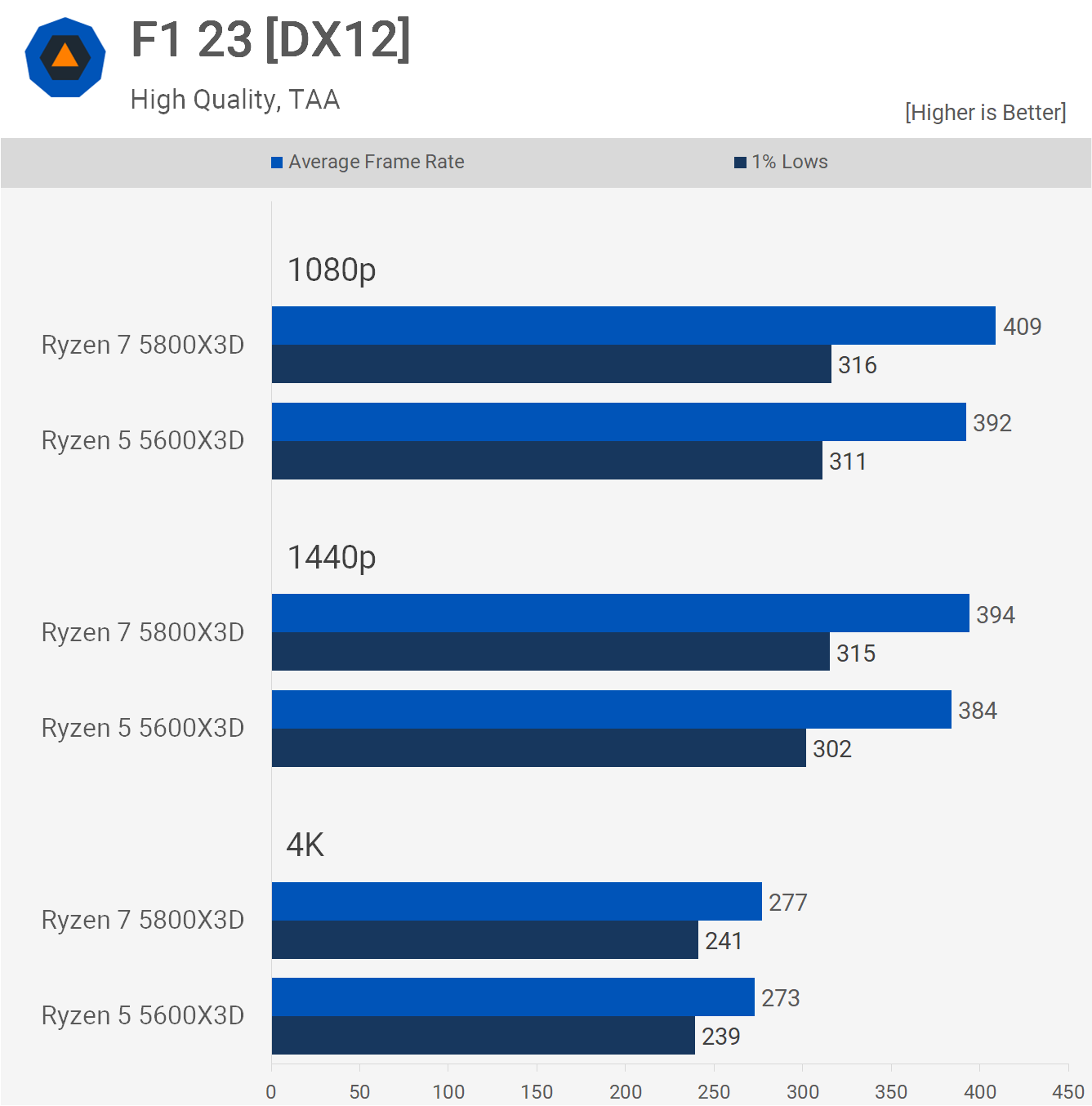

F1 23 presented similar results. Although we have more than half a dozen games left to discuss, the margins are mostly less than 5%. Rather than going through each remaining game individually, let’s shift our focus to the breakdown graphs.

Performance Summary

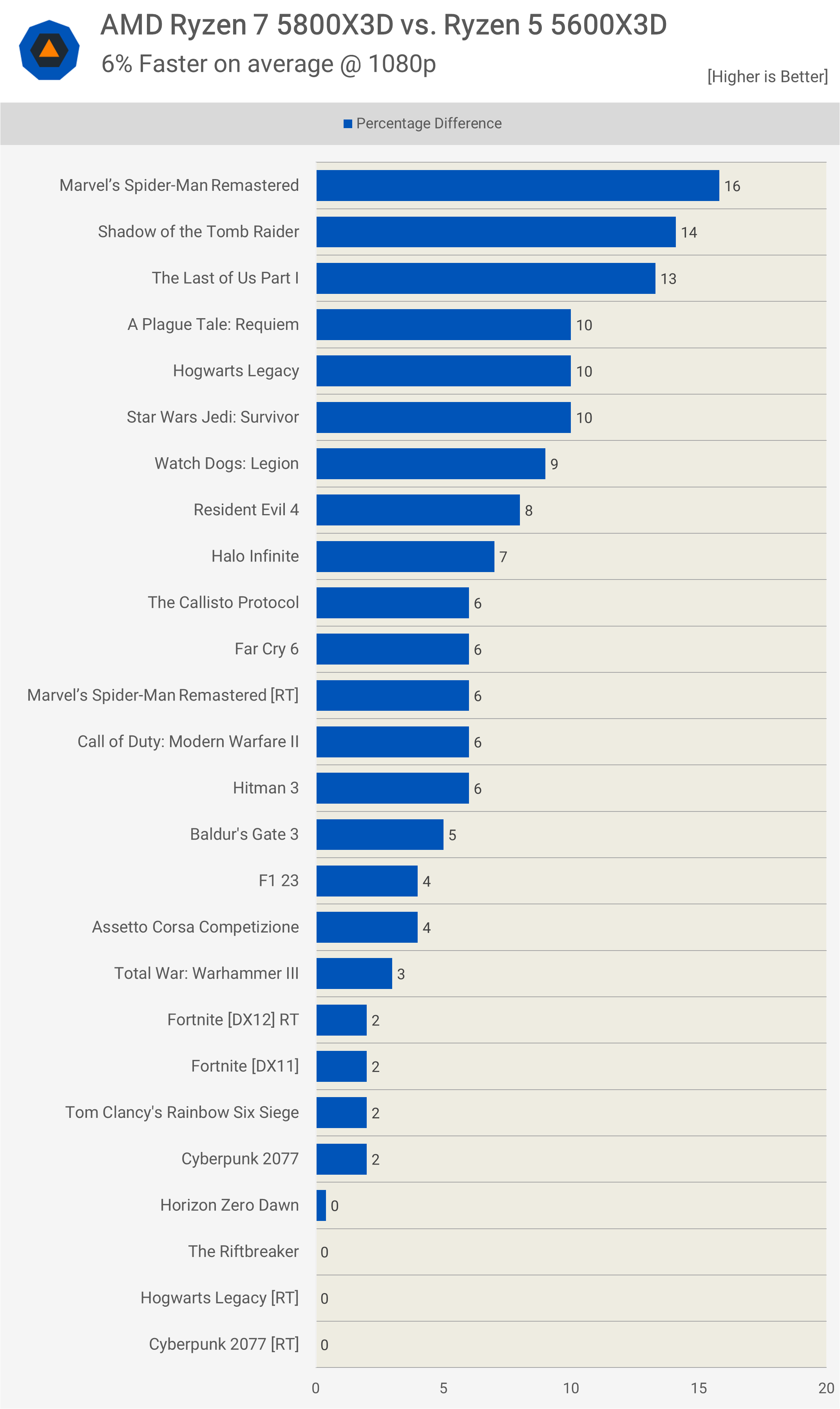

On average, across the 26 configurations tested at 1080p, the 5800X3D was 6% faster than the 5600X3D. However, when we exclude the evidently GPU limited results and any margin of 5% or less, the 5800X3D’s advantage increases to 9%. While this isn’t a substantial difference, considering that the 5800X3D is only 13% more expensive and has 33% more cores, it seems worthwhile to opt for the 5800X3D.
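The filtering step described above can be sketched in a few lines of Python. This is a hypothetical illustration rather than our actual tooling: the function name `average_margin`, the use of a simple arithmetic mean of per-game percentage margins, and the sample figures are all assumptions made for the example, not data from this review.

```python
from statistics import mean

def average_margin(results, exclude_gpu_limited=False, min_margin=None):
    """Average the 5800X3D's percentage lead over the 5600X3D.

    Hypothetical helper. `results` is a list of (margin_pct, gpu_limited)
    tuples, one per game/configuration. Assumes a plain arithmetic mean.
    Raises statistics.StatisticsError if every result is filtered out.
    """
    kept = [
        margin
        for margin, gpu_limited in results
        # Optionally drop configurations flagged as GPU limited.
        if not (exclude_gpu_limited and gpu_limited)
        # Optionally drop margins at or below the threshold (e.g. 5%).
        and not (min_margin is not None and margin <= min_margin)
    ]
    return mean(kept)

# Made-up sample data, NOT the review's results.
sample = [(16, False), (14, False), (10, False), (5, False), (1, True), (0, True)]

print(f"All configs:      {average_margin(sample):.1f}%")  # 7.7%
print(f"Filtered configs: {average_margin(sample, exclude_gpu_limited=True, min_margin=5):.1f}%")  # 13.3%
```

With these invented inputs, dropping the GPU limited and near-zero results raises the average lead, the same direction of change as the 6% to 9% shift reported above.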

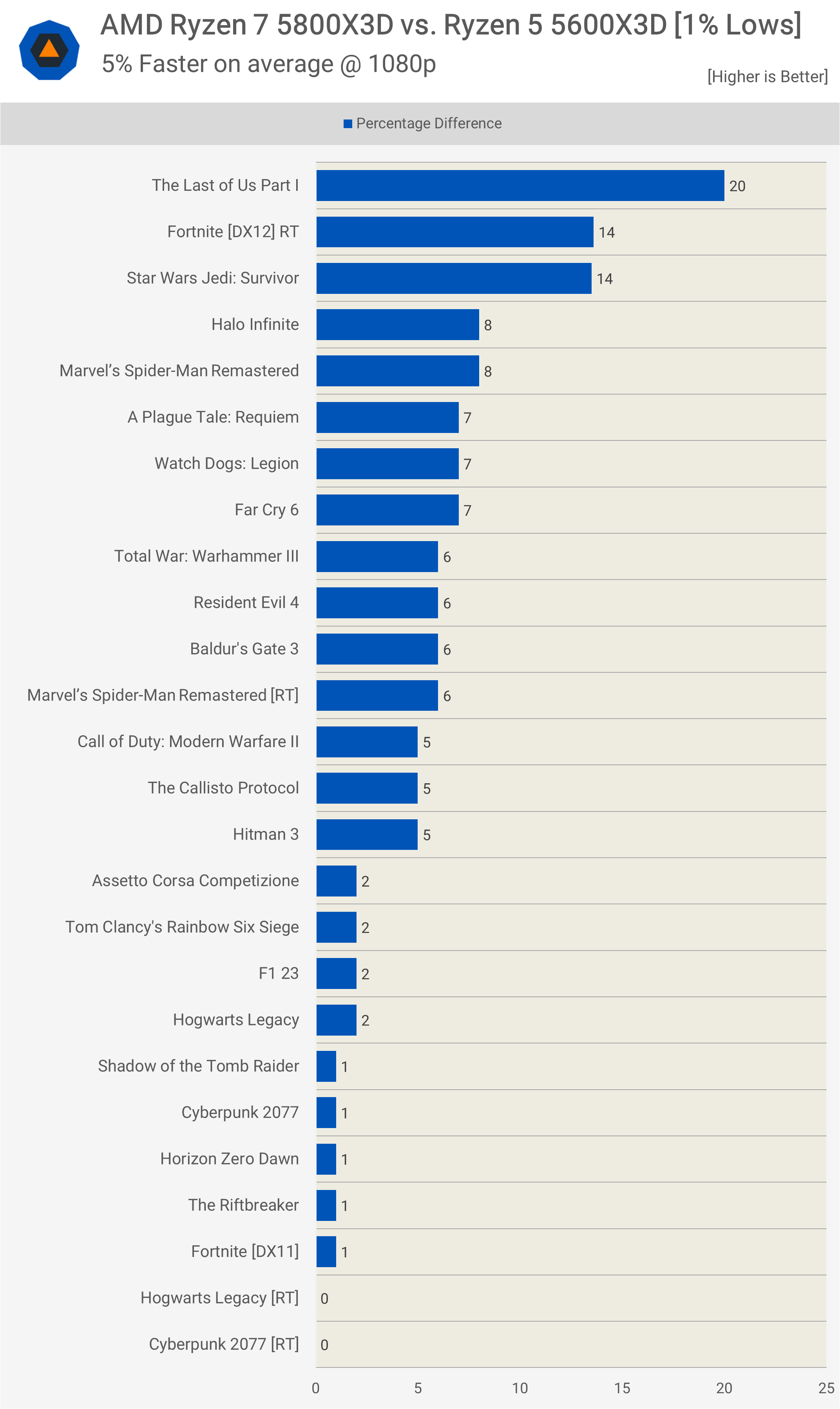

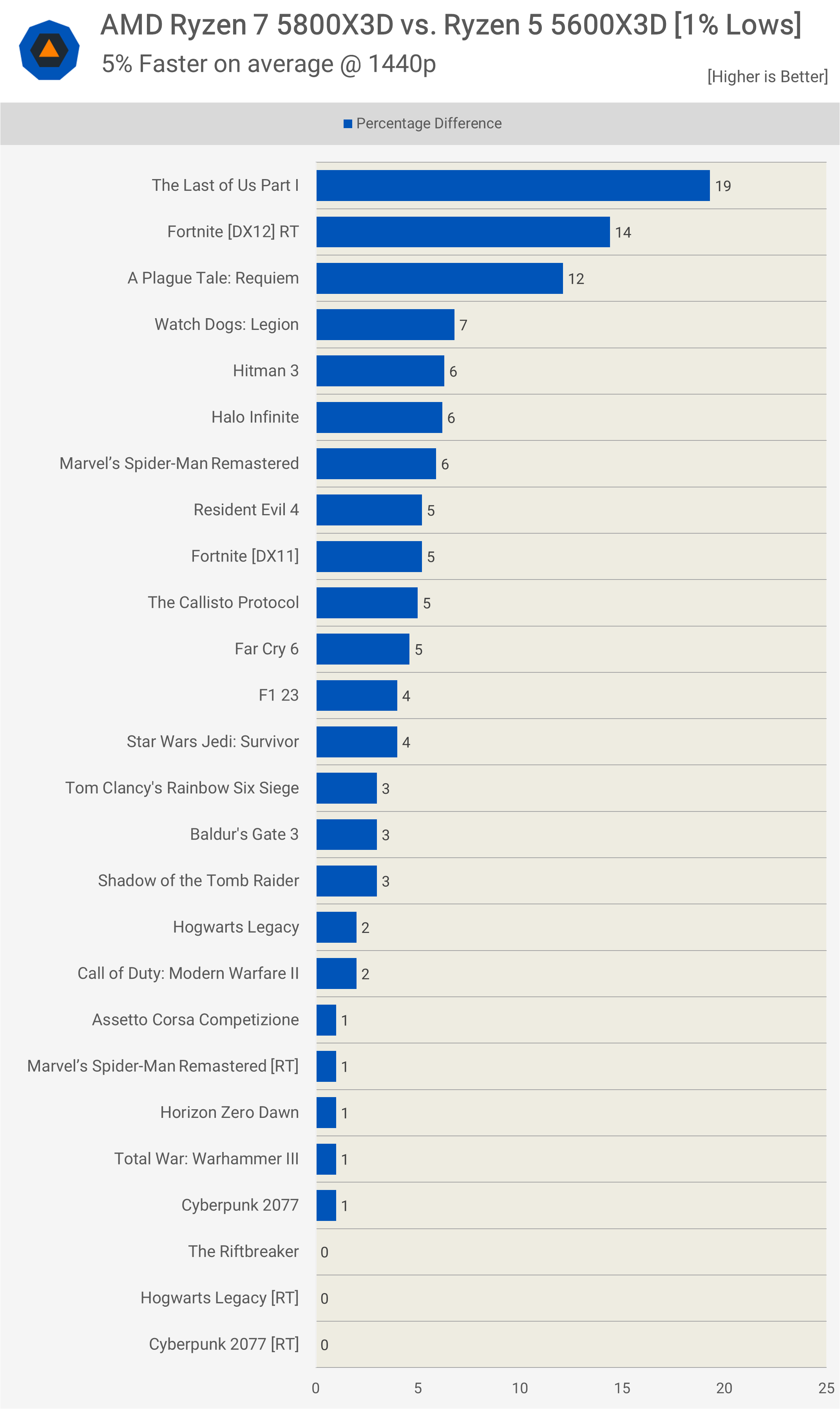

The margin narrows slightly when focusing on the 1% lows, with just three titles displaying double-digit margins.

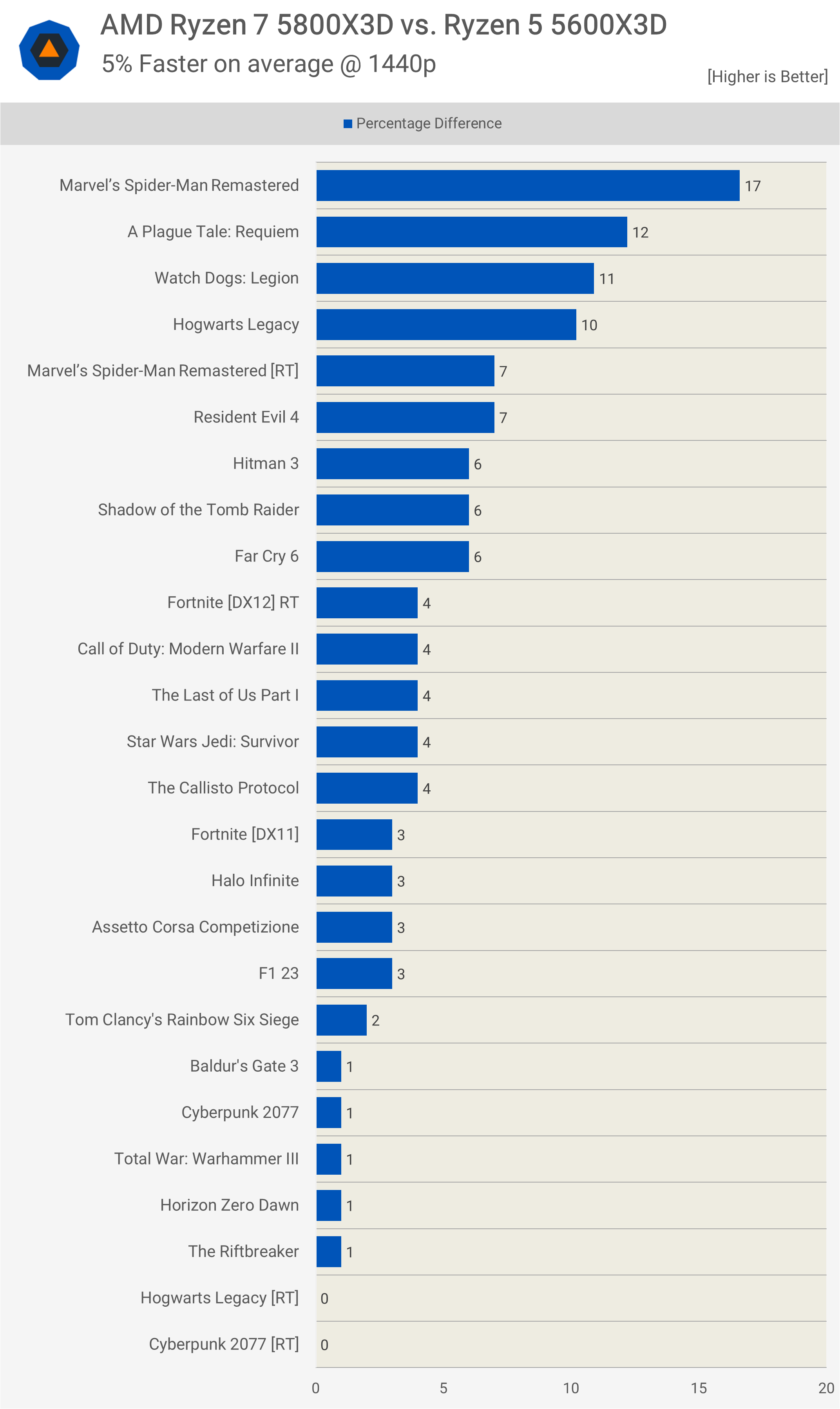

At 1440p, the 5800X3D outperformed the 5600X3D by a mere 5% on average. As anticipated, the margins generally diminished at this higher resolution, given that performance became more GPU constrained.

Regarding 1% lows at 1440p, there was only a 4% margin in favor of the 5800X3D. However, the 5800X3D was notably faster in Fortnite with ray tracing and in The Last of Us Part 1.

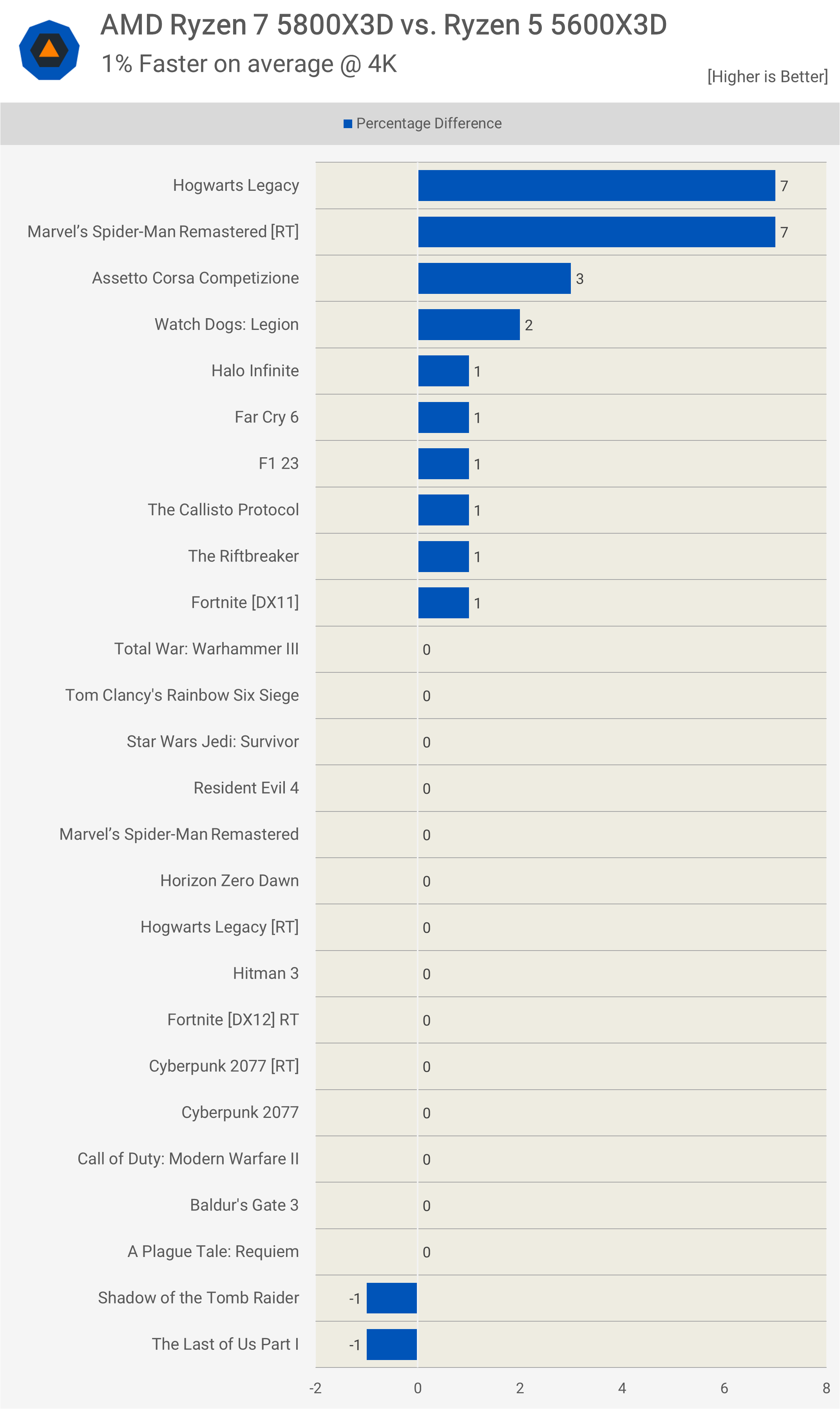

At 4K, the 5800X3D had a slender 1% lead on average, largely because nearly every test was entirely GPU limited. While the 4K data might seem redundant, a significant number of readers still request these results in CPU reviews, so we’ve included them to accommodate those requests.

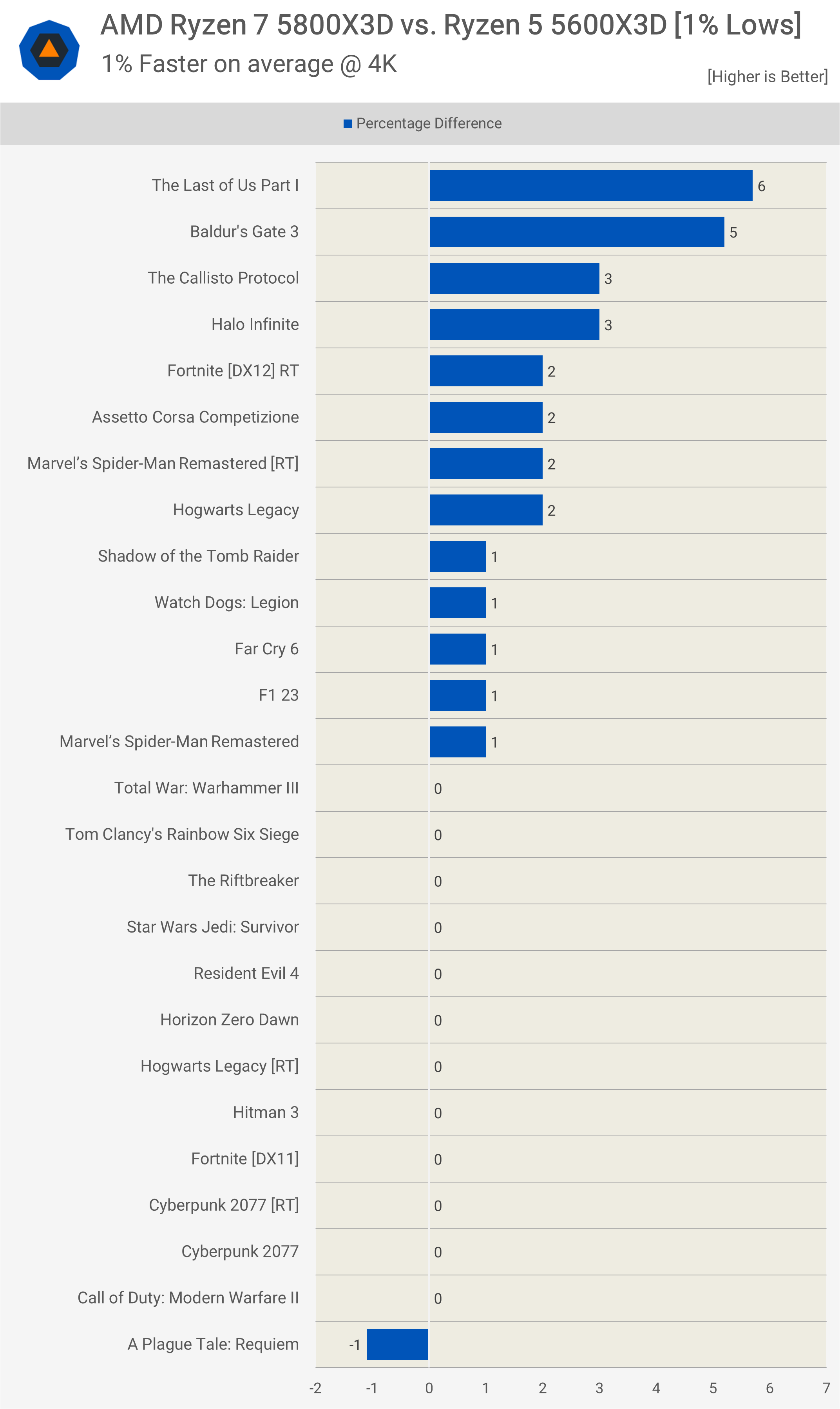

The story is the same for the 1% lows at 4K. Essentially, we’re measuring GPU performance and can’t see which CPU is actually faster. This underscores the limited utility of 4K CPU benchmarks: concluding from this data that the 5800X3D isn’t worth the additional $30, and that you should simply buy the 5600X3D, would be rather misguided.

Best Value Remains with the 5800X3D

So there you have it. The 5600X3D is a cool concept, but at its current price point, it’s hard to justify. Had it been released alongside the 5800X3D, it might have been priced at $300. For context, the 5800X3D launched at the 5800X’s original $450 MSRP, so a 5600X3D would likely have matched the original 5600X’s $300 MSRP. At that price it would have been 33% cheaper than the 5800X3D, making it a compelling choice for budget gamers.

However, it made no sense for AMD to make such a product. Doing so would mean selling X3D CPUs at $300 that could have fetched $450. The only viable path for the 5600X3D’s existence was what ultimately happened: AMD stockpiled defective dies until they had sufficient silicon to roll out a limited batch of 6-core models.

The problem now is that since March, the 5800X3D’s price has dropped to $295, and even lower at some online stores. As of writing, Newegg is offering the 5800X3D for $330, roughly 43% more than the 5600X3D at Micro Center, while B&H and Amazon have it as low as $291. And that’s ignoring the fact that Micro Center also sells the 5800X3D for $260 (that sale seems to be over now, but it was live until a few days ago).
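To make these price gaps easier to compare, here’s a small Python sketch that computes how much more each quoted 5800X3D price is than the 5600X3D’s $230 at Micro Center. The prices are the ones mentioned in this article and will change over time; the helper name `premium_pct` is our own, purely for illustration.

```python
# Prices quoted in this article (USD). The Micro Center 5800X3D sale has since ended.
PRICE_5600X3D = 230  # Micro Center exclusive

prices_5800x3d = {
    "Newegg": 330,
    "B&H / Amazon": 291,
    "Micro Center (sale)": 260,
}

def premium_pct(price: float, base: float) -> float:
    """Return how many percent `price` is above `base`."""
    return (price / base - 1) * 100

for store, price in prices_5800x3d.items():
    print(f"{store:<20} ${price}  +{premium_pct(price, PRICE_5600X3D):.1f}%")
```

This works out to about +43.5% at Newegg, +26.5% at B&H and Amazon, and +13.0% for Micro Center’s own 5800X3D deal.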

If you disregard Micro Center’s own deal on the 5800X3D, the 5600X3D might appear to be an attractive option. However, since the 5600X3D is exclusive to that retailer, the logical choice is the 5800X3D, which renders the 5600X3D moot.

In conclusion, this was a fun exercise that showed how a 6-core Zen 3 3D V-Cache CPU compares to the 5800X3D for gaming, and it turns out six cores are still fine. Who would have thought?

It might be interesting to see how the 5600X3D compares to the 5600X, but if it’s anything like the 5800X3D vs. 5800X, which it will be, you can expect about a 15% uplift across a massive range of games, with some titles showing gains of upwards of 40% and others showing no improvement.

For those already on the AM4 platform seeking a cost-effective gaming CPU upgrade, we recommend the competitively-priced 5800X3D. Everyone else might find better value in AMD’s newer AM5 or Intel’s LGA1700 platforms.