Today’s PC gamers can consider themselves lucky when it comes to choosing a graphics card (relatively, of course). While there are only three GPU manufacturers, every model they make is pretty much guaranteed to run any game you like, albeit at varying speeds. Thirty years ago, it was a very different picture, with over ten GPU companies, each offering its own way of rendering graphics. Many were highly successful, others rather less so.

But none were quite like 3D gaming industry pioneer Rendition: a great first product, followed by two less-than-average ones, before fading into total obscurity, all within five years. Now that’s a story worth telling!

Did you know? (Editor’s note)

TechSpot started life in 1998 as a personal tech blog called “Pure Rendition” to report news on the Rendition Verite chip, one of the first consumer 3D graphics processing units. Shortly after, the site was renamed to “3D Spotlight” to expand coverage to the nascent 3D industry represented at the time by the likes of 3dfx and later Nvidia and ATI.

In 2001, the site was relaunched pointing to the new domain TechSpot.com. The name was acquired after the 90s dot-com bubble for a handsome $200 as the original owners had no good use for it. From there, the site expanded to cover the entire tech industry. By that point, TechSpot was receiving around a million visitors each month. These days, TechSpot is home to over 8 million monthly readers, catering to PC power users, gamers and IT professionals around the globe.

A messy start to the decade

PC gaming in the early 1990s was exciting and frustrating in equal measure — getting hardware to work properly required endless fiddling about with BIOS and driver settings, and even then, games at the time were notoriously picky about it all. But as the years rolled on, PCs became increasingly popular purchases, and millions of people around the globe proudly owned machines from Compaq, Dell, Gateway, and Packard Bell.

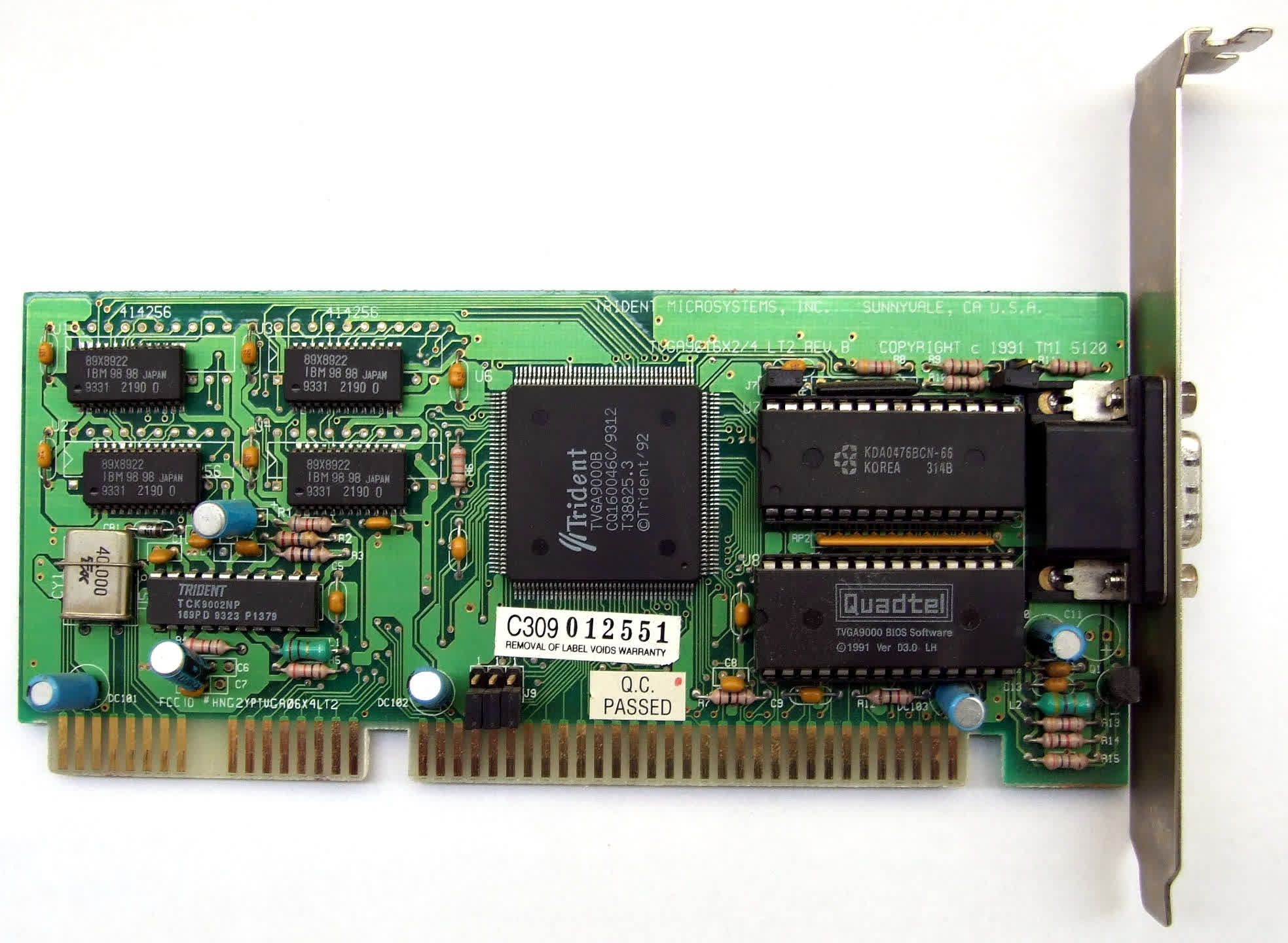

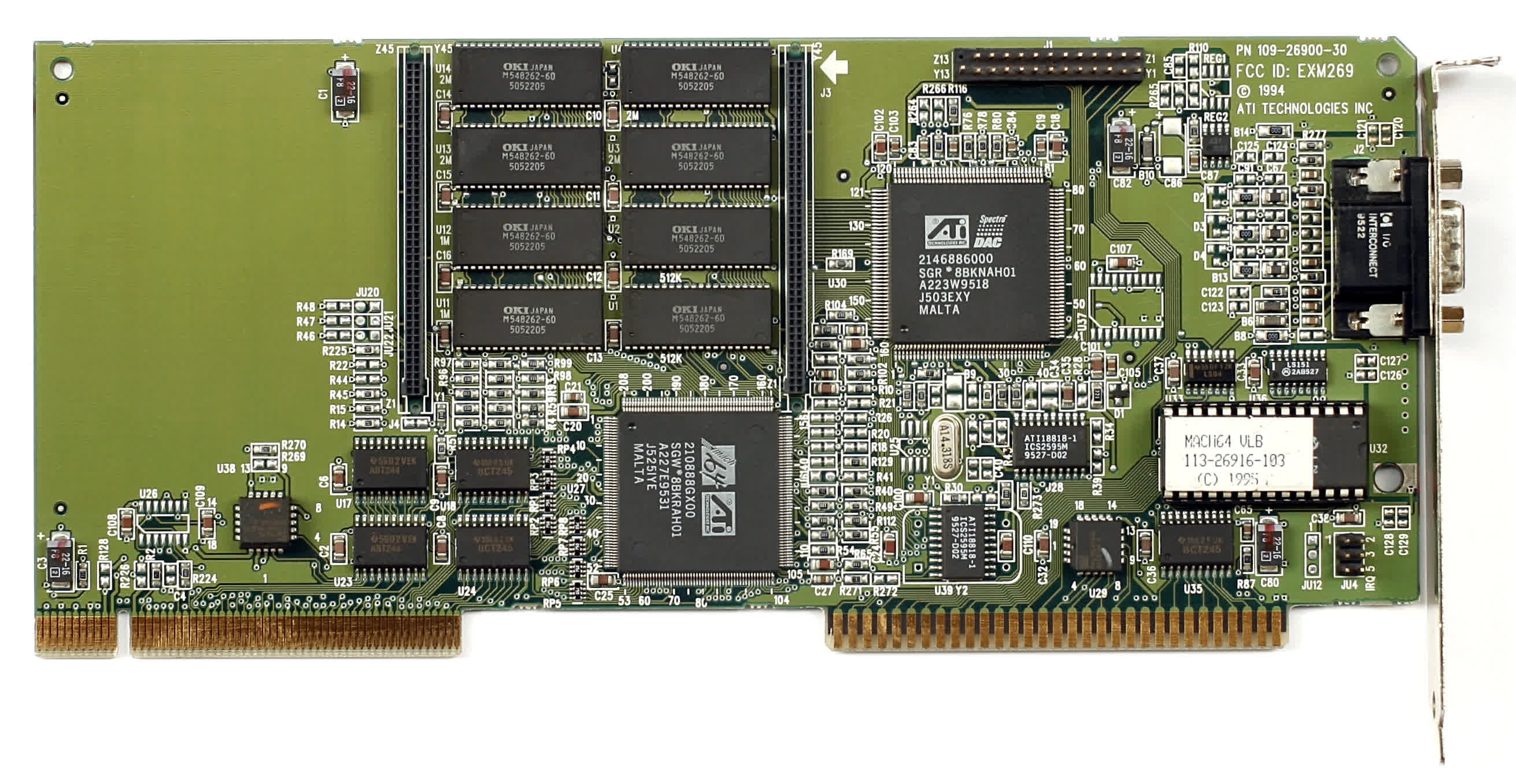

Not that these were aimed at gaming: $3,000 would get you a nice Intel 486DX2 machine, running at 66 MHz, and boasting 4 MB of RAM and a 240 MB hard drive. But the graphics card? If you were lucky, it might have sported an ATI Graphics Ultra Pro 2D accelerator, replete with 1 MB of VRAM and the Mach 32 graphics chip. But if lady luck wasn’t on your side, then you’d be cursed with something ultra cheap from SiS or Trident.

Compared to the visual output of the likes of Sega’s Mega Drive/Genesis or the Super Nintendo Entertainment System, the graphics from even the best PCs were basic but serviceable. However, there were no games on that platform that really pushed the limits of the hardware.

The best-selling PC titles throughout the formative years of the 90s were point-and-click adventure games, such as Myst, or other interactive titles. None of these games needed ultra-powerful graphics cards to run, just those that supported 8 or 16-bit colors.

Change was afoot, though. The direction that games were heading toward was obvious, as 3D graphics were becoming the norm in arcade machines, though they used specialized hardware to achieve this. PC games that did have 3D graphics, such as Doom, were doing all the rendering via the CPU — the system’s graphics card simply turned the frame into something that could be displayed on a monitor.

To emulate the graphics seen in Namco’s Ridge Racer, for example, the home PC would need hardware with similar capabilities but at a fraction of the retail price. Interest from the old group of graphics companies (ATI, Matrox, S3, etc.) was slow in forming, so there was plenty of scope for fresh blood to enter the field.

Numerous startups were founded to design and make new graphics adapters that would be used instead of the CPU for 3D rendering. Enter stage left, Rendition Inc, co-founded by Jay Eisenlohr and Mike Boich, in 1993.

Their ambitions were simple yet big — create a chipset for an add-in card that provided acceleration for all 2D and 3D graphical duties, and then sell to gaming and professional industries. However, they weren’t alone in this endeavor and faced stiff competition from 4 other new companies, as well as those that had been around for many years already.

First blood is drawn

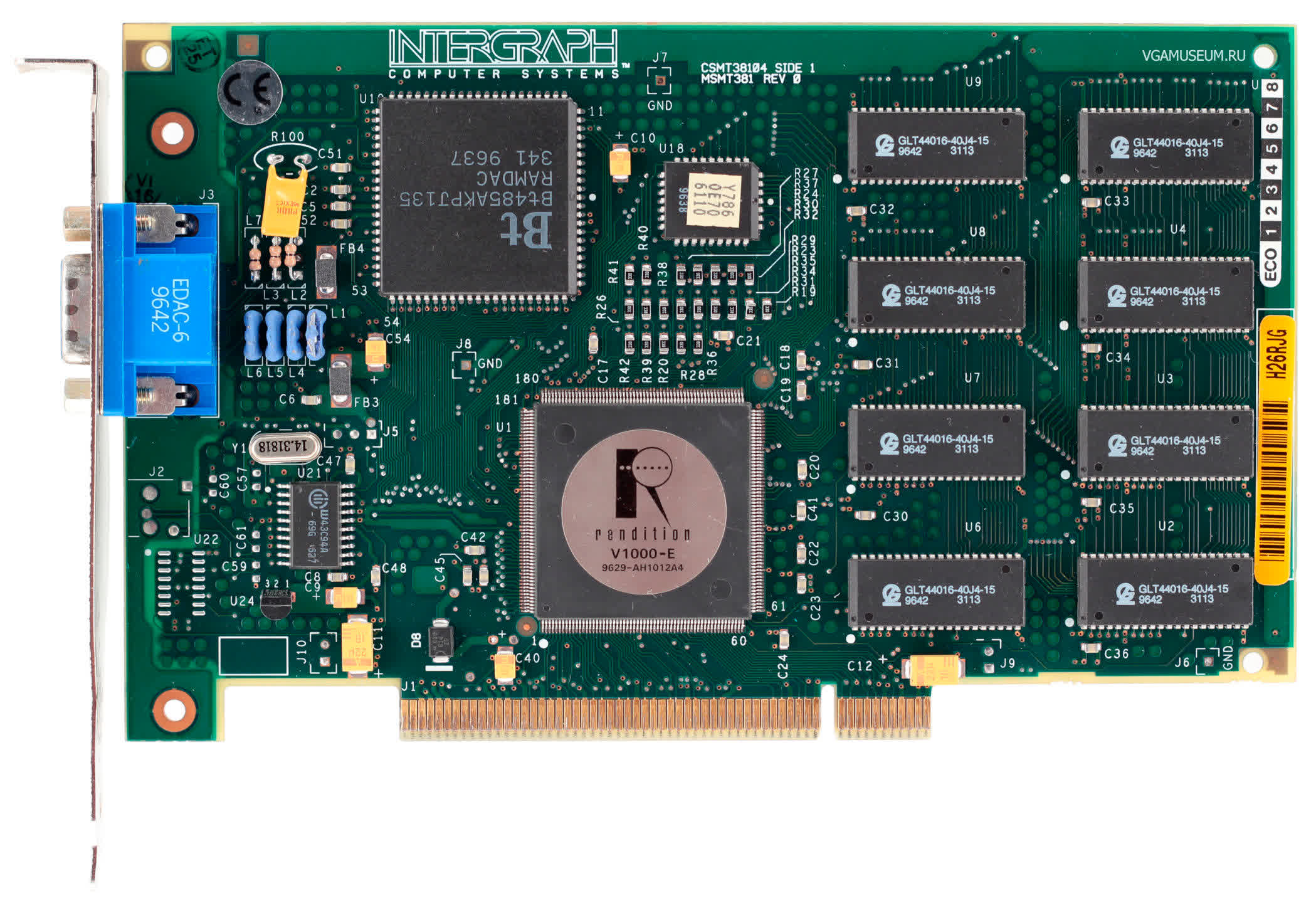

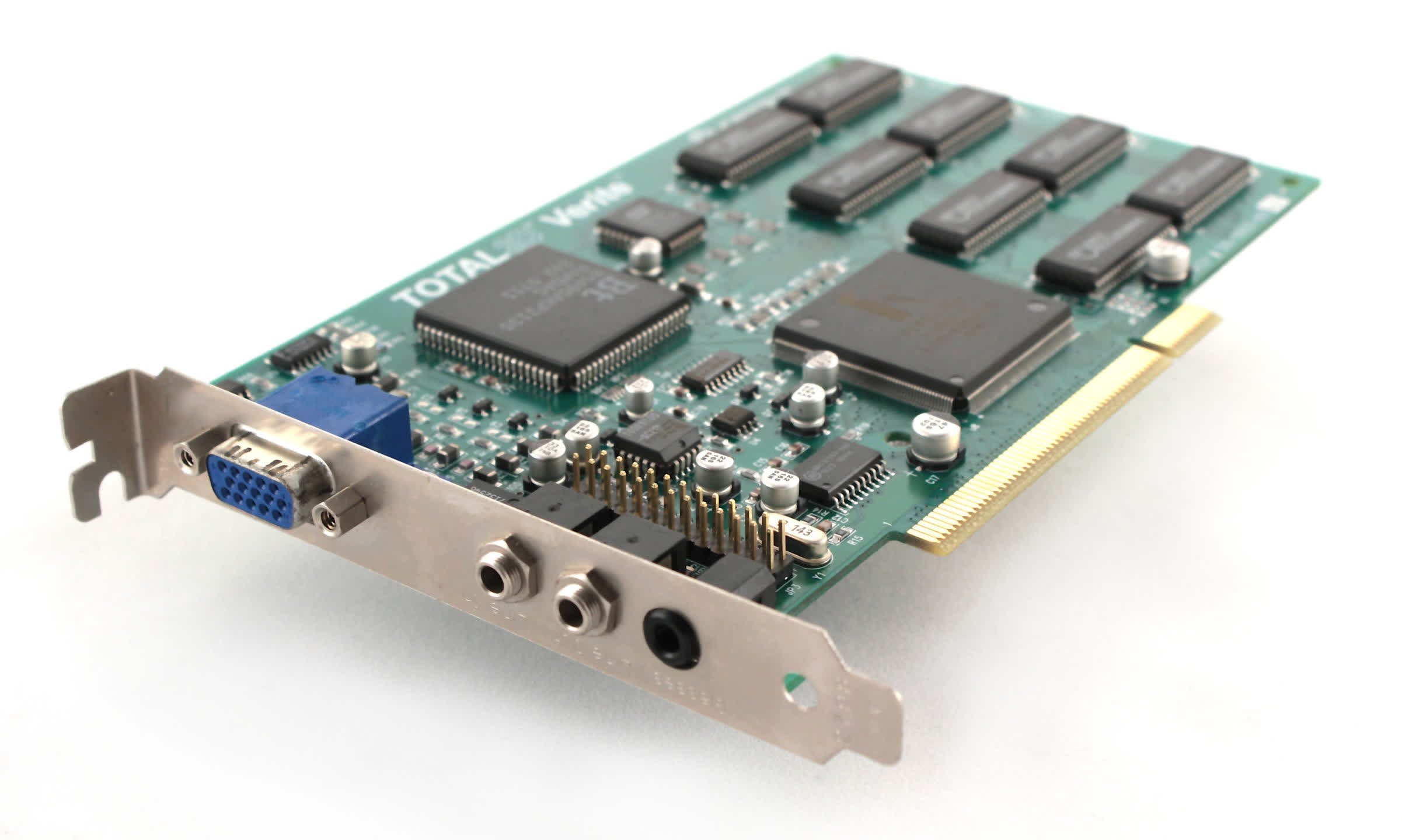

In 1995, Rendition announced its first product, the Vérité V1000-E, but since it was a fabless company, it relied on selling licenses to manufacture and use the design. Four OEM vendors took up the gauntlet and one year later, Canopus, Creative Labs, Intergraph, and Sierra all launched cards using the new chipset.

Today, there’s relatively little difference between the various architectures to be found in graphics cards, but the first iterations of 3D accelerators were remarkably disparate. Rendition took an unusual approach with the V1000, as the central chip was essentially a RISC CPU, similar to MIPS, acting as a front end to the pixel pipeline.

Running at 25 MHz, the chip could do a single INT32 multiplication in one clock cycle, but standard rendering tricks such as texture filtering or depth testing all took multiple cycles to carry out.

Theoretically, the V1000 could output 25 Mpixels per second (known as the fill rate of a graphics card), but only in very specific circumstances. For standard 3D games, the chip took at least two clock cycles to output a single, textured pixel, halving the fill rate.
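As a rough sketch of that arithmetic, a theoretical fill rate is simply the clock speed divided by the number of clock cycles spent on each pixel. The function name and parameters below are illustrative only and aren't taken from any Rendition documentation; the 25 MHz and two-cycle figures come from the text above.

```python
def fill_rate_mpixels(clock_mhz: float, cycles_per_pixel: float) -> float:
    """Theoretical fill rate in Mpixels/s: clock speed (MHz) / cycles per pixel."""
    if cycles_per_pixel <= 0:
        raise ValueError("cycles_per_pixel must be positive")
    return clock_mhz / cycles_per_pixel


# V1000 at 25 MHz, using the figures quoted in the article
peak = fill_rate_mpixels(25, 1)      # best case: one pixel per clock cycle
textured = fill_rate_mpixels(25, 2)  # textured pixel: at least two cycles

print(f"Peak: {peak} Mpixels/s, textured: {textured} Mpixels/s")
```

Since "at least two clock cycles" is a lower bound on the cost per pixel, the 12.5 Mpixels per second figure is best read as an upper limit for textured output rather than a typical result.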

But Rendition had a few aces up its sleeve with its first Vérité model. It did all of the triangle setup routines in hardware, whereas every other graphics card required this to be done by the CPU. Since the PC’s central processor was needed for all of the vertex processing in a 3D game, this extra feature that the V1000 sported gave the CPU a little more breathing space.

All V1000 cards used the PCI bus to connect to the host computer, something that was still new in the industry, and they could take advantage of features such as bus mastering and direct memory access (DMA) for extra performance.

Rendition’s graphics card was also fully programmable (technically, it was the first ever consumer-grade GPGPU) and the engineers developed multiple hardware abstraction layers (HALs) for Windows and DOS, which would convert instructions from various APIs into code for the chipset. In theory, this meant that the Vérité graphics card had the widest software support of any in stores at that time.

Bringing in the big guns

The pièce de résistance came in the form of a rather famous game — Quake.

After the rampant successes of Doom and its sequel, id Software began work on a new title, one that would take place in a fully 3D world (rather than the pseudo-3D nature of Doom). Quake was released in June 1996 and six months later, id Software offered a port of the code, calling it VQuake.

Where the original game did all 3D rendering on the CPU, programmers John Carmack and Michael Abrash rewrote large parts of the code to take advantage of the Vérité’s capabilities. Processors at that time, such as Intel’s Pentium 166, could run Quake at around 30 fps, with a resolution of 320 x 200. The combination of VQuake and Rendition’s graphics card increased this to over 40 fps, but this also included proper bilinear filtering of textures and even anti-aliasing (via a system developed by Rendition and patented a few years later).

That might not sound like much of an improvement, but for the fast-paced nature of the game, every extra fps and graphical enhancement was welcome. The question to ask, though, is why wasn’t the performance better than this? Part of the answer lies in the V1000’s lack of hardware support for z-buffers, so programmers still had to rely on using the CPU to do any depth calculations, and even then do them as little as possible.

For a first attempt at a graphics accelerator, the Rendition Vérité V1000 was pretty good. It was the only offering on the market that did both 2D and 3D, and it had plenty of performance available as long as the game avoided its weaknesses. With DirectDraw or one of Rendition’s proprietary 3D HALs, for example, it was very fast.

The V1000 was also notoriously buggy (especially in motherboards that didn’t support DMA) and very slow when operating in legacy VGA modes, and its OpenGL support was very poor. It would be the latter by which the product would eventually be judged most.

Taking second place to the star performance

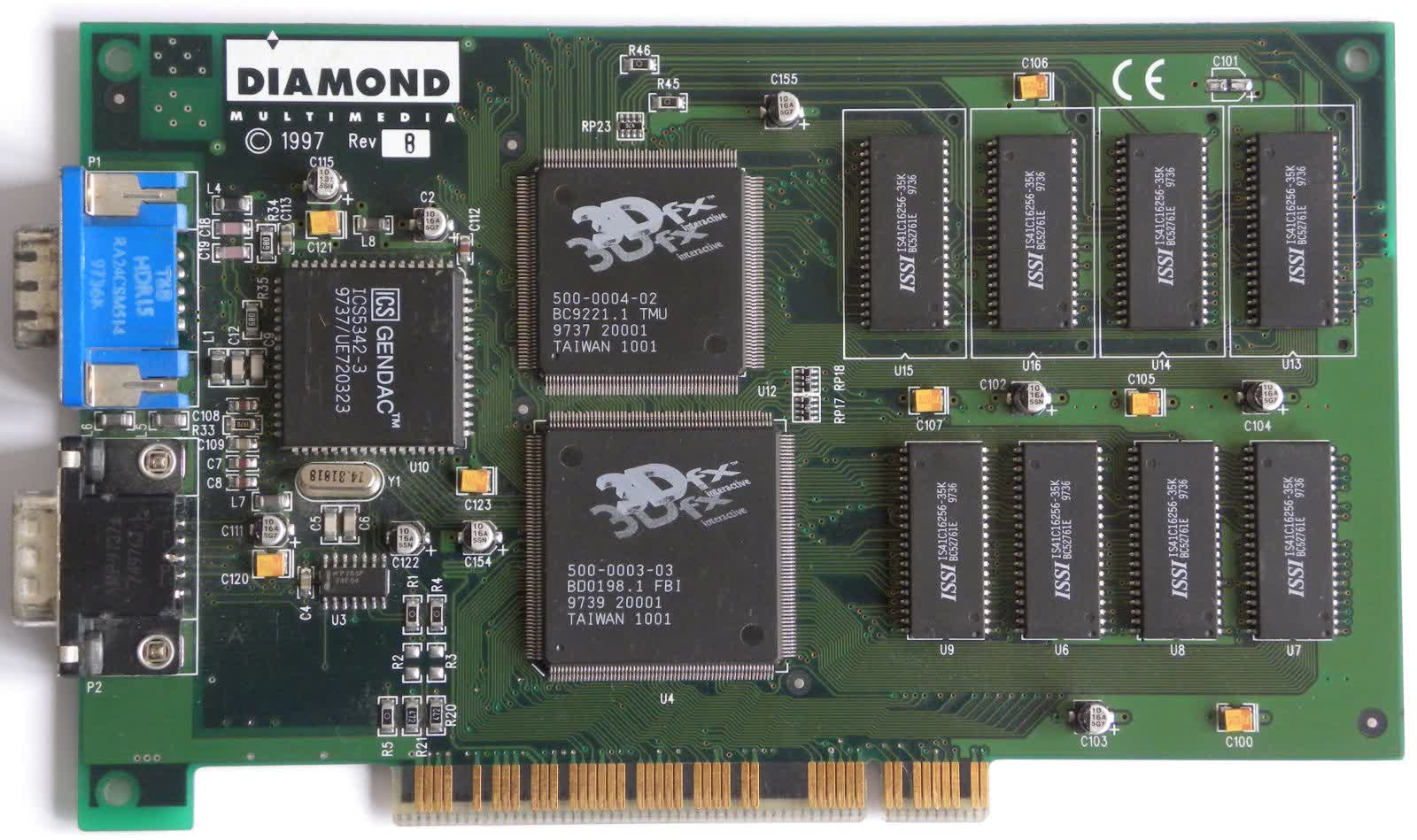

Another startup company that had ambitions in the world of 3D was 3Dfx Interactive, which formed a year after Rendition but released its first product, the Voodoo Graphics, well before the V1000. Initially sold to the professional industry, the 3D-only accelerator made its way into home PCs by 1996/1997, due to the significant drop in the price of DRAM.

Boasting a 50 MHz clock speed, this graphics chipset (codenamed SST1) could output a single pixel, with a bilinear filtered texture applied, once per clock cycle — considerably faster than anything else on the general market.

Like Rendition, 3Dfx developed its own software, called Glide, to program the accelerator but by the time it was being sported in a wealth of OEM models (from the same ones that used the Vérité V1000), another HAL was introduced — MiniGL. In essence, this was a highly cut-down version of OpenGL (an API normally used in the professional market) and it came about entirely because of another version of Quake that id Software released in early 1997.

GLQuake was created because Carmack didn’t enjoy having to work with proprietary software. This version utilized a standardized, open API to manage the rendering and, on cards that supported it, provided a much-needed boost to performance and the quality of the graphics. 3Dfx’s MiniGL driver converted OpenGL instructions into Glide ones, allowing its Voodoo Graphics chipset to fully support GLQuake.

With a 50 MHz clock speed and hardware support for z-buffers, cards using the Voodoo Graphics were notably faster than any using Rendition’s V1000. 3Dfx’s first model could only run at a maximum resolution of 640 x 480 and always in 16-bit color, but even the best of Vérité cards were half the speed of any Voodoo model.
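To put the clock speeds quoted so far side by side, here is a minimal sketch comparing theoretical textured fill rates. The `Chip` class and its field names are hypothetical, and the numbers are only the ones given in this article. Peak fill rate is just one factor, too: real-world frame rates also depend on the CPU, memory, and drivers, so the ratio below shouldn't be expected to match measured performance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chip:
    """Illustrative record of the per-chip figures quoted in the article."""
    name: str
    clock_mhz: float
    cycles_per_textured_pixel: float

    @property
    def textured_fill_rate(self) -> float:
        """Theoretical textured fill rate in Mpixels/s."""
        return self.clock_mhz / self.cycles_per_textured_pixel


voodoo = Chip("Voodoo Graphics (SST1)", 50, 1)
# "At least two" cycles per textured pixel, so this is the V1000's best case
v1000 = Chip("Vérité V1000", 25, 2)

for chip in (voodoo, v1000):
    print(f"{chip.name}: {chip.textured_fill_rate} Mpixels/s")

ratio = voodoo.textured_fill_rate / v1000.textured_fill_rate
print(f"Theoretical advantage: {ratio}x")
```

On paper, then, the Voodoo Graphics had four times the textured fill rate of the V1000, before counting the extra CPU work the Vérité needed for depth calculations.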

Attempts were made to fight back with the release of an updated version (known as the V1000L or L-P), that ran on a lower voltage, allowing it to be clocked to 30 MHz (as well as support faster RAM), but it was still a lot slower than 3Dfx’s offering. And the semiconductor industry in the 1990s was rather like sports in general: history tends to forget those who came second.

A faltering second act

Rendition began work on a successor to the V1000 almost immediately, with goals to expand on the first model’s strengths, while resolving as many of its failings as possible. With a launch target of summer 1997, the engineers reworked the chip designs — improving the clock speed and feature set of the RISC processor (up to 3 instructions per cycle), expanding the capabilities of the pixel engine (hardware support for z-buffers and single cycle texture filtering, for example), and changing the memory from the old, slow EDO DRAM to faster SGRAM.

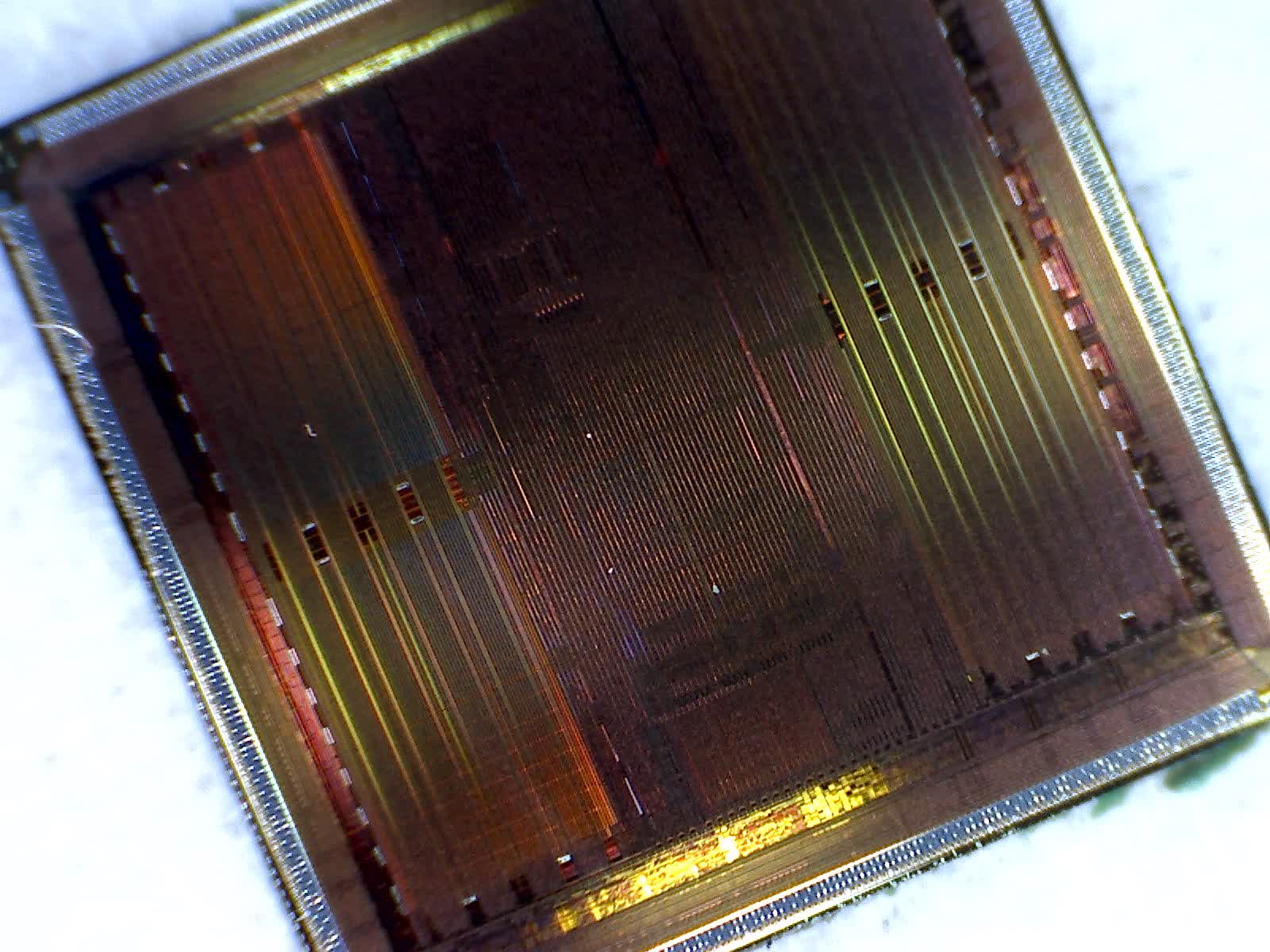

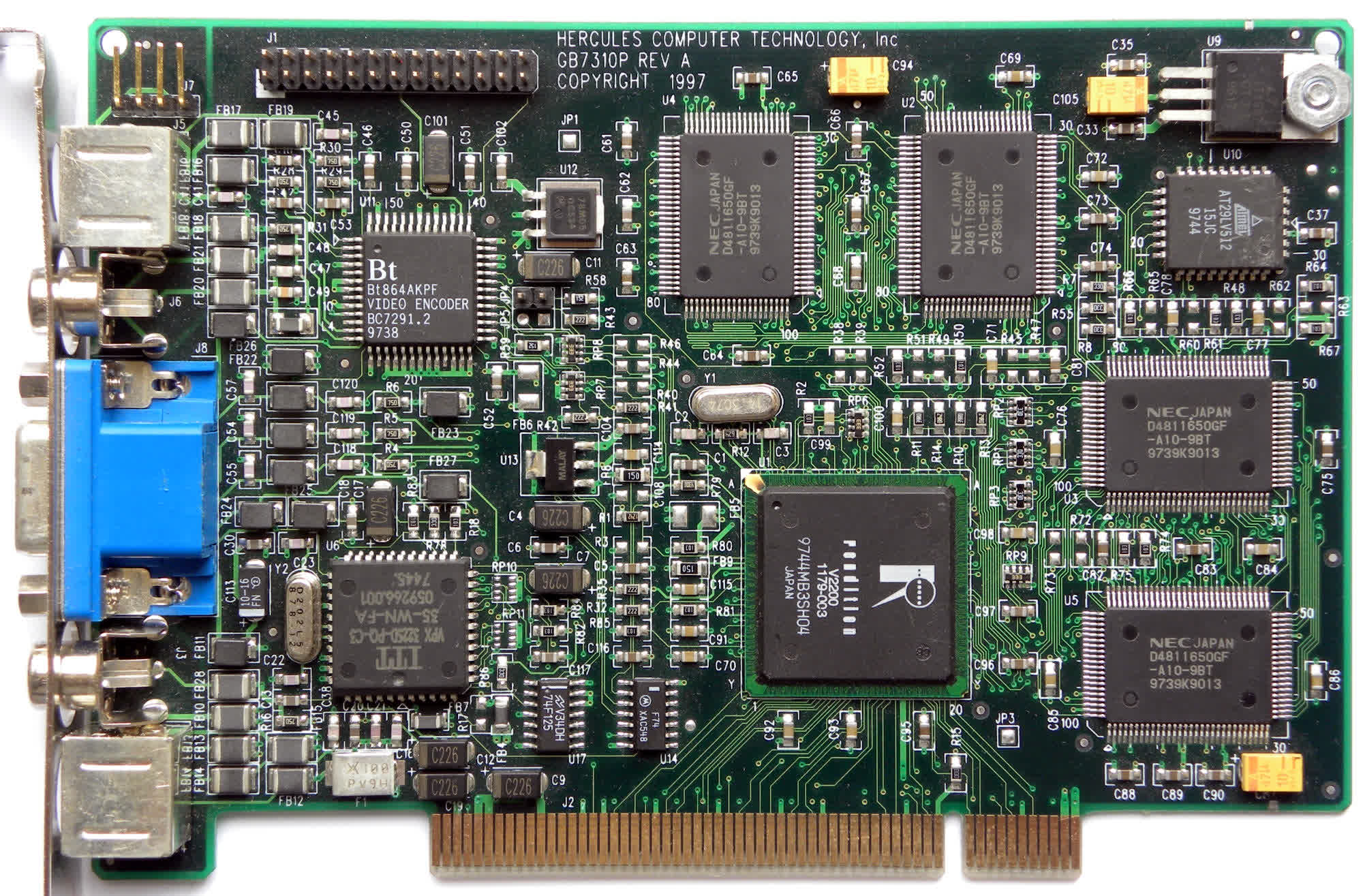

Chip binning was starting to become more noticeable in the graphics chip industry, enabling manufacturers to sell different versions of the same chip, across wider price ranges. Rendition took advantage of this by releasing two versions, in September 1997 — the V2100, clocked around 45 MHz, and the V2200, which ran 10 to 15 MHz faster. Other than the clock rates, there was no difference between the two chips, but most vendors just went with the faster chip.

The reason behind this was simple — they could charge a lot more for it. For example, Diamond Multimedia initially sold the V2100-powered Stealth II S220 at $99 (eventually reducing it to half the price when it sold badly), whereas the Hercules Thriller 3D, using a 63 MHz V2200, cost $129 for the 4 MB version and $240 for the 8 MB one.

As follow-ups to the V1000, the new chips were a clear, if somewhat understated, improvement. Performance was definitely better and the extra feature support meant better compatibility with the flurry of 3D games appearing on the market. However, all was not well at Rendition.

The development of the V2000 series had apparently been marred by numerous glitches, in part due to how the company designed its chips. Since it had no fabrication plants of its own, most of the testing of the processors was done via software. But what works well in simulations often doesn’t transfer properly once fleshed out in silicon, and Rendition missed its target launch by a number of months.

The competition wasn’t necessarily faring any better (for example, 3Dfx’s second offering, the Voodoo Rush, was actually slower than the original Voodoo Graphics), but the V2000 series just wasn’t a big enough step forward when compared to what ATI, Nvidia, and PowerVR were releasing.

While mip-mapping was finally introduced in the new hardware, it could only be applied per triangle, whereas everything else was able to do it per pixel. Rendition was also still pushing the use of its own software, perhaps at the cost of providing better support for OpenGL and Direct3D (although both APIs were now properly covered).

But there was enough promise in what the engineers were doing to garner interest and further investment from other companies.

Hopes and failed ambitions

In 1998, while Rendition was still developing its third iteration of the Vérité graphics chipset, the company (IP and staff) was sold to Micron Technology, an American DRAM manufacturing firm that was acquiring numerous businesses at that time. The V3000 series was expected to be more of the same, albeit with even higher clocks (IBM was scheduled to produce the chip) and a substantially better pixel engine.

However, despite having access to its own fabs and considerably more resources, the project didn’t progress anywhere near fast enough to be a viable product against those being released by 3Dfx, ATI, and Nvidia. Micron’s bosses pulled the plug and the V3000 was abandoned.

Instead, a new direction for the graphics chip was initiated and the V4000 project, scheduled for launch in 2000, was designed to include a host of new features, all in one chip. The most impressive of these was to be at least 4 MB of embedded DRAM (eDRAM). The graphics chip in Sony’s PlayStation 2 (launched March 2000) also boasted this aspect, so it wasn’t an overly ambitious decision, but Micron wasn’t really targeting consoles or discrete graphics cards.

To the management, the motherboard chipset market seemed to be more profitable, as 3D accelerators were only of interest to PC gamers. By making a single processor that could take over the role of the traditional (and separate) Northbridge and Southbridge chips, Micron was confident that they had the money and people to beat the likes of Intel, S3, and VIA at the same game.

Ultimately, it was not to be. The project was dropped over concerns that the single chip would have been far too large, at over 125 million transistors. In comparison, AMD’s Athlon 1200 CPU, from the same period, comprised just 37 million transistors. Micron would go on to try and develop a chipset for the Athlon processor, which still had some eDRAM acting as an L3 cache, but it didn’t get very far, and the company soon dropped out of the chipset market altogether.

An ignominious end

As for Rendition and its graphics processors, it was all over. Micron never did anything with the IP, but used the name for a budget range of its Crucial memory lineup for a few years, before swapping it for something new.

Cards with Vérité chips were only on shelves for a handful of years, though for a brief moment if you wanted the best performance and graphics in Quake, it was the name to have inside your PC. Time pruned away almost all of the other graphics firms and the market is now dominated by Nvidia, who purchased 3Dfx over 20 years ago. ATI was bought by AMD in 2006, Imagination Technologies stopped making PowerVR cards a few years earlier, and Matrox abandoned the gaming sector to serve a niche professional market.

Today, Rendition remains as a tiny footnote in the history of the graphics processor — a reminder of the days when 3D graphics was the next big thing and chip designers all found radically different ways to do them. It’s been long gone, but at least it’s not forgotten.

TechSpot’s Gone But Not Forgotten Series

The story of key hardware and electronics companies that at one point were leaders and pioneers in the tech industry, but are now defunct. We cover the most prominent part of their history, innovations, successes and controversies.

Masthead credit: vgamuseum